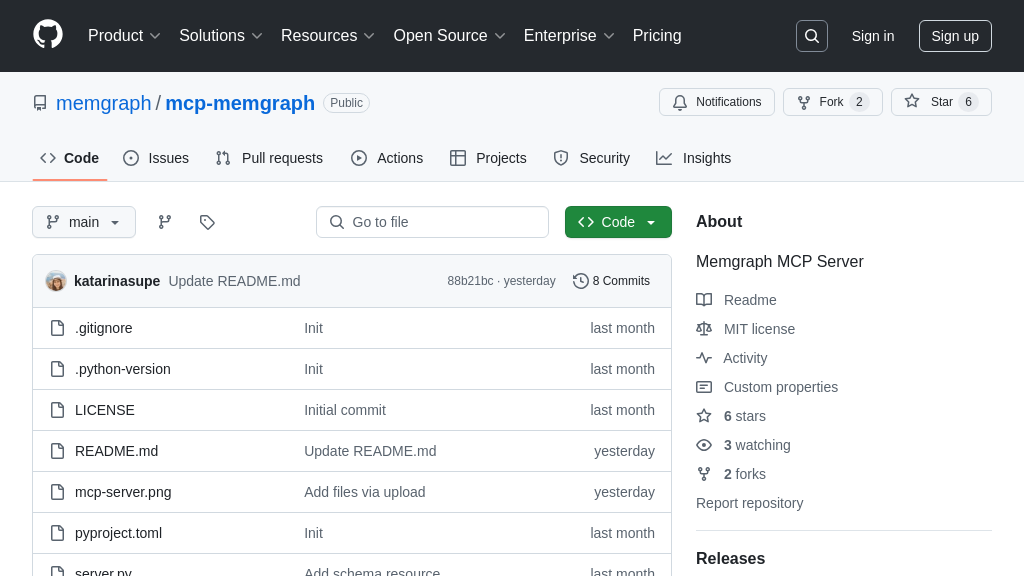

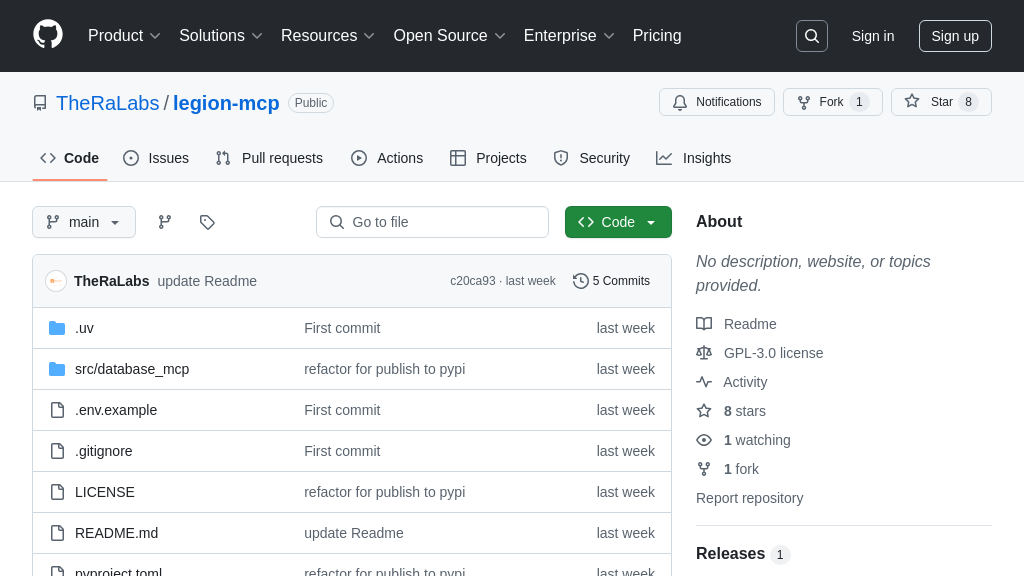

legion-mcp

Database MCP Server: Connect AI models to databases using MCP for intelligent data interaction.

legion-mcp Solution Overview

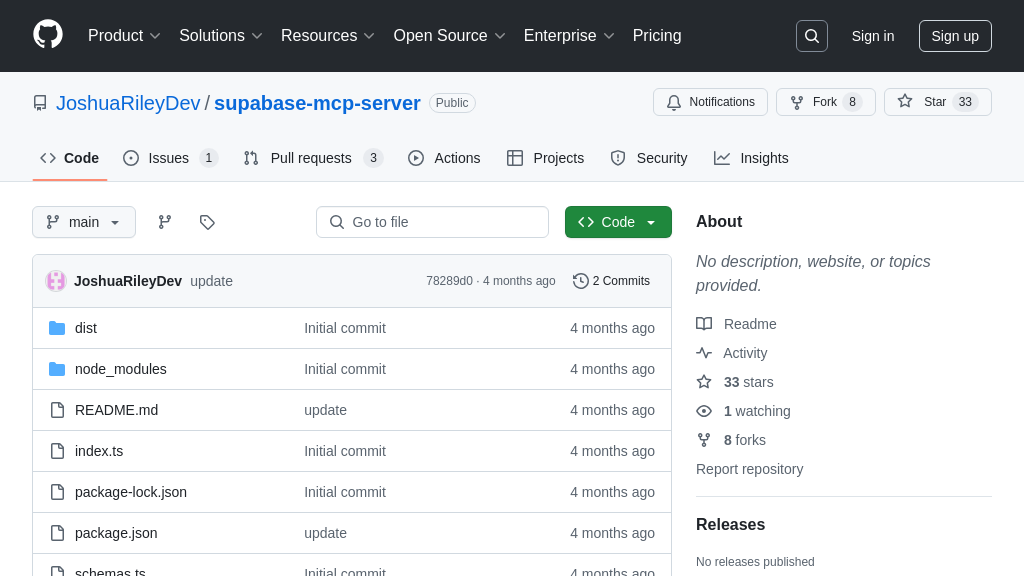

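Legion AI's Database MCP Server is a tool that simplifies interaction between AI models and databases through the Legion Query Runner. As an MCP server, it exposes database operations as resources, tools, and prompts that AI assistants can use, which improves the assistant's contextual awareness.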

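The server supports multiple databases and offers flexible deployment options, including a standalone MCP server and FastAPI integration. Developers can integrate features such as SQL query execution and schema retrieval into AI applications over several transport mechanisms, including standard input/output and HTTP/SSE. Server behavior can be customized through environment variables or JSON configuration.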

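The core value of Database MCP Server is that it removes the complexity of giving AI models direct database access by providing a secure, standardized interface. Developers can build smarter, data-driven AI applications without dealing with the details of the underlying databases. Through the MCP protocol, it supplies AI models with structured database information, which improves their reasoning and decision-making.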

legion-mcp Key Capabilities

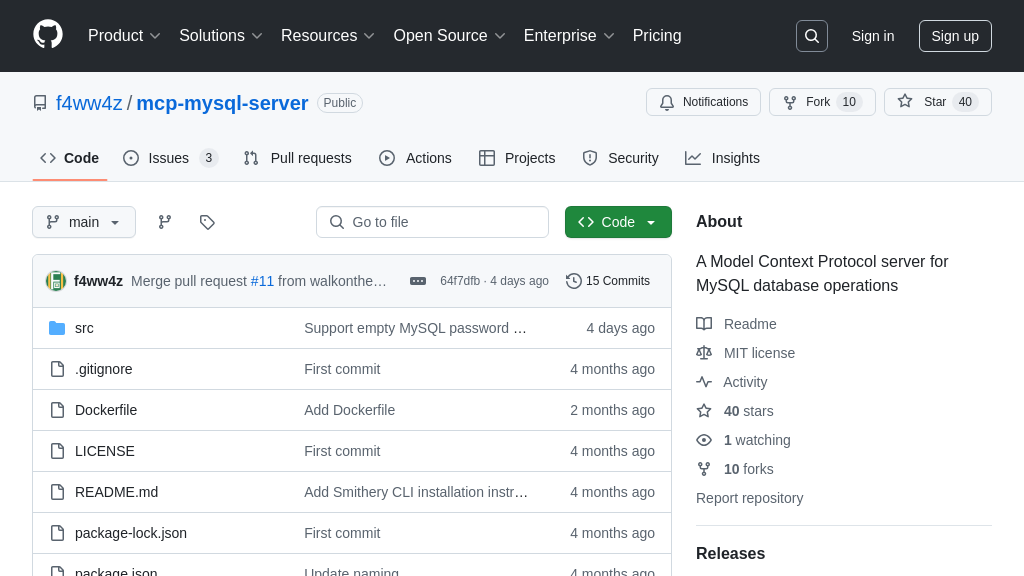

Database Access via Query Runner

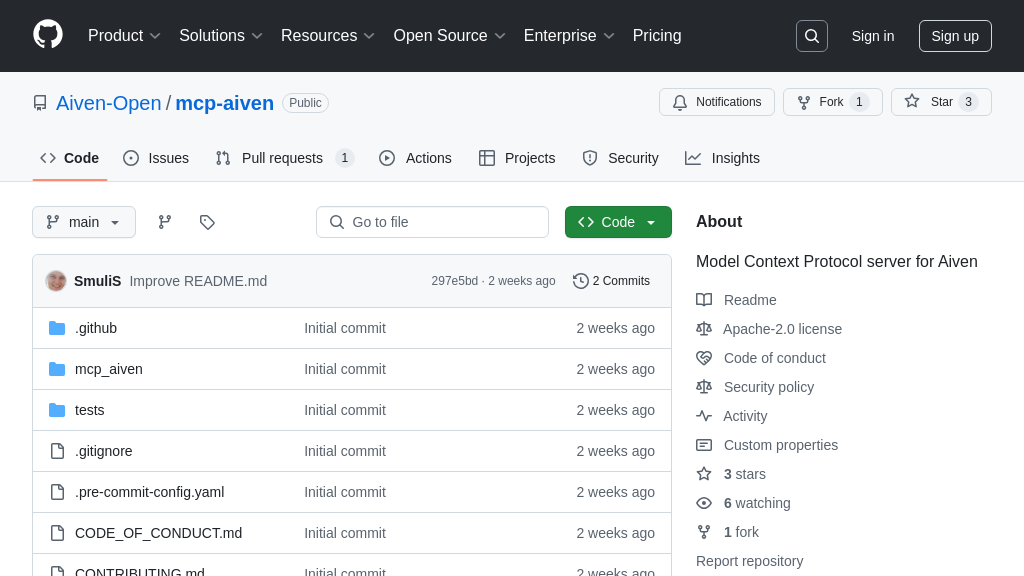

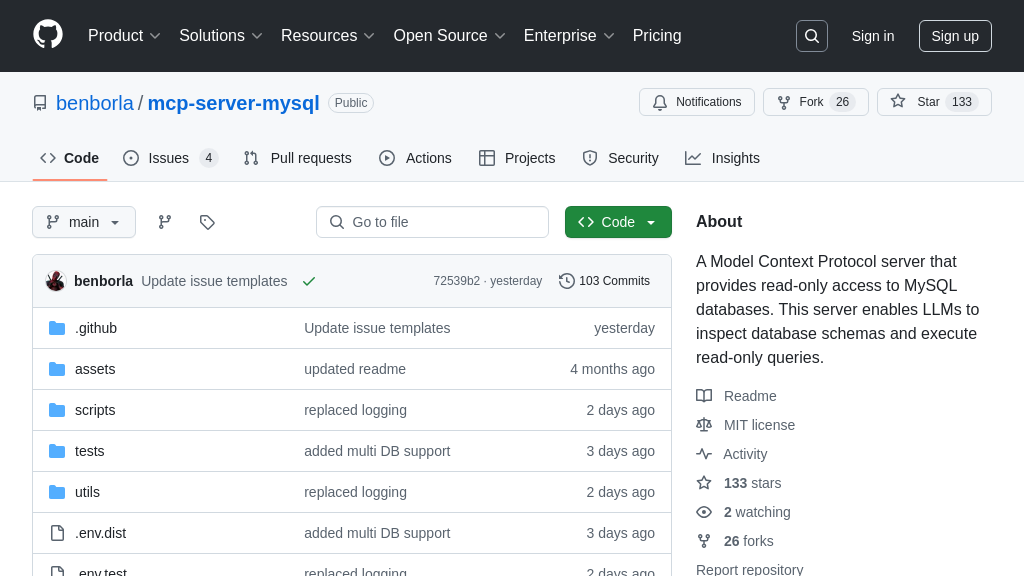

legion-mcp uses the Legion Query Runner library to provide a unified interface for accessing and querying various database systems. This abstraction means developers do not have to write database-specific code, which simplifies integration with AI models. The Query Runner supports a range of databases, including PostgreSQL, MySQL, SQL Server, BigQuery, and SQLite, so AI models can interact with diverse data sources through a consistent API. This matters most for AI applications that need access to structured data stored in relational databases.

For example, an AI-powered customer service chatbot can use legion-mcp to retrieve customer information from a database to personalize interactions. The chatbot formulates a query, legion-mcp executes it via Query Runner, and the results are presented to the chatbot for generating a tailored response. The specific database connection details are configured through environment variables or a JSON configuration file, ensuring secure and flexible deployment.
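The configuration step described above can be sketched as follows. This is a minimal illustration of reading connection details from either environment variables or a JSON file, not legion-mcp's actual loader: the variable names `DB_TYPE` and `DB_CONFIG`, the JSON keys `db_type` and `configuration`, and the function `load_db_settings` are assumptions made for this example.

```python
import json
import os

# Hypothetical names, chosen for this sketch only.
ENV_DB_TYPE = "DB_TYPE"
ENV_DB_CONFIG = "DB_CONFIG"


def load_db_settings(env=None, config_path=None):
    """Return (db_type, connection_dict) from a JSON file or the environment."""
    env = os.environ if env is None else env

    # A JSON file, when given, takes priority over environment variables.
    if config_path is not None:
        with open(config_path, encoding="utf-8") as fh:
            data = json.load(fh)
        return data["db_type"], data["configuration"]

    db_type = env.get(ENV_DB_TYPE)
    raw_config = env.get(ENV_DB_CONFIG)
    if not db_type or not raw_config:
        raise ValueError(
            f"set {ENV_DB_TYPE} and {ENV_DB_CONFIG}, or pass a config file"
        )
    # The connection details are stored as a JSON string in one variable.
    return db_type, json.loads(raw_config)


if __name__ == "__main__":
    demo_env = {"DB_TYPE": "sqlite", "DB_CONFIG": '{"database": ":memory:"}'}
    print(load_db_settings(env=demo_env))
```

Keeping credentials in the environment or in a separate file, rather than in code, is what lets the same server run unchanged across development and production.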

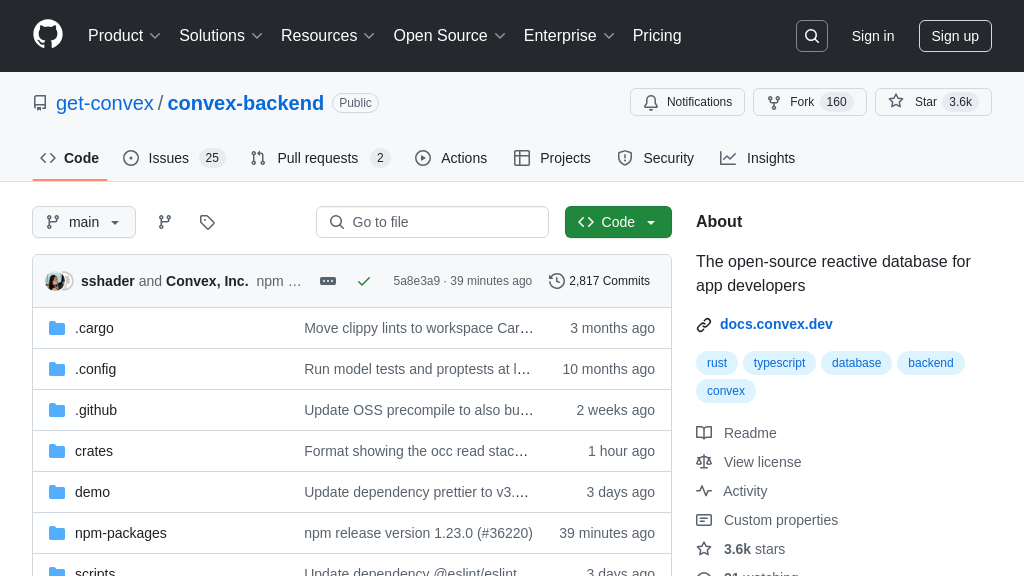

MCP-Enabled Database Operations

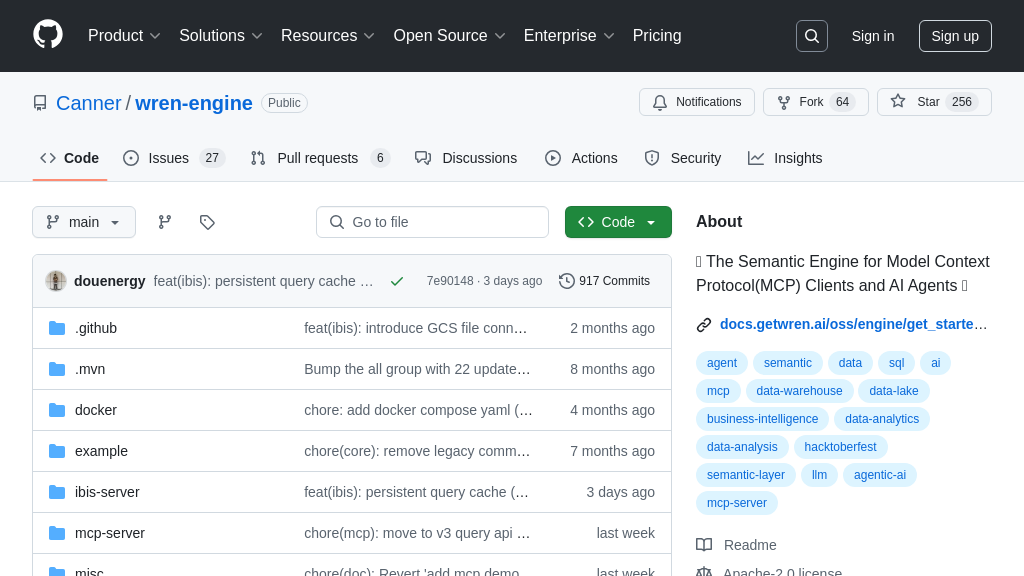

Legion-mcp exposes database operations as MCP resources, tools, and prompts, enabling seamless integration with AI models within the MCP ecosystem. This allows AI assistants to discover and utilize database functionalities dynamically. By adhering to the MCP standard, legion-mcp ensures interoperability with other MCP-compliant components, fostering a modular and extensible AI application architecture. The server translates AI model requests into database queries, executes them, and returns the results in a format suitable for AI consumption.
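To make the request-to-result flow concrete, here is a rough sketch of how a server might dispatch an MCP `tools/call` request, which is a JSON-RPC 2.0 message. The registry `TOOLS`, the placeholder tool `echo_query`, and the function `handle_tools_call` are hypothetical and exist only for this example; a real server would normally rely on the MCP SDK for this plumbing.

```python
# Hypothetical registry mapping tool names to Python callables.
TOOLS = {
    # Placeholder tool: it only echoes the query instead of running it.
    "echo_query": lambda query: f"would run: {query}",
}


def handle_tools_call(request: dict) -> dict:
    """Handle one JSON-RPC 2.0 'tools/call' request and build the response."""
    params = request.get("params", {})
    name = params.get("name")
    arguments = params.get("arguments", {})

    handler = TOOLS.get(name)
    if handler is None:
        # Unknown tools are reported as a protocol-level JSON-RPC error.
        return {
            "jsonrpc": "2.0",
            "id": request.get("id"),
            "error": {"code": -32602, "message": f"Unknown tool: {name}"},
        }

    try:
        text, is_error = handler(**arguments), False
    except Exception as exc:
        # Failures inside a tool are returned in the result, flagged by isError.
        text, is_error = str(exc), True

    return {
        "jsonrpc": "2.0",
        "id": request.get("id"),
        "result": {
            "content": [{"type": "text", "text": text}],
            "isError": is_error,
        },
    }


if __name__ == "__main__":
    print(handle_tools_call({
        "jsonrpc": "2.0",
        "id": 1,
        "method": "tools/call",
        "params": {"name": "echo_query", "arguments": {"query": "SELECT 1"}},
    }))
```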

Consider an AI-driven data analysis tool that needs to extract insights from a sales database. Legion-mcp exposes tools like execute_query and get_table_columns, allowing the AI to formulate and execute queries to retrieve sales data and understand the database schema. The AI can then use this information to generate reports, identify trends, and provide actionable recommendations. This integration is facilitated by the MCP Python SDK, which handles the communication and data serialization between the AI model and the legion-mcp server.
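The sales-analysis scenario above can be approximated with Python's built-in `sqlite3` module. The two functions below borrow the tool names `execute_query` and `get_table_columns` from the text, but their signatures, return shapes, and the `sales` table are assumptions for illustration; this is not legion-mcp's implementation, which goes through Query Runner.

```python
import json
import sqlite3


def get_table_columns(conn, table):
    """Return the column names and declared types of one table."""
    # PRAGMA does not accept bound parameters, so confirm the table exists first.
    row = conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' AND name = ?",
        (table,),
    ).fetchone()
    if row is None:
        raise ValueError(f"unknown table: {table}")
    quoted = row[0].replace('"', '""')
    columns = conn.execute(f'PRAGMA table_info("{quoted}")').fetchall()
    # Each PRAGMA row is (cid, name, type, notnull, dflt_value, pk).
    return [{"name": col[1], "type": col[2]} for col in columns]


def execute_query(conn, sql):
    """Run a SQL statement and return the rows as a JSON string."""
    cursor = conn.execute(sql)
    names = [d[0] for d in cursor.description] if cursor.description else []
    rows = [dict(zip(names, record)) for record in cursor.fetchall()]
    return json.dumps({"columns": names, "rows": rows})


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
    conn.executemany(
        "INSERT INTO sales VALUES (?, ?)",
        [("east", 10.0), ("west", 5.0), ("east", 2.5)],
    )
    print(get_table_columns(conn, "sales"))
    print(execute_query(
        conn,
        "SELECT region, SUM(amount) AS total FROM sales "
        "GROUP BY region ORDER BY region",
    ))
```

An assistant would typically call the schema tool first, then use the column names it learns to write the aggregate query.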

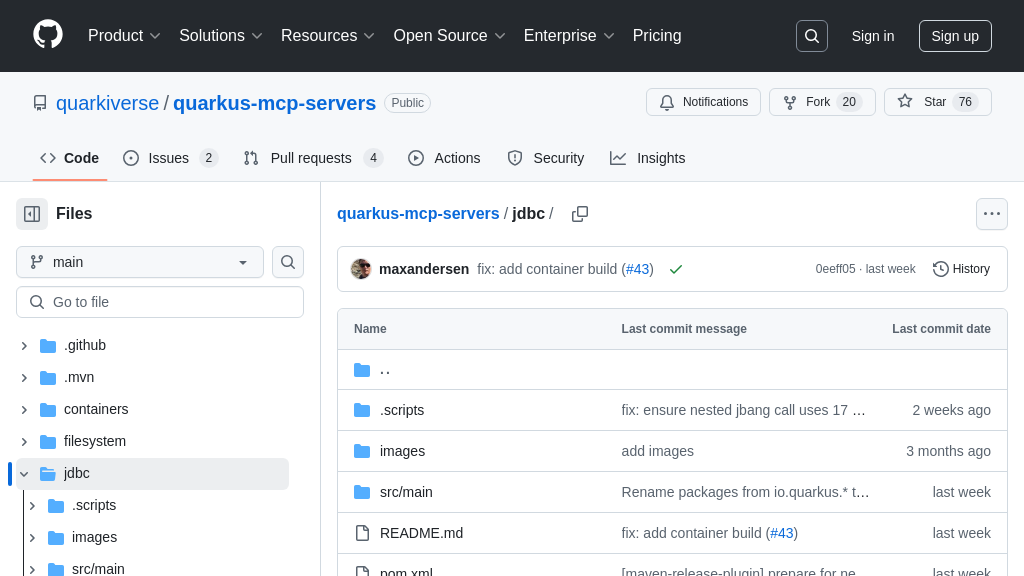

Flexible Deployment Options

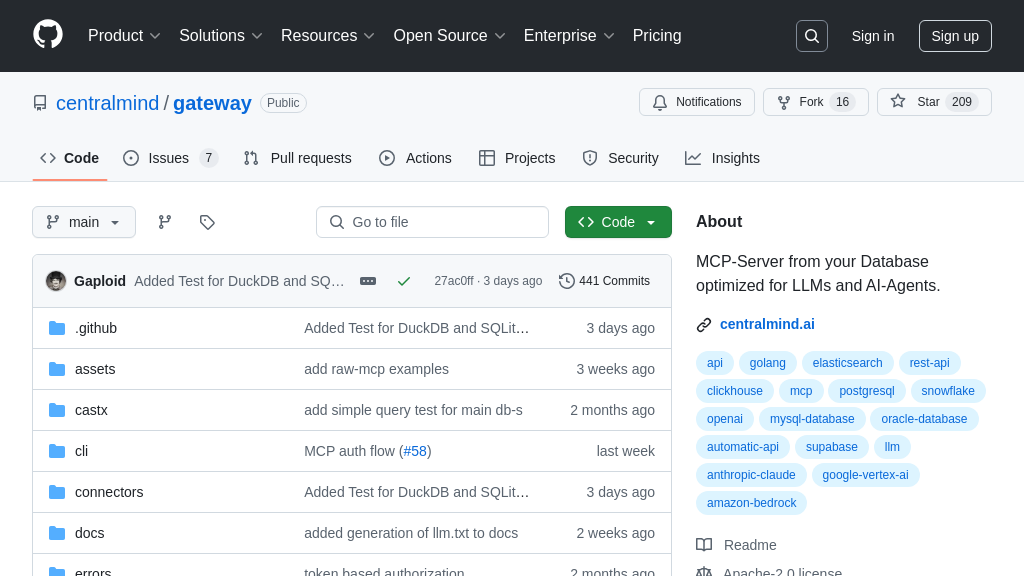

legion-mcp offers multiple deployment options, including a standalone MCP server and FastAPI integration, so it can fit different application architectures and deployment environments. The standalone server is deployed independently, while the FastAPI integration embeds legion-mcp functionality within an existing FastAPI application. The embedded option avoids the overhead of deploying and operating a separate server.

For instance, a development team building an AI-powered data pipeline might choose to integrate legion-mcp directly into their existing FastAPI application to streamline the deployment process. Alternatively, a team deploying a large-scale AI service might opt for a standalone legion-mcp server to isolate database access and improve scalability. The choice of deployment option depends on the specific requirements of the application and the existing infrastructure. The configuration is managed through environment variables, command-line arguments, or MCP settings JSON, providing further flexibility in deployment and management.
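One way to picture configuration coming from both command-line arguments and environment variables is a small resolver in which an explicit flag overrides the environment, and the environment overrides a default. The flags `--transport` and `--db-type`, the variables `MCP_TRANSPORT` and `DB_TYPE`, and the precedence order are all assumptions for this sketch; the source does not specify them.

```python
import argparse
import os


def resolve_settings(argv, env):
    """Merge settings with precedence: CLI flag, then environment, then default."""
    parser = argparse.ArgumentParser(description="hypothetical server launcher")
    # "stdio" and "sse" stand for the two transport styles the overview mentions.
    parser.add_argument("--transport", choices=["stdio", "sse"], default=None)
    parser.add_argument("--db-type", default=None)
    args = parser.parse_args(argv)

    return {
        "transport": args.transport or env.get("MCP_TRANSPORT", "stdio"),
        "db_type": args.db_type or env.get("DB_TYPE"),
    }


if __name__ == "__main__":
    # With no flags, the values come from the environment or the defaults.
    print(resolve_settings([], dict(os.environ)))
```

A standalone deployment might set everything through the environment of its container, while a developer testing locally overrides a single value with a flag.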