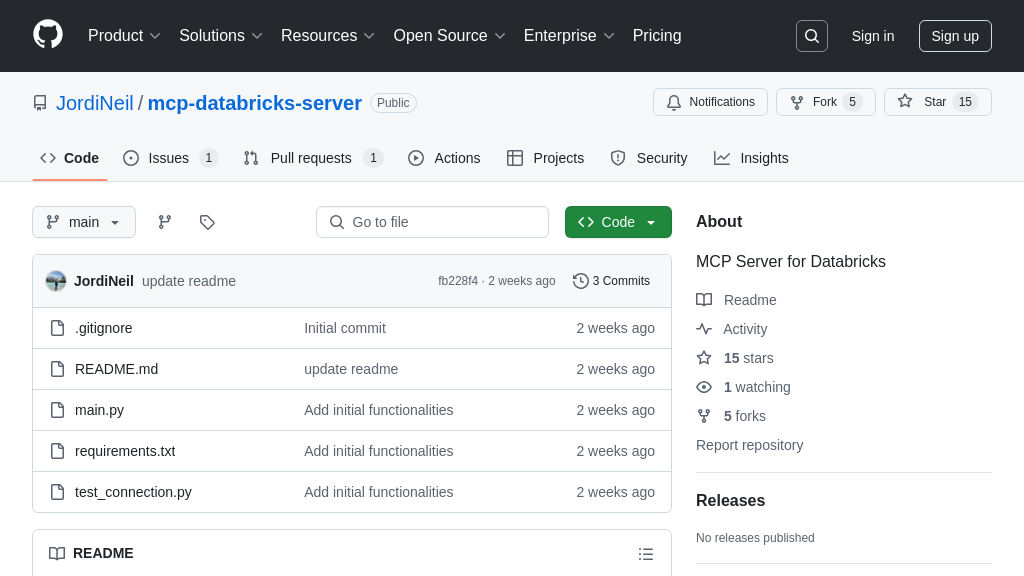

mcp-databricks-server

mcp-databricks-server: Connect AI models to Databricks for natural language SQL queries and job management.

mcp-databricks-server Solution Overview

The Databricks MCP Server connects AI models to a Databricks workspace through the Model Context Protocol (MCP). It lets Large Language Models (LLMs) execute SQL queries on Databricks SQL warehouses, list jobs, and retrieve job statuses in response to natural language requests. Because the server calls the Databricks API on the model's behalf, developers can use Databricks data and job information directly within their AI workflows.

Key features include running SQL queries, listing all Databricks jobs, and obtaining detailed information about specific jobs. This reduces the need for manual data extraction and manipulation during AI development. The server is implemented in Python and communicates with LLM clients via standard input/output or HTTP/SSE, following the MCP architecture. Its core value is natural language interaction with Databricks, which simplifies data access and analysis for AI models.
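To make the transport concrete: MCP clients invoke tools by sending JSON-RPC 2.0 messages with the method tools/call. The sketch below builds such a message for run_sql_query. The helper name build_tool_call, the request id, and the SQL text are illustrative assumptions and are not part of the server.

```python
import json


def build_tool_call(request_id: int, tool: str, arguments: dict) -> str:
    """Serialize an MCP tools/call request as a single JSON-RPC 2.0 message.

    Hypothetical helper for illustration; a real MCP client library
    would normally build and send this message for you.
    """
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool, "arguments": arguments},
    }
    return json.dumps(message)


# Example: ask the server to run a trivial query.
request = build_tool_call(1, "run_sql_query", {"sql": "SELECT 1"})
print(request)
```

Over standard input/output the client writes one such message per line and reads the response the same way. Over HTTP/SSE the same payload travels in an HTTP request body.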

mcp-databricks-server Key Capabilities

SQL Query Execution

The mcp-databricks-server lets AI models interact directly with Databricks SQL warehouses by executing SQL queries. LLMs can use it to retrieve specific data, perform aggregations, and gain insights from data stored in Databricks. The server receives a SQL query from the AI model via MCP, sends it to the Databricks SQL warehouse, and returns the results to the model in a structured format. This lets AI models answer complex questions, generate reports, and base decisions on current data in Databricks. For example, an AI assistant could answer a user's question like "What were the top 5 best-selling products last month?" by constructing and executing the appropriate SQL query. The corresponding tool is run_sql_query(sql: str).
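The "structured format" step can be sketched as follows. This is a minimal illustration and not the server's actual implementation: it uses an in-memory SQLite database as a stand-in for a SQL warehouse, and the function name run_query_structured and the sales table are hypothetical.

```python
import sqlite3


def run_query_structured(connection, sql: str) -> list:
    """Run sql on a DB-API connection and return rows as dictionaries.

    Hypothetical helper: each row becomes {column_name: value}, a shape
    that is easy to serialize to JSON for an MCP response.
    """
    cursor = connection.cursor()
    try:
        cursor.execute(sql)
        # cursor.description is None for statements that return no rows.
        columns = [col[0] for col in (cursor.description or [])]
        return [dict(zip(columns, row)) for row in cursor.fetchall()]
    finally:
        cursor.close()


# Stand-in for a SQL warehouse: an in-memory SQLite database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (product TEXT, units INTEGER)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [("widget", 30), ("gadget", 45), ("gizmo", 12)],
)

top = run_query_structured(
    conn, "SELECT product, units FROM sales ORDER BY units DESC LIMIT 2"
)
print(top)
```

Returning a list of dictionaries keeps column names attached to values, so the model does not have to infer which position holds which field.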

Databricks Job Management

This feature lets AI models inspect Databricks jobs by listing existing jobs and retrieving their status. Listing jobs gives the model an overview of the current workload and helps it identify specific jobs of interest. Retrieving a job's status lets the model monitor progress, detect failures, and decide what to do next. For instance, an AI-powered monitoring system could use this feature to send alerts when a job fails or exceeds a certain runtime threshold; restarting a failed job would need a capability beyond the tools listed here. The tools list_jobs() and get_job_status(job_id: int) are used for this purpose. This functionality streamlines job monitoring and enables proactive intervention, which can improve the reliability of Databricks workflows.
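The monitoring scenario above can be sketched as a small rule over job statuses. The JobStatus shape, the state names, and the function jobs_needing_attention are assumptions made for illustration; the actual fields returned by get_job_status(job_id: int) may differ.

```python
from dataclasses import dataclass


@dataclass
class JobStatus:
    """Hypothetical, simplified view of one job's status."""
    job_id: int
    state: str            # assumed values: "RUNNING", "SUCCESS", "FAILED"
    runtime_seconds: int


def jobs_needing_attention(statuses, max_runtime_seconds: int) -> list:
    """Return (job_id, reason) pairs for failed or long-running jobs."""
    alerts = []
    for status in statuses:
        if status.state == "FAILED":
            alerts.append((status.job_id, "failed"))
        elif status.state == "RUNNING" and status.runtime_seconds > max_runtime_seconds:
            alerts.append((status.job_id, "exceeded runtime threshold"))
    return alerts


statuses = [
    JobStatus(job_id=1, state="SUCCESS", runtime_seconds=120),
    JobStatus(job_id=2, state="FAILED", runtime_seconds=45),
    JobStatus(job_id=3, state="RUNNING", runtime_seconds=7200),
]
for job_id, reason in jobs_needing_attention(statuses, max_runtime_seconds=3600):
    print(f"job {job_id}: {reason}")
```

In practice the statuses would come from calling list_jobs() and then get_job_status for each job of interest.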

Detailed Job Information Retrieval

Beyond checking the status of a Databricks job, the mcp-databricks-server lets AI models retrieve comprehensive details about a specific job. This includes information such as the job's configuration, execution history, error messages, and resource consumption. With these details, AI models can better understand a job's behavior, diagnose performance bottlenecks, and suggest configuration changes. For example, an AI-powered debugging tool could use this feature to look for the root cause of a job failure by analyzing the error messages and execution history. The tool get_job_details(job_id: int) provides this functionality. This level of detail supports proactive management and optimization of Databricks jobs, which can improve performance and reduce operational costs.
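As a rough sketch of the debugging example, the code below picks the most recent failed run out of a job-details record. The dictionary layout (runs, start_time, result_state, error) is a hypothetical shape chosen for illustration and is not the documented output of get_job_details(job_id: int).

```python
from typing import Optional


def latest_failure(details: dict) -> Optional[dict]:
    """Return the most recent failed run, or None if no run failed."""
    failed = [
        run for run in details.get("runs", [])
        if run.get("result_state") == "FAILED"
    ]
    if not failed:
        return None
    # The run with the largest start_time is the most recent one.
    return max(failed, key=lambda run: run["start_time"])


# Hypothetical job details, as a model might receive them.
details = {
    "job_id": 42,
    "runs": [
        {"run_id": 1, "start_time": 1000, "result_state": "SUCCESS", "error": None},
        {"run_id": 2, "start_time": 2000, "result_state": "FAILED", "error": "Table not found"},
        {"run_id": 3, "start_time": 3000, "result_state": "FAILED", "error": "Cluster terminated"},
    ],
}

failure = latest_failure(details)
if failure is not None:
    print(failure["run_id"], failure["error"])
```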

Secure Databricks API Interaction

The mcp-databricks-server acts as an intermediary between AI models and the Databricks API. It handles authentication and authorization, so AI models only have access to the resources and actions they are permitted to use. The server is configured through environment variables, which keeps Databricks credentials out of source code and supports secure credential management. It also enforces access control policies, preventing unauthorized access to sensitive data and resources, which is crucial for maintaining the security and integrity of the Databricks environment. By hiding the details of Databricks API authentication, the server simplifies the integration of AI models with Databricks while supporting a secure and compliant environment.
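Environment-based configuration might look like the sketch below. The variable names DATABRICKS_HOST, DATABRICKS_TOKEN, and DATABRICKS_HTTP_PATH are assumptions, as are the DatabricksConfig class and load_config function; check the server's own documentation for the names it actually reads.

```python
import os
from dataclasses import dataclass, field
from typing import Mapping

# Assumed variable names, for illustration only.
REQUIRED_VARS = ("DATABRICKS_HOST", "DATABRICKS_TOKEN", "DATABRICKS_HTTP_PATH")


@dataclass(frozen=True)
class DatabricksConfig:
    host: str
    http_path: str
    token: str = field(repr=False)  # keep the secret out of logs and reprs


def load_config(env: Mapping[str, str] = os.environ) -> DatabricksConfig:
    """Build the configuration from environment variables, failing early."""
    missing = [name for name in REQUIRED_VARS if not env.get(name)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return DatabricksConfig(
        host=env["DATABRICKS_HOST"],
        http_path=env["DATABRICKS_HTTP_PATH"],
        token=env["DATABRICKS_TOKEN"],
    )


# Example with placeholder values instead of the real process environment.
example_env = {
    "DATABRICKS_HOST": "example-workspace.invalid",
    "DATABRICKS_TOKEN": "placeholder-token",
    "DATABRICKS_HTTP_PATH": "/sql/placeholder",
}
print(load_config(example_env))
```

Failing at startup when a variable is missing surfaces configuration problems before any tool call is attempted, and excluding the token from the repr avoids leaking it into logs.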