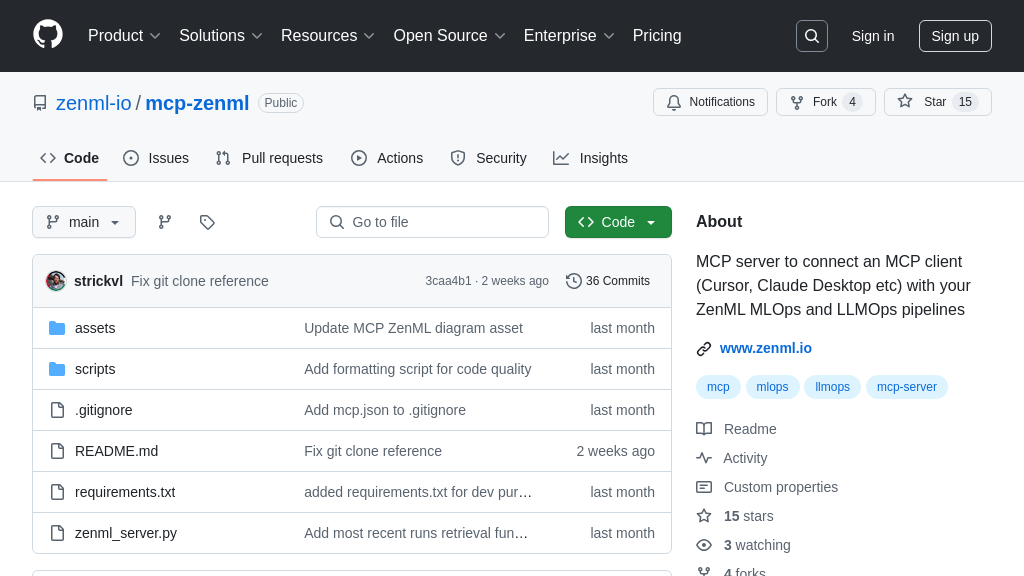

mcp-zenml

MCP server for ZenML, connecting MLOps pipelines to AI models. Access ZenML data from Claude, Cursor and more!

mcp-zenml Solution Overview

mcp-zenml is an MCP server that connects your ZenML MLOps and LLMOps pipelines to MCP clients such as Claude Desktop and Cursor. It exposes core ZenML functionality, giving the client live access to information about users, stacks, pipelines, runs, artifacts, and more. Developers can also use it to trigger new pipeline runs directly from the MCP client, keeping that part of the workflow inside the AI tool they already use.

The server acts as a bridge that lets AI models read and act on the state of your ML pipelines. Because it exposes a standard MCP interface, any MCP-compatible client can work with ZenML data without a custom integration. The server is implemented in Python on top of the ZenML API, and the setup process is described in the project's documentation.

mcp-zenml Key Capabilities

Access to ZenML Metadata

The mcp-zenml server provides a standardized way to access metadata stored in a ZenML instance, including users, stacks, pipelines, pipeline runs, artifacts, and other core ZenML entities. Exposing this data through the Model Context Protocol gives an AI model concrete context about the ML pipelines it is working with. For example, an LLM could help debug a failing pipeline run by examining the status of each step and the metadata of the artifacts it produced. The server does this by querying the ZenML API and returning the results in the format the MCP specification expects.
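
The shape of such a response can be sketched in Python. This is a minimal illustration, not the server's actual code: `StepInfo` and `PipelineRunInfo` are hypothetical stand-ins for what a ZenML query returns, the status strings are assumed, and `failed_steps` and `to_tool_result` are illustrative helpers. The run metadata is serialized to JSON and wrapped in an MCP-style tool result holding a single `text` content item, with the failed steps listed up front to support the debugging use case described above.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class StepInfo:
    # Hypothetical stand-in for a step record (not a ZenML model class).
    name: str
    status: str  # assumed values such as "completed" or "failed"
    output_artifacts: List[str] = field(default_factory=list)


@dataclass
class PipelineRunInfo:
    # Hypothetical stand-in for the metadata of one pipeline run.
    run_id: str
    pipeline: str
    status: str
    steps: List[StepInfo] = field(default_factory=list)


def failed_steps(run: PipelineRunInfo) -> List[str]:
    """Return the names of steps whose status is "failed"."""
    return [step.name for step in run.steps if step.status == "failed"]


def to_tool_result(runs: List[PipelineRunInfo]) -> dict:
    """Serialize runs as JSON inside an MCP-style tool result with one text item."""
    # asdict() also converts the nested StepInfo objects into plain dicts.
    payload = [{**asdict(run), "failed_steps": failed_steps(run)} for run in runs]
    return {"content": [{"type": "text", "text": json.dumps(payload, indent=2)}]}


if __name__ == "__main__":
    run = PipelineRunInfo(
        run_id="run-1",  # made-up example data
        pipeline="training",
        status="failed",
        steps=[
            StepInfo("load_data", "completed", ["raw_df"]),
            StepInfo("train", "failed"),
        ],
    )
    print(to_tool_result([run])["content"][0]["text"])
```

Returning JSON text keeps the payload readable for the model while staying machine-parseable if the client wants to post-process it.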

Triggering Pipeline Runs

Beyond read-only access, mcp-zenml can trigger new pipeline runs directly from an MCP client. This requires a run template for the pipeline to already exist in the ZenML environment. With one in place, an AI model can start a new training or inference workflow in response to new information or a user request. For example, if an LLM detects data drift affecting a production model, it could use mcp-zenml to trigger a run that retrains the model on the updated data. This shortens the path from an insight in the AI client to an action in the ML pipelines it depends on.
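
The run-template requirement amounts to a guard before any launch. The sketch below is hypothetical throughout: `RunTemplate`, `TriggerService`, `trigger_run`, and `TemplateNotFoundError` are illustrative names, and the in-memory list of triggered runs stands in for the real call to the ZenML server. It only shows the control flow: look up the template, refuse with a clear message if it is missing, otherwise record a new run.

```python
import uuid
from dataclasses import dataclass
from typing import Dict, List


class TemplateNotFoundError(LookupError):
    """Raised when no run template with the requested name exists."""


@dataclass(frozen=True)
class RunTemplate:
    # Hypothetical record for a pre-defined run template.
    name: str
    pipeline: str


class TriggerService:
    """Hypothetical in-memory stand-in for the server's trigger logic."""

    def __init__(self, templates: List[RunTemplate]) -> None:
        self._templates: Dict[str, RunTemplate] = {t.name: t for t in templates}
        self.triggered: List[dict] = []  # stands in for runs submitted to ZenML

    def trigger_run(self, template_name: str, reason: str) -> dict:
        template = self._templates.get(template_name)
        if template is None:
            # No template means nothing to launch from, so refuse explicitly.
            raise TemplateNotFoundError(
                f"No run template named {template_name!r}; create one in ZenML first."
            )
        run = {
            "run_id": str(uuid.uuid4()),
            "pipeline": template.pipeline,
            "template": template.name,
            "reason": reason,  # why the AI client requested the run
        }
        self.triggered.append(run)
        return run


if __name__ == "__main__":
    service = TriggerService([RunTemplate("retrain-on-drift", "training")])
    print(service.trigger_run("retrain-on-drift", reason="data drift detected"))
```

Raising a dedicated error, rather than returning an empty result, lets the client tell the user exactly what is missing instead of silently doing nothing.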

Integration with ZenML Cloud

The mcp-zenml server also works with ZenML Cloud, giving access to remote ZenML instances. Users keep the managed services and collaboration features of ZenML Cloud while gaining the context that MCP provides. To connect to ZenML Cloud, supply the server URL and an API key, which can be obtained from the ZenML Cloud UI. For instance, a data scientist can use Claude Desktop with a ZenML Cloud instance to query pipeline metadata and trigger new runs without managing the underlying infrastructure.
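
A minimal sketch of validating these connection settings before the server starts, assuming they are supplied through ZenML's `ZENML_STORE_URL` and `ZENML_STORE_API_KEY` environment variables. `ZenMLConnection` and `load_connection` are hypothetical names; the point is to fail fast with a clear message and to keep the API key out of printed or logged representations of the settings.

```python
import os
from dataclasses import dataclass, field
from typing import Mapping, Optional
from urllib.parse import urlparse


@dataclass(frozen=True)
class ZenMLConnection:
    # Hypothetical container for the connection settings.
    url: str
    api_key: str = field(repr=False)  # excluded from repr() so logging the object hides it


def load_connection(env: Optional[Mapping[str, str]] = None) -> ZenMLConnection:
    """Read and validate the server URL and API key, failing fast if either is unusable."""
    env = os.environ if env is None else env
    url = env.get("ZENML_STORE_URL", "").strip().rstrip("/")
    api_key = env.get("ZENML_STORE_API_KEY", "").strip()

    missing = [
        name
        for name, value in (("ZENML_STORE_URL", url), ("ZENML_STORE_API_KEY", api_key))
        if not value
    ]
    if missing:
        raise ValueError(f"Missing required environment variables: {', '.join(missing)}")

    parsed = urlparse(url)
    if parsed.scheme not in ("http", "https") or not parsed.netloc:
        raise ValueError(f"ZENML_STORE_URL is not a valid http(s) URL: {url!r}")
    return ZenMLConnection(url=url, api_key=api_key)
```

Calling `load_connection()` once at server startup surfaces a missing key or malformed URL immediately, rather than on the first query from the MCP client.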

Technical Implementation

The mcp-zenml server is implemented in Python and uses the ZenML Python client to interact with the ZenML API. It relies on uv to manage dependencies and to run the server. The server communicates with MCP clients over standard input/output, following the MCP specification. It is registered with a client through a JSON configuration file that specifies the command that launches the server, along with environment variables for authentication and logging. This design keeps the server lightweight and makes it straightforward to connect to clients such as Claude Desktop and Cursor.
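
The client-side JSON configuration can be generated rather than written by hand. The sketch below assumes the `mcpServers` layout used by Claude Desktop, with `command`, `args`, and `env` keys per server. `build_client_config` is a hypothetical helper, the script path, URL, and key are placeholders, and the logging variables shown are examples rather than a required set.

```python
import json
import shutil
from typing import Optional


def build_client_config(
    script_path: str,
    store_url: str,
    api_key: str,
    uv_path: Optional[str] = None,
) -> dict:
    """Build an MCP client config entry that launches the server via `uv run`."""
    # A GUI client may not see the same PATH as your shell, so prefer an absolute path to uv.
    uv = uv_path or shutil.which("uv") or "uv"
    return {
        "mcpServers": {
            "zenml": {
                "command": uv,
                "args": ["run", script_path],
                "env": {
                    "ZENML_STORE_URL": store_url,
                    "ZENML_STORE_API_KEY": api_key,
                    # Example logging settings: quieter ZenML logs and unbuffered
                    # Python output, since the stdio transport uses the process's streams.
                    "ZENML_LOGGING_VERBOSITY": "WARN",
                    "PYTHONUNBUFFERED": "1",
                },
            }
        }
    }


if __name__ == "__main__":
    config = build_client_config(
        script_path="/path/to/zenml_server.py",  # placeholder, not the real path
        store_url="https://your-zenml-server.example.com",  # placeholder
        api_key="YOUR_API_KEY",  # placeholder
    )
    print(json.dumps(config, indent=2))
```

Running the script prints a JSON document whose `mcpServers` entry can be merged into the client's existing configuration file.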