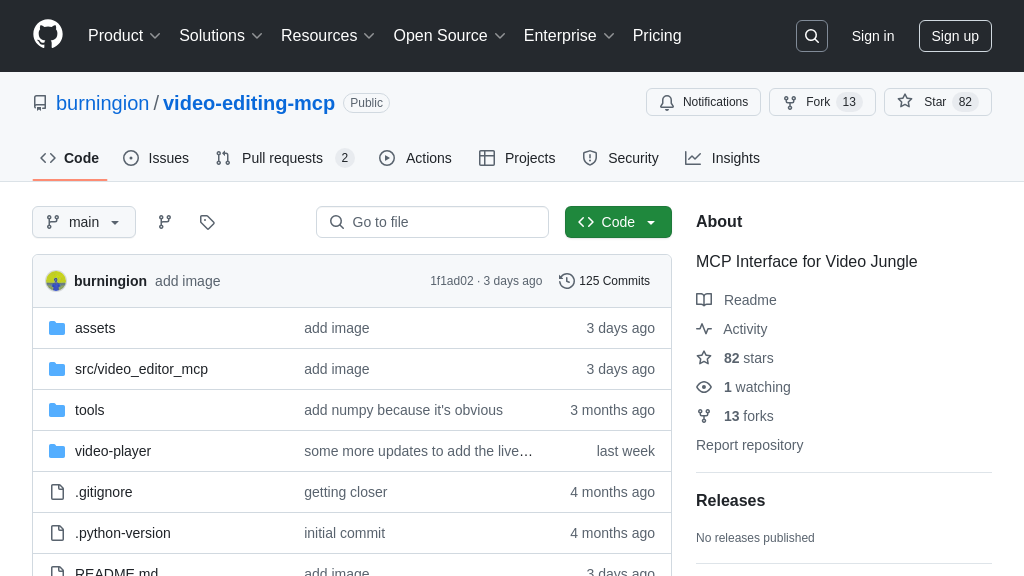

video-editing-mcp

AI-powered video editing with video-editing-mcp: upload, edit, search, and generate videos using your favorite LLMs.

video-editing-mcp Solution Overview

The Video Editor MCP server gives AI models video understanding and editing capabilities. It acts as a bridge between Large Language Models (LLMs) and Video Jungle, letting users upload, search, and generate videos with natural-language prompts. Key features include a custom vj:// URI scheme for managing video resources, embedding-based video search, and tools for generating video edits from one or more source videos.
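Because every video is addressed through a vj:// URI, client code often needs to split such a URI into its parts. The sketch below assumes a `vj://<kind>/<id>` layout (for example `vj://video-file/abc123`); that layout and the `VjUri`, `parse_vj_uri`, and `make_vj_uri` names are hypothetical illustrations, not the server's documented format.

```python
from dataclasses import dataclass
from urllib.parse import urlparse


@dataclass(frozen=True)
class VjUri:
    """Parsed vj:// URI, assuming the hypothetical layout vj://<kind>/<id>."""
    kind: str
    resource_id: str


def parse_vj_uri(uri: str) -> VjUri:
    # urlparse places the segment after "//" (up to the next "/") in netloc
    # and the remainder in path, e.g. "vj://video-file/abc123" ->
    # netloc="video-file", path="/abc123".
    parsed = urlparse(uri)
    if parsed.scheme != "vj":
        raise ValueError(f"not a vj:// URI: {uri!r}")
    resource_id = parsed.path.lstrip("/")
    if not parsed.netloc or not resource_id:
        raise ValueError(f"expected vj://<kind>/<id>, got {uri!r}")
    return VjUri(kind=parsed.netloc, resource_id=resource_id)


def make_vj_uri(kind: str, resource_id: str) -> str:
    # Inverse of parse_vj_uri under the same assumed layout.
    return f"vj://{kind}/{resource_id}"
```

Keeping parsing in one helper means that if the real URI layout differs from this assumption, only `parse_vj_uri` and `make_vj_uri` need to change.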

This MCP server lets developers bring video content into AI workflows. Through Video Jungle's API, AI models can analyze video content, identify key moments, and create tailored edits, automating editing tasks that would otherwise be done by hand. The server communicates over standard input/output (stdio) and HTTP/SSE, so it can run either as a local subprocess of an MCP client or as a networked service. To use the tools, sign up for Video Jungle and add your API key.
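Over the stdio transport, an MCP client and server exchange JSON-RPC 2.0 messages, one message per line, and a tool is invoked with a `tools/call` request. The following is a minimal sketch of building and framing such a request; the helper names are hypothetical, and the tool arguments in the usage note are placeholders rather than this server's documented schema (query the server with `tools/list` for the real input schemas).

```python
import json
from typing import Any


def tools_call_request(request_id: int, tool: str, arguments: dict[str, Any]) -> dict[str, Any]:
    """Build an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool, "arguments": arguments},
    }


def encode_stdio(message: dict[str, Any]) -> bytes:
    # The stdio transport is newline-delimited. json.dumps without indent
    # escapes any newlines inside strings, so the only raw newline in the
    # output is the delimiter appended here.
    return (json.dumps(message, separators=(",", ":")) + "\n").encode("utf-8")


def decode_stdio_line(line: bytes) -> dict[str, Any]:
    # Parse one newline-delimited message received from the server.
    return json.loads(line.decode("utf-8"))
```

Usage: `encode_stdio(tools_call_request(1, "search-videos", {"query": "close-up shots of a flower"}))` produces one line ready to write to the server's stdin; the `"query"` argument name is an assumption.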

video-editing-mcp Key Capabilities

Video Upload and Management

The add-video tool uploads video content to the Video Jungle platform directly through the MCP server. It accepts a video URL, downloads the video, and stores it in the user's Video Jungle library. The uploaded video then undergoes multimodal analysis of both its audio and visual content, which produces the metadata that later search and editing operations rely on. The tool returns a vj:// URI, a custom URI scheme that uniquely identifies the uploaded video so it can be referenced and manipulated in later tool calls. For example, a user could upload a YouTube tutorial video and then use the search tool to find specific segments.
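On the client side, an add-video call boils down to validating the input URL and then picking the returned vj:// URI out of the tool result. This is a hedged sketch: the argument names `name` and `url`, and the assumption that the URI appears somewhere in the result's text content, are hypothetical and should be checked against the server's actual tool schema and responses.

```python
import re
from urllib.parse import urlparse

# Matches a vj:// URI up to whitespace, quotes, or angle brackets.
VJ_URI_PATTERN = re.compile(r"vj://[^\s\"'<>]+")


def add_video_arguments(name: str, url: str) -> dict[str, str]:
    """Build arguments for add-video (argument names are assumptions)."""
    parsed = urlparse(url)
    if parsed.scheme not in ("http", "https") or not parsed.netloc:
        raise ValueError(f"add-video expects an http(s) URL, got {url!r}")
    return {"name": name, "url": url}


def extract_vj_uris(result_text: str) -> list[str]:
    """Pull vj:// URIs out of a tool result's text content."""
    # Trailing sentence punctuation is stripped so that
    # "stored as vj://video-file/abc123." yields a clean URI.
    return [m.rstrip(".,;:)") for m in VJ_URI_PATTERN.findall(result_text)]
```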

Semantic Video Search

The search-videos tool performs semantic search across the user's video library. Instead of matching keywords, it uses embeddings of the video content, so it can find relevant segments even when the exact words do not appear in a video's title or description. Results include detailed metadata about the content, such as detected objects, actions, or spoken words, along with their timestamps; this granularity is what makes precise editing and content generation possible. For instance, a user could search for "close-up shots of a flower" in a collection of nature videos, even if none of them is tagged with that phrase.
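The general idea behind embedding-based search can be shown with cosine similarity: embed the query and each timestamped segment as vectors, then rank segments by how closely their vectors point in the same direction. This sketch illustrates the technique only; it is not Video Jungle's implementation, the `Segment` type is hypothetical, and the toy 2-D vectors stand in for embeddings a real model would produce.

```python
import math
from dataclasses import dataclass


@dataclass
class Segment:
    video_uri: str
    start: float            # seconds
    end: float              # seconds
    embedding: list[float]  # produced by some embedding model (not shown)


def cosine(a: list[float], b: list[float]) -> float:
    # Cosine similarity: dot product divided by the product of the norms.
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def search(query_embedding: list[float], segments: list[Segment], top_k: int = 3) -> list[tuple[float, Segment]]:
    # Score every segment, then return the top_k highest-scoring ones.
    scored = [(cosine(query_embedding, s.embedding), s) for s in segments]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]
```

A segment can rank highly without sharing any words with the query, which is why a search for "close-up shots of a flower" can succeed on untagged footage.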

AI-Powered Video Editing

The generate-edit-from-videos and generate-edit-from-single-video tools generate video edits automatically from search results or content analysis. generate-edit-from-videos builds a compilation from multiple source videos, while generate-edit-from-single-video extracts specific segments from one video. Both are guided by the metadata produced during the analysis phase, so edits can follow what is actually seen and said in the footage. For example, a user could ask for a highlight reel of every instance where a specific phrase is spoken in a video. This removes much of the manual work of cutting footage by hand. Note that the current implementation relies on context from the current chat session.
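The highlight-reel example amounts to turning timestamped metadata into a list of cuts. The sketch below assumes transcript entries shaped as hypothetical `(video_uri, start, end, text)` tuples, selects the lines containing a phrase, pads each cut slightly, and merges overlapping cuts from the same video into a simple edit decision list. It shows the idea only; the real tools' input and output formats are not documented here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cut:
    video_uri: str
    start: float  # seconds
    end: float    # seconds


def merge_cuts(cuts: list[Cut]) -> list[Cut]:
    """Merge overlapping cuts from the same video, sorted by video then start time."""
    merged: list[Cut] = []
    for cut in sorted(cuts, key=lambda c: (c.video_uri, c.start)):
        last = merged[-1] if merged else None
        if last and last.video_uri == cut.video_uri and cut.start <= last.end:
            # Overlap: extend the previous cut instead of adding a new one.
            merged[-1] = Cut(last.video_uri, last.start, max(last.end, cut.end))
        else:
            merged.append(cut)
    return merged


def phrase_cuts(transcript: list[tuple[str, float, float, str]], phrase: str, pad: float = 0.5) -> list[Cut]:
    """Cuts around every transcript line that contains `phrase` (case-insensitive)."""
    needle = phrase.lower()
    hits = [
        Cut(uri, max(0.0, start - pad), end + pad)
        for uri, start, end, text in transcript
        if needle in text.lower()
    ]
    return merge_cuts(hits)
```

Padding and merging keep back-to-back mentions from producing choppy, overlapping clips in the final reel.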

Local Video File Search

The search-local-videos tool searches video files stored locally on a macOS device using Apple's tagging system. When the environment variable LOAD_PHOTOS_DB=1 is set, the MCP server can access the user's Photos library and search its videos by tag. For example, a user could search for "Skateboard" to find all videos tagged with that keyword in their Photos library. This lets local videos take part in the same AI-powered workflows as videos stored in Video Jungle. The tool requires macOS and access to the Photos application.
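The gating and lookup logic can be sketched in a few lines: local search is only available on macOS with `LOAD_PHOTOS_DB=1` set, and matching is a tag comparison. Here `LocalVideo`, `photos_search_enabled`, and `search_by_tag` are hypothetical names, the case-insensitive exact-tag match is an assumption about the server's behavior, and reading the actual Photos library is out of scope.

```python
import os
import sys
from collections.abc import Mapping
from dataclasses import dataclass, field


@dataclass
class LocalVideo:
    path: str
    labels: list[str] = field(default_factory=list)  # tags from the Photos library


def photos_search_enabled(env: Mapping[str, str] = os.environ, platform: str = sys.platform) -> bool:
    # Requires macOS ("darwin") and the opt-in LOAD_PHOTOS_DB=1 flag.
    return platform == "darwin" and env.get("LOAD_PHOTOS_DB") == "1"


def search_by_tag(videos: list[LocalVideo], tag: str) -> list[LocalVideo]:
    # Assumed semantics: case-insensitive exact match on any tag.
    wanted = tag.casefold()
    return [v for v in videos if any(label.casefold() == wanted for label in v.labels)]
```

Passing `env` and `platform` as parameters keeps the check testable on non-macOS machines.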