ableton-mcp

ableton-mcp is a Model Context Protocol (MCP) server that connects Claude AI to Ableton Live. It lets you control your DAW with natural-language prompts for music production, track creation, and session manipulation.

ableton-mcp Solution Overview

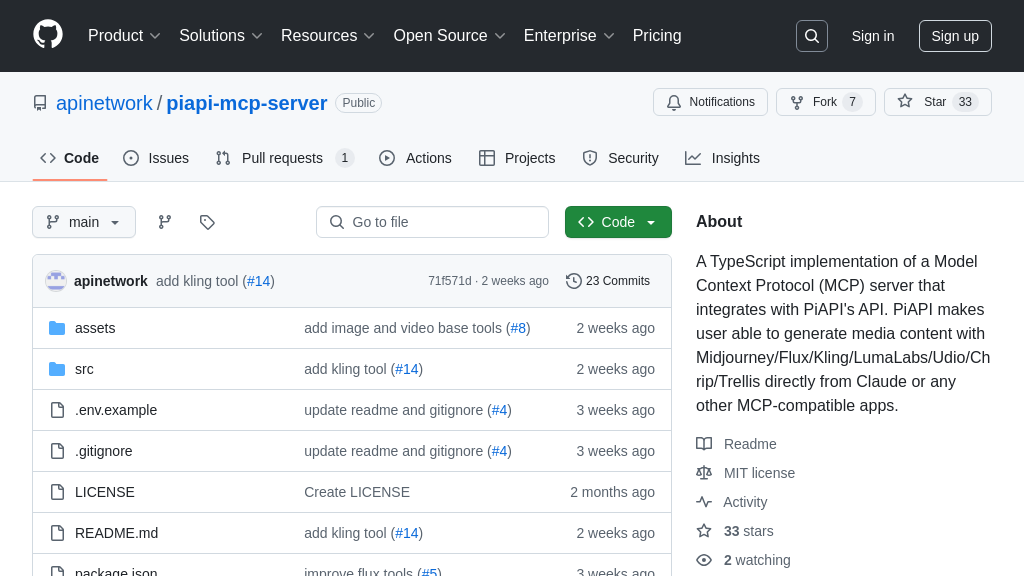

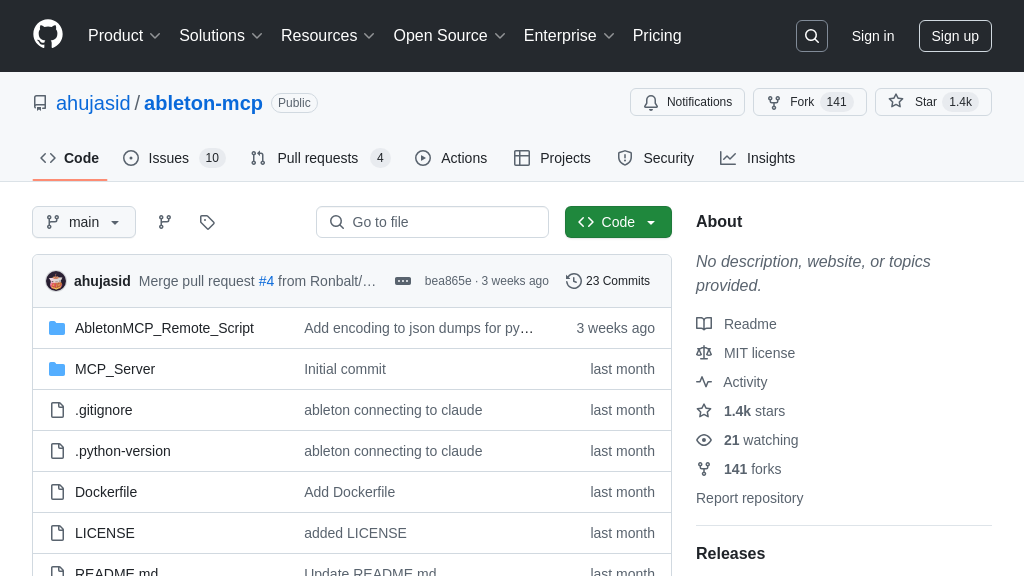

AbletonMCP is an MCP server that connects AI models such as Claude to Ableton Live, so developers and musicians can control a Live session with natural-language prompts. Communication is bi-directional: commands flow from the AI into Live, and results flow back to the AI. Supported actions include creating and modifying MIDI and audio tracks, loading instruments and effects from the Ableton browser, generating and editing MIDI clips, and controlling playback and tempo.

The integration has two parts: a Python MCP server that the AI client talks to, and a custom MIDI Remote Script that runs inside Ableton Live. The MCP server forwards each instruction to the Remote Script over a TCP socket, and the Remote Script carries it out in Live. The main benefit is AI-assisted composition and arrangement: repetitive steps in a production workflow can be delegated to the AI, and because the AI acts on the session directly rather than only suggesting changes, it opens up new creative possibilities. The server can be installed through Smithery or configured manually in MCP clients such as Claude Desktop or Cursor.
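The socket hop between the MCP server and the Remote Script can be pictured as a small request/response exchange of JSON messages. The sketch below is a minimal illustration under stated assumptions: the newline-delimited framing, the `type`/`params` and `status`/`result` field names, the `set_tempo` command, and the `send_command`/`serve_once` helpers are all hypothetical, not the project's confirmed protocol. `serve_once` plays the role of the Remote Script so the example runs without Live.

```python
import json
import socket
import threading

# Hypothetical wire format: one JSON object per line.
#   request:  {"type": <command name>, "params": {...}}
#   response: {"status": "success" | "error", "result": ...}


def send_command(host: str, port: int, command_type: str, params: dict) -> dict:
    """Send one command to the Remote Script and return its decoded reply."""
    message = json.dumps({"type": command_type, "params": params}) + "\n"
    with socket.create_connection((host, port), timeout=5.0) as sock:
        sock.sendall(message.encode("utf-8"))
        # Read until the newline that terminates the reply.
        with sock.makefile("r", encoding="utf-8") as reader:
            line = reader.readline()
    return json.loads(line)


def serve_once(server_sock: socket.socket) -> None:
    """Stand-in for the Remote Script: answer a single request, then return."""
    conn, _ = server_sock.accept()
    with conn, conn.makefile("rw", encoding="utf-8") as stream:
        request = json.loads(stream.readline())
        if request["type"] == "set_tempo":
            reply = {"status": "success", "result": {"tempo": request["params"]["tempo"]}}
        else:
            reply = {"status": "error", "message": f"unknown command {request['type']!r}"}
        stream.write(json.dumps(reply) + "\n")
        stream.flush()


if __name__ == "__main__":
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
    server.listen(1)
    port = server.getsockname()[1]
    worker = threading.Thread(target=serve_once, args=(server,))
    worker.start()
    print(send_command("127.0.0.1", port, "set_tempo", {"tempo": 120.0}))
    worker.join()
    server.close()
```

Opening a new connection per command keeps the sketch simple; a long-lived connection would avoid reconnect overhead but needs extra error handling for cases such as Live being restarted.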

ableton-mcp Key Capabilities

AI-Driven Track & Clip Creation

AbletonMCP lets AI models such as Claude take part in music creation directly, by creating and modifying MIDI and audio tracks in Ableton Live programmatically. This connects high-level creative prompts to concrete musical material in the Digital Audio Workstation (DAW). When a user gives a prompt such as "Create a new MIDI track with a synth bass sound," the MCP client (for example, Claude Desktop) calls a tool on the ableton-mcp server. The server translates the request into a JSON message and sends it over a TCP socket to the Ableton Remote Script running inside Live. The Remote Script interprets the message and uses Ableton Live's Python API to perform the action, such as creating a new track. The AI can also fill MIDI clips with notes that define melodies, rhythms, or chord progressions, turning a musical idea into playable data in the session. Developers and musicians can therefore prototype ideas, generate variations, or sketch a song's basic structure with natural-language commands, which speeds up the early stages of production.
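A prompt like "add a minor chord progression on a new track" ultimately becomes a short sequence of JSON messages. The sketch below shows what the server might send: create a MIDI track, add an empty clip, then fill it with notes. The command names (`create_midi_track`, `create_clip`, `add_notes_to_clip`), the note fields (`pitch`, `start_time`, `duration`, `velocity`, `mute`), and the helpers `chord_notes` and `progression_commands` are assumptions for illustration; times and lengths are in beats, and pitches use the common MIDI convention in which 60 is middle C (C4).

```python
from __future__ import annotations

import json

# Semitone offsets of natural note names above C.
NOTE_OFFSETS = {"C": 0, "D": 2, "E": 4, "F": 5, "G": 7, "A": 9, "B": 11}
# Semitone intervals above the root for each (hypothetical) supported triad quality.
TRIADS = {"major": (0, 4, 7), "minor": (0, 3, 7)}


def chord_notes(root: str, octave: int, quality: str, start: float, length: float) -> list[dict]:
    """Return one note dict per chord tone; MIDI pitch 60 is C4 (middle C)."""
    root_pitch = 12 * (octave + 1) + NOTE_OFFSETS[root]
    return [
        {"pitch": root_pitch + step, "start_time": start, "duration": length,
         "velocity": 100, "mute": False}
        for step in TRIADS[quality]
    ]


def progression_commands(track_index: int, chords: list[tuple[str, int, str]],
                         beats_per_chord: float = 4.0) -> list[dict]:
    """Build the command sequence: new MIDI track, empty clip, then the notes."""
    notes: list[dict] = []
    for n, (root, octave, quality) in enumerate(chords):
        notes.extend(chord_notes(root, octave, quality, n * beats_per_chord, beats_per_chord))
    clip_length = len(chords) * beats_per_chord  # in beats
    return [
        {"type": "create_midi_track", "params": {"index": track_index}},
        {"type": "create_clip", "params": {"track_index": track_index, "clip_index": 0,
                                           "length": clip_length}},
        {"type": "add_notes_to_clip", "params": {"track_index": track_index, "clip_index": 0,
                                                 "notes": notes}},
    ]


if __name__ == "__main__":
    # Am, F, C, G: one bar (four beats) per chord, on a new track at index 0.
    progression = [("A", 3, "minor"), ("F", 3, "major"), ("C", 4, "major"), ("G", 3, "major")]
    for command in progression_commands(0, progression):
        print(command["type"], json.dumps(command["params"]))
```

Splitting clip creation from note insertion keeps each message small and lets the AI add notes to an existing clip later without recreating it.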

Session & Transport Control

This feature gives the connected AI control over Ableton Live's session-level parameters and transport, so playback and the overall structure of a project can be driven by prompts or by automated rules. Through the MCP integration, the AI can do what a user would otherwise do by hand: start and stop playback, set the project tempo in BPM, fire individual clips or whole scenes, and possibly adjust other global parameters such as the time signature. A command like "Set the tempo to 140 BPM and play the scene containing the chorus" is relayed by the ableton-mcp server to the Ableton Remote Script, which executes it through the Live Object Model (LOM). Typical uses include auditioning different arrangements automatically, setting up playback conditions for recording or practice, and generative performances in which the AI changes the flow and tempo of the session according to rules or external inputs. In this role, the AI acts as a controller of the live session rather than only a generator of material.
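On the Remote Script side, these transport commands map onto a few LOM members: `Song.tempo`, `Song.start_playing()`, `Song.stop_playing()`, and `Scene.fire()`. The sketch below shows one way a script might dispatch them. So that it runs outside Live, `FakeSong` and `FakeScene` stand in for the real objects; the command names, the reply format, the name-based scene lookup, the tempo bounds, and the `handle_transport` helper are hypothetical.

```python
from dataclasses import dataclass, field

# Assumed tempo bounds, intended to match the 20 to 999 BPM range Live accepts.
MIN_TEMPO, MAX_TEMPO = 20.0, 999.0


@dataclass
class FakeScene:
    """Stand-in for a Live Scene. In Live, fire() launches the scene's clips;
    here it only records that it was called."""
    name: str
    fired: bool = False

    def fire(self) -> None:
        self.fired = True


@dataclass
class FakeSong:
    """Stand-in for Live's Song object: tempo, transport state, and scenes."""
    tempo: float = 120.0
    is_playing: bool = False
    scenes: list = field(default_factory=list)

    def start_playing(self) -> None:
        self.is_playing = True

    def stop_playing(self) -> None:
        self.is_playing = False


def handle_transport(song, command: dict) -> dict:
    """Apply one transport/session command to `song` and report the new state."""
    kind = command["type"]
    params = command.get("params", {})
    if kind == "set_tempo":
        tempo = float(params["tempo"])
        if not MIN_TEMPO <= tempo <= MAX_TEMPO:
            return {"status": "error", "message": f"tempo {tempo} BPM is out of range"}
        song.tempo = tempo
    elif kind == "start_playback":
        song.start_playing()
    elif kind == "stop_playback":
        song.stop_playing()
    elif kind == "fire_scene":
        # Resolve a prompt such as "the chorus" to a scene by its (case-insensitive) name.
        wanted = params["name"].casefold()
        scene = next((s for s in song.scenes if s.name.casefold() == wanted), None)
        if scene is None:
            return {"status": "error", "message": f"no scene named {params['name']!r}"}
        scene.fire()
    else:
        return {"status": "error", "message": f"unknown command {kind!r}"}
    return {"status": "success", "result": {"tempo": song.tempo, "is_playing": song.is_playing}}


if __name__ == "__main__":
    song = FakeSong(scenes=[FakeScene("Verse"), FakeScene("Chorus")])
    # "Set the tempo to 140 BPM and play the scene containing the chorus"
    for cmd in ({"type": "set_tempo", "params": {"tempo": 140}},
                {"type": "fire_scene", "params": {"name": "chorus"}}):
        print(handle_transport(song, cmd))
```

Validating parameters in the script and returning structured errors lets the AI see why a request failed and retry. In a real Remote Script, changes coming from a socket thread would likely need to be handed to Live's main thread before touching the LOM.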

Instrument & Effect Management

AbletonMCP lets the AI browse Ableton Live's browser and load instruments, audio effects, and sounds onto specific tracks. Users can draw on Ableton's built-in library, and possibly their own user libraries, through prompts, which speeds up sound design and mixing. For example, a user could ask Claude to "Load a 'Grand Piano' instrument onto track 1" or "Add a reverb effect with a long decay time to the drum track." The ableton-mcp server forwards each request to the Ableton Remote Script, which uses Live's browser API to find the requested device or preset and load it onto the target track. This extends the AI's role beyond note generation to shaping the sound of the music. It also removes the need to search for and load devices by hand: producers describe the sound or effect they want, and the AI handles the browser and device management.
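Loading a device is largely a search problem: the Remote Script has to find a loadable item in the browser tree before it can load it. The sketch below assumes Live's browser exposes category roots such as `instruments` whose items have `name`, `is_loadable`, and `children`, and that `load_item()` places the item on the currently selected track; verify these against the Live API for your version. `FakeBrowserItem`, `find_loadable`, and `load_onto_track` are hypothetical helpers, and the `SimpleNamespace` fakes let the code run outside Live.

```python
from __future__ import annotations

from collections import deque
from dataclasses import dataclass, field
from types import SimpleNamespace


@dataclass
class FakeBrowserItem:
    """Stand-in for a Live BrowserItem: a name, child items, and a loadable flag."""
    name: str
    is_loadable: bool = False
    children: list[FakeBrowserItem] = field(default_factory=list)


def find_loadable(root, name: str, max_depth: int = 6):
    """Breadth-first search for the first loadable item named `name` (case-insensitive)."""
    target = name.casefold()
    queue = deque([(root, 0)])
    while queue:
        item, depth = queue.popleft()
        if item.is_loadable and item.name.casefold() == target:
            return item
        if depth < max_depth:  # cap the walk; real browser trees can be very large
            queue.extend((child, depth + 1) for child in item.children)
    return None


def load_onto_track(song, browser, track_index: int, category: str, name: str) -> dict:
    """Select the target track, then load the matching browser item onto it."""
    item = find_loadable(getattr(browser, category), name)
    if item is None:
        return {"status": "error", "message": f"no loadable item named {name!r} in {category}"}
    # Assumption: load_item() loads onto the currently selected track.
    song.view.selected_track = song.tracks[track_index]
    browser.load_item(item)
    return {"status": "success", "result": {"track_index": track_index, "loaded": item.name}}


if __name__ == "__main__":
    piano = FakeBrowserItem("Grand Piano", is_loadable=True)
    instruments = FakeBrowserItem("Instruments", children=[
        FakeBrowserItem("Keys", children=[piano]),
    ])
    loaded = []  # records what the fake browser was asked to load
    browser = SimpleNamespace(instruments=instruments, load_item=loaded.append)
    song = SimpleNamespace(tracks=["Track 1", "Track 2"],
                           view=SimpleNamespace(selected_track=None))
    # "Load a 'Grand Piano' instrument onto track 1" (track 1 in the UI is index 0).
    print(load_onto_track(song, browser, 0, "instruments", "grand piano"))
```

Breadth-first search returns shallow matches first, and the depth cap bounds the cost on large libraries. Exact, case-insensitive name matching is a simplification; a fuller implementation would probably need fuzzy matching or item URIs to disambiguate presets that share a name.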