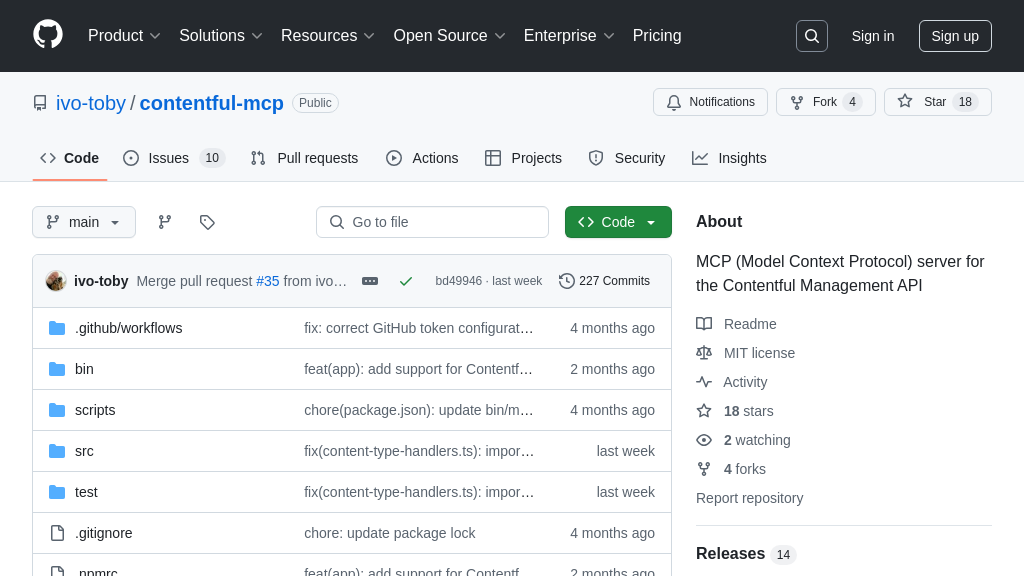

contentful-mcp

Contentful MCP server: AI-powered content management via seamless integration with Contentful's API.

contentful-mcp Solution Overview

Contentful MCP is a server that connects AI models to Contentful's Content Management API, enabling comprehensive content management capabilities. It provides tools for creating, reading, updating, and deleting entries and assets, along with space and content type management. Features like bulk publishing and unpublishing streamline content workflows.

This MCP server is designed to prevent context window overflow in LLMs, using smart pagination to return large result sets in small pages. Developers can use it to build AI-powered content management solutions, automate content updates, and keep content consistent. It integrates with AI models through the standard Model Context Protocol (MCP), allowing for AI-driven content creation, modification, and delivery. Authentication uses either a Content Management API token or App Identity, so operations stay within defined Contentful spaces and environments.

contentful-mcp Key Capabilities

Full Content Management via MCP

The Contentful MCP server provides complete CRUD (Create, Read, Update, Delete) operations for entries and assets within Contentful, all accessible through a standardized MCP interface. This allows AI models to interact with and manage content stored in Contentful without needing to understand the complexities of the Contentful Management API directly. The server translates MCP requests into the appropriate Contentful API calls, enabling AI to create new content, retrieve existing content, modify content, and delete content as needed. This includes managing various content fields, handling different content types, and working with assets like images and documents.

For example, an AI model could use this functionality to automatically update product descriptions on a website based on real-time sales data or to generate new blog posts based on trending topics. The AI could create a new entry with the create_entry tool, populate its fields using the update_entry tool, and then publish it using the publish_entry tool. This simplifies content workflows and allows AI to be directly integrated into content management processes. Technically, this is achieved by mapping MCP tools like create_entry, update_entry, and delete_entry to corresponding Contentful Management API endpoints.
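The tool-to-endpoint mapping described above can be sketched as a small routing table. This is a minimal illustration, not the server's actual code: `ENTRY_ROUTES` and `build_request` are hypothetical names, and the sketch assumes raw Content Management API paths rather than whatever client library the server uses internally.

```python
# Hypothetical routing table: MCP tool name -> (HTTP method, CMA path template).
# Only tools named in the text are listed; the structure itself is an assumption.
BASE_URL = "https://api.contentful.com"
ENTRY_PATH = "/spaces/{space_id}/environments/{environment_id}/entries"

ENTRY_ROUTES = {
    "create_entry": ("POST", ENTRY_PATH),
    "update_entry": ("PUT", ENTRY_PATH + "/{entry_id}"),
    "delete_entry": ("DELETE", ENTRY_PATH + "/{entry_id}"),
    "publish_entry": ("PUT", ENTRY_PATH + "/{entry_id}/published"),
}


def build_request(tool: str, **params: str) -> tuple[str, str]:
    """Resolve an MCP tool call to the HTTP method and URL it would map to."""
    if tool not in ENTRY_ROUTES:
        raise ValueError(f"unknown tool: {tool}")
    method, template = ENTRY_ROUTES[tool]
    # Extra keyword arguments are ignored; missing ones raise KeyError.
    return method, BASE_URL + template.format(**params)


method, url = build_request(
    "publish_entry", space_id="my-space", environment_id="master", entry_id="post-1"
)
```

A table like this keeps the MCP-facing tool names separate from the HTTP details, so the AI model only ever sees the tool names.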

Bulk Content Operations

This feature enables AI models to perform batch operations on content, such as publishing, unpublishing, or validating multiple entries and assets simultaneously. Instead of processing each item individually, the AI can submit a single request to perform the desired action on a list of content items. This significantly improves efficiency and reduces the number of API calls required, especially when dealing with large content repositories. The bulk operations are performed asynchronously, allowing the AI to continue with other tasks while the operations are processed in the background. The server provides status updates on the progress of the bulk operations, including the number of successful and failed operations.

Consider a scenario where an AI model needs to update the publication status of hundreds of articles based on a new policy. Instead of making individual API calls for each article, the AI can use the bulk_publish or bulk_unpublish tools to process them in a single batch. This not only saves time but also reduces the load on the Contentful API. The implementation involves creating asynchronous tasks that process each content item in the batch and track the overall progress of the operation.
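The batch-with-progress pattern described above could look roughly like the following. It is a sketch of the general idea only: `run_bulk` and `fake_publish` are hypothetical, and the real server may delegate to Contentful's own bulk mechanisms instead of fanning out tasks like this.

```python
import asyncio
from typing import Awaitable, Callable


async def run_bulk(
    item_ids: list[str],
    action: Callable[[str], Awaitable[None]],
) -> dict:
    """Apply `action` to every ID concurrently and summarise the outcome."""
    results = await asyncio.gather(
        *(action(item_id) for item_id in item_ids),
        return_exceptions=True,  # collect failures instead of aborting the batch
    )
    failed = {
        item_id: str(result)
        for item_id, result in zip(item_ids, results)
        if isinstance(result, Exception)
    }
    return {
        "total": len(item_ids),
        "succeeded": len(item_ids) - len(failed),
        "failed": failed,
    }


async def fake_publish(entry_id: str) -> None:
    """Stand-in for a real publish call; rejects IDs starting with 'bad'."""
    await asyncio.sleep(0)
    if entry_id.startswith("bad"):
        raise ValueError(f"cannot publish {entry_id}")


summary = asyncio.run(run_bulk(["a1", "bad2", "a3"], fake_publish))
```

Returning the failed IDs alongside the counts lets the AI report exactly which items need attention rather than only that something went wrong.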

Smart Pagination for LLMs

To prevent context window overflow in Large Language Models (LLMs), the Contentful MCP server implements smart pagination for list operations like search_entries and list_assets. Instead of returning all results in a single response, the server limits the number of items per page to a maximum of 3. Each response includes metadata such as the total number of available items, the current page of items, the number of remaining items, and a "skip" value for retrieving the next page. This allows the LLM to efficiently handle large datasets without exceeding its context window limits. The server also includes a message prompting the LLM to offer retrieving more items, guiding the user through the pagination process.

For example, if an AI model needs to retrieve all assets in a Contentful space, it can use the list_assets tool. The server will return the first 3 assets along with pagination information. The AI can then use the "skip" value to request the next page of assets, and so on, until all assets have been retrieved. This approach ensures that the LLM can process the entire dataset without running into context window limitations. The pagination logic is implemented in the server-side handlers for list operations, ensuring that the responses are always within the specified limits.
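The pagination behavior described above can be illustrated with a short sketch. The field names (`total`, `items`, `remaining`, `skip`, `message`) and the `paginate` helper are assumptions chosen to mirror the description; the server's actual response shape may differ.

```python
from typing import Any, Optional

MAX_ITEMS_PER_PAGE = 3  # the page-size cap described in the text


def paginate(items: list[Any], skip: int = 0, limit: int = MAX_ITEMS_PER_PAGE) -> dict:
    """Return one page of `items` plus the metadata needed to fetch the next."""
    limit = max(1, min(limit, MAX_ITEMS_PER_PAGE))  # never exceed the cap
    skip = max(0, skip)
    page = items[skip : skip + limit]
    remaining = max(0, len(items) - skip - len(page))
    next_skip: Optional[int] = skip + len(page) if remaining else None
    response = {
        "total": len(items),
        "items": page,
        "remaining": remaining,
        "skip": next_skip,  # pass this back to request the following page
    }
    if remaining:
        response["message"] = (
            f"{remaining} more items are available. "
            "Ask the user whether to retrieve them."
        )
    return response


assets = ["a", "b", "c", "d", "e", "f", "g"]
first = paginate(assets)
second = paginate(assets, skip=first["skip"])
last = paginate(assets, skip=second["skip"])
```

With seven assets, the three calls return three, three, and one item; the final response has no `message` and a `skip` of `None`, which signals that the listing is complete.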

Space and Environment Management

The Contentful MCP server provides tools for working with Contentful spaces and environments, enabling AI models to inspect spaces and to create and delete environments. This allows AI to dynamically provision content environments based on specific needs, such as creating a new environment for testing content changes. The server exposes tools for listing available spaces, retrieving space details, listing environments within a space, creating new environments, and deleting environments.

For instance, an AI-powered deployment pipeline could use these tools to automatically create a new Contentful environment for each release, ensuring that content changes are isolated and tested before being deployed to production. The AI could use the create_environment tool to create a new environment, populate it with content from a staging environment, and then run automated tests to validate the content. The implementation involves mapping MCP tools like create_environment and delete_environment to the corresponding Contentful Management API endpoints for space and environment management.
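A per-release environment workflow like the one above might be wrapped as follows. This is a sketch under stated assumptions: `call_tool` stands in for whatever MCP client function invokes a tool, the argument keys (`spaceId`, `environmentId`, `name`) are guesses rather than the server's documented schema, and deleting the environment afterwards is one possible cleanup policy, not something the server does on its own.

```python
from contextlib import contextmanager
from typing import Any, Callable, Iterator

# Hypothetical signature for "invoke an MCP tool with these arguments".
ToolCaller = Callable[[str, dict], Any]


@contextmanager
def temporary_environment(
    call_tool: ToolCaller, space_id: str, environment_id: str
) -> Iterator[str]:
    """Create an environment, yield its ID, and delete it when the block exits."""
    call_tool(
        "create_environment",
        {"spaceId": space_id, "environmentId": environment_id, "name": environment_id},
    )
    try:
        yield environment_id
    finally:
        # Runs even if the tests inside the block raise.
        call_tool(
            "delete_environment",
            {"spaceId": space_id, "environmentId": environment_id},
        )


# Usage with a recording stub in place of a real MCP client.
calls: list[str] = []


def recording_caller(tool: str, args: dict) -> None:
    calls.append(tool)


with temporary_environment(recording_caller, "my-space", "release-42") as env_id:
    calls.append(f"run tests in {env_id}")
```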

App Identity Authentication

Instead of relying on a Content Management API token, the Contentful MCP server supports authentication using Contentful App Identity. This allows AI models to securely access and manage content within a specific Contentful space and environment using temporary App Tokens. To use this authentication method, the server must be given the App ID, the private key associated with the app, and the Space ID and Environment ID where the app is installed. The server then requests a temporary App Token from Contentful and uses it to perform content operations.

This approach is particularly useful when using the MCP server in backend systems or chat agents, as it eliminates the need to store and manage long-lived Management API tokens. For example, a chat agent could use App Identity to securely update content in a Contentful space based on user input, without requiring direct access to the Management API token. The implementation involves using the Contentful App Framework to generate temporary App Tokens based on the provided credentials.
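The token exchange described above can be sketched as building a short-lived signed JWT and posting it to Contentful. Treat this as an outline only: `build_app_token_request` is a hypothetical helper, signing is delegated to a caller-supplied `sign` function because RS256 needs a cryptography library, and the claim set and endpoint path reflect one reading of Contentful's App Identity documentation and should be verified against it.

```python
import base64
import json
import time
from typing import Callable, Optional


def b64url(data: bytes) -> str:
    """Base64url-encode without padding, as JWTs require."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def build_app_token_request(
    app_id: str,
    space_id: str,
    environment_id: str,
    sign: Callable[[bytes], bytes],  # assumed: RS256 signature with the app's private key
    now: Optional[float] = None,
    ttl_seconds: int = 300,
) -> dict:
    """Describe the HTTP request that would exchange a signed JWT for an App Token."""
    issued_at = int(time.time() if now is None else now)
    header = {"alg": "RS256", "typ": "JWT"}
    claims = {"iss": app_id, "iat": issued_at, "exp": issued_at + ttl_seconds}
    signing_input = ".".join(
        b64url(json.dumps(part, separators=(",", ":")).encode("utf-8"))
        for part in (header, claims)
    )
    jwt = signing_input + "." + b64url(sign(signing_input.encode("ascii")))
    return {
        "method": "POST",
        "url": (
            "https://api.contentful.com"
            f"/spaces/{space_id}/environments/{environment_id}"
            f"/app_installations/{app_id}/access_tokens"
        ),
        "headers": {"Authorization": f"Bearer {jwt}"},
    }


# Placeholder signer for illustration only; a real one must sign with the private key.
request = build_app_token_request(
    "my-app", "my-space", "master", sign=lambda data: b"signature", now=1000
)
```

Because the JWT expires after a few minutes and the resulting App Token is itself temporary, no long-lived credential other than the private key has to be stored.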