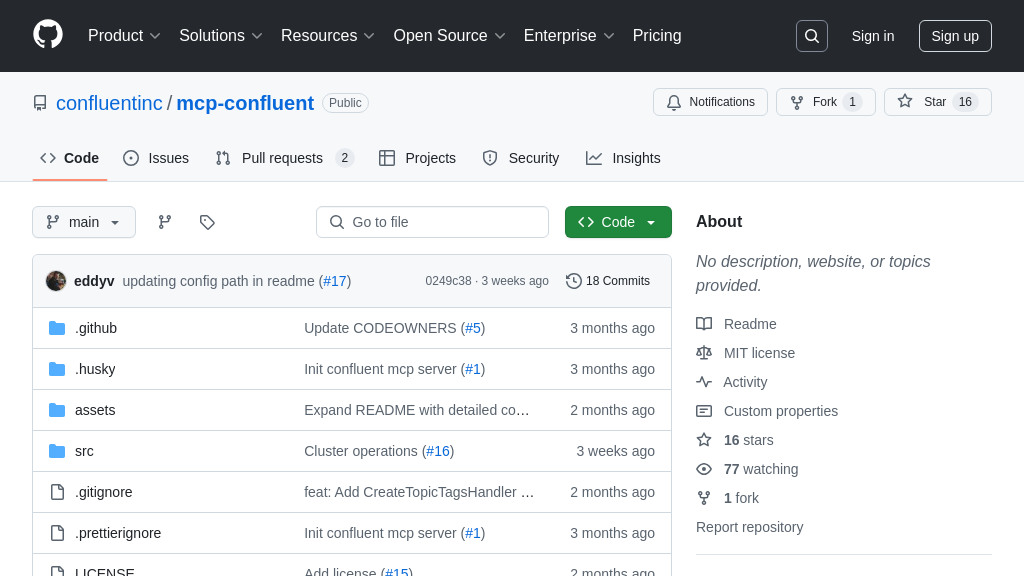

mcp-confluent

mcp-confluent: An MCP (Model Context Protocol) server for integrating AI models with Confluent Kafka and Confluent Cloud.

mcp-confluent Solution Overview

mcp-confluent is an MCP server that lets AI models interact with Confluent Kafka and the Confluent Cloud REST APIs. It acts as a bridge, enabling AI applications to work with the real-time data streams and event-processing capabilities of Confluent's ecosystem, and it simplifies integration by giving AI models a standardized interface for accessing and manipulating Kafka data.

Key features include configuration through environment variables, which keeps deployment and management flexible. Developers can use the server with MCP clients such as Claude Desktop or Goose CLI by configuring the client to start or connect to the server. Because it hides the details of Kafka and Confluent Cloud API interactions, mcp-confluent lets developers focus on building AI applications that react to real-time data. The server is written in TypeScript and can be extended with new tools.
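As a rough sketch of what adding a tool can involve, the TypeScript below models a tool as a name, a description, a JSON Schema for its arguments, and an async handler that returns MCP-style text content. The ToolDefinition interface, the registerTool function, and the echo-topic-prefix tool are hypothetical illustrations, not mcp-confluent's actual classes.

```typescript
// Hypothetical tool contract; not mcp-confluent's actual interface.
interface TextContent {
  type: "text";
  text: string;
}

interface ToolResult {
  content: TextContent[];
  isError?: boolean;
}

interface ToolDefinition {
  name: string;
  description: string;
  // JSON Schema describing the arguments the model must supply.
  inputSchema: Record<string, unknown>;
  handle(args: Record<string, unknown>): Promise<ToolResult>;
}

// Hypothetical registry: adding a tool means registering one more definition.
const registry = new Map<string, ToolDefinition>();

function registerTool(tool: ToolDefinition): void {
  if (registry.has(tool.name)) {
    throw new Error(`Tool already registered: ${tool.name}`);
  }
  registry.set(tool.name, tool);
}

// Illustrative stub tool; a real tool would call Kafka or a Confluent Cloud API.
registerTool({
  name: "echo-topic-prefix",
  description: "Returns the topic prefix it was given (illustrative stub).",
  inputSchema: {
    type: "object",
    properties: { prefix: { type: "string" } },
    required: ["prefix"],
  },
  async handle(args) {
    const prefix = String(args["prefix"] ?? "");
    return { content: [{ type: "text", text: `prefix=${prefix}` }] };
  },
});
```

Keeping the input schema next to the handler is one way to let an MCP client show the model exactly which arguments a tool accepts.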

mcp-confluent Key Capabilities

Kafka Interaction via MCP

The mcp-confluent server acts as an intermediary that lets AI models reach Confluent Kafka and the Confluent Cloud REST APIs through the standardized MCP interface. The server handles authentication, request formatting, and response parsing for every call to Confluent Cloud, so AI developers do not need to write custom Kafka clients or manage low-level API details, and models can concentrate on data processing and analysis. Configuration comes from environment variables, which makes it straightforward to point the server at different Confluent Cloud environments.

Use Case: An AI model needs to consume real-time data from a Kafka topic for fraud detection. Instead of directly connecting to Kafka, the model interacts with the mcp-confluent server via MCP. The server fetches the data from Kafka and presents it to the model in a standardized format, enabling the model to perform its analysis without needing to understand Kafka's specific protocols.
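The request-formatting and response-parsing role described above might look roughly like the handler sketched below, written for the fraud-detection case. It assumes a hypothetical FetchMessages function that wraps the real Kafka consumer; the ConsumedRecord shape, the default limit of 10, and the error wording are illustrative assumptions. Invalid arguments and client failures are returned as results flagged with isError, following the MCP convention for tool-level errors, instead of being thrown at the client.

```typescript
// Hypothetical record shape produced by the underlying Kafka client wrapper.
interface ConsumedRecord {
  topic: string;
  partition: number;
  offset: string;
  key: string | null;
  value: string | null;
}

// Hypothetical abstraction over the real consumer, injected so it can be stubbed.
type FetchMessages = (topic: string, maxMessages: number) => Promise<ConsumedRecord[]>;

// MCP-style tool result: text content plus an optional error flag.
interface ToolResult {
  content: { type: "text"; text: string }[];
  isError?: boolean;
}

function errorResult(text: string): ToolResult {
  return { content: [{ type: "text", text }], isError: true };
}

// Validates the model's arguments, calls Kafka, and returns plain JSON text.
async function handleConsumeMessages(
  args: { topic?: unknown; maxMessages?: unknown },
  fetchMessages: FetchMessages,
): Promise<ToolResult> {
  const topic = args.topic;
  if (typeof topic !== "string" || topic.length === 0) {
    return errorResult("Error: 'topic' must be a non-empty string");
  }
  const requested = args.maxMessages;
  // Assumed default of 10 messages when no valid limit is given.
  const max =
    typeof requested === "number" && Number.isFinite(requested) && requested >= 1
      ? Math.floor(requested)
      : 10;
  try {
    const records = await fetchMessages(topic, max);
    // Keep only the fields the model needs, in a stable shape.
    const simplified = records.map((r) => ({
      partition: r.partition,
      offset: r.offset,
      key: r.key,
      value: r.value,
    }));
    return { content: [{ type: "text", text: JSON.stringify(simplified) }] };
  } catch (err) {
    const message = err instanceof Error ? err.message : String(err);
    return errorResult(`Error consuming from '${topic}': ${message}`);
  }
}
```

Injecting FetchMessages keeps the handler testable without a broker: a test can pass a stub that returns fixed records.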

Technical Detail: The server uses the Confluent Kafka client libraries to interact with Kafka. It authenticates using API keys and secrets defined in the .env file.
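A minimal sketch of turning those .env values into an authenticated client configuration follows. It uses librdkafka-style property names, which librdkafka-based Confluent clients accept, and the standard Confluent Cloud scheme of SASL_SSL with the PLAIN mechanism, where the API key is the username and the secret is the password. KAFKA_API_KEY comes from this document; BOOTSTRAP_SERVERS and KAFKA_API_SECRET are assumed variable names, and kafkaConfigFromEnv is hypothetical.

```typescript
// librdkafka-style configuration keys for a Confluent Cloud connection.
interface KafkaClientConfig {
  "bootstrap.servers": string;
  "security.protocol": "SASL_SSL";
  "sasl.mechanisms": "PLAIN";
  "sasl.username": string;
  "sasl.password": string;
}

type Env = Record<string, string | undefined>;

// Returns a trimmed value or fails fast with a clear message.
function requireEnv(env: Env, name: string): string {
  const value = env[name];
  if (value === undefined || value.trim() === "") {
    throw new Error(`Missing required environment variable: ${name}`);
  }
  return value.trim();
}

// Hypothetical helper; BOOTSTRAP_SERVERS and KAFKA_API_SECRET are assumed names.
function kafkaConfigFromEnv(env: Env): KafkaClientConfig {
  return {
    "bootstrap.servers": requireEnv(env, "BOOTSTRAP_SERVERS"),
    "security.protocol": "SASL_SSL",
    "sasl.mechanisms": "PLAIN",
    // Confluent Cloud: API key as SASL username, API secret as SASL password.
    "sasl.username": requireEnv(env, "KAFKA_API_KEY"),
    "sasl.password": requireEnv(env, "KAFKA_API_SECRET"),
  };
}
```

In the server this would be called with process.env after the .env file has been loaded (for example with the dotenv package), so a missing variable stops the server at startup rather than on the first tool call.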

Dynamic Tool Configuration

mcp-confluent lets administrators enable or disable individual tools through the enabledTools set in index.ts, which controls which functionalities are exposed to AI models through the MCP interface. Enabling only the tools that are needed reduces the attack surface and avoids instantiating tools that will never be used. This is particularly useful in multi-tenant environments where different AI models require different sets of functionalities. The tool configuration is read once at server startup, so a change to the enabled set takes effect the next time the server is started.

Use Case: In a data analytics platform, some AI models need access to Schema Registry functionality while others only require data streaming capabilities. The administrator can run one server instance with the Schema Registry tool enabled for the models that need it and another with only the streaming tools for the rest, improving security and resource utilization.

Technical Detail: The ToolFactory class uses a map of tool names to handler classes. The enabledTools set determines which tools are instantiated and made available through the MCP interface.
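The factory pattern described here can be sketched as follows. The three tool names, the ToolHandler interface, and the handler classes are hypothetical stand-ins; only the idea of a name-to-class map filtered by an enabledTools set comes from this section. Typing the map as Record<ToolName, new () => ToolHandler> makes the compiler reject any tool name that has no handler class.

```typescript
// Hypothetical tool names; the real server defines its own list.
type ToolName = "list-topics" | "produce-message" | "get-schema";

// Minimal handler contract (hypothetical).
interface ToolHandler {
  readonly name: ToolName;
  describe(): string;
}

class ListTopicsHandler implements ToolHandler {
  readonly name = "list-topics" as const;
  describe() { return "List Kafka topics"; }
}

class ProduceMessageHandler implements ToolHandler {
  readonly name = "produce-message" as const;
  describe() { return "Produce a message to a topic"; }
}

class GetSchemaHandler implements ToolHandler {
  readonly name = "get-schema" as const;
  describe() { return "Fetch a schema from Schema Registry"; }
}

class ToolFactory {
  // Map from tool name to handler class (constructor).
  private static readonly handlers: Record<ToolName, new () => ToolHandler> = {
    "list-topics": ListTopicsHandler,
    "produce-message": ProduceMessageHandler,
    "get-schema": GetSchemaHandler,
  };

  // Instantiates only the enabled tools; disabled ones are never created.
  static createEnabled(enabledTools: ReadonlySet<ToolName>): ToolHandler[] {
    const result: ToolHandler[] = [];
    for (const name of enabledTools) {
      result.push(new ToolFactory.handlers[name]());
    }
    return result;
  }
}

// Read once at startup; editing this set takes effect after a restart.
const enabledTools: ReadonlySet<ToolName> = new Set<ToolName>(["list-topics", "produce-message"]);
const activeTools = ToolFactory.createEnabled(enabledTools);
```

If only activeTools are registered with the MCP server, a disabled tool such as get-schema is never instantiated, so the client has nothing to list or call for it.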

Simplified Confluent Cloud Authentication

The server simplifies authentication for Confluent Cloud resources. Because the authentication logic lives in the mcp-confluent server, AI models never have to handle API keys, secrets, or other credentials directly. The server reads these credentials from its environment and uses them for every interaction with Confluent Cloud, giving AI models a single point of authentication. Keeping credentials on the server side improves security and simplifies deploying and managing AI-powered applications that rely on Confluent Cloud services.

Use Case: An AI model needs to access a Kafka topic and a Schema Registry in Confluent Cloud. Instead of managing separate API keys and secrets for each service, the model relies on the mcp-confluent server to handle authentication. The server uses the credentials stored in the .env file to authenticate with both Kafka and the Schema Registry, providing seamless access to the required resources.

Technical Detail: The server reads the credentials from environment variables (e.g., KAFKA_API_KEY, SCHEMA_REGISTRY_API_KEY) and uses them to create authenticated clients for interacting with Confluent Cloud services.
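For Schema Registry, Confluent Cloud authenticates REST calls with HTTP Basic authentication, using the API key and secret as the username and password. The sketch below builds the base URL and headers from the environment. SCHEMA_REGISTRY_API_KEY comes from this document; SCHEMA_REGISTRY_ENDPOINT, SCHEMA_REGISTRY_API_SECRET, and schemaRegistryAuthFromEnv are assumptions for illustration. It uses Node's Buffer for the base64 step.

```typescript
type Env = Record<string, string | undefined>;

interface SchemaRegistryAuth {
  baseUrl: string;
  headers: { Authorization: string; Accept: string };
}

// Hypothetical helper: base URL plus HTTP Basic headers for the Schema Registry REST API.
// SCHEMA_REGISTRY_ENDPOINT and SCHEMA_REGISTRY_API_SECRET are assumed names.
function schemaRegistryAuthFromEnv(env: Env): SchemaRegistryAuth {
  const endpoint = env["SCHEMA_REGISTRY_ENDPOINT"];
  const key = env["SCHEMA_REGISTRY_API_KEY"];
  const secret = env["SCHEMA_REGISTRY_API_SECRET"];
  if (!endpoint || !key || !secret) {
    throw new Error(
      "SCHEMA_REGISTRY_ENDPOINT, SCHEMA_REGISTRY_API_KEY and SCHEMA_REGISTRY_API_SECRET must be set",
    );
  }
  // HTTP Basic auth: base64("<api key>:<api secret>").
  const token = Buffer.from(`${key}:${secret}`, "utf8").toString("base64");
  return {
    // Drop trailing slashes so paths like "/subjects" can be appended safely.
    baseUrl: endpoint.replace(/\/+$/, ""),
    headers: {
      Authorization: `Basic ${token}`,
      Accept: "application/vnd.schemaregistry.v1+json",
    },
  };
}
```

A caller could then list subjects with fetch(`${auth.baseUrl}/subjects`, { headers: auth.headers }). The model only sees the results of such calls, never the key or secret.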