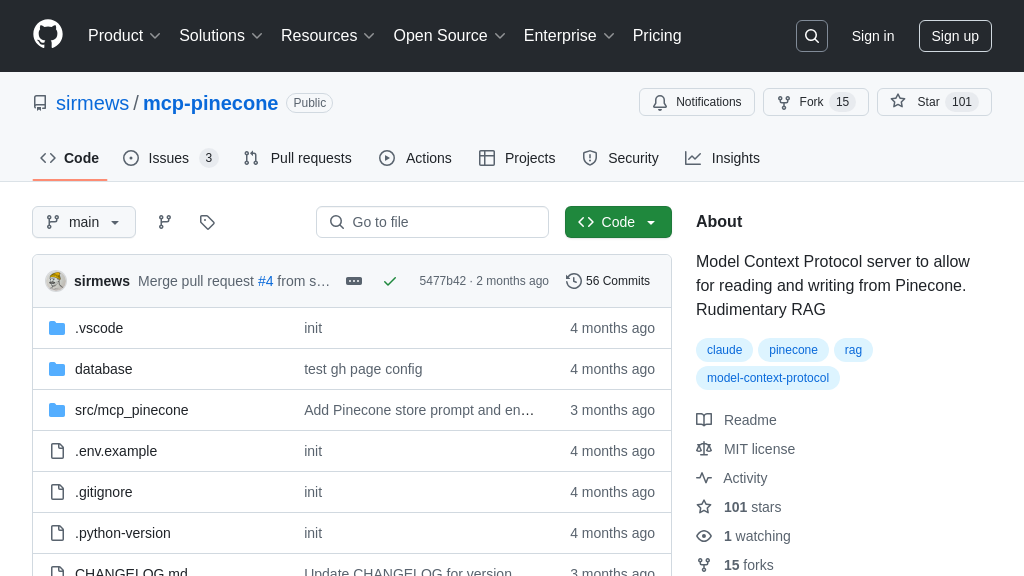

mcp-pinecone

mcp-pinecone: An MCP server enabling Claude Desktop to read and write to Pinecone, enhancing AI models with RAG.

mcp-pinecone Solution Overview

mcp-pinecone is a Model Context Protocol server that empowers AI models like Claude to interact with Pinecone, a vector database. It provides resources for reading and writing to a Pinecone index, enabling Retrieval-Augmented Generation (RAG) capabilities. Key tools include semantic-search for finding relevant records, read-document for retrieving specific documents, list-documents for index overview, pinecone-stats for index insights, and process-document for efficient document chunking, embedding, and upserting.
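As a rough illustration of how an MCP client might invoke one of these tools, the sketch below builds a JSON-RPC 2.0 request for MCP's tools/call method. The helper name build_tool_call and the argument names query and top_k are assumptions made for illustration; the server's actual tool schema may differ.

```python
import json


def build_tool_call(request_id, tool_name, arguments):
    """Build a JSON-RPC 2.0 request for the MCP tools/call method."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


# "query" and "top_k" are hypothetical argument names, not confirmed by the source.
request = build_tool_call(
    1,
    "semantic-search",
    {"query": "vector database pricing", "top_k": 5},
)
print(json.dumps(request, indent=2))
```

In practice an MCP client such as Claude Desktop constructs and sends this message itself; the sketch only shows the general shape of the exchange.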

This server streamlines the integration of Pinecone with AI models by handling chunking, embedding, and upserting on the developer's behalf. With mcp-pinecone, developers can quickly implement semantic search and knowledge retrieval, enhancing the AI's ability to access and reason about external information. It uses Pinecone's inference API to generate embeddings and a token-based chunker to split documents, which simplifies development. Installation is available via Smithery or uv, making it easy to deploy and configure within the MCP ecosystem.

mcp-pinecone Key Capabilities

Semantic Search in Pinecone

The semantic-search tool allows AI models to perform similarity searches within a Pinecone index. It leverages the vector embeddings stored in Pinecone to find documents that are semantically similar to a given query. When a query is submitted, the tool generates an embedding for the query text and then searches the Pinecone index for the closest matching embeddings. The results are returned as a list of documents ranked by similarity score. This enables AI models to retrieve relevant information from a large corpus of documents based on meaning rather than keyword matching.

For example, a Claude user could ask, "What are the key differences between Llama 2 and GPT-4?". The semantic-search tool would embed this query and search the Pinecone index for documents discussing these models. The AI model can then use the retrieved documents to formulate a comprehensive answer for the user. This is particularly useful for RAG applications where the AI model needs to access up-to-date information stored in a vector database.

Technically, this involves using Pinecone's API to perform a vector similarity search. The query is embedded using Pinecone's inference API, and the resulting vector is used to query the Pinecone index.
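The ranking step described above can be sketched in plain Python. This is a minimal illustration that assumes a cosine similarity metric and uses tiny hand-written vectors in place of real embeddings; it does not call Pinecone, and the names cosine_similarity and rank_by_similarity are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine similarity of two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat a zero vector as dissimilar to everything
    return dot / (norm_a * norm_b)


def rank_by_similarity(query_vector, records, top_k=3):
    """Score every stored vector against the query and keep the top_k best."""
    scored = [
        (record_id, cosine_similarity(query_vector, vector))
        for record_id, vector in records.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Toy 3-dimensional "embeddings"; real embeddings have far more dimensions.
records = {
    "doc-a": [1.0, 0.0, 0.0],
    "doc-b": [0.9, 0.1, 0.0],
    "doc-c": [0.0, 1.0, 0.0],
}
query_vector = [1.0, 0.0, 0.0]
results = rank_by_similarity(query_vector, records, top_k=2)
print(results)
```

A vector database performs the same kind of scoring with approximate nearest-neighbor indexes rather than a full scan, which is what makes it practical at scale.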

Document Processing and Upserting

The process-document tool automates preparing and storing documents in a Pinecone index. It takes a document as input, chunks it into smaller segments, generates an embedding for each chunk, and upserts the resulting vectors into the index. By handling chunking, embedding, and indexing in one step, it simplifies building a knowledge base for AI models. The chunking is token-based, and embeddings are generated via Pinecone's inference API.

Consider a scenario where a company wants to enable its AI model to answer questions about its internal documentation. The process-document tool can be used to automatically ingest and index all the company's documents into a Pinecone index. Once the documents are indexed, the AI model can use the semantic-search tool to retrieve relevant information and answer user questions. This eliminates the need for manual data preparation and indexing, saving time and resources.

The tool uses a token-based chunker (inspired by LangChain) to divide the document into manageable segments. Each chunk is then embedded using Pinecone's inference API, and the resulting vectors are upserted into the specified Pinecone index.
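To make the chunking step concrete, here is a minimal sketch of a token-based chunker. It is not the server's implementation: whitespace splitting stands in for a real tokenizer, the overlap parameter is an assumption, and the record ID scheme (document ID plus chunk index) is hypothetical. The embedding and upsert steps are omitted.

```python
def chunk_tokens(tokens, chunk_size, overlap=0):
    """Split a token list into windows of chunk_size, sharing `overlap` tokens."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must satisfy 0 <= overlap < chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + chunk_size])
        if start + chunk_size >= len(tokens):
            break  # the last window already reached the end of the document
    return chunks


def build_records(document_id, text, chunk_size=5, overlap=1):
    """Turn a document into chunk records that are ready to be embedded."""
    tokens = text.split()  # stand-in for a real tokenizer
    return [
        {"id": f"{document_id}#chunk{i}", "text": " ".join(chunk)}
        for i, chunk in enumerate(chunk_tokens(tokens, chunk_size, overlap))
    ]


records = build_records(
    "doc-1",
    "one two three four five six seven eight nine",
    chunk_size=4,
    overlap=1,
)
for record in records:
    print(record)
```

Overlapping windows are a common choice because they reduce the chance that a sentence split across a chunk boundary loses its context.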

Pinecone Index Statistics

The pinecone-stats tool provides insights into the state of the Pinecone index. It retrieves key metrics such as the number of records, the dimensionality of the vectors, and the available namespaces. This information is useful for monitoring the size and growth of the index and for diagnosing indexing problems. By understanding the characteristics of the index, developers can make informed decisions about indexing strategies, embedding models, and other parameters.

For instance, if the number of records in the index is growing rapidly, the developer might need to consider scaling up the Pinecone infrastructure. Similarly, if query performance is degrading, the developer might need to re-evaluate the indexing strategy or the choice of embedding model. Note that an index's vector dimension is fixed when the index is created, so changing it means creating a new index and re-embedding the data. The pinecone-stats tool supplies the baseline figures that inform these decisions.

This tool queries the Pinecone API directly for index statistics and returns them to the user in a readable format.
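The formatting step might look something like the sketch below. The field names (dimension, total_vector_count, namespaces, vector_count) are modeled on the general shape of an index-statistics response and should be treated as assumptions, as should the function name format_index_stats and the sample values.

```python
def format_index_stats(stats):
    """Render an index-statistics dictionary as a short, readable summary."""
    lines = [
        f"Total records: {stats['total_vector_count']}",
        f"Dimension: {stats['dimension']}",
        "Namespaces:",
    ]
    for name, info in sorted(stats["namespaces"].items()):
        label = name if name else "(default)"  # the empty name is the default namespace
        lines.append(f"  {label}: {info['vector_count']} records")
    return "\n".join(lines)


# Sample data invented for illustration; not taken from a real index.
sample_stats = {
    "dimension": 1024,
    "total_vector_count": 150,
    "namespaces": {
        "": {"vector_count": 100},
        "docs": {"vector_count": 50},
    },
}
summary = format_index_stats(sample_stats)
print(summary)
```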

Read and List Documents

The read-document and list-documents tools provide basic functionality for accessing and managing documents within the Pinecone index. read-document retrieves a specific document from the index given its ID, while list-documents returns a list of all documents currently stored. These tools are essential for managing and verifying the data stored in the Pinecone index.

For example, a developer might use list-documents to verify that all the expected documents have been successfully indexed. They might then use read-document to inspect the content of a specific document and ensure that it has been processed correctly. These tools are particularly useful for debugging and troubleshooting indexing issues.

read-document uses the Pinecone API to fetch a specific vector by its ID. list-documents retrieves a list of all IDs in the index, potentially paginated for large indexes.
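Paginated listing can be sketched as a loop that follows a continuation token until none is returned. The fetch_page callable below is a hypothetical stand-in for whatever API call returns one page of IDs; the in-memory fake exists only so the example runs without a Pinecone index.

```python
def list_all_ids(fetch_page):
    """Collect every ID by following continuation tokens.

    fetch_page(token) must return (ids, next_token), where next_token is
    None once the final page has been delivered.
    """
    all_ids = []
    token = None
    while True:
        ids, token = fetch_page(token)
        all_ids.extend(ids)
        if token is None:
            break
    return all_ids


def make_fake_fetch_page(ids, page_size):
    """Build an in-memory fetch_page for demonstration purposes only."""
    def fetch_page(token):
        start = 0 if token is None else int(token)
        end = start + page_size
        next_token = str(end) if end < len(ids) else None
        return ids[start:end], next_token
    return fetch_page


fake_ids = [f"doc-{i}" for i in range(7)]
collected = list_all_ids(make_fake_fetch_page(fake_ids, page_size=3))
print(collected)
```

Keeping the page-fetching logic behind a single callable makes the loop easy to test and leaves room to add rate limiting or retries later.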

Integration Advantages

mcp-pinecone simplifies integrating the Pinecone vector database with AI models in the MCP ecosystem. Because it exposes Pinecone through MCP's standardized tool interface, developers do not need to write custom integration code for each AI model, which promotes code reuse, reduces development time, and improves the maintainability of AI applications. Building on MCP also means the integration follows an open, standardized protocol. Furthermore, the mcp-pinecone server can be deployed and managed using standard MCP tools and infrastructure, which makes it easier to scale the solution as AI applications grow.