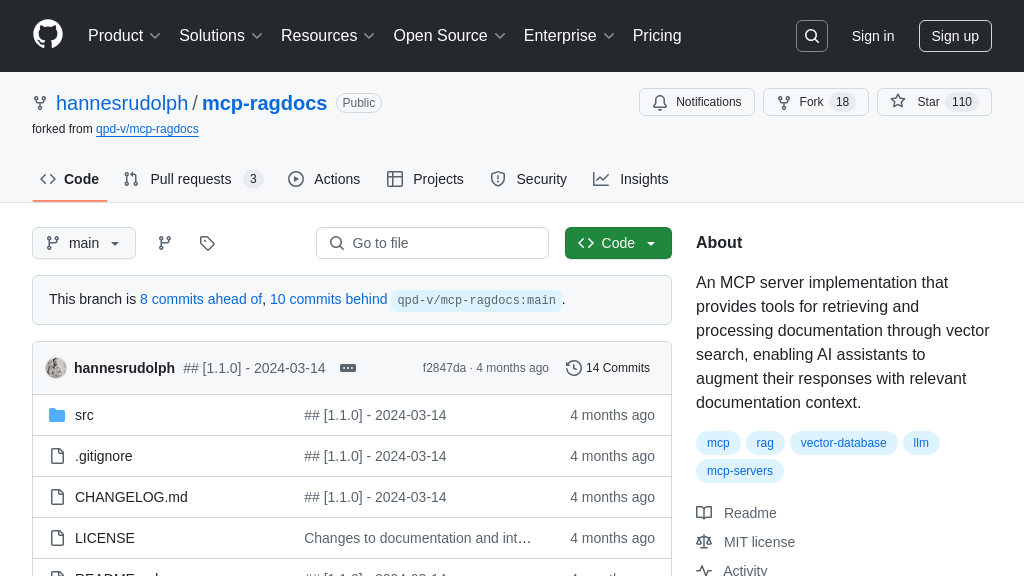

mcp-ragdocs

Enhance AI with documentation using mcp-ragdocs, an MCP Server for vector-based semantic search and context augmentation.

mcp-ragdocs Solution Overview

mcp-ragdocs is a Model Context Protocol (MCP) server that gives AI models access to documentation through vector search. It addresses the need for AI assistants that can draw contextually relevant information from large documentation sets. The server supports semantic search across multiple documentation sources and automatically processes and indexes their content, so an LLM can be supplied with relevant context at query time.

Key features include tools for searching documentation with natural language queries (search_documentation), listing available sources (list_sources), and managing the documentation processing queue. By combining vector embeddings with a vector database, mcp-ragdocs lets AI models ground their responses in the indexed documentation rather than relying on training data alone. This makes it useful for developers building documentation-aware AI assistants and context-aware tooling. It plugs into the standard MCP client-server architecture and uses OpenAI for embeddings and Qdrant for vector storage.

mcp-ragdocs Key Capabilities

Vector-Based Documentation Search

The core of mcp-ragdocs lies in its ability to perform vector-based searches across a collection of documentation. This functionality allows AI models to retrieve relevant information from a knowledge base using natural language queries. When a query is submitted, the system converts it into a vector embedding using the OpenAI API. This embedding is then compared to pre-computed vector embeddings of the documentation content stored in a Qdrant vector database. The system returns the documentation excerpts that are most semantically similar to the query, ranked by relevance. This enables AI models to access and utilize information that is contextually appropriate, even if the exact keywords are not present in the query.
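The ranking step described above can be illustrated with a minimal sketch. It assumes cosine similarity as the metric (a common choice, but the metric the server's Qdrant collection actually uses is not stated here) and uses hand-written 3-dimensional vectors in place of real OpenAI embeddings. The helper names `cosine_similarity` and `rank_excerpts` are hypothetical and are not part of mcp-ragdocs.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction, so treat it as unrelated
    return dot / (norm_a * norm_b)


def rank_excerpts(query_vector, indexed, limit=5):
    """Score every (text, vector) pair against the query and keep the best ones."""
    scored = [(cosine_similarity(query_vector, vec), text) for text, vec in indexed]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:limit]


# Toy vectors standing in for real embeddings (assumption: real ones have
# hundreds or thousands of dimensions and come from an embedding model).
indexed = [
    ("Authentication errors and status codes", [0.9, 0.1, 0.0]),
    ("Installing the CLI", [0.0, 0.2, 0.9]),
    ("Refreshing expired tokens", [0.7, 0.6, 0.1]),
]

results = rank_excerpts([1.0, 0.2, 0.0], indexed, limit=2)
for score, text in results:
    print(f"{score:.3f}  {text}")
```

A vector database performs the same comparison with an index built for approximate nearest-neighbour search, so it does not need to score every stored vector the way this brute-force loop does.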

For example, a developer using an AI assistant could ask, "How do I handle authentication errors in this API?". The mcp-ragdocs server would search through the API documentation and return relevant sections describing error codes, authentication procedures, and troubleshooting steps. This allows the AI assistant to provide a more accurate and helpful response, grounded in the official documentation.

Technically, this feature relies on the OpenAI API for generating embeddings and Qdrant for efficient vector storage and similarity search. The search_documentation tool exposes this functionality to MCP clients.
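As a rough illustration of how an MCP client might invoke the tool, the sketch below builds a JSON-RPC 2.0 `tools/call` request, which is the message shape MCP uses for tool invocation. The argument names `query` and `limit` are assumptions made for this example; check the server's published tool schema for the actual parameters. The helper `build_tool_call` is hypothetical.

```python
import json


def build_tool_call(request_id, tool_name, arguments):
    """Build an MCP tool invocation as a JSON-RPC 2.0 request object."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


request = build_tool_call(
    1,
    "search_documentation",
    # Assumed argument names; the real schema may differ.
    {"query": "How do I handle authentication errors in this API?", "limit": 5},
)

payload = json.dumps(request, indent=2)
print(payload)
```

In practice an MCP client library handles this framing, along with the transport and the initialization handshake, so application code rarely builds the message by hand.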

Automated Documentation Processing

mcp-ragdocs automates the ingestion and indexing of documentation from various sources, which removes the need for manual data preparation. The extract_urls tool collects URLs from web pages so they can be queued; the system then fetches each queued page, processes its text, and generates vector embeddings for it. These embeddings are stored in the Qdrant vector database, where they become available for semantic search. The processing pipeline includes error handling and retry logic so that a transient failure does not leave documentation unindexed.

Consider a scenario where a company updates its internal documentation website. Using mcp-ragdocs, the new documentation can be automatically added to the knowledge base by queuing the URLs of the updated pages. The system will then process these pages in the background, ensuring that the AI models always have access to the latest information. The list_queue, run_queue, and clear_queue tools provide control over the processing pipeline.

This feature leverages web scraping techniques, natural language processing (NLP) for text extraction, and the OpenAI API for generating embeddings. The extracted URLs are added to a queue and processed sequentially.
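Sequential processing with retries can be sketched as follows. This is an illustration of the general pattern under stated assumptions, not the server's actual pipeline: the retry limit of three, the `process_queue` helper, and the `fake_process` stand-in (which replaces real fetching, text extraction, and embedding) are all hypothetical.

```python
from collections import deque


def process_queue(queue, process, max_attempts=3):
    """Process URLs one at a time, retrying each up to max_attempts times."""
    indexed, failed = [], []
    while queue:
        url = queue.popleft()
        for attempt in range(1, max_attempts + 1):
            try:
                process(url)
                indexed.append(url)
                break  # success, move on to the next URL
            except Exception as exc:
                if attempt == max_attempts:
                    failed.append((url, str(exc)))  # give up and record why
    return indexed, failed


attempts = {}


def fake_process(url):
    """Stand-in for fetch + extract + embed; fails on purpose for the demo."""
    attempts[url] = attempts.get(url, 0) + 1
    if url.endswith("/flaky") and attempts[url] < 2:
        raise RuntimeError("temporary network error")
    if url.endswith("/broken"):
        raise RuntimeError("page not found")


queue = deque([
    "https://docs.example.com/intro",
    "https://docs.example.com/flaky",
    "https://docs.example.com/broken",
])

indexed, failed = process_queue(queue, fake_process)
print("indexed:", indexed)
print("failed:", failed)
```

A production pipeline would typically also wait between attempts, for example with exponential backoff, and distinguish retryable errors from permanent ones.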

Real-Time Context Augmentation

mcp-ragdocs provides real-time context augmentation for Large Language Models (LLMs). When an AI model generates a response, it can retrieve relevant documentation excerpts at query time and incorporate them into its answer. The response is therefore grounded in the currently indexed documentation rather than only in what the model learned during training. Retrieval happens behind the scenes, so the user does not need to run the search themselves.

Imagine an AI chatbot assisting users with a software product. When a user asks a question about a specific feature, the chatbot can use mcp-ragdocs to search the product documentation for relevant information. The chatbot can then incorporate this information into its response, providing the user with a comprehensive and accurate answer. This eliminates the need for the user to manually search through the documentation, saving them time and effort.

This feature relies on the search_documentation tool to retrieve relevant excerpts and the LLM's ability to incorporate this information into its response. The MCP client is responsible for orchestrating the interaction between the LLM and the mcp-ragdocs server.
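One way a client could fold retrieved excerpts into the model's input is sketched below. The excerpt fields (`title`, `url`, `text`), the prompt wording, and the character budget are assumptions chosen for illustration; the actual result format returned by search_documentation may differ, and `build_augmented_prompt` is a hypothetical helper.

```python
def build_augmented_prompt(question, excerpts, max_chars=2000):
    """Combine retrieved excerpts and the user's question into one prompt."""
    context_parts = []
    used = 0
    for i, excerpt in enumerate(excerpts, start=1):
        entry = f"[{i}] {excerpt['title']} ({excerpt['url']})\n{excerpt['text']}"
        if used + len(entry) > max_chars:
            break  # stop once the context budget is spent
        context_parts.append(entry)
        used += len(entry)
    context = "\n\n".join(context_parts)
    return (
        "Answer the question using only the documentation excerpts below.\n\n"
        f"Documentation:\n{context}\n\n"
        f"Question: {question}"
    )


# Hypothetical search results, already ranked by relevance.
excerpts = [
    {
        "title": "Authentication errors",
        "url": "https://docs.example.com/auth-errors",
        "text": "A 401 response means the token is missing or expired.",
    },
    {
        "title": "Refreshing tokens",
        "url": "https://docs.example.com/refresh",
        "text": "Request a new token from the refresh endpoint.",
    },
]

prompt = build_augmented_prompt("How do I handle authentication errors?", excerpts)
print(prompt)
```

Numbering the excerpts and including their URLs lets the model cite which source a statement came from, which makes the answer easier to verify.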

Documentation Source Management

mcp-ragdocs provides tools for managing the documentation sources used for context augmentation. New sources are added through the processing queue. The list_sources tool lists all indexed documentation, including source URLs, titles, and last update times. The remove_documentation tool removes specific documentation sources from the system by their URLs. Together these tools let users keep the knowledge base accurate and current.

For example, if a documentation source becomes outdated or irrelevant, a developer can use the remove_documentation tool to remove it from the system. This prevents the AI model from using outdated information when generating responses. Similarly, the list_sources tool can be used to verify that specific sources have been indexed correctly.

This feature relies on the Qdrant vector database to store metadata about the documentation sources. The list_sources and remove_documentation tools provide an interface for managing this metadata.
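The idea of listing and removing sources through per-chunk metadata can be sketched with an in-memory list in place of Qdrant. The `Chunk` fields and the helpers `list_indexed_sources` and `remove_by_url` are hypothetical; they only illustrate that each stored chunk carries its source URL, so sources can be enumerated and deleted by that key.

```python
from dataclasses import dataclass


@dataclass
class Chunk:
    """One indexed piece of text plus the metadata identifying its source."""
    url: str
    title: str
    text: str


def list_indexed_sources(chunks):
    """Return one (url, title) pair per distinct source, sorted by URL."""
    seen = {}
    for chunk in chunks:
        seen.setdefault(chunk.url, chunk.title)
    return sorted(seen.items())


def remove_by_url(chunks, urls):
    """Return the chunks that remain after dropping every chunk from the given URLs."""
    targets = set(urls)
    return [chunk for chunk in chunks if chunk.url not in targets]


chunks = [
    Chunk("https://docs.example.com/auth", "Authentication", "Tokens expire after one hour."),
    Chunk("https://docs.example.com/auth", "Authentication", "Use the refresh endpoint."),
    Chunk("https://docs.example.com/old-api", "Legacy API", "Deprecated endpoints."),
]

print(list_indexed_sources(chunks))
chunks = remove_by_url(chunks, ["https://docs.example.com/old-api"])
print(list_indexed_sources(chunks))
```

Because a single page is usually split into several chunks, removal has to delete every chunk that shares the URL, not just one record.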