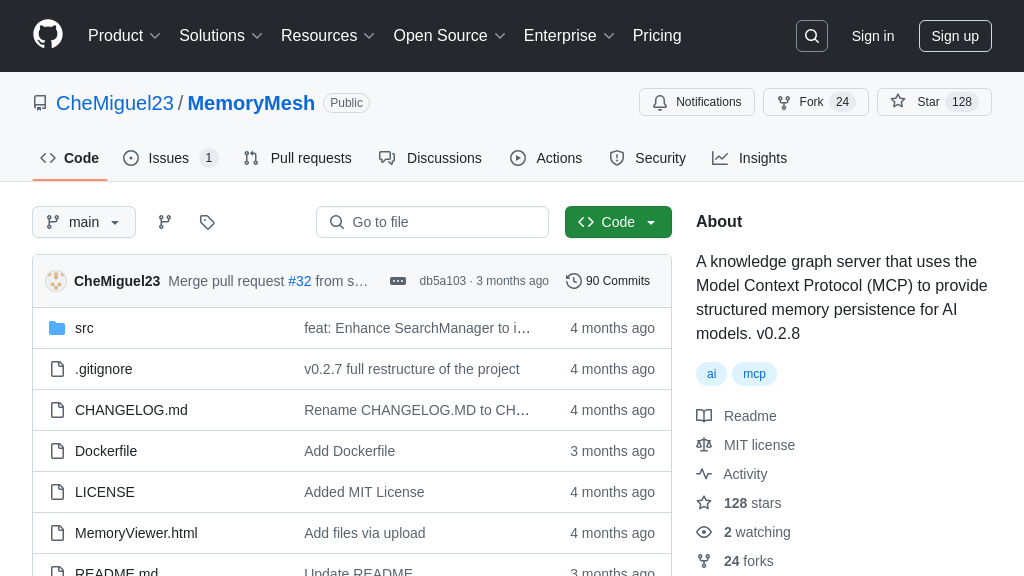

server-memory

server-memory: MCP server for persistent AI model memory using knowledge graphs.

server-memory Solution Overview

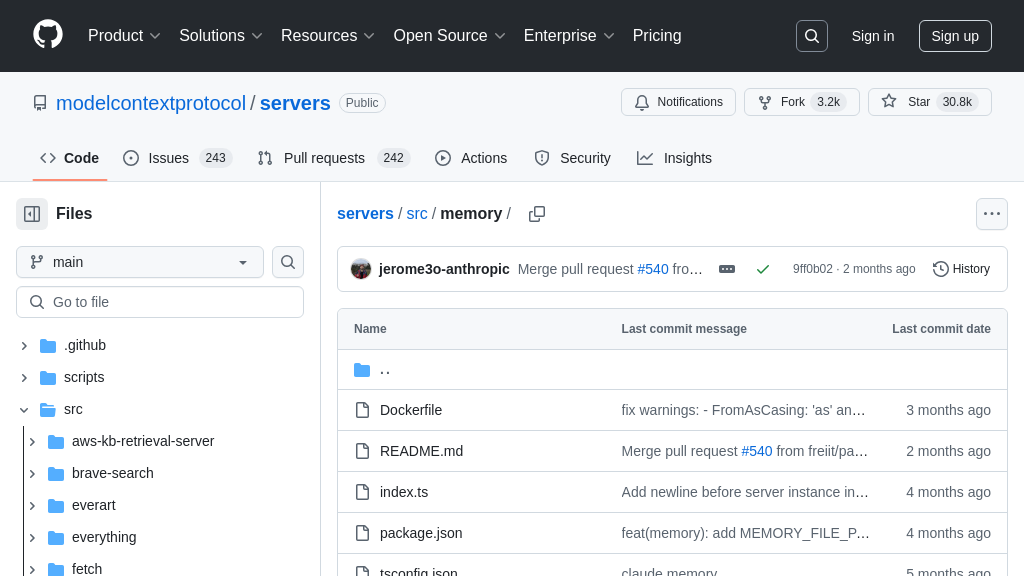

server-memory is an MCP server that gives AI models persistent memory through a local knowledge graph. It lets models such as Claude remember user information across multiple interactions, which improves personalization and context awareness.

The server stores information as entities (nodes) with associated observations, and as relations that define the connections between entities. Developers can use the provided tools to create, delete, and search entities, add observations, and manage relations within the knowledge graph. Key tools include create_entities, add_observations, and search_nodes.
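The data model described above can be sketched in a few lines of Python. This is a minimal illustration only, not the server's implementation: the class names, field names (entity_type, source, target, relation_type), and helper methods are assumptions chosen for readability.

```python
from dataclasses import dataclass, field


@dataclass
class Entity:
    """A node in the graph, with free-text observations attached to it."""
    name: str                 # unique identifier of the node
    entity_type: str          # e.g. "person" or "organization"
    observations: list[str] = field(default_factory=list)


@dataclass(frozen=True)
class Relation:
    """A directed, typed connection between two entities (hashable)."""
    source: str
    target: str
    relation_type: str


@dataclass
class KnowledgeGraph:
    entities: dict[str, Entity] = field(default_factory=dict)
    relations: set[Relation] = field(default_factory=set)

    def add_entity(self, entity: Entity) -> bool:
        """Store the entity; return False if the name is already taken."""
        if entity.name in self.entities:
            return False
        self.entities[entity.name] = entity
        return True

    def add_relation(self, relation: Relation) -> bool:
        """Store the relation; both endpoints must already exist."""
        if relation.source not in self.entities or relation.target not in self.entities:
            raise KeyError("both endpoints of a relation must exist")
        if relation in self.relations:
            return False
        self.relations.add(relation)
        return True


# Hypothetical example data.
graph = KnowledgeGraph()
graph.add_entity(Entity("John_Smith", "person", ["Speaks fluent Spanish"]))
graph.add_entity(Entity("Anthropic", "organization"))
graph.add_relation(Relation("John_Smith", "Anthropic", "works_at"))
```

Keeping observations as short, separate strings on the entity makes it straightforward to add or remove a single fact later without rewriting the whole node.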

With server-memory, developers can address the challenge of maintaining context across AI conversations, which leads to more relevant user experiences. The server can be run with Docker or NPX, and its behavior can be customized through environment variables.

server-memory Key Capabilities

Persistent Knowledge Storage

The server-memory component provides persistent storage for AI models, designed to retain and recall information across multiple interactions. It uses a local knowledge graph to store entities, relations, and observations, allowing the AI model to "remember" details about users, events, or any other relevant context. This persistence is what makes personalized, context-aware AI experiences possible. For example, in a customer service application, the AI can remember a user's past interactions, preferences, and issues, so support requests do not have to start from scratch. The knowledge graph is stored locally as a JSON file, which keeps the data on the user's machine and under their control. The location of this file can be customized using the MEMORY_FILE_PATH environment variable.
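The persistence idea above can be illustrated with a short Python sketch that reads MEMORY_FILE_PATH and round-trips a graph through a JSON file. Only the environment variable name comes from the description above; the default file name, the helper names, and the on-disk layout are assumptions made for this example, and the real server's file format may differ.

```python
import json
import os
import tempfile
from pathlib import Path

# Hypothetical default, used only when MEMORY_FILE_PATH is not set.
DEFAULT_MEMORY_FILE = "memory.json"


def memory_file_path() -> Path:
    """Resolve the storage location from the environment."""
    return Path(os.environ.get("MEMORY_FILE_PATH", DEFAULT_MEMORY_FILE))


def save_graph(graph: dict, path: Path) -> None:
    """Write the whole graph to disk as JSON, creating parent folders."""
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(json.dumps(graph, indent=2), encoding="utf-8")


def load_graph(path: Path) -> dict:
    """Read the graph back; a missing file means an empty memory."""
    if not path.exists():
        return {"entities": [], "relations": []}
    return json.loads(path.read_text(encoding="utf-8"))


# Demo in a temporary directory so no real memory file is touched.
with tempfile.TemporaryDirectory() as tmp:
    os.environ["MEMORY_FILE_PATH"] = os.path.join(tmp, "custom", "memory.json")
    path = memory_file_path()
    first_load = load_graph(path)  # nothing stored yet
    graph = {
        "entities": [
            {"name": "Jane_Doe", "entityType": "customer",
             "observations": ["Prefers email contact"]}
        ],
        "relations": [],
    }
    save_graph(graph, path)
    restored = load_graph(path)  # survives across "sessions"
```

Because the file is plain JSON on local disk, it can be inspected, backed up, or deleted with ordinary tools.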

Dynamic Knowledge Graph Management

The server offers a set of tools for managing the knowledge graph: creating and deleting entities, relations, and observations. The create_entities, create_relations, and add_observations tools allow the AI model to expand its knowledge base as it interacts with the world. Conversely, the delete_entities, delete_observations, and delete_relations tools let the AI remove outdated or incorrect information. An update is expressed as a deletion followed by an addition. For instance, if a user changes their address, the AI can use delete_observations to remove the old address and add_observations to store the new one. This keeps the stored knowledge accurate and relevant over time.
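The address update described above can be sketched as two small functions operating on an in-memory dictionary. The function names mirror the tool names, but the signatures, the dictionary layout, and the example data are assumptions for illustration; they are not the server's actual tool schema.

```python
def add_observations(graph: dict, entity_name: str, contents: list[str]) -> list[str]:
    """Append observations that are not already stored; return those added."""
    entity = graph[entity_name]  # raises KeyError for an unknown entity
    added = []
    for text in contents:
        if text not in entity["observations"]:
            entity["observations"].append(text)
            added.append(text)
    return added


def delete_observations(graph: dict, entity_name: str, observations: list[str]) -> None:
    """Remove the given observations; unknown entities are silently ignored."""
    entity = graph.get(entity_name)
    if entity is None:
        return
    entity["observations"] = [
        text for text in entity["observations"] if text not in observations
    ]


# Hypothetical example data.
graph = {
    "Jane_Doe": {
        "entityType": "person",
        "observations": ["Lives at 12 Elm Street", "Prefers email contact"],
    }
}

# An "update" is a delete followed by an add.
delete_observations(graph, "Jane_Doe", ["Lives at 12 Elm Street"])
added = add_observations(graph, "Jane_Doe", ["Lives at 98 Oak Avenue"])
```

After these two calls, the entity keeps its unrelated observation and carries only the new address.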

Search and Retrieval

The server-memory component supports information retrieval through its search_nodes and open_nodes tools. The search_nodes tool lets the AI model run a query against entity names, entity types, and observation content, returning the entities that match. The open_nodes tool retrieves specific entities and their relations by name. Used together, the two tools let the AI first find candidates and then load exactly the ones it needs. Imagine an AI assistant helping a user plan a trip: it could call search_nodes with the query "Paris" to find stored hotels whose names or observations mention the city, then call open_nodes to retrieve the details recorded for specific hotels, such as their amenities and prices.
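A rough Python sketch of the two retrieval tools follows. It assumes case-insensitive substring matching and assumes that only relations with both endpoints in the result are returned; the real server's matching rules may differ. The graph layout and the example hotels are hypothetical.

```python
def _subgraph(graph: dict, names: set[str]) -> dict:
    """Keep the named entities and the relations between them."""
    return {
        "entities": [e for e in graph["entities"] if e["name"] in names],
        "relations": [
            r for r in graph["relations"]
            if r["from"] in names and r["to"] in names
        ],
    }


def search_nodes(graph: dict, query: str) -> dict:
    """Match the query against names, types, and observation text."""
    q = query.lower()
    names = {
        e["name"]
        for e in graph["entities"]
        if q in e["name"].lower()
        or q in e["entityType"].lower()
        or any(q in obs.lower() for obs in e["observations"])
    }
    return _subgraph(graph, names)


def open_nodes(graph: dict, names: list[str]) -> dict:
    """Fetch specific entities by exact name."""
    return _subgraph(graph, set(names))


# Hypothetical example data.
graph = {
    "entities": [
        {"name": "Hotel_Lumiere", "entityType": "hotel",
         "observations": ["Located in Paris", "Free breakfast"]},
        {"name": "Hotel_Aurora", "entityType": "hotel",
         "observations": ["Located in Rome"]},
        {"name": "Paris", "entityType": "city", "observations": []},
    ],
    "relations": [
        {"from": "Hotel_Lumiere", "to": "Paris", "relationType": "located_in"},
    ],
}

hits = search_nodes(graph, "paris")              # broad lookup by text
detail = open_nodes(graph, ["Hotel_Lumiere"])    # exact lookup by name
```

With plain substring matching, short and specific queries tend to work better than long natural-language phrases, since the whole query string has to appear in a single field.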

Integration Advantages

The server-memory component is designed to fit into the MCP ecosystem. Its client-server architecture lets AI models access and manage the knowledge graph through a standardized interface. The configuration examples provided for Claude Desktop show how the server can be deployed with a few lines of configuration. Because the server communicates over standard input/output, it can be used with any MCP client that supports that transport. This reduces the development effort required to add persistent memory to AI applications. The server's modular design also allows developers to customize and extend its functionality to meet specific needs.
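As an illustration of the kind of Claude Desktop configuration mentioned above, the following Python snippet builds and prints an NPX-based entry. The overall shape (an mcpServers map with command, args, and env) follows the common MCP client configuration layout, but treat the details as assumptions to verify against the server's own documentation: the server key "memory", the package name, and the file path are placeholders for this sketch.

```python
import json

# Assumed configuration shape; check the server's README before using it.
config = {
    "mcpServers": {
        "memory": {  # hypothetical key naming this server entry
            "command": "npx",
            "args": ["-y", "@modelcontextprotocol/server-memory"],
            "env": {
                # Placeholder path; point this at a real, writable location.
                "MEMORY_FILE_PATH": "/path/to/custom/memory.json"
            },
        }
    }
}

# Render the JSON that would go into the client's configuration file.
rendered = json.dumps(config, indent=2)
print(rendered)
```

The env entry is optional in this sketch; leaving it out would mean the server falls back to whatever default storage location it uses.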