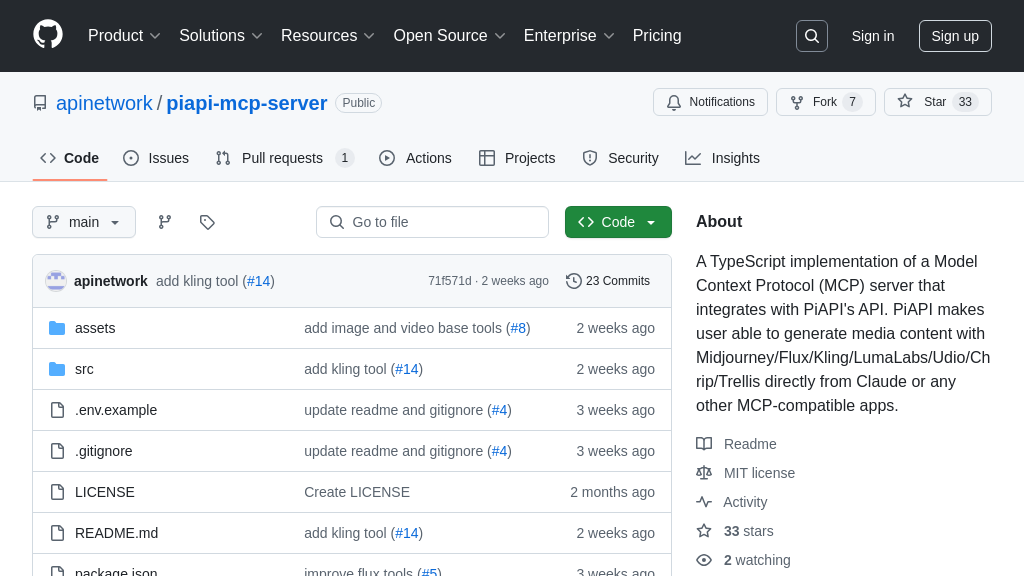

wikimedia-enterprise-model-context-protocol

Wikimedia Enterprise MCP: Python tool for AI models to access Wikimedia data via MCP.

wikimedia-enterprise-model-context-protocol Solution Overview

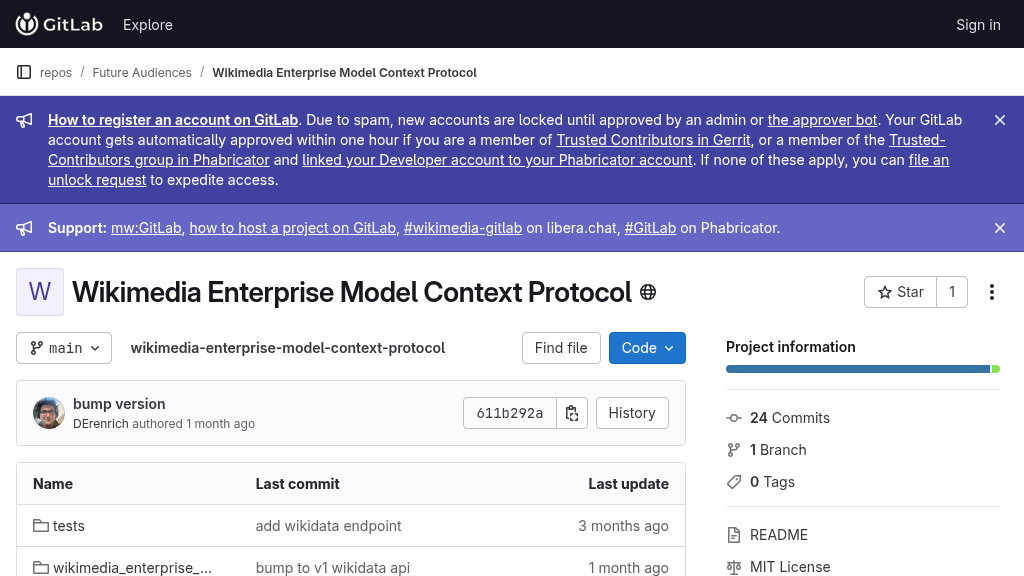

Wikimedia Enterprise Model Context Protocol is a Python implementation of an MCP server that connects AI models to the Wikimedia Enterprise API. It gives models access to structured data from Wikipedia and its sister projects, which can improve accuracy and contextual grounding. The server acts as a bridge between the Wikimedia Enterprise API and MCP clients such as Claude Desktop.

Developers can use this tool to build AI applications that need up-to-date, reliable information, such as question answering systems, content summarization tools, and knowledge graph enrichment services. Once the solution is configured with Wikimedia Enterprise API credentials, AI models can retrieve and process data through it. The implementation uses Poetry for dependency management and is designed to fit into existing MCP workflows.

wikimedia-enterprise-model-context-protocol Key Capabilities

API Data Access via MCP

The Wikimedia Enterprise Model Context Protocol enables AI models to securely access structured data from the Wikimedia Enterprise API through a standardized MCP interface. This allows models to retrieve information such as article content, metadata, and usage statistics directly from Wikimedia's knowledge base. The protocol acts as a bridge, translating MCP requests into API calls and delivering the results back to the AI model in a structured format. This eliminates the need for AI models to implement custom API integrations, simplifying the process of incorporating Wikimedia data into their workflows.

Example: An AI model designed for question answering can use this MCP to fetch the content of a specific Wikipedia article in response to a user query. The model sends a request to the MCP server, which then retrieves the article content from the Wikimedia Enterprise API and returns it to the model.
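The request that the server makes on the model's behalf can be sketched as follows. This is a minimal illustration and not the project's actual code: the base URL, the /articles/{name} path, and the bearer-token header are assumptions based on the public Wikimedia Enterprise API and should be checked against its documentation, and the function names are hypothetical.

```python
import json
import urllib.parse
import urllib.request

# Assumed base URL; verify against the Wikimedia Enterprise API documentation.
API_BASE = "https://api.enterprise.wikimedia.com/v2"


def build_article_request(title: str, access_token: str) -> urllib.request.Request:
    """Build (but do not send) an HTTP request for one article (hypothetical helper)."""
    # Wikipedia titles use underscores for spaces; percent-encode everything else.
    name = urllib.parse.quote(title.replace(" ", "_"), safe="")
    return urllib.request.Request(
        f"{API_BASE}/articles/{name}",
        headers={
            "Authorization": f"Bearer {access_token}",  # assumed auth scheme
            "Accept": "application/json",
        },
        method="GET",
    )


def fetch_article(title: str, access_token: str) -> object:
    """Send the request and decode the JSON body (needs network access and a valid token)."""
    request = build_article_request(title, access_token)
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)
```

Separating request construction from sending keeps the URL and header logic easy to inspect without network access; an MCP tool handler would call something like fetch_article and return the decoded result to the client.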

Authentication Management

This MCP implementation handles authentication with the Wikimedia Enterprise API, so AI models and developers do not have to supply credentials with each request. It reads WME_USERNAME and WME_PASSWORD from a .env file and uses them to authenticate requests to the API. Keeping the credentials in one place, outside the application code, simplifies access to Wikimedia Enterprise data and lets developers focus on building the AI application itself.

Example: When an AI model requests data from the Wikimedia Enterprise API, the MCP server automatically authenticates the request using the stored credentials. This ensures that the model has the necessary permissions to access the data and prevents unauthorized access.
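Reading the two variables from a .env file might look like the sketch below. It uses only the standard library and a deliberately simple parser; whether the project itself uses a package such as python-dotenv is not stated in the source, and the function names here are hypothetical.

```python
REQUIRED_KEYS = ("WME_USERNAME", "WME_PASSWORD")


def parse_env(text: str) -> dict[str, str]:
    """Parse KEY=VALUE lines, skipping blank lines and # comments."""
    values: dict[str, str] = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop surrounding whitespace and optional quotes around the value.
        values[key.strip()] = value.strip().strip("'\"")
    return values


def load_credentials(env_text: str) -> tuple[str, str]:
    """Return (username, password) or raise if either variable is missing or empty."""
    env = parse_env(env_text)
    missing = [key for key in REQUIRED_KEYS if not env.get(key)]
    if missing:
        raise ValueError(f"missing in .env: {', '.join(missing)}")
    return env["WME_USERNAME"], env["WME_PASSWORD"]


# Typical use: pass the contents of the .env file, for example
#   load_credentials(open(".env", encoding="utf-8").read())
```

Failing fast when a variable is missing surfaces a configuration problem at startup instead of as an authentication error on the first API call.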

Integration with Claude Desktop

The Wikimedia Enterprise Model Context Protocol server is designed to integrate with MCP clients such as Claude Desktop, Anthropic's desktop application. This lets developers bring Wikimedia data into conversations with models running in that client. The integration is set up through the claude_desktop_config.json file, which tells Claude Desktop how to launch and use the Wikimedia Enterprise MCP server.

Example: A developer can configure Claude Desktop to use the Wikimedia Enterprise MCP server by adding a configuration entry to the claude_desktop_config.json file. Once the entry is in place, models running within Claude Desktop can access Wikimedia data through the MCP interface without further setup.
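A configuration entry of this kind can be generated as in the sketch below. The top-level mcpServers key with a command and args per server is the layout Claude Desktop reads from claude_desktop_config.json; everything else is an assumption for illustration: the server name, the use of Poetry's --directory option to launch the server, and the server.py entry point are hypothetical and should be replaced with the values from the project's own instructions.

```python
import json


def build_desktop_config(project_dir: str, server_name: str = "wikimedia-enterprise") -> dict:
    """Build a claude_desktop_config.json structure with one MCP server entry."""
    return {
        "mcpServers": {
            server_name: {
                # Assumed launch command: run the server inside the Poetry environment.
                "command": "poetry",
                # "server.py" is a hypothetical entry point, not taken from the project.
                "args": ["--directory", project_dir, "run", "python", "server.py"],
            }
        }
    }


if __name__ == "__main__":
    # Print the JSON so it can be merged into an existing config file by hand.
    print(json.dumps(build_desktop_config("/path/to/project"), indent=2))
```

Printing the entry instead of writing the file directly avoids overwriting other servers that may already be configured.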

Technical Implementation

The implementation is written in Python and uses Poetry for dependency management, which keeps the development environment consistent and reproducible. The project includes pre-commit hooks for code formatting and linting. The repository keeps the main code, tests, and configuration files in separate directories and relies on standard Python libraries and tools, which makes it straightforward to understand, modify, and extend.