mattermost-mcp-host

Mattermost MCP Host: AI-powered Mattermost integration via MCP for intelligent tool execution.

mattermost-mcp-host Solution Overview

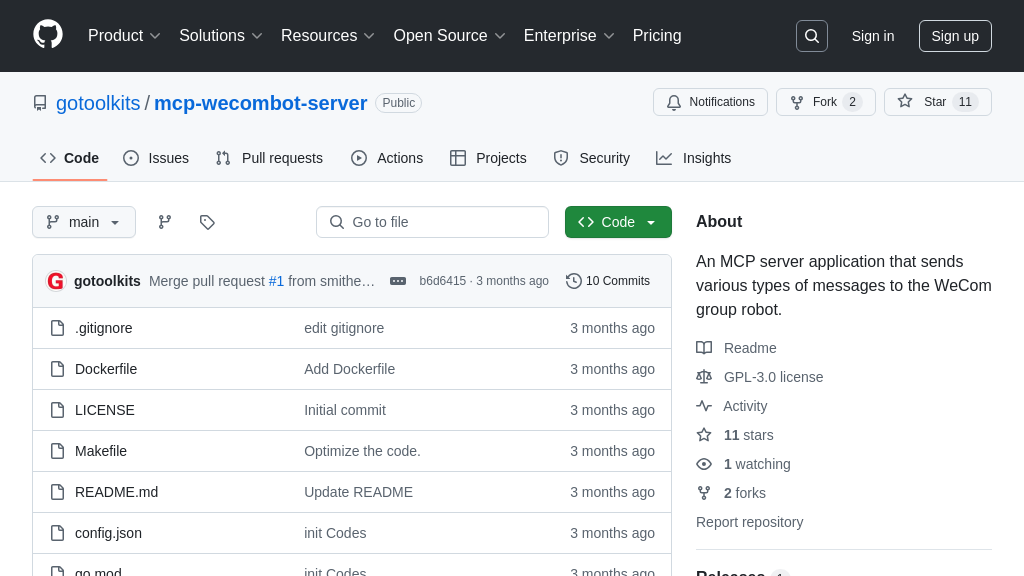

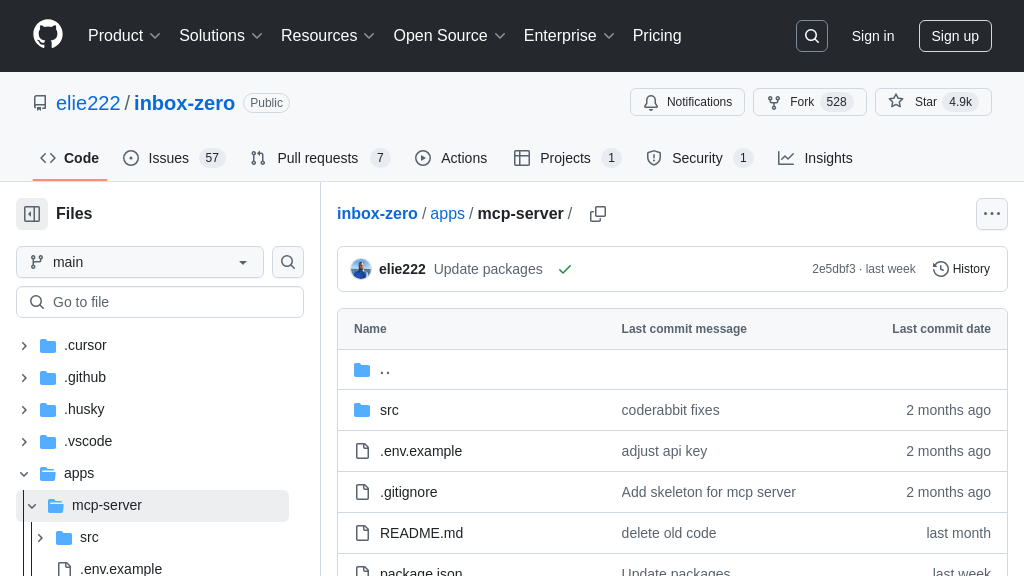

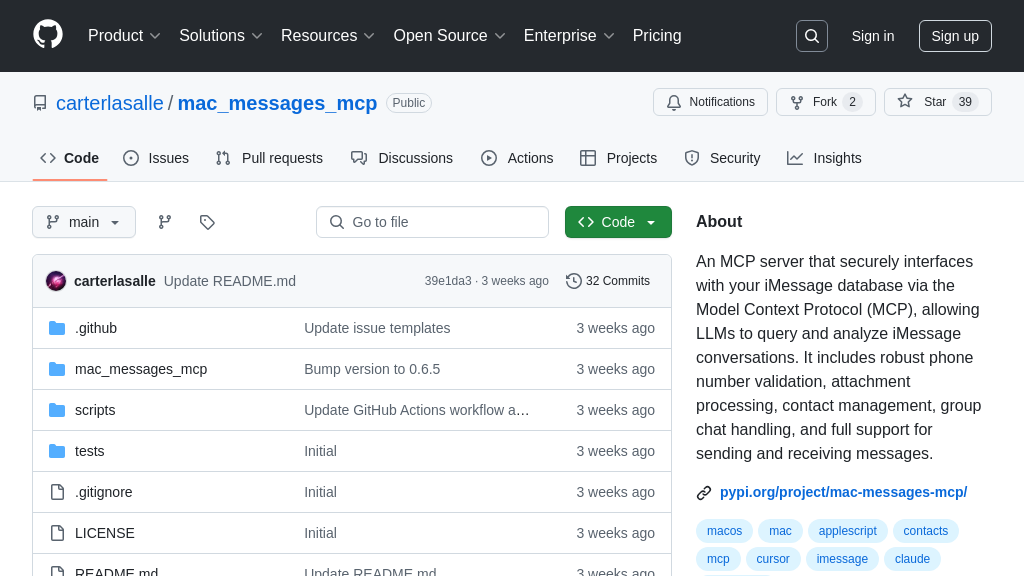

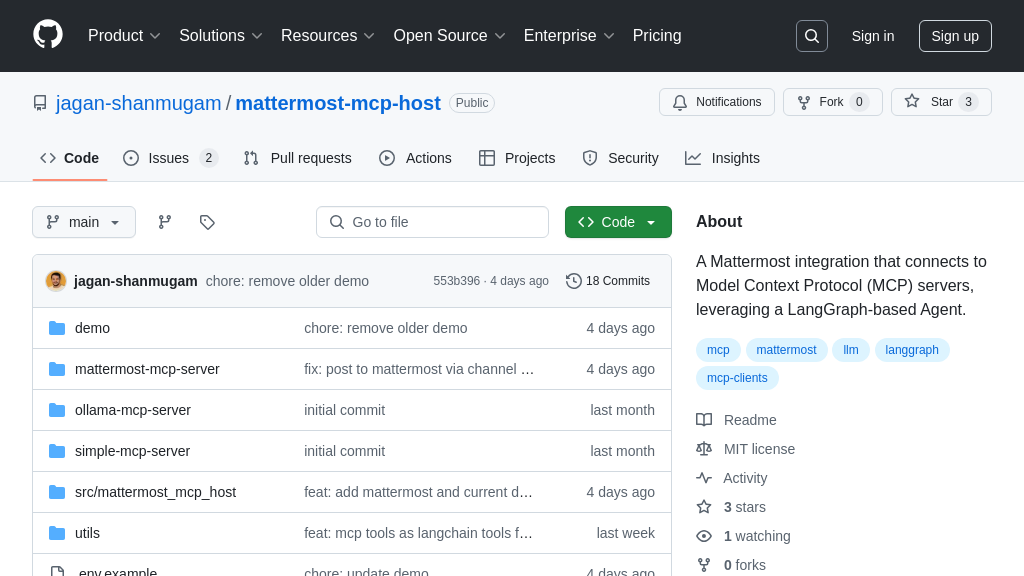

Mattermost MCP Host is a Mattermost integration that connects AI models to your team's communication platform. It connects to Model Context Protocol (MCP) servers so that an AI agent can converse with users and execute tools directly within Mattermost. A LangGraph agent interprets each user request and builds its response using tools loaded dynamically from the connected MCP servers.

The integration keeps conversational context within Mattermost threads, so replies stay coherent across a conversation. Users can also interact with MCP servers directly, using commands to list available resources or call specific tools. Because MCP tools are converted to LangChain structured tools, the agent can decide which tools to use and chain multiple calls to fulfill complex requests. The result is a single interface for using AI models and external tools without leaving Mattermost. The host connects to MCP servers over either stdio or HTTP with Server-Sent Events (SSE).

mattermost-mcp-host Key Capabilities

LangGraph Agent Orchestration

The Mattermost MCP Host uses a LangGraph agent to process user requests and orchestrate responses. The agent interprets the user's intent and decides how to act on it. It dynamically loads the tools available on the connected MCP servers, converting them into a format compatible with the LangChain framework, and then calls those tools to fulfill the request, chaining multiple calls together when a goal requires several steps. This lets the system handle tasks ranging from simple information retrieval to multi-step data manipulation and analysis.

For example, a user might ask the bot to "summarize the latest news about AI and post it to the channel." Assuming the connected MCP servers provide suitable tools, the agent would first call a web search tool to retrieve relevant news articles, then a summarization tool to condense them, and finally a Mattermost posting tool to share the summary with the channel. The agent handles this entire sequence automatically, using an LLM to decide which tools to call and in what order.
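The tool chaining described above follows a ReAct-style loop, the general pattern LangGraph's prebuilt agents implement: the model either requests a tool call or returns a final answer, and each tool result is fed back as context for the next decision. The minimal sketch below reproduces that control flow in plain Python so it runs without LangGraph. It is not the project's code or LangGraph's API: `scripted_decide` is a hypothetical stand-in for the LLM, and the tool names `web_search`, `summarize`, and `post_message` are assumptions chosen to mirror the news example.

```python
from typing import Any, Callable, Dict, List, Tuple, Union

# A decision is either ("tool", tool_name, arguments) or ("final", answer_text).
Decision = Union[Tuple[str, str, Dict[str, Any]], Tuple[str, str]]


def run_agent(
    request: str,
    decide: Callable[[List[Dict[str, str]]], Decision],
    tools: Dict[str, Callable[..., str]],
    max_steps: int = 10,
) -> Tuple[str, List[str]]:
    """Ask the model what to do, run the chosen tool, feed the result back, repeat."""
    messages: List[Dict[str, str]] = [{"role": "user", "content": request}]
    trace: List[str] = []  # tool names in the order they were called
    for _ in range(max_steps):
        decision = decide(messages)
        if decision[0] == "final":
            return decision[1], trace
        _, name, args = decision
        result = tools[name](**args)  # execute the selected tool
        trace.append(name)
        # The tool output becomes context for the model's next decision.
        messages.append({"role": "tool", "name": name, "content": result})
    raise RuntimeError("agent did not finish within max_steps")


# Hypothetical stand-in for the LLM: chains search -> summarize -> post, then stops.
def scripted_decide(messages: List[Dict[str, str]]) -> Decision:
    results = [m["content"] for m in messages if m["role"] == "tool"]
    if len(results) == 0:
        return ("tool", "web_search", {"query": "latest AI news"})
    if len(results) == 1:
        return ("tool", "summarize", {"text": results[-1]})
    if len(results) == 2:
        return ("tool", "post_message", {"channel": "town-square", "text": results[-1]})
    return ("final", "Posted the summary to the channel.")


# Hypothetical tools; in the host these would be MCP tools loaded from servers.
tools = {
    "web_search": lambda query: f"articles about {query}",
    "summarize": lambda text: f"summary of {text}",
    "post_message": lambda channel, text: f"posted to {channel}",
}

answer, trace = run_agent(
    "Summarize the latest news about AI and post it to the channel.", scripted_decide, tools
)
print(trace)   # ['web_search', 'summarize', 'post_message']
print(answer)  # Posted the summary to the channel.
```

The `max_steps` cap is a design choice worth keeping in any real loop: it stops a model that keeps requesting tools from running indefinitely.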

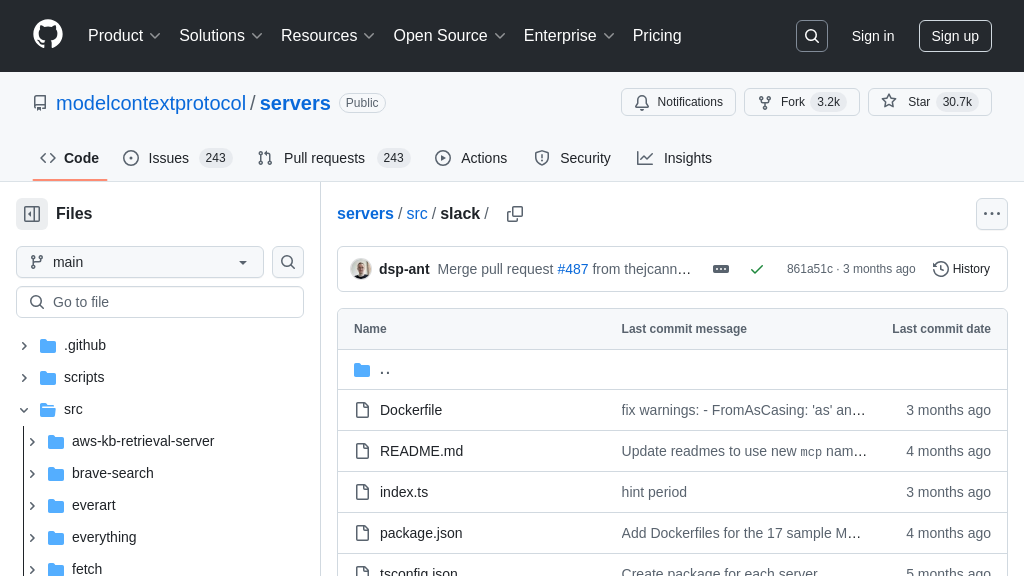

Dynamic MCP Tool Integration

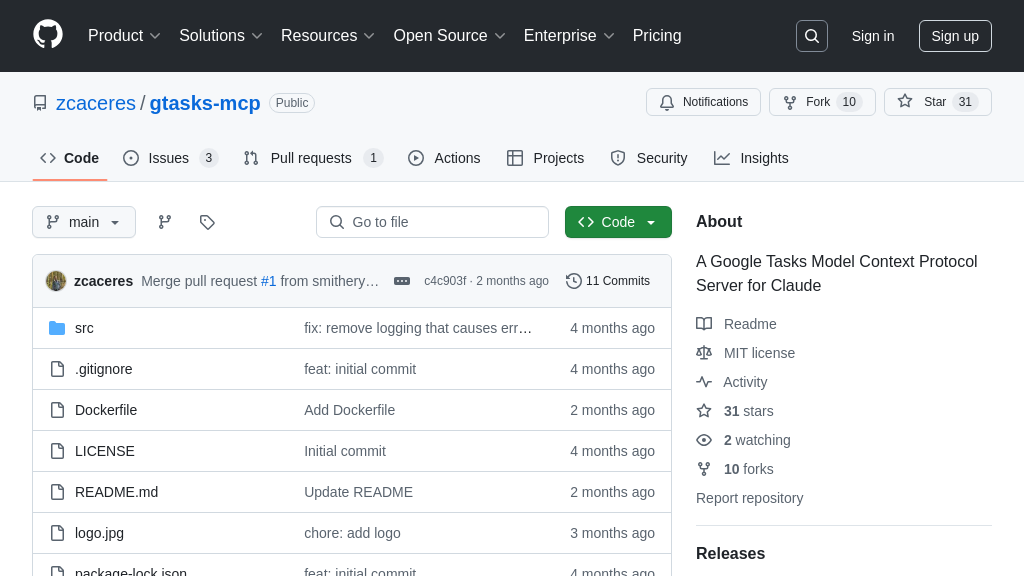

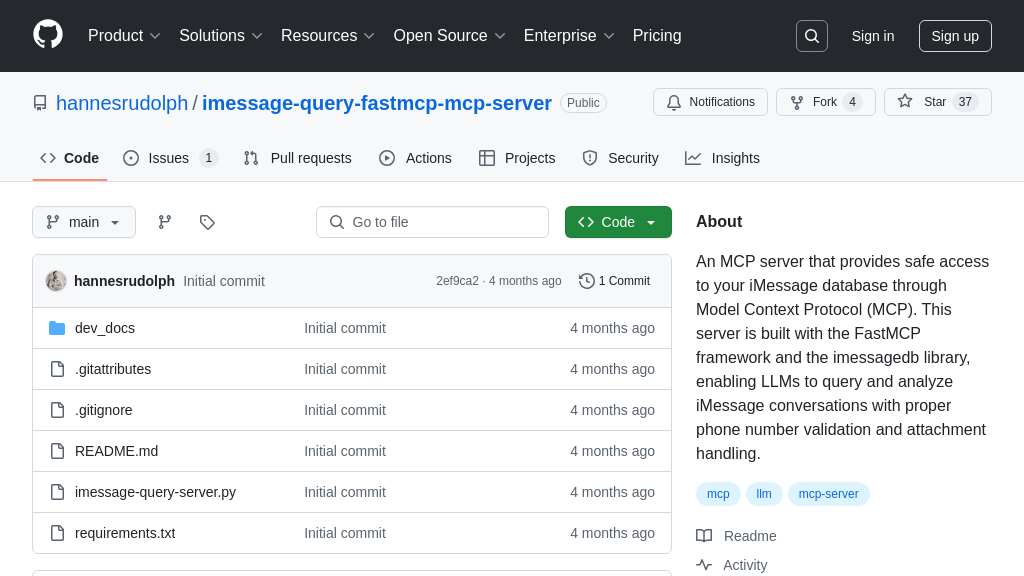

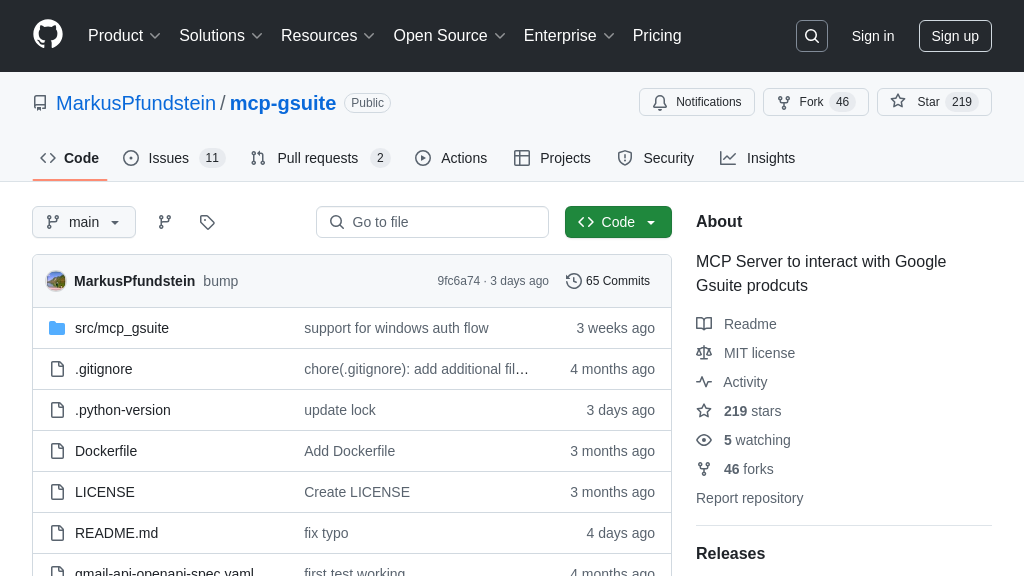

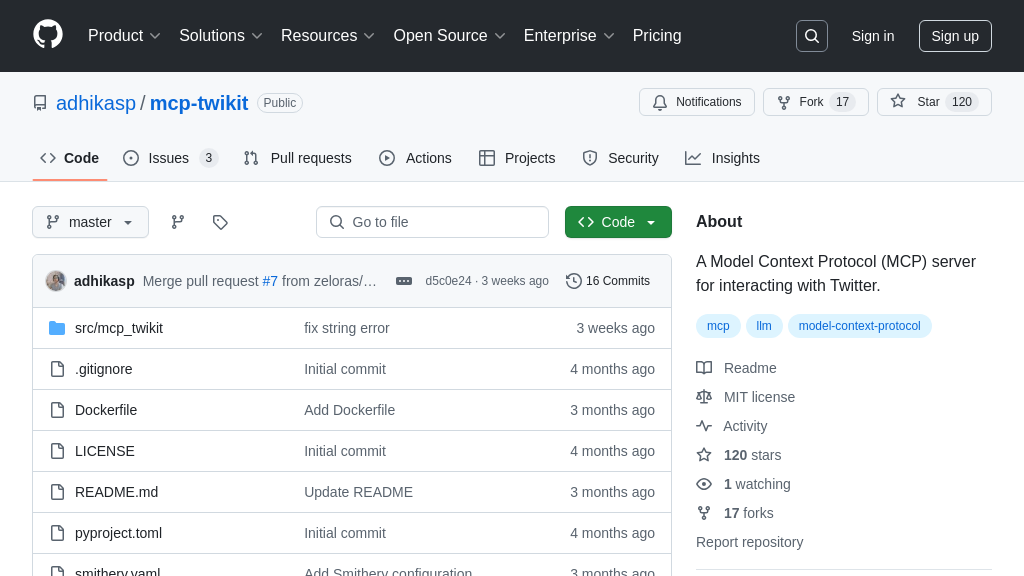

This feature lets the Mattermost MCP Host discover and integrate tools from connected MCP servers automatically. When the host connects to an MCP server defined in mcp-servers.json, it queries the server for its available tools and makes them accessible to the LangGraph agent. Because tools are discovered rather than hard-coded, adding a new tool to an MCP server requires no manual configuration or code changes in the host; the agent works with whatever tools the connected servers expose.
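In the MCP specification, a client discovers a server's tools with the JSON-RPC method `tools/list`; the result carries a `tools` array and, when more pages remain, a `nextCursor` to pass back as `cursor`. The sketch below shows that discovery step for every server in a config file. It is an illustration under stated assumptions, not the host's implementation: the `send` hook is hypothetical (a real host would use an MCP client over stdio or HTTP/SSE), and the `mcpServers` layout assumed for mcp-servers.json may differ from the project's actual schema.

```python
import json
from typing import Any, Callable, Dict, List, Optional

# Hypothetical transport hook: sends one JSON-RPC request to a named server
# and returns the decoded "result" object.
Send = Callable[[str, Dict[str, Any]], Dict[str, Any]]


def list_server_tools(server: str, send: Send) -> List[Dict[str, Any]]:
    """Collect every tool a server advertises, following nextCursor pages."""
    tools: List[Dict[str, Any]] = []
    cursor: Optional[str] = None
    request_id = 0
    while True:
        request_id += 1
        params = {"cursor": cursor} if cursor else {}
        request = {"jsonrpc": "2.0", "id": request_id, "method": "tools/list", "params": params}
        result = send(server, request)
        tools.extend(result.get("tools", []))
        cursor = result.get("nextCursor")
        if not cursor:
            return tools


def discover_all(config_text: str, send: Send) -> Dict[str, List[Dict[str, Any]]]:
    """Query every server listed in an (assumed) mcpServers-style config."""
    servers = json.loads(config_text).get("mcpServers", {})
    return {name: list_server_tools(name, send) for name in servers}


# Usage with a fake server that returns its tools in two pages.
pages = {
    None: {"tools": [{"name": "echo", "description": "Echo a message"}], "nextCursor": "p2"},
    "p2": {"tools": [{"name": "analyze", "description": "Run an analysis"}]},
}


def fake_send(server: str, request: Dict[str, Any]) -> Dict[str, Any]:
    return pages[request["params"].get("cursor")]


config = '{"mcpServers": {"my-server": {"command": "python", "args": ["server.py"]}}}'
found = discover_all(config, fake_send)
print([t["name"] for t in found["my-server"]])  # ['echo', 'analyze']
```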

Consider a new data analysis tool deployed on an MCP server. Once the Mattermost MCP Host connects to that server, it detects the new tool and makes it available to the LangGraph agent, and users can start using it from Mattermost immediately, without any update to the host itself. Converting MCP tools to LangChain structured tools is what allows the LangGraph agent to use them directly.
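An MCP tool definition carries a `name`, a `description`, and a JSON Schema `inputSchema`, while a LangChain structured tool pairs a name and description with an argument schema. The sketch below shows that mapping with a small stdlib class standing in for LangChain's `StructuredTool`, so it runs on its own. The class name `StructuredToolSketch`, the `call_tool` hook (which would send an MCP `tools/call` request), and the shallow top-level type check are all assumptions for illustration, not the project's conversion code.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

# Shallow mapping from JSON Schema type names to Python types.
_JSON_TYPES = {
    "string": str,
    "integer": int,
    "number": (int, float),
    "boolean": bool,
    "object": dict,
    "array": list,
}

# Hypothetical hook: sends an MCP "tools/call" request (tool name + arguments)
# to the owning server and returns the decoded result object.
CallTool = Callable[[str, Dict[str, Any]], Dict[str, Any]]


@dataclass
class StructuredToolSketch:
    """Stdlib stand-in for a LangChain structured tool wrapping one MCP tool."""

    name: str
    description: str
    input_schema: Dict[str, Any]
    call_tool: CallTool

    def invoke(self, args: Dict[str, Any]) -> str:
        self._validate(args)
        result = self.call_tool(self.name, args)
        if result.get("isError"):
            raise RuntimeError(f"{self.name} reported an error")
        # MCP results hold a list of content items; keep only the text ones.
        texts = [c["text"] for c in result.get("content", []) if c.get("type") == "text"]
        return "\n".join(texts)

    def _validate(self, args: Dict[str, Any]) -> None:
        for key in self.input_schema.get("required", []):
            if key not in args:
                raise ValueError(f"{self.name}: missing required argument {key!r}")
        props = self.input_schema.get("properties", {})
        for key, value in args.items():
            type_name = props.get(key, {}).get("type")
            # Only single string types are checked; unions like ["string", "null"] are skipped.
            expected = _JSON_TYPES.get(type_name) if isinstance(type_name, str) else None
            if expected is not None and not isinstance(value, expected):
                raise TypeError(f"{self.name}: {key!r} should be of type {type_name}")


def to_structured_tools(mcp_tools: List[Dict[str, Any]], call_tool: CallTool) -> List[StructuredToolSketch]:
    """Wrap each entry of a tools/list result in a StructuredToolSketch."""
    return [
        StructuredToolSketch(t["name"], t.get("description", ""), t.get("inputSchema", {}), call_tool)
        for t in mcp_tools
    ]


# Usage with a fake server that echoes the "message" argument back.
def fake_call(name: str, arguments: Dict[str, Any]) -> Dict[str, Any]:
    return {"content": [{"type": "text", "text": arguments["message"]}], "isError": False}


echo_definition = {
    "name": "echo",
    "description": "Echo a message back",
    "inputSchema": {
        "type": "object",
        "properties": {"message": {"type": "string"}},
        "required": ["message"],
    },
}
[echo] = to_structured_tools([echo_definition], fake_call)
print(echo.invoke({"message": "Hello MCP!"}))  # Hello MCP!
```

Validating arguments against the schema before the call lets the agent receive a clear error it can correct, rather than a failure from the remote server.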

Direct Command Interface

The Mattermost MCP Host also provides a direct command interface: messages that start with a command prefix (`#` by default) are handled as commands rather than passed to the agent. Users can list available servers, tools, resources, and prompts, and call specific tools with JSON arguments. This gives developers and advanced users fine-grained control, bypassing the natural-language interface to execute commands on the MCP servers directly.

For instance, typing `#my-server tools` lists all tools available on the MCP server named "my-server", and typing `#my-server call echo '{"message": "Hello MCP!"}'` calls the "echo" tool on "my-server" with the arguments {"message": "Hello MCP!"}. The command prefix is configurable in the .env file.
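The command syntax above can be parsed with `shlex`, which keeps the single-quoted JSON argument together as one token. This is a minimal sketch, not the host's parser: only the `tools` and `call` forms appear in the examples above, so the `resources`, `prompts`, and `servers` keywords are assumed by analogy, and the environment variable name `COMMAND_PREFIX` is hypothetical.

```python
import json
import os
import shlex
from dataclasses import dataclass, field
from typing import Any, Dict, Optional

# Read the prefix from the environment (e.g. loaded from .env); the variable
# name COMMAND_PREFIX is an assumption for this sketch.
DEFAULT_PREFIX = os.environ.get("COMMAND_PREFIX", "#")
LIST_ACTIONS = {"tools", "resources", "prompts"}


@dataclass
class Command:
    server: Optional[str]  # None for host-level commands such as "servers"
    action: str            # "servers", "tools", "resources", "prompts" or "call"
    tool: Optional[str] = None
    arguments: Dict[str, Any] = field(default_factory=dict)


def parse_command(text: str, prefix: str = DEFAULT_PREFIX) -> Optional[Command]:
    """Return a Command, or None if the message is ordinary chat for the agent."""
    if not text.startswith(prefix):
        return None
    parts = shlex.split(text[len(prefix):])  # keeps the single-quoted JSON intact
    if parts == ["servers"]:
        return Command(server=None, action="servers")
    if len(parts) == 2 and parts[1] in LIST_ACTIONS:
        return Command(server=parts[0], action=parts[1])
    if len(parts) in (3, 4) and parts[1] == "call":
        arguments = json.loads(parts[3]) if len(parts) == 4 else {}
        if not isinstance(arguments, dict):
            raise ValueError("tool arguments must be a JSON object")
        return Command(server=parts[0], action="call", tool=parts[2], arguments=arguments)
    raise ValueError(f"unrecognised command: {text!r}")


print(parse_command("#my-server tools", prefix="#"))
# Command(server='my-server', action='tools', tool=None, arguments={})
print(parse_command("""#my-server call echo '{"message": "Hello MCP!"}'""", prefix="#"))
# Command(server='my-server', action='call', tool='echo', arguments={'message': 'Hello MCP!'})
```

Returning `None` for unprefixed messages gives the host a single check for deciding whether a post goes to the command handler or to the LangGraph agent.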

Thread-Aware Conversations

The Mattermost MCP Host maintains conversational context within Mattermost threads. It tracks the message history of each thread and uses it to inform the agent's responses, so users can hold a natural, continuous conversation without repeating information or re-establishing context in every message.

Imagine a user asks the bot "What is the weather in London?" and the bot replies with the current conditions. The user then asks "What about tomorrow?" Because the host passes the new message to the LangGraph agent together with the thread history, the agent understands that the user still means London and returns the next day's forecast. Without thread awareness, the user would have to ask "What is the weather in London tomorrow?" explicitly.
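The Mattermost REST API returns a thread (for example from `GET /api/v4/posts/{post_id}/thread`) as a post list with an `order` array and a `posts` map keyed by post ID, where each post has fields such as `id`, `user_id`, `message`, and `create_at`. The sketch below turns such a response into the history-plus-new-message input described above. It is an assumption-laden illustration rather than the host's code: the role mapping by bot user ID, the `max_history` cap, and the `{"role", "content"}` output shape are hypothetical choices.

```python
from typing import Any, Dict, List


def thread_to_messages(
    thread: Dict[str, Any],
    current_post_id: str,
    bot_user_id: str,
    max_history: int = 20,
) -> List[Dict[str, str]]:
    """Turn a Mattermost post list into chat messages: thread history first, new message last."""
    posts = thread["posts"]  # post ID -> post object
    # Sort by creation time (milliseconds) rather than trusting the response order.
    ordered = sorted(posts.values(), key=lambda p: p["create_at"])
    history = [p for p in ordered if p["id"] != current_post_id]
    history = history[-max_history:] if max_history > 0 else []  # cap the context size
    messages = [
        {"role": "assistant" if p["user_id"] == bot_user_id else "user", "content": p["message"]}
        for p in history
    ]
    messages.append({"role": "user", "content": posts[current_post_id]["message"]})
    return messages


thread = {
    "order": ["p3", "p2", "p1"],
    "posts": {
        "p1": {"id": "p1", "user_id": "alice", "create_at": 1000, "message": "What is the weather in London?"},
        "p2": {"id": "p2", "user_id": "bot", "create_at": 2000, "message": "It is currently cloudy in London."},
        "p3": {"id": "p3", "user_id": "alice", "create_at": 3000, "message": "What about tomorrow?"},
    },
}
for m in thread_to_messages(thread, current_post_id="p3", bot_user_id="bot"):
    print(m["role"], "|", m["content"])
# user | What is the weather in London?
# assistant | It is currently cloudy in London.
# user | What about tomorrow?
```

Capping the history keeps long threads within the model's context window at the cost of dropping the oldest turns.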