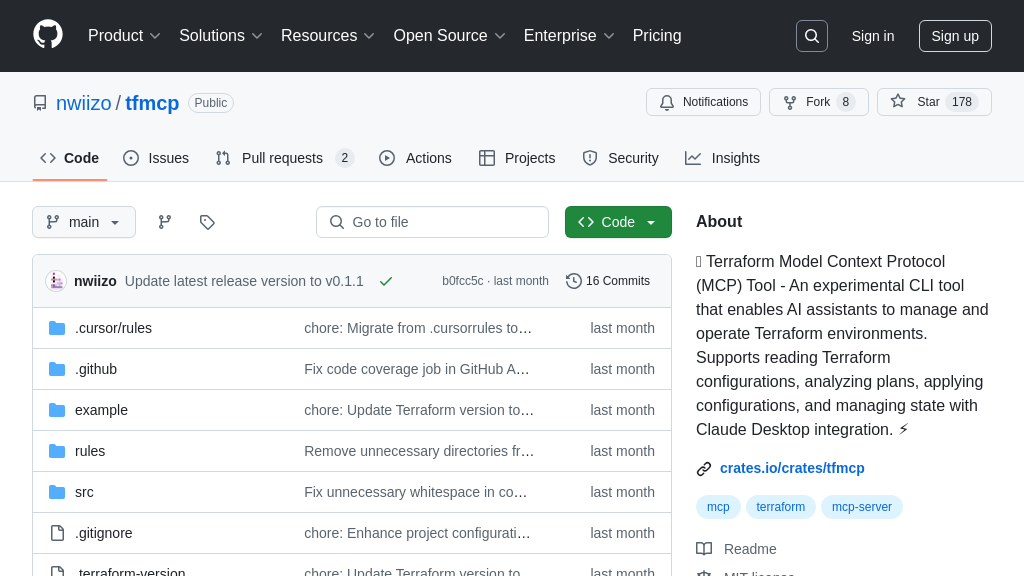

kubernetes-mcp-server

Kubernetes MCP Server: Connect AI models to Kubernetes/OpenShift for automated resource management and workflow integration.

kubernetes-mcp-server Solution Overview

Kubernetes MCP Server is a Model Context Protocol (MCP) server implementation for Kubernetes and OpenShift environments. It lets AI models interact directly with your cluster: retrieving pod logs, executing commands inside containers, and deploying new container images. Rather than wrapping kubectl or helm, the server talks to the Kubernetes API directly, so it does not depend on those command-line tools being installed.

The server automatically detects changes to its Kubernetes configuration, so AI models keep working against an up-to-date cluster connection. It supports CRUD operations on any Kubernetes resource, which gives models fine-grained control over the cluster. With this view of your Kubernetes infrastructure, AI models can help with tasks such as troubleshooting failed deployments, tuning resource usage, and scaling workloads. The server can be started with tools like npx or through VS Code extensions.
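As a rough illustration of the npx route, the sketch below builds a client-side configuration entry that launches the server. It assumes the npm package is published under the project name `kubernetes-mcp-server` and that the MCP client reads a JSON file with an `mcpServers` map, which is a common client convention but not something this page specifies. The entry name `kubernetes` and the helper `build_mcp_client_config` are hypothetical.

```python
import json


def build_mcp_client_config(package: str = "kubernetes-mcp-server@latest") -> dict:
    """Return a client config that starts the server through npx.

    Assumptions: the package name and the "mcpServers" layout are
    illustrative; check your MCP client's documentation for its format.
    """
    return {
        "mcpServers": {
            # Arbitrary label the client uses to refer to this server.
            "kubernetes": {
                "command": "npx",
                # -y lets npx fetch the package without an interactive prompt.
                "args": ["-y", package],
            }
        }
    }


# Print the JSON that would be pasted into the client's config file.
print(json.dumps(build_mcp_client_config(), indent=2))
```

The generated JSON would typically be merged into the client's existing configuration file rather than replacing it.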

kubernetes-mcp-server Key Capabilities

Automated Kubernetes Configuration

The Kubernetes MCP Server automatically detects changes to its Kubernetes configuration and updates itself accordingly. This removes the need for manual reconfiguration when the connection settings change, for example when the kubeconfig is edited to point at a different context or to use renewed credentials. The server monitors the .kube/config file or the in-cluster configuration for modifications and adjusts its settings to keep a consistent, current connection to the Kubernetes API. As a result, AI models keep working against the intended cluster, which prevents errors caused by stale connection settings.

For example, if the current context in .kube/config is switched to another cluster, the MCP server picks up the change, and the AI model's next requests go to the new cluster without any manual intervention. Changes inside the cluster itself, such as a newly deployed service, need no special handling: they are visible to the model as soon as they exist, because the server reads them from the Kubernetes API. The configuration tracking is achieved by watching the configuration for changes.
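The idea of reacting to kubeconfig changes can be sketched as a small watcher that compares content hashes between checks. This is a minimal sketch using polling, not the server's actual mechanism, which this page does not describe in detail. The class `KubeconfigWatcher` and its `on_change` callback are hypothetical names.

```python
import hashlib
from pathlib import Path
from typing import Callable, Optional


def _digest(data: Optional[bytes]) -> Optional[str]:
    """Hash file content; None stands for a missing file."""
    return None if data is None else hashlib.sha256(data).hexdigest()


class KubeconfigWatcher:
    """Hypothetical helper: calls `on_change` when the file content changes."""

    def __init__(self, path: Path, on_change: Callable[[bytes], None]) -> None:
        self.path = path
        self.on_change = on_change
        # Remember the content seen at startup so only later edits trigger.
        self._last = _digest(self._read())

    def _read(self) -> Optional[bytes]:
        try:
            return self.path.read_bytes()
        except FileNotFoundError:
            return None  # e.g. no kubeconfig when running in-cluster

    def poll(self) -> bool:
        """Check once; return True if the content changed since last check."""
        data = self._read()
        current = _digest(data)
        if current == self._last:
            return False
        self._last = current
        if data is not None:
            # A real server would rebuild its API client from the new config.
            self.on_change(data)
        return True
```

A caller would invoke `poll()` on a timer, or replace polling with a file-system notification library. Hashing the content rather than comparing modification times avoids false negatives on file systems with coarse timestamps.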

Generic Kubernetes Resource Operations

AI models can perform CRUD (Create, Read, Update, Delete) operations on any Kubernetes or OpenShift resource, including deployments, services, and config maps. Instead of being limited to a predefined set of operations, a model can perform any action on any resource type that the cluster's API supports. This makes it possible to automate complex tasks, such as scaling deployments based on real-time metrics or creating new services in response to user requests.

Imagine an AI model designed to optimize resource utilization in a Kubernetes cluster. Using this feature, the model can read the current resource utilization of each pod, analyze the data, and then update the resource requests and limits in the deployment configuration to match observed performance. On the server side, these operations go through the Kubernetes API using the client-go library.
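What makes generic operations possible is that the Kubernetes REST API addresses every resource type with the same URL scheme. The sketch below maps a CRUD operation to an HTTP method and path. It illustrates the API layout only and is not the server's client-go code; the helpers `resource_path` and `crud_request` are hypothetical.

```python
from typing import Optional, Tuple

# HTTP method the Kubernetes REST API uses for each CRUD operation.
CRUD_METHODS = {"create": "POST", "read": "GET", "update": "PUT", "delete": "DELETE"}


def resource_path(api_version: str, plural: str,
                  namespace: Optional[str] = None,
                  name: Optional[str] = None) -> str:
    """Build the REST path for a resource collection or a single object."""
    # The core group ("v1") lives under /api; named groups such as
    # "apps/v1" live under /apis.
    prefix = "/apis" if "/" in api_version else "/api"
    parts = [prefix, api_version]
    if namespace:
        parts += ["namespaces", namespace]  # omitted for cluster-scoped kinds
    parts.append(plural)
    if name:
        parts.append(name)
    return "/".join(parts)


def crud_request(operation: str, api_version: str, plural: str,
                 namespace: Optional[str] = None,
                 name: Optional[str] = None) -> Tuple[str, str]:
    """Return (HTTP method, path) for one CRUD operation."""
    method = CRUD_METHODS[operation]
    if operation == "create":
        # Objects are created by posting to the collection.
        return method, resource_path(api_version, plural, namespace)
    if operation in ("update", "delete") and name is None:
        raise ValueError(f"{operation} requires a resource name")
    # A read without a name lists the collection.
    return method, resource_path(api_version, plural, namespace, name)


print(crud_request("update", "apps/v1", "deployments", "prod", "web"))
```

Because the scheme only needs an API version and a plural resource name, the same two functions cover built-in kinds and custom resources alike.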

Pod Interaction and Management

The Kubernetes MCP Server also provides Pod-specific operations: listing Pods across namespaces, retrieving a Pod by name, deleting Pods, viewing Pod logs, and executing commands inside a Pod. With these, AI models can monitor the health and status of Pods, diagnose issues, and perform automated remediation. Command execution in particular lets a model collect diagnostic information or restart a failing container.

For instance, an AI model could automatically detect and resolve issues with failing Pods. If a Pod is in the CrashLoopBackOff state, the model can use the MCP server to retrieve the Pod's logs and analyze them for errors. Based on that analysis, it can then execute commands inside the Pod to attempt a fix, such as restarting a specific container or updating a configuration file. This functionality relies on the Kubernetes API's Pod management endpoints, including the exec endpoint that kubectl exec itself calls, rather than on the kubectl binary.
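The first two steps of that workflow can be sketched against the Pod JSON that the Kubernetes API returns. CrashLoopBackOff is reported as the `waiting.reason` of a container status, and the log endpoint accepts `container`, `previous`, and `tailLines` query parameters. The helper names below are hypothetical, and the sketch only builds the request path; it does not contact a cluster.

```python
from typing import List
from urllib.parse import urlencode


def crash_looping_containers(pod: dict) -> List[str]:
    """Return names of containers whose waiting reason is CrashLoopBackOff."""
    statuses = pod.get("status", {}).get("containerStatuses") or []
    names = []
    for status in statuses:
        # "waiting" is only present while the container is not running.
        waiting = status.get("state", {}).get("waiting") or {}
        if waiting.get("reason") == "CrashLoopBackOff":
            names.append(status["name"])
    return names


def previous_logs_path(namespace: str, pod_name: str, container: str,
                       tail_lines: int = 100) -> str:
    """Build the log path for the last terminated run of a container."""
    query = urlencode({
        "container": container,
        "previous": "true",   # logs from the crashed instance, not the new one
        "tailLines": tail_lines,
    })
    return f"/api/v1/namespaces/{namespace}/pods/{pod_name}/log?{query}"


# Example Pod object, trimmed to the fields the functions read.
pod = {
    "metadata": {"name": "web-0", "namespace": "prod"},
    "status": {"containerStatuses": [
        {"name": "app", "state": {"waiting": {"reason": "CrashLoopBackOff"}}},
        {"name": "sidecar", "state": {"running": {}}},
    ]},
}
for container in crash_looping_containers(pod):
    print(previous_logs_path("prod", "web-0", container))
```

Requesting the previous instance's logs matters here because the current instance of a crash-looping container has often produced little or no output yet.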