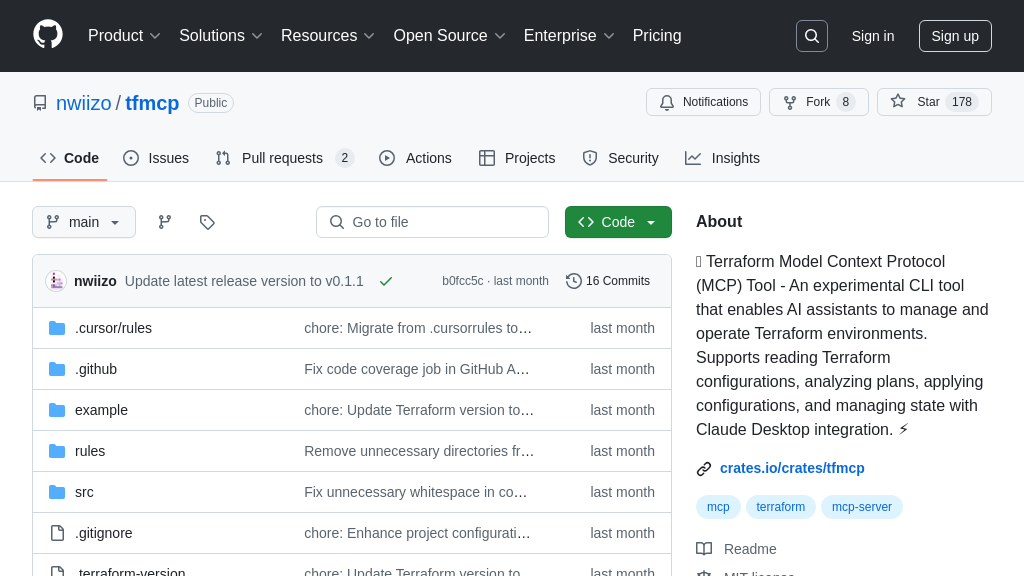

mcp-k8s-go

mcp-k8s-go: Connect AI models to Kubernetes securely. List namespaces, pods, and execute commands.

mcp-k8s-go Solution Overview

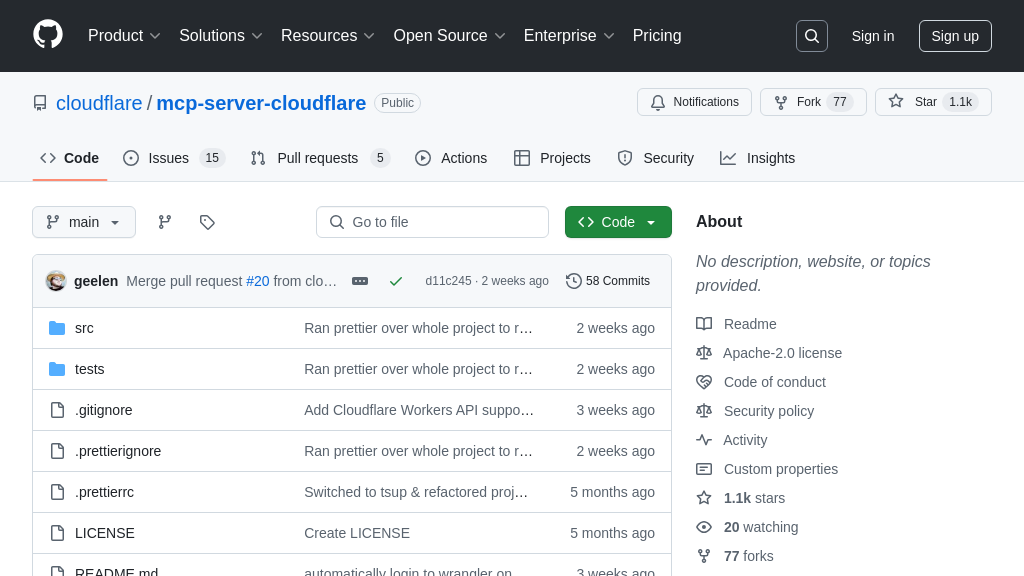

MCP K8S Go is a Go-based MCP (Model Context Protocol) server that connects AI models to Kubernetes clusters. Within the MCP ecosystem, it gives AI models a controlled way to access and manipulate Kubernetes resources. This bridges the gap between AI and Kubernetes, enabling AI-driven automation and management of containerized applications.

Key features include listing Kubernetes contexts, namespaces, nodes, and resources, as well as retrieving specific resource details, events, and pod logs. It also supports executing commands within Kubernetes pods. By leveraging MCP K8S Go, developers can build AI solutions that intelligently interact with and manage their Kubernetes infrastructure.

Integration is straightforward, with options ranging from using the MCP Inspector to direct integration with Claude via Smithery or manual configuration. This solution unlocks the potential for AI to optimize resource allocation, automate deployments, and proactively address issues within Kubernetes environments, streamlining operations and enhancing efficiency.

mcp-k8s-go Key Capabilities

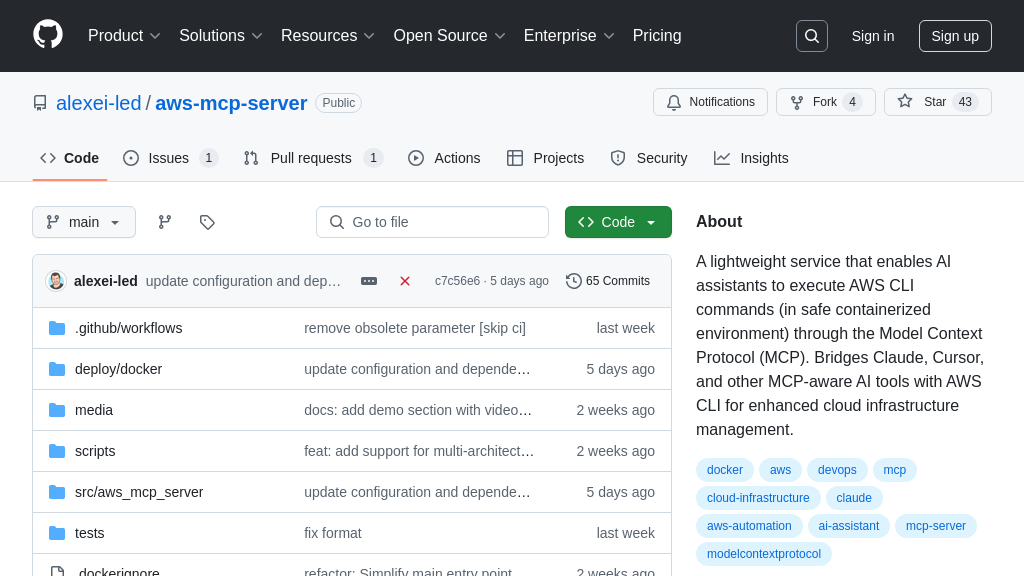

Secure Kubernetes Resource Access

mcp-k8s-go acts as a secure intermediary, enabling AI models to interact with Kubernetes clusters without direct access. It leverages the Kubernetes configuration file (kubeconfig) to manage connections and authenticate requests. By using mcp-k8s-go, AI models can query, modify, and monitor Kubernetes resources in a controlled environment, reducing the risk of unauthorized access or accidental damage to the cluster. The server can be configured to only allow access to specific Kubernetes contexts, further enhancing security. This is crucial in production environments where AI models need to interact with sensitive infrastructure components.

For example, an AI model could use mcp-k8s-go to automatically scale a deployment based on real-time traffic analysis, improving resource utilization without giving the model direct access to the cluster. The --allowed-contexts flag restricts the server to the listed Kubernetes contexts, so the AI cannot reach clusters outside that list.
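The context restriction described above can be pictured as a simple allow-list check. The following is a minimal sketch, not the server's actual implementation: the function names are hypothetical, and the comma-separated value format and the "empty list means no restriction" behavior are assumptions made for illustration.

```go
package main

import (
	"fmt"
	"strings"
)

// parseAllowedContexts turns a comma-separated value such as "dev,staging"
// into a set of context names. The comma-separated format is an assumption
// of this sketch. An empty value yields an empty set.
func parseAllowedContexts(value string) map[string]bool {
	allowed := make(map[string]bool)
	for _, name := range strings.Split(value, ",") {
		name = strings.TrimSpace(name)
		if name != "" {
			allowed[name] = true
		}
	}
	return allowed
}

// isContextAllowed reports whether a request against the given context
// should be served. In this sketch an empty allow-list means "no restriction".
func isContextAllowed(allowed map[string]bool, context string) bool {
	if len(allowed) == 0 {
		return true
	}
	return allowed[context]
}

func main() {
	allowed := parseAllowedContexts("dev, staging")
	for _, ctx := range []string{"dev", "staging", "production"} {
		fmt.Printf("%s allowed: %v\n", ctx, isContextAllowed(allowed, ctx))
	}
}
```

Checking the context name before any cluster call is made keeps the restriction in one place, so every tool the server exposes is covered by the same rule.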

Kubernetes Context Management

This feature allows mcp-k8s-go to manage and switch between multiple Kubernetes contexts defined in the kubeconfig file. This is particularly useful in environments where AI models need to interact with different Kubernetes clusters or namespaces, such as development, staging, and production. By specifying the desired context, the AI model can seamlessly access the appropriate resources without requiring manual configuration changes. The list-k8s-contexts tool provides a way to list available contexts, enabling the AI model to dynamically discover and select the correct environment.

Imagine an AI-powered deployment pipeline that automatically deploys applications to different Kubernetes environments based on the stage of the release cycle. mcp-k8s-go can be configured to use the development context for initial testing, the staging context for pre-production validation, and the production context for the final release, all managed through a single AI model.
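To make context discovery concrete, here is a sketch of the request an MCP client might send to invoke the list-k8s-contexts tool. MCP tool invocations are JSON-RPC 2.0 requests with the method tools/call; the Go type names and the helper newToolCall are hypothetical, and the sketch assumes the tool takes no arguments.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// toolCall is a JSON-RPC 2.0 request that uses the MCP "tools/call" method.
type toolCall struct {
	JSONRPC string     `json:"jsonrpc"`
	ID      int        `json:"id"`
	Method  string     `json:"method"`
	Params  callParams `json:"params"`
}

// callParams names the tool and carries its arguments.
type callParams struct {
	Name      string         `json:"name"`
	Arguments map[string]any `json:"arguments"`
}

// newToolCall builds the request body for calling a tool by name.
// A nil argument map is sent as an empty JSON object.
func newToolCall(id int, tool string, args map[string]any) ([]byte, error) {
	if args == nil {
		args = map[string]any{}
	}
	return json.Marshal(toolCall{
		JSONRPC: "2.0",
		ID:      id,
		Method:  "tools/call",
		Params:  callParams{Name: tool, Arguments: args},
	})
}

func main() {
	// Assumption: list-k8s-contexts needs no arguments.
	req, err := newToolCall(1, "list-k8s-contexts", nil)
	if err != nil {
		panic(err)
	}
	fmt.Println(string(req))
}
```

In practice an MCP client library or the AI client itself sends this request; the sketch only shows what travels over the wire.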

Real-time Kubernetes Event Monitoring

mcp-k8s-go lets AI models monitor Kubernetes events and react to changes in the cluster state. By calling the list-k8s-events tool, a model can retrieve events about pod failures, deployment updates, and other important changes; calling it at regular intervals keeps that view close to real time. This enables proactive problem detection and automated remediation, improving the reliability and stability of the Kubernetes environment. The event data can also be used to train AI models to predict potential issues and take preventative measures.

Consider an AI-driven monitoring system that automatically detects and responds to pod failures. mcp-k8s-go can supply Kubernetes events to the AI model, which can then trigger automated actions such as restarting the failed pod, scaling up the deployment, or alerting the operations team. This helps keep downtime low and reduces the need for manual intervention.
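As an illustration of the detection step, the sketch below filters a batch of events down to the ones that signal trouble. Kubernetes events carry a type of Normal or Warning along with a reason and a message; the event struct here is a simplified, hypothetical shape, and the actual output format of list-k8s-events is not assumed to match it.

```go
package main

import "fmt"

// event holds a few fields that Kubernetes events carry. This simplified
// struct is hypothetical; the tool's real output shape may differ.
type event struct {
	Type    string // "Normal" or "Warning"
	Reason  string // short machine-readable cause, e.g. "BackOff"
	Object  string // the resource the event refers to
	Message string // human-readable description
}

// warnings returns only the events whose type is "Warning".
func warnings(events []event) []event {
	var out []event
	for _, e := range events {
		if e.Type == "Warning" {
			out = append(out, e)
		}
	}
	return out
}

func main() {
	// Sample data invented for this example.
	events := []event{
		{Type: "Normal", Reason: "Scheduled", Object: "pod/web-1", Message: "Successfully assigned pod to node"},
		{Type: "Warning", Reason: "BackOff", Object: "pod/web-2", Message: "Back-off restarting failed container"},
	}
	for _, e := range warnings(events) {
		fmt.Printf("%s %s: %s\n", e.Object, e.Reason, e.Message)
	}
}
```

A monitoring loop could run this filter on each batch of retrieved events and hand the warnings to the model or to an alerting step.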

Pod Log Retrieval and Command Execution

The ability to retrieve pod logs (get-k8s-pod-logs) and execute commands within pods (k8s-pod-exec) directly from the AI model significantly enhances debugging and troubleshooting capabilities. Instead of requiring manual access to the Kubernetes cluster, developers can leverage the AI to gather diagnostic information and perform corrective actions. This streamlines the development workflow and reduces the time required to resolve issues. The k8s-pod-exec feature allows for running diagnostic tools or scripts within the pod's environment, providing valuable insights into its behavior.

For instance, an AI-powered debugging tool could automatically analyze pod logs to identify error patterns and suggest potential solutions. If the AI detects a configuration issue, it could use k8s-pod-exec to run a script that automatically corrects the configuration, minimizing downtime and improving application performance.
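The log-analysis idea can be sketched as a simple pattern count over log text such as the output of get-k8s-pod-logs. This is an illustrative sketch only: the function name, the sample log lines, and the chosen patterns are all hypothetical, and a real tool would likely use richer matching.

```go
package main

import (
	"bufio"
	"fmt"
	"strings"
)

// countErrorLines counts, for each pattern, how many log lines contain it.
// Matching is case-insensitive substring matching.
func countErrorLines(logs string, patterns []string) map[string]int {
	counts := make(map[string]int)
	scanner := bufio.NewScanner(strings.NewReader(logs))
	for scanner.Scan() {
		line := strings.ToLower(scanner.Text())
		for _, p := range patterns {
			if strings.Contains(line, strings.ToLower(p)) {
				counts[p]++
			}
		}
	}
	return counts
}

func main() {
	// Sample log text invented for this example.
	logs := `2024-01-01T10:00:00Z INFO starting server
2024-01-01T10:00:01Z ERROR connection refused: db:5432
2024-01-01T10:00:02Z ERROR connection refused: db:5432
2024-01-01T10:00:03Z WARN previous container was OOMKilled`

	patterns := []string{"connection refused", "oomkilled", "panic"}
	counts := countErrorLines(logs, patterns)
	// Iterate over the pattern slice so the output order is stable.
	for _, p := range patterns {
		fmt.Printf("%q: %d\n", p, counts[p])
	}
}
```

Counts like these give the model a compact summary to reason about before it decides whether a follow-up command via k8s-pod-exec is worth running.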

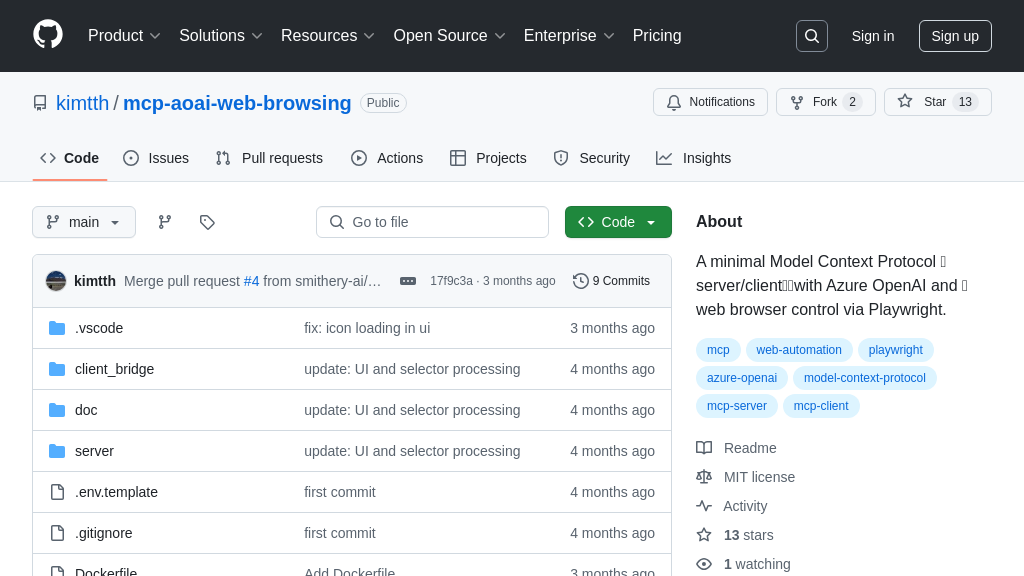

Integration Advantages

mcp-k8s-go offers seamless integration with various AI clients, including Claude, through multiple installation methods (Smithery, mcp-get, manual installation from NPM or GitHub releases, and building from source). This flexibility allows developers to choose the integration approach that best suits their needs and technical expertise. The provided configuration examples for claude_desktop_config.json simplify the setup process and ensure compatibility with the Claude AI client. The availability of pre-built packages and detailed installation instructions lowers the barrier to entry and enables rapid deployment of AI-powered Kubernetes management solutions.