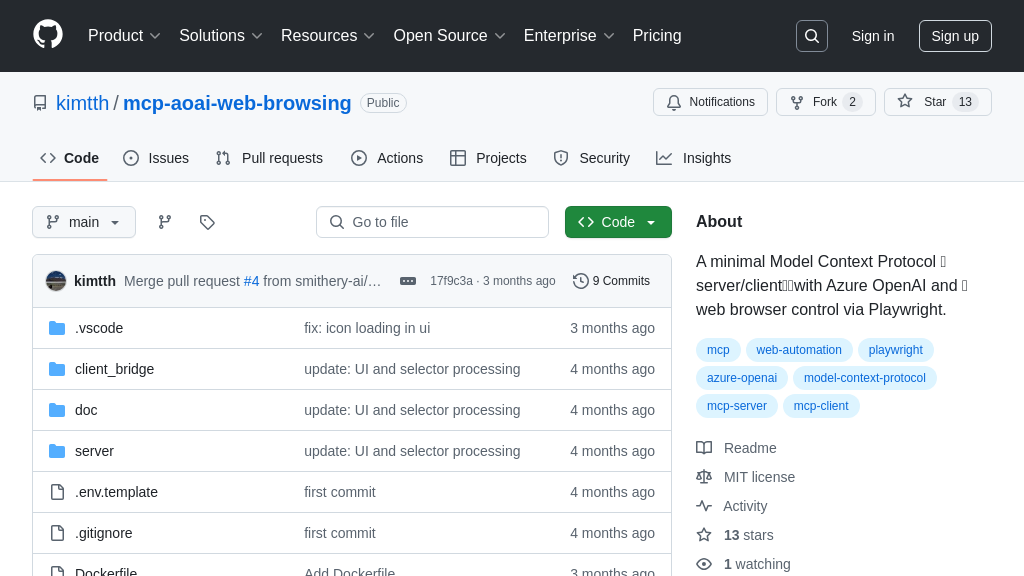

mcp-aoai-web-browsing

Explore mcp-aoai-web-browsing: An MCP server/client for Azure OpenAI and web browsing with Playwright. Enable AI web automation!

mcp-aoai-web-browsing Solution Overview

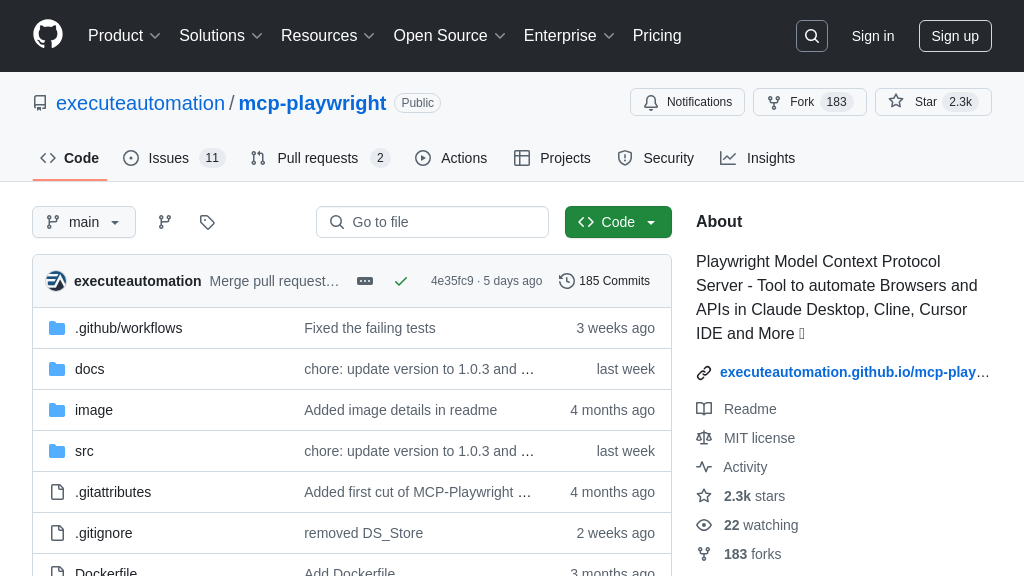

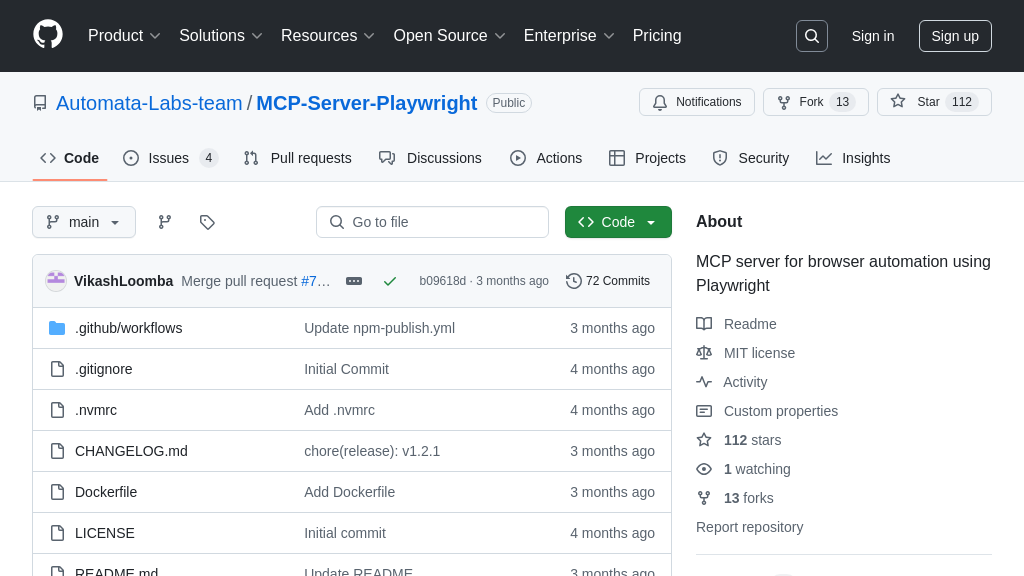

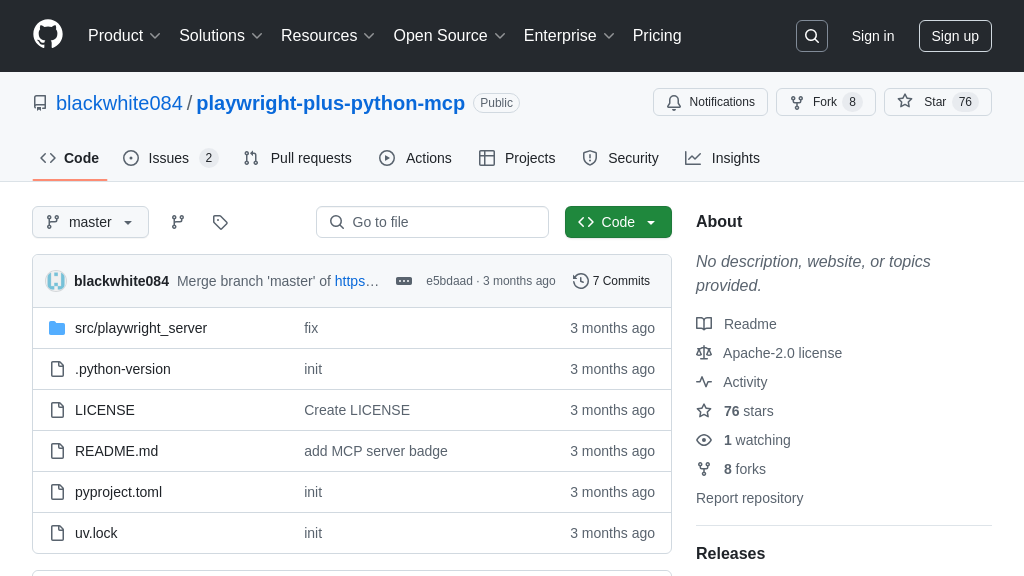

mcp-aoai-web-browsing is a Model Context Protocol (MCP) server and client implementation that gives Azure OpenAI models web browsing capabilities. The server is built with FastMCP and drives a web browser through Playwright. The client handles communication with the server and lets the model navigate web pages through the playwright_navigate tool. The model turns a natural language request into a call to this tool, and the server carries out the corresponding browser action, so the model can retrieve information at request time instead of relying only on its training data. MCP responses that describe the available tools are converted into the OpenAI function calling format, which keeps the development experience familiar to anyone who has used OpenAI tools. The project is a practical demonstration of how MCP components can be combined to connect an AI model to the web.

mcp-aoai-web-browsing Key Capabilities

Web Navigation via Playwright

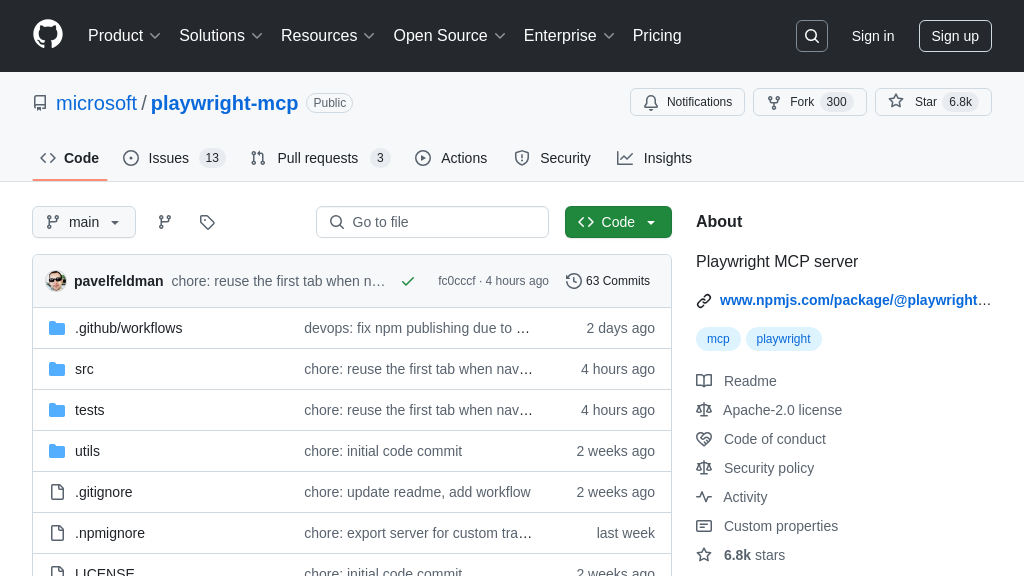

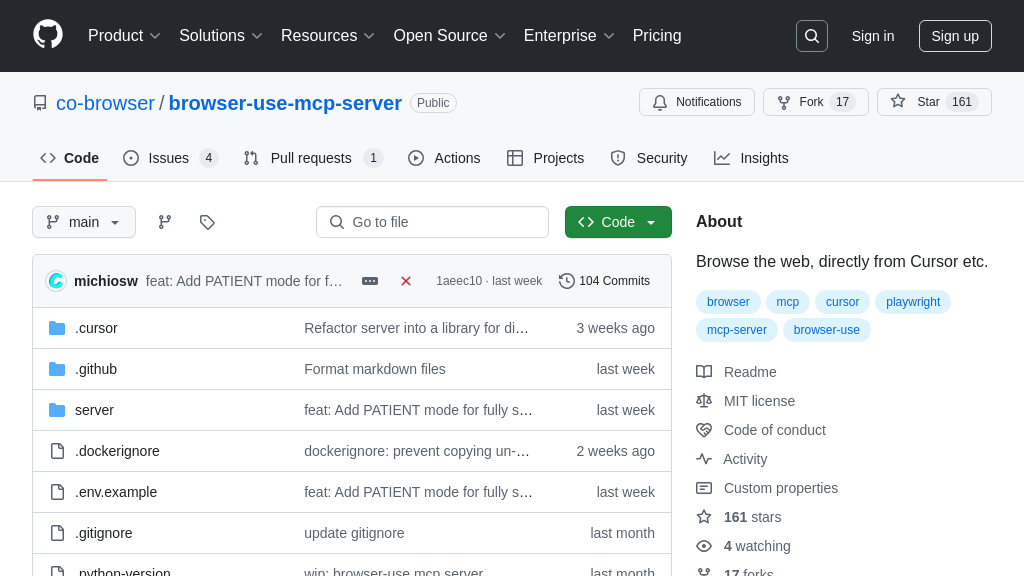

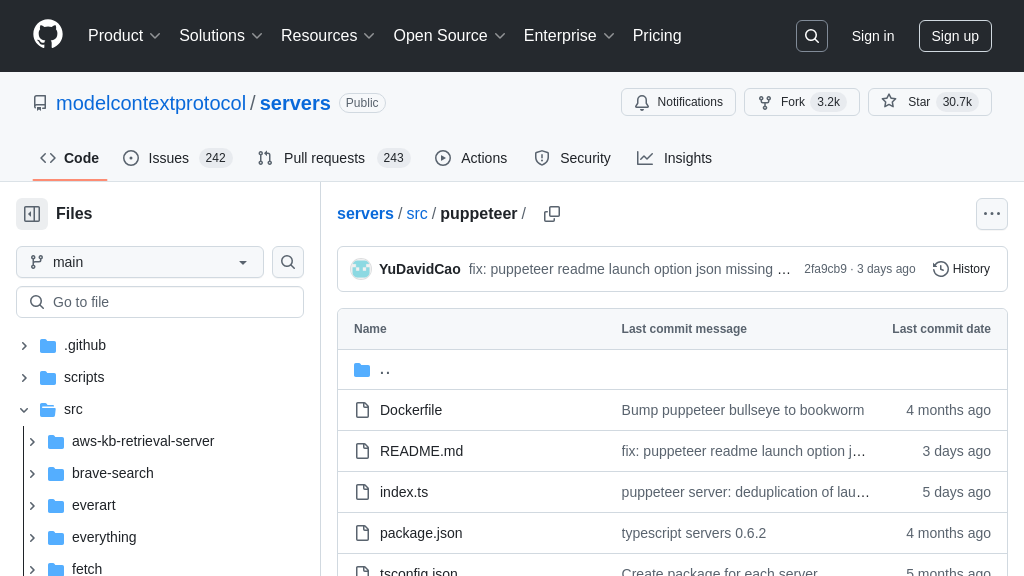

The core function of mcp-aoai-web-browsing is to let an AI model navigate web pages through Playwright, a browser automation library. The model, in this case an Azure OpenAI model, sends an instruction to the MCP server to navigate to a specific URL. The server then uses Playwright to control a web browser and load the requested page, which gives the model access to web content in a controlled and automated manner. The playwright_navigate tool, exposed through the MCP server, is the primary mechanism for this interaction.

For example, an AI assistant could use this feature to research a topic by navigating to relevant websites, gathering information, and summarizing the content for the user. It could also verify information, such as the current price of a product on an e-commerce site. The tool accepts a URL as input, plus optional timeout and wait_until parameters that control page loading behavior.
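The inputs described above can be sketched as a small argument container that validates a request before it reaches the browser. This is an illustrative sketch, not the project's code: the `NavigateArgs` class, its defaults, and the `goto_kwargs` helper are hypothetical names. The allowed `wait_until` values and the millisecond timeout mirror what Playwright's `page.goto()` accepts.

```python
from dataclasses import dataclass
from urllib.parse import urlparse

# Values that Playwright's page.goto() accepts for wait_until.
WAIT_UNTIL_VALUES = frozenset({"commit", "domcontentloaded", "load", "networkidle"})


@dataclass(frozen=True)
class NavigateArgs:
    """Hypothetical container for the playwright_navigate inputs."""

    url: str
    timeout: float = 30_000      # milliseconds; assumed default
    wait_until: str = "load"     # assumed default

    def __post_init__(self):
        parsed = urlparse(self.url)
        # Reject anything that is not an absolute http(s) URL.
        if parsed.scheme not in ("http", "https") or not parsed.netloc:
            raise ValueError(f"not an absolute http(s) URL: {self.url!r}")
        if self.timeout < 0:
            raise ValueError("timeout must be non-negative")
        if self.wait_until not in WAIT_UNTIL_VALUES:
            raise ValueError(f"unsupported wait_until: {self.wait_until!r}")

    def goto_kwargs(self) -> dict:
        """Keyword arguments in the shape page.goto(url, **kwargs) expects."""
        return {"timeout": self.timeout, "wait_until": self.wait_until}
```

With Playwright installed, a server-side handler could then call `page.goto(args.url, **args.goto_kwargs())`. Validating first keeps malformed model output from reaching the browser.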

OpenAI Function Calling Integration

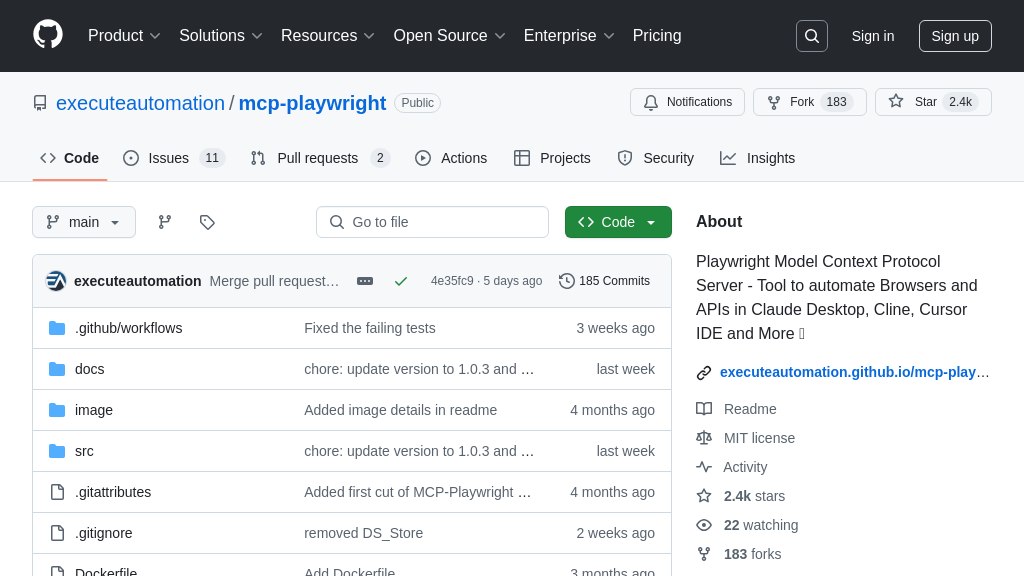

This MCP solution integrates with the OpenAI function calling format, so developers can use OpenAI models with structured tool interactions. The MCP server exposes its tools, such as playwright_navigate, with descriptions and input schemas that are compatible with OpenAI's function calling mechanism. This lets the model decide when and how to use the web browsing capabilities. The MCP-LLM Bridge is the component that converts MCP responses into the OpenAI function calling format.
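The conversion can be sketched as a plain dictionary mapping. An MCP tool listing carries `name`, `description`, and `inputSchema` fields, while the OpenAI tools format nests the same information under `function` with the schema under `parameters`. The function name `mcp_tool_to_openai` and the example descriptor are illustrative assumptions, not the bridge's actual code.

```python
def mcp_tool_to_openai(tool: dict) -> dict:
    """Map one MCP tool descriptor to an OpenAI function calling tool entry."""
    return {
        "type": "function",
        "function": {
            "name": tool["name"],
            "description": tool.get("description", ""),
            # MCP calls the JSON Schema "inputSchema"; OpenAI calls it "parameters".
            "parameters": tool.get(
                "inputSchema", {"type": "object", "properties": {}}
            ),
        },
    }


# Illustrative descriptor; the real schema served by the project may differ.
MCP_TOOL = {
    "name": "playwright_navigate",
    "description": "Navigate the browser to a URL.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "url": {"type": "string"},
            "timeout": {"type": "number"},
            "wait_until": {"type": "string"},
        },
        "required": ["url"],
    },
}

openai_tools = [mcp_tool_to_openai(MCP_TOOL)]
```

The resulting `openai_tools` list has the shape expected by the `tools` argument of a chat completion request.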

Consider a scenario where a user asks an AI model to "find the latest news about AI safety and summarize the key points." The AI model, recognizing the need for web access, can use the function calling mechanism to invoke the playwright_navigate tool with relevant search URLs. The server navigates to these pages, extracts the content, and returns it to the AI model, which then summarizes the key points for the user. This integration simplifies the development process and allows developers to build more sophisticated AI applications.
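The round trip in this scenario can be sketched as a dispatcher: the model emits a tool call whose arguments are a JSON string, the client routes it to the matching tool, and the result goes back as a `tool` role message. The `fake_navigate` stub stands in for the real browser call, and `TOOLS` and `handle_tool_call` are hypothetical names used only for illustration.

```python
import json


def fake_navigate(url, timeout=30_000, wait_until="load"):
    """Stub standing in for the real browser navigation."""
    return f"Navigated to {url}"


# Registry of callable tools, keyed by the name the model sees.
TOOLS = {"playwright_navigate": fake_navigate}


def handle_tool_call(tool_call: dict) -> dict:
    """Run one model-issued tool call and build the reply message."""
    function = tool_call["function"]
    handler = TOOLS.get(function["name"])
    if handler is None:
        content = f"Unknown tool: {function['name']}"
    else:
        # The model supplies arguments as a JSON-encoded string.
        content = handler(**json.loads(function["arguments"]))
    return {
        "role": "tool",
        "tool_call_id": tool_call["id"],
        "content": content,
    }
```

Appending the returned message to the conversation and calling the model again lets it produce the final summary from the tool output.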

Secure and Controlled Web Access

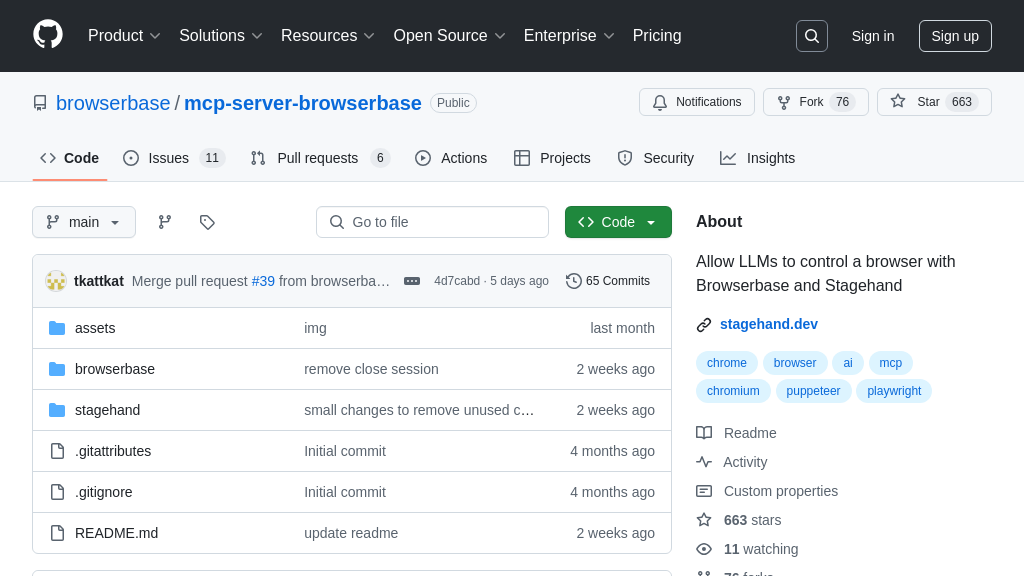

mcp-aoai-web-browsing provides a controlled way for AI models to access the web. The Model Context Protocol (MCP) separates the AI model from the web browsing environment: the model never drives the browser directly and can only request actions through the tools the server exposes. This places the MCP server in a gatekeeper position, where it can control which URLs the model may access and limit the potential for malicious activity. That separation matters most when dealing with sensitive data or untrusted AI models.

For instance, in a financial application, the AI model might need to access stock prices or financial news. By using mcp-aoai-web-browsing, the application can ensure that the AI model only accesses authorized websites and that all interactions are logged and monitored. The use of FastMCP further enhances security by providing a robust and efficient server implementation.
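One way such a policy could look is a host allowlist that is checked and logged before any navigation happens. This is a sketch under assumptions: the source does not confirm that the project ships an allowlist, and `ALLOWED_HOSTS`, `is_allowed`, and the logger name are hypothetical.

```python
import logging
from urllib.parse import urlparse

logger = logging.getLogger("navigation_audit")

# Hypothetical allowlist; a real deployment would load this from configuration.
ALLOWED_HOSTS = frozenset({"example.com", "news.example.org"})


def is_allowed(url: str, allowed_hosts=ALLOWED_HOSTS) -> bool:
    """Return True if url is http(s) and its host is on the allowlist."""
    parsed = urlparse(url)
    host = (parsed.hostname or "").lower()
    allowed = parsed.scheme in ("http", "https") and any(
        # Accept the listed host itself or any of its subdomains.
        host == entry or host.endswith("." + entry)
        for entry in allowed_hosts
    )
    # Every decision is logged so navigation attempts can be audited.
    logger.info("navigate url=%s allowed=%s", url, allowed)
    return allowed
```

Matching on the parsed hostname rather than on a substring of the URL avoids accepting lookalike hosts such as `example.com.evil.test`.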

Custom MCP-LLM Bridge for Stability

The solution incorporates a customized MCP-LLM Bridge implementation designed to keep the connection between the MCP server and the AI model stable. The bridge maintains consistent communication and helps prevent disruptions during long-running tasks. The server object is passed directly into the bridge, so the bridge holds its own reference to the server and always has access to the server's tools.
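The idea of handing the server object to the bridge can be sketched with a stub. Everything here is hypothetical: `StubServer` stands in for the real server object, and `Bridge`, `tool_names`, and `execute` are illustrative names rather than the project's actual classes.

```python
import asyncio


class StubServer:
    """Stand-in for an in-process MCP server object (hypothetical)."""

    async def list_tools(self):
        return [{"name": "playwright_navigate"}]

    async def call_tool(self, name, arguments):
        return f"{name} called with {arguments}"


class Bridge:
    """Holds the server object itself, so no lookup or reconnect is needed."""

    def __init__(self, server):
        self._server = server

    async def tool_names(self):
        return [tool["name"] for tool in await self._server.list_tools()]

    async def execute(self, name, arguments):
        return await self._server.call_tool(name, arguments)


async def demo():
    bridge = Bridge(StubServer())
    names = await bridge.tool_names()
    result = await bridge.execute(
        "playwright_navigate", {"url": "https://example.com"}
    )
    return names, result
```

Because the bridge keeps the reference it was constructed with, the server stays reachable for as long as the bridge exists.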

In a real-world scenario, this stability is essential for tasks such as continuous monitoring of web pages or automated data extraction. Without a stable connection, the AI model might lose its connection to the web browsing environment, leading to incomplete or inaccurate results. The custom MCP-LLM Bridge helps to mitigate this risk and ensures that the AI model can reliably interact with the web over extended periods.