all-in-one-model-context-protocol

MyMCP Server: All-in-one MCP server with AI search and multi-service integrations for enhanced development workflows.

all-in-one-model-context-protocol Solution Overview

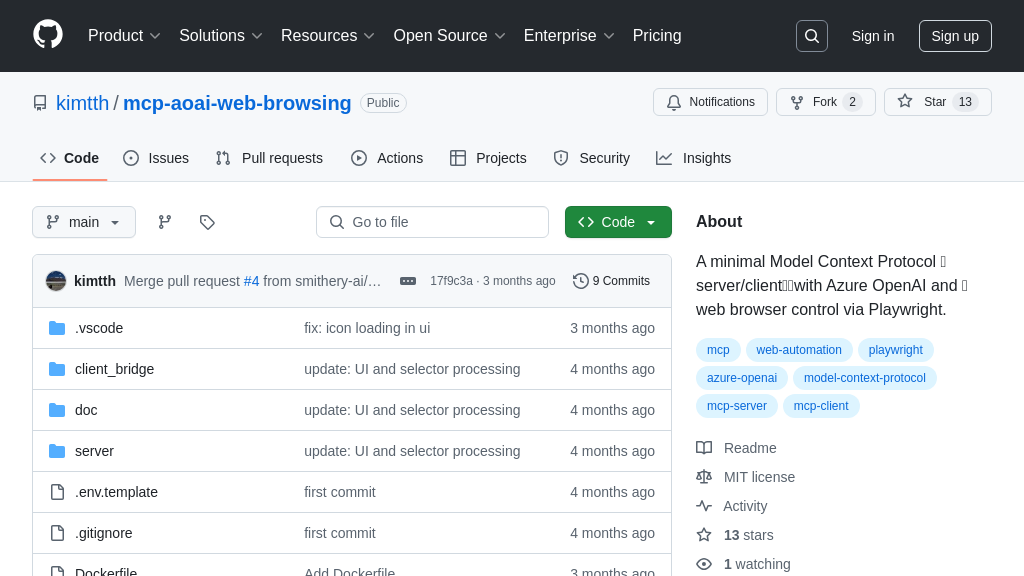

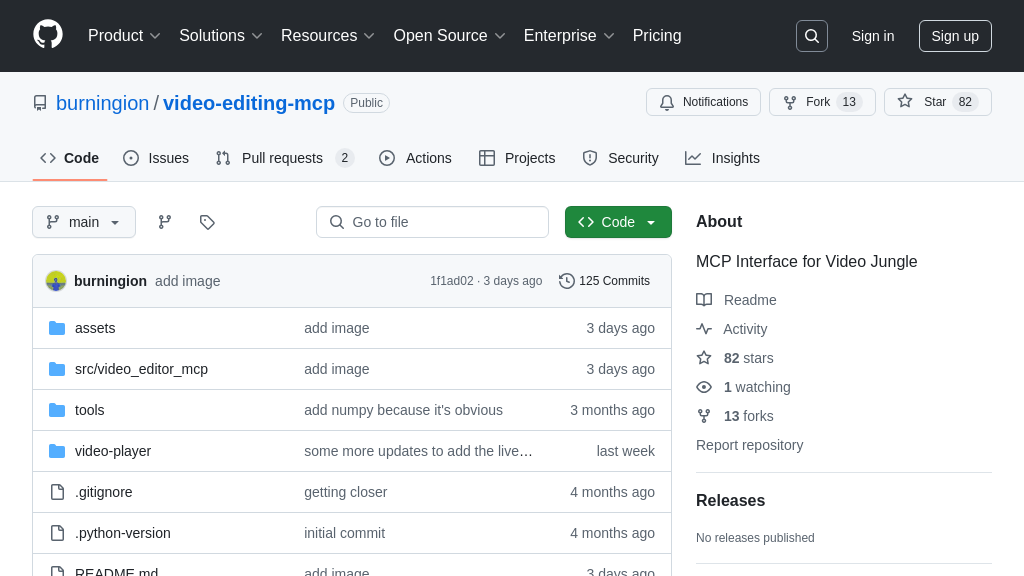

All-in-one-model-context-protocol is an MCP server that acts as a central hub for AI-enhanced development workflows. It integrates with popular services such as GitLab, Jira, Confluence, and YouTube, and offers AI-powered search and a suite of developer tools.

Key features include connecting AI models to real-time information via web search, managing project tasks in Jira, accessing code repositories in GitLab, and retrieving content from Confluence and YouTube. It also provides advanced reasoning capabilities through DeepSeek AI and supports Retrieval-Augmented Generation (RAG) for enhanced context.

Developers can use this server to build AI applications that understand and interact with their existing development ecosystem. The server can be installed through Smithery or manually with Go. By enabling only specific tool groups, developers can tailor the server to their needs. In this way the MCP server gives AI models access to a wide range of external data and services, supporting more automated development processes.

all-in-one-model-context-protocol Key Capabilities

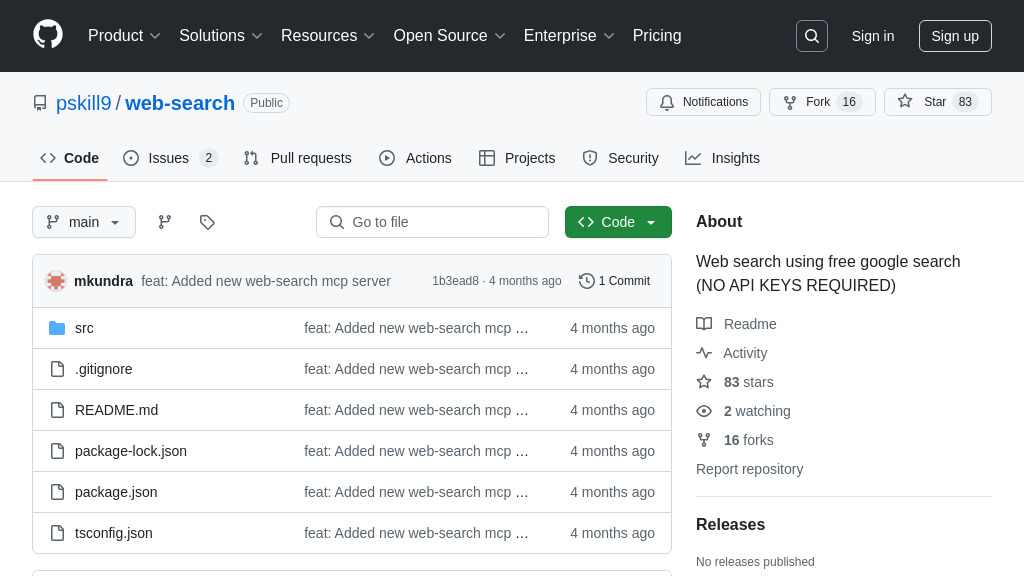

AI-Powered Unified Search

The all-in-one-model-context-protocol provides centralized, AI-driven search across integrated services such as GitLab, Jira, and Confluence. Users can find information scattered across these platforms with natural language queries instead of searching each platform individually. The AI component interprets the context of the query and retrieves the most pertinent results. This appears to work by indexing content from these services and using AI models to match user queries semantically.

For example, a developer can ask, "Find all Jira issues related to the recent authentication bug that John reported," and the system will return the relevant Jira issues, and can also surface related Confluence documentation and GitLab commits, even if the query terms don't exactly match the content in each system. This unified search streamlines information retrieval. The underlying technology likely involves vector embeddings and similarity search, potentially leveraging Qdrant for vector storage and retrieval.
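The similarity-search idea can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the `embed` function is a toy bag-of-words stand-in for a real embedding model, the documents are invented, and the plain list stands in for a vector store such as Qdrant. It is not the server's actual implementation.

```python
import math
from collections import Counter


def embed(text):
    """Toy stand-in for an embedding model: lowercase bag-of-words counts."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# Hypothetical content pulled from the integrated services.
DOCS = [
    {"source": "jira", "text": "login fails with expired token authentication bug"},
    {"source": "confluence", "text": "authentication flow and token refresh design"},
    {"source": "gitlab", "text": "fix token expiry check in authentication middleware"},
    {"source": "jira", "text": "update footer colors on marketing page"},
]


def search(query, docs, top_k=3):
    """Rank documents from all sources by similarity to the query."""
    q = embed(query)
    scored = [(cosine(q, embed(d["text"])), d) for d in docs]
    hits = [(score, d) for score, d in scored if score > 0]
    hits.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in hits[:top_k]]


results = search("authentication token bug", DOCS)
for doc in results:
    print(doc["source"], "-", doc["text"])
```

A single ranked list mixes results from every source, which is the point of unified search. A bag-of-words vector only matches shared terms; a real embedding model would also match paraphrases.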

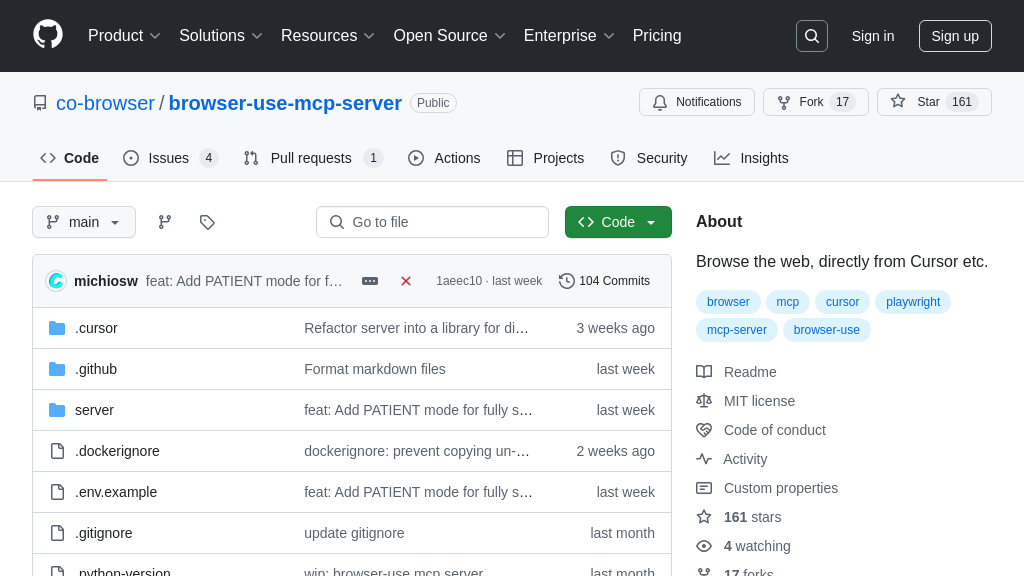

Multi-Service Integration Hub

This MCP solution acts as a central hub that integrates with popular development and collaboration tools such as GitLab, Jira, Confluence, and YouTube. AI models can access and use data from these sources, which gives them broader context for reasoning and decision-making. By connecting these services, the all-in-one-model-context-protocol reduces data silos and lets AI perform tasks that would otherwise require manually gathering and processing data from multiple platforms.

For instance, an AI model can generate release notes automatically by pulling commit messages from GitLab, summarizing resolved Jira issues, and linking relevant Confluence documentation. This lets AI automate workflows that span multiple systems. Each service is accessed with its own API key or token, allowing secure and authorized access to data.
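The release-notes scenario can be sketched as a small aggregation step. The data classes, field names, sample records, and the `example.com` URL below are all hypothetical; in practice the records would come from the GitLab, Jira, and Confluence tools rather than being hard-coded.

```python
from dataclasses import dataclass


@dataclass
class Commit:
    sha: str
    message: str


@dataclass
class Issue:
    key: str
    summary: str
    status: str


@dataclass
class Page:
    title: str
    url: str


def build_release_notes(version, commits, issues, pages):
    """Combine data from three sources into one plain-text document."""
    lines = [f"Release {version}", "", "Changes:"]
    lines += [f"- {c.message} ({c.sha[:7]})" for c in commits]
    lines += ["", "Resolved issues:"]
    # Only issues marked as done belong in the notes.
    lines += [f"- {i.key}: {i.summary}" for i in issues if i.status == "Done"]
    lines += ["", "Documentation:"]
    lines += [f"- {p.title}: {p.url}" for p in pages]
    return "\n".join(lines)


# Hypothetical sample data standing in for real service responses.
notes = build_release_notes(
    "1.4.0",
    [Commit("a1b2c3d4e5", "Fix token expiry check")],
    [
        Issue("PROJ-1", "Login fails with expired token", "Done"),
        Issue("PROJ-2", "Redesign settings page", "In Progress"),
    ],
    [Page("Authentication design", "https://example.com/wiki/auth")],
)
print(notes)
```

Keeping the aggregation a pure function of already-fetched records separates credential handling from formatting, which makes the formatting step easy to test.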

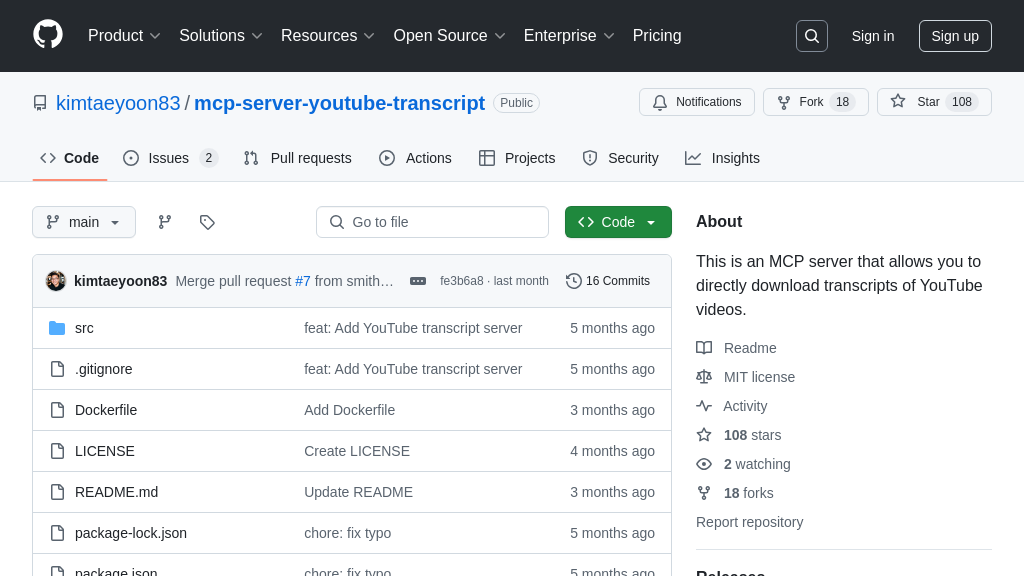

RAG-Enabled Knowledge Retrieval

The all-in-one-model-context-protocol incorporates Retrieval-Augmented Generation (RAG) capabilities, enabling AI models to access and utilize external knowledge sources to enhance their responses. This feature allows the system to index and search through documents, files, and other content, providing AI models with the context needed to answer questions accurately and comprehensively. The RAG implementation supports creating, deleting, listing, and searching memory collections, allowing users to manage their knowledge base effectively.

For example, a data scientist can use the RAG functionality to index a collection of research papers and then ask the AI model to summarize the latest findings on a specific topic. The AI model will retrieve relevant information from the indexed papers and generate a summary based on the retrieved content. This RAG capability enhances the AI model's ability to provide informed and context-aware responses. The implementation likely uses vector databases like Qdrant to store and retrieve the indexed content efficiently.
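The collection operations named above (create, delete, list, search) and the retrieve-then-generate flow can be sketched as follows. This is a minimal in-memory illustration under stated assumptions: the `MemoryStore` class, its method names, and the keyword-overlap scoring are hypothetical stand-ins, not the server's actual tools or a real vector database.

```python
class MemoryStore:
    """In-memory stand-in for a set of named memory collections."""

    def __init__(self):
        self._collections = {}

    def create_collection(self, name):
        if name in self._collections:
            raise ValueError(f"collection {name!r} already exists")
        self._collections[name] = []

    def delete_collection(self, name):
        del self._collections[name]

    def list_collections(self):
        return sorted(self._collections)

    def index(self, name, text):
        self._collections[name].append(text)

    def search(self, name, query, top_k=2):
        """Rank passages by shared words (a real system would use vectors)."""
        terms = set(query.lower().split())
        scored = [
            (len(terms & set(doc.lower().split())), doc)
            for doc in self._collections[name]
        ]
        hits = [(score, doc) for score, doc in scored if score > 0]
        hits.sort(key=lambda pair: pair[0], reverse=True)
        return [doc for _, doc in hits[:top_k]]


def build_prompt(question, passages):
    """Augment the question with retrieved passages before generation."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


store = MemoryStore()
store.create_collection("papers")
store.index("papers", "sparse attention reduces inference latency on long inputs")
store.index("papers", "dense retrieval improves recall for open domain questions")
store.index("papers", "attention pruning trades accuracy for speed")

passages = store.search("papers", "sparse attention latency")
prompt = build_prompt("What reduces latency?", passages)
print(prompt)
```

The prompt produced by `build_prompt` is what would be handed to the language model; the generation step itself is omitted here.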

Tool Management & Orchestration

The solution provides a tool_manager that lets users dynamically enable or disable specific tools within the MCP ecosystem, giving granular control over the capabilities exposed to AI models. By enabling only the tools that are needed, users limit the AI's access to specific functionality and data sources, which improves security and customization. The tool_use_plan tool complements this by letting the AI create a plan for solving a given request with the available tools in a coordinated manner.

For example, an administrator can disable the gmail_send_message tool to prevent the AI from sending emails, while enabling the jira_create_issue tool to allow it to create Jira issues. This fine-grained control ensures that the AI operates within predefined boundaries and adheres to security policies. The tool management system likely uses a configuration file or database to store the enabled/disabled status of each tool.
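The enable/disable behavior described above can be sketched with a small registry. The tool names `gmail_send_message` and `jira_create_issue` come from the text; the `ToolManager` class, its methods, and the stub handler are hypothetical and only illustrate the gating idea, not how the server stores tool state.

```python
class ToolManager:
    """Tracks which tools are enabled and gates calls accordingly."""

    def __init__(self, tool_names):
        # Every registered tool starts enabled in this sketch.
        self._enabled = {name: True for name in tool_names}

    def set_enabled(self, name, enabled):
        if name not in self._enabled:
            raise KeyError(f"unknown tool: {name}")
        self._enabled[name] = enabled

    def is_enabled(self, name):
        return self._enabled.get(name, False)

    def available(self):
        """Names of tools that would be exposed to the AI model."""
        return sorted(n for n, on in self._enabled.items() if on)

    def call(self, name, handler, *args):
        """Run a tool handler only if the tool is currently enabled."""
        if not self.is_enabled(name):
            raise PermissionError(f"tool {name!r} is disabled")
        return handler(*args)


manager = ToolManager(["gmail_send_message", "jira_create_issue"])
manager.set_enabled("gmail_send_message", False)


def create_issue(summary):
    # Stub handler; a real one would call the Jira API.
    return f"created: {summary}"


outcome = manager.call("jira_create_issue", create_issue, "Fix login bug")
print(manager.available(), outcome)
```

Checking the flag at call time, rather than only when listing tools, means a disabled tool stays blocked even if a model requests it by name.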