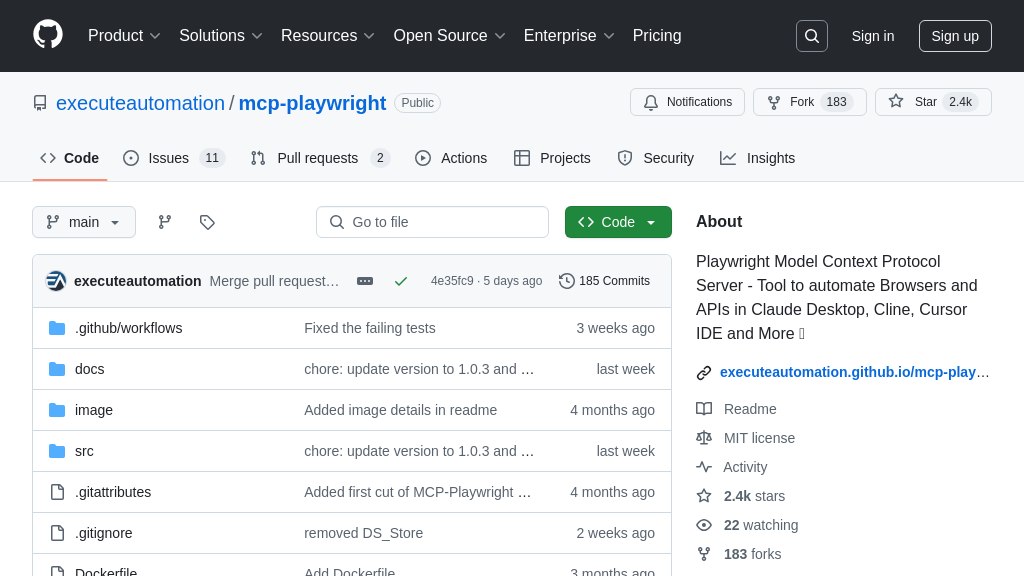

any-chat-completions-mcp

Integrate any OpenAI SDK-compatible Chat Completion API with Claude using this MCP server. Enhance Claude with diverse AI chat models.

any-chat-completions-mcp Solution Overview

any-chat-completions-mcp is an MCP (Model Context Protocol) server that connects OpenAI SDK-compatible chat completion APIs, such as Perplexity, Groq, and xAI, to MCP clients like Claude Desktop. Written in TypeScript, it lets developers extend Claude's capabilities by connecting it to a wider range of AI Chat Providers.

At its core is the chat tool, which relays a user's question to a configured AI Chat Provider. By editing the Claude Desktop configuration file, developers can add multiple providers, each exposed as a distinct tool within the Claude interface. This reduces vendor lock-in and lets users draw on the strengths of different models.

The key value lies in its flexibility and ease of integration. Developers can try different AI models by changing configuration rather than code, which makes it quick to connect Claude to a preferred chat completion API. The server communicates with the client over stdio and can be debugged with the MCP Inspector.

any-chat-completions-mcp Key Capabilities

Universal Chat API Integration

The any-chat-completions-mcp server's core function is to bridge Claude with any Chat Completion API that is compatible with the OpenAI SDK. This allows Claude users to access a multitude of AI models beyond Anthropic's own, including models from OpenAI, Perplexity, Groq, and others, without requiring direct integration by Anthropic. The server acts as an intermediary, translating Claude's requests into a format understood by the target API and relaying the response back to Claude. This is achieved through the chat tool, which takes a user's question and forwards it to the configured AI Chat Provider.
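The relay step described above can be sketched as two small functions: one that turns a question into an OpenAI-style chat completion request, and one that pulls the answer out of the response. This is a minimal illustration, not the server's actual source. The names `ProviderConfig`, `buildChatRequest`, and `extractAnswer` are hypothetical; the `/chat/completions` path, the `Bearer` authorization header, and the `choices[0].message.content` response field follow the OpenAI chat completions convention that compatible providers are assumed to share.

```typescript
// Settings for one AI Chat Provider (hypothetical shape for this sketch).
interface ProviderConfig {
  baseUrl: string; // the provider's OpenAI-compatible API root
  apiKey: string;
  model: string;
}

// A transport-agnostic description of the HTTP call to make.
interface HttpRequest {
  url: string;
  method: "POST";
  headers: Record<string, string>;
  body: string;
}

// Build the request that forwards a single user question to the provider.
function buildChatRequest(config: ProviderConfig, question: string): HttpRequest {
  return {
    // Strip trailing slashes so the joined URL has exactly one separator.
    url: `${config.baseUrl.replace(/\/+$/, "")}/chat/completions`,
    method: "POST",
    headers: {
      "Content-Type": "application/json",
      Authorization: `Bearer ${config.apiKey}`,
    },
    body: JSON.stringify({
      model: config.model,
      messages: [{ role: "user", content: question }],
    }),
  };
}

// Pull the assistant's text out of a parsed chat completion response body.
function extractAnswer(responseBody: unknown): string {
  const body = responseBody as
    | { choices?: { message?: { content?: unknown } }[] }
    | null
    | undefined;
  const content = body?.choices?.[0]?.message?.content;
  if (typeof content !== "string") {
    throw new Error("Response did not contain choices[0].message.content");
  }
  return content;
}
```

Sending the request is left to whichever HTTP client is in use. Keeping the two functions pure means that switching providers changes only the `ProviderConfig` values, never the request or response handling.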

For example, a user might want to compare the responses of GPT-4o and Llama-3 on a particular query. By configuring two instances of the any-chat-completions-mcp server, one for each model, the user can easily switch between them within the Claude interface and evaluate their performance side-by-side. This is configured by setting different environment variables for each server instance, specifying the API key, name, model, and base URL for each provider.
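As a rough illustration of the two-instance setup, the sketch below builds the `mcpServers` section of claude_desktop_config.json as a TypeScript object and serializes it to JSON. Several details are assumptions rather than facts from this page: the variable names `AI_CHAT_NAME` and `AI_CHAT_BASE_URL`, the launch command and path, and every key, model id, and URL marked as a placeholder. Only `AI_CHAT_KEY` and `AI_CHAT_MODEL` are named in the text.

```typescript
interface ProviderSettings {
  serverKey: string; // key under "mcpServers"; any unique label works
  displayName: string;
  model: string;
  baseUrl: string;
  apiKey: string;
}

interface ServerEntry {
  command: string;
  args: string[];
  env: Record<string, string>;
}

// Placeholder launch command and path; adjust to the local install.
const SERVER_COMMAND = "node";
const SERVER_ARGS = ["/path/to/any-chat-completions-mcp/build/index.js"];

// One server instance per provider, differing only in its environment.
function toServerEntry(p: ProviderSettings): ServerEntry {
  return {
    command: SERVER_COMMAND,
    args: SERVER_ARGS.slice(),
    env: {
      AI_CHAT_KEY: p.apiKey,
      AI_CHAT_NAME: p.displayName, // assumed variable name
      AI_CHAT_MODEL: p.model,
      AI_CHAT_BASE_URL: p.baseUrl, // assumed variable name
    },
  };
}

function buildDesktopConfig(
  providers: ProviderSettings[]
): { mcpServers: Record<string, ServerEntry> } {
  const mcpServers: Record<string, ServerEntry> = {};
  providers.forEach((p) => {
    mcpServers[p.serverKey] = toServerEntry(p);
  });
  return { mcpServers };
}

const desktopConfig = buildDesktopConfig([
  {
    serverKey: "chat-gpt-4o",
    displayName: "GPT-4o",
    model: "gpt-4o",
    baseUrl: "https://api.openai.com/v1",
    apiKey: "YOUR_OPENAI_KEY", // placeholder
  },
  {
    serverKey: "chat-llama-3",
    displayName: "Llama-3",
    model: "YOUR_LLAMA_3_MODEL_ID", // placeholder; depends on the provider
    baseUrl: "https://YOUR_PROVIDER_BASE_URL/v1", // placeholder
    apiKey: "YOUR_PROVIDER_KEY", // placeholder
  },
]);

// Text suitable for pasting into claude_desktop_config.json.
const desktopConfigJson = JSON.stringify(desktopConfig, null, 2);
```

Each entry launches the same server program, so adding a third model means adding one more item to the list.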

Simplified Model Access

This MCP server simplifies the process of accessing and utilizing various AI models. Instead of developers needing to implement custom integrations for each AI provider, they can leverage the standardized MCP interface provided by any-chat-completions-mcp. This reduces the complexity and time required to incorporate different AI models into Claude workflows. The server abstracts away the specific API details of each provider, presenting a unified interface to Claude.

Consider a developer who wants to use a specialized AI model for a specific task, such as code generation or creative writing. With any-chat-completions-mcp, they can configure a new server instance pointing to the relevant API endpoint and start using the model within Claude. No custom integration code is needed: the API key and endpoint are set once in the server's environment variables rather than handled in each conversation.

Dynamic Provider Configuration

The any-chat-completions-mcp server is configured through environment variables, so users can change providers, models, and API keys without modifying the server's code. The variables are set in the claude_desktop_config.json file, where multiple server instances can be defined, each with its own environment. This flexibility lets users experiment with different AI models and pick the one that performs best for a given task.

For instance, a user might want a fast, low-cost model for simple queries and a more powerful but more expensive model for complex tasks. By defining multiple server instances with different AI_CHAT_MODEL and AI_CHAT_KEY environment variables, they can choose the appropriate model for each task from within Claude, keeping costs in line with the difficulty of the work.
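A minimal sketch of how a server process might read and validate this configuration at startup follows. It is an illustration under stated assumptions, not the project's actual code: `readProviderEnv` and `ChatProviderEnv` are hypothetical names, and only the two variables named above are checked. The environment is passed in as a plain record so the function can be exercised without a real process environment.

```typescript
interface ChatProviderEnv {
  apiKey: string;
  model: string;
}

type Env = Record<string, string | undefined>;

// Read the provider settings from an environment record and fail early,
// naming every missing variable, instead of failing on the first request.
function readProviderEnv(env: Env): ChatProviderEnv {
  const apiKey = env["AI_CHAT_KEY"];
  const model = env["AI_CHAT_MODEL"];

  const missing: string[] = [];
  if (!apiKey) missing.push("AI_CHAT_KEY");
  if (!model) missing.push("AI_CHAT_MODEL");

  if (!apiKey || !model) {
    throw new Error(`Missing environment variables: ${missing.join(", ")}`);
  }
  return { apiKey, model };
}

// Two instances of the same server differ only in their environment.
// The model ids and keys below are placeholders.
const fastInstance = readProviderEnv({
  AI_CHAT_KEY: "KEY_FOR_FAST_PROVIDER",
  AI_CHAT_MODEL: "small-fast-model",
});
const strongInstance = readProviderEnv({
  AI_CHAT_KEY: "KEY_FOR_STRONG_PROVIDER",
  AI_CHAT_MODEL: "large-capable-model",
});
```

In a Node.js process, the same function could be called with `process.env`.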

Integration Advantages

The any-chat-completions-mcp server integrates with the Claude desktop application through the Model Context Protocol. Because it adheres to the MCP standard, the server can be added to Claude's configuration, allowing users to access external AI models directly within the Claude interface without switching between applications. The server communicates with Claude over standard input/output (stdio), so traffic between the two stays between local processes on the user's machine.
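To make the stdio exchange concrete, the sketch below frames a tool call the way the MCP stdio transport does: each message is a JSON-RPC 2.0 object written as a single line of JSON terminated by a newline. The tool name `chat` and the argument key `content` are assumptions for illustration, and the helper names are hypothetical. A real client would also perform the protocol's initialization handshake first, which is omitted here.

```typescript
interface ToolCallRequest {
  jsonrpc: "2.0";
  id: number;
  method: "tools/call";
  params: { name: string; arguments: Record<string, unknown> };
}

function makeToolCall(
  id: number,
  toolName: string,
  args: Record<string, unknown>
): ToolCallRequest {
  return {
    jsonrpc: "2.0",
    id,
    method: "tools/call",
    params: { name: toolName, arguments: args },
  };
}

// One message per line. JSON.stringify escapes newlines inside strings,
// so the encoded message never contains a raw line break.
function encodeLine(message: ToolCallRequest): string {
  return JSON.stringify(message) + "\n";
}

// Split a chunk of complete lines back into messages, skipping blank lines.
function decodeLines(chunk: string): ToolCallRequest[] {
  return chunk
    .split("\n")
    .filter((line) => line.trim().length > 0)
    .map((line) => JSON.parse(line) as ToolCallRequest);
}

// Hypothetical tool name and argument key.
const exampleCall = makeToolCall(1, "chat", {
  content: "Summarize this in one sentence:\nMCP connects tools to models.",
});
const exampleLine = encodeLine(exampleCall);
```

The question text contains a line break, but the encoded message still occupies exactly one line, which is what lets the reader on the other side of the pipe split the stream on newlines.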

The integration process involves adding a server configuration to the claude_desktop_config.json file, specifying the command that starts the server and the necessary environment variables. Once configured, the server appears as a tool within the Claude desktop application, allowing users to interact with the external AI model as if it were a native Claude feature. External AI models become usable from the same interface as the rest of the Claude ecosystem.