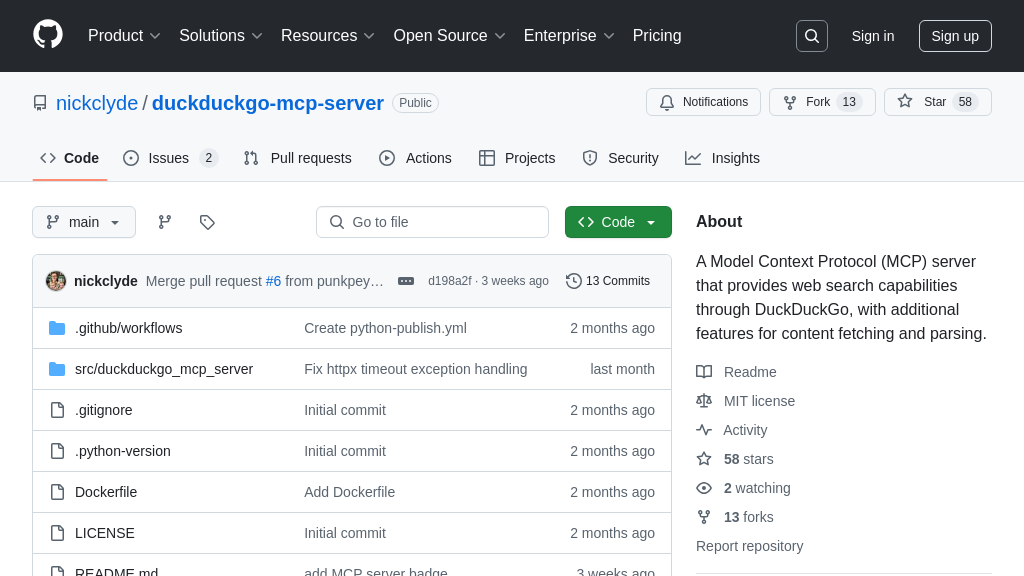

duckduckgo-mcp-server

An MCP (Model Context Protocol) server that gives AI models DuckDuckGo web search, with webpage content fetching and rate limiting.

duckduckgo-mcp-server Solution Overview

The DuckDuckGo Search MCP Server gives AI models real-time web search. As an MCP server, it connects models to the DuckDuckGo search engine so they can retrieve up-to-date information. It provides two tools, one for web searching and one for fetching webpage content, and parses fetched pages into clean text that LLMs can consume easily.

Its key features are rate limiting to avoid service disruptions, error handling, and LLM-friendly output formatting, so that models receive clean, relevant data. With this server, developers can let their models search the web, extract information from web pages, and base decisions on current data. It can be installed via Smithery or with uv, which makes it easy to add to an existing MCP setup.

duckduckgo-mcp-server Key Capabilities

Web Search via DuckDuckGo

The duckduckgo-mcp-server lets AI models run web searches through the DuckDuckGo search engine, giving them access to real-time information beyond their training data. The server handles the interaction with DuckDuckGo: it formats the search query and parses the results into a structured format suited to large language models (LLMs). Parsing includes removing ads, cleaning redirect URLs, and truncating long content.
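The parsing step can be sketched as follows. This sketch assumes the HTML scraping has already produced raw `(title, link, snippet)` tuples, that ad results link through a DuckDuckGo `y.js` endpoint, and that redirect links take the form `//duckduckgo.com/l/?uddg=<percent-encoded target>`. The `SearchResult` class and the function names are hypothetical, not the server's actual code.

```python
from __future__ import annotations

from dataclasses import dataclass
from urllib.parse import parse_qs, urlparse


@dataclass
class SearchResult:
    # Hypothetical structured result handed to the LLM.
    title: str
    link: str
    snippet: str
    position: int


def is_ad(link: str) -> bool:
    # Assumed heuristic: ad clicks are routed through DuckDuckGo's y.js endpoint.
    return "duckduckgo.com/y.js" in link


def clean_redirect_url(link: str) -> str:
    """Unwrap a DuckDuckGo redirect link into the target URL; return other links unchanged."""
    # Protocol-relative links ("//duckduckgo.com/...") need a scheme for urlparse.
    parsed = urlparse("https:" + link if link.startswith("//") else link)
    if parsed.netloc.endswith("duckduckgo.com") and parsed.path == "/l/":
        target = parse_qs(parsed.query).get("uddg")
        if target:
            return target[0]  # parse_qs has already percent-decoded the value
    return link


def parse_results(raw: list[tuple[str, str, str]], max_results: int = 10) -> list[SearchResult]:
    """Drop ads, clean links and whitespace, and number the remaining results."""
    results: list[SearchResult] = []
    for title, link, snippet in raw:
        if is_ad(link):
            continue
        results.append(
            SearchResult(title.strip(), clean_redirect_url(link), snippet.strip(), len(results) + 1)
        )
        if len(results) >= max_results:
            break
    return results
```

Positions are assigned after ads are filtered out, so the model sees a contiguous 1..n numbering.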

For example, an AI model tasked with summarizing current events could use this feature to search for the latest news articles on a specific topic. The server would return a list of relevant search results, including titles, URLs, and snippets, which the model could then use to generate a concise summary. The search tool limits requests to 30 per minute.
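Per-minute limits like these (30 for search here, and 20 for content fetching) can be enforced with a sliding-window limiter that remembers when recent requests were accepted. The sketch below is synchronous and uses hypothetical names (`SlidingWindowRateLimiter`, `try_acquire`, `LIMITERS`); the server itself may implement its limits differently, for example asynchronously.

```python
from __future__ import annotations

import time
from collections import deque


class SlidingWindowRateLimiter:
    """Hypothetical limiter: allow at most `max_requests` calls in any rolling window."""

    def __init__(self, max_requests: int, window_seconds: float = 60.0) -> None:
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._timestamps: deque[float] = deque()  # accepted request times, oldest first

    def _evict(self, now: float) -> None:
        # Forget requests that have fallen out of the window.
        while self._timestamps and now - self._timestamps[0] >= self.window_seconds:
            self._timestamps.popleft()

    def try_acquire(self, now: float | None = None) -> bool:
        """Record the request and return True if it fits in the window; otherwise return False."""
        now = time.monotonic() if now is None else now
        self._evict(now)
        if len(self._timestamps) < self.max_requests:
            self._timestamps.append(now)
            return True
        return False

    def seconds_until_available(self, now: float | None = None) -> float:
        """Return how long a caller must wait before the next request would be accepted."""
        now = time.monotonic() if now is None else now
        self._evict(now)
        if len(self._timestamps) < self.max_requests:
            return 0.0
        return self._timestamps[0] + self.window_seconds - now


# Per-tool limits as stated in this document; the dictionary keys are illustrative.
LIMITERS = {
    "search": SlidingWindowRateLimiter(max_requests=30),
    "fetch_content": SlidingWindowRateLimiter(max_requests=20),
}
```

Before each tool call, a caller would check `try_acquire()`; on `False` it can either sleep for `seconds_until_available()` or return an error to the model. A sliding window avoids the burst that a fixed per-minute counter allows at the boundary between two minutes.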

Content Fetching and Parsing

Beyond returning search results, the duckduckgo-mcp-server can fetch and parse the content of webpages, so AI models can dig into the pages found through a search and extract relevant data from them. The server extracts a page's main text content and strips HTML tags and formatting, returning clean, readable text. This is particularly useful for tasks such as question answering, where the model needs to find specific information within a document.
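The extraction step can be approximated with Python's standard-library `html.parser`. This sketch assumes that dropping `script`, `style`, `nav`, `header`, `footer`, and `noscript` elements and collapsing whitespace is a reasonable stand-in for "main text"; the server's own parser and rules may differ, and `extract_main_text` is a hypothetical name.

```python
from __future__ import annotations

import re
from html.parser import HTMLParser


class _MainTextExtractor(HTMLParser):
    # Elements whose contents are treated as non-content (an assumption of this sketch).
    SKIP_TAGS = {"script", "style", "nav", "header", "footer", "noscript"}

    def __init__(self) -> None:
        super().__init__()  # convert_charrefs=True by default, so entities are decoded
        self._skip_depth = 0
        self.chunks: list[str] = []

    def handle_starttag(self, tag: str, attrs: list[tuple[str, str | None]]) -> None:
        if tag in self.SKIP_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag: str) -> None:
        if tag in self.SKIP_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data: str) -> None:
        # Keep text only when we are not inside a skipped element.
        if self._skip_depth == 0:
            self.chunks.append(data)


def extract_main_text(html: str) -> str:
    """Return the visible text of `html` with boilerplate elements removed and whitespace collapsed."""
    parser = _MainTextExtractor()
    parser.feed(html)
    parser.close()
    return re.sub(r"\s+", " ", " ".join(parser.chunks)).strip()
```

Joining text chunks with a space keeps words from adjacent elements apart, at the cost of occasionally inserting a space before punctuation that follows an inline tag.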

Imagine an AI model designed to answer questions about a particular product. Using the search tool, the model could find the product's official webpage. Then, using the content fetching tool, it could extract the product description, specifications, and customer reviews from the page, and use that information to answer user questions about the product thoroughly. The fetch_content tool limits requests to 20 per minute.

LLM-Optimized Output Formatting

The duckduckgo-mcp-server formats search results and webpage content specifically for consumption by large language models. It removes irrelevant information, such as advertisements and tracking parameters, and presents the remaining data in a clear, concise structure. Less noise in the output lets the model focus on the most relevant information.
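A numbered plain-text layout is one way to present results to an LLM. This is a sketch; the exact output format, the `SearchResult` fields, and the function name are assumptions rather than the server's documented output.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class SearchResult:
    # Hypothetical cleaned result (ads removed, redirect URLs already unwrapped).
    title: str
    link: str
    snippet: str


def format_results_for_llm(results: list[SearchResult]) -> str:
    """Render results as a compact numbered list with one field per line."""
    if not results:
        return "No results were found for this query."
    noun = "result" if len(results) == 1 else "results"
    lines = [f"Found {len(results)} search {noun}:", ""]
    for i, r in enumerate(results, start=1):
        lines.append(f"{i}. {r.title}")
        lines.append(f"   URL: {r.link}")
        lines.append(f"   Summary: {r.snippet}")
        lines.append("")  # blank line between results
    return "\n".join(lines).rstrip()
```

Plain labelled lines avoid HTML or JSON punctuation the model would otherwise have to read past, and the numbering gives it an easy way to cite a specific result.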

For instance, the server truncates long content so the model is not handed more text than it can usefully process, and it unwraps DuckDuckGo redirect URLs so the LLM receives direct links to the content.
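Truncation can be as simple as a character cap that backs off to a word boundary and appends a marker so the model knows text was cut. The 8,000-character default, the marker string, and the function name below are hypothetical values chosen for illustration.

```python
def truncate_for_llm(text: str, max_chars: int = 8000, marker: str = "... [content truncated]") -> str:
    """Cap `text` at roughly `max_chars` characters, cutting at a word boundary when possible."""
    if len(text) <= max_chars:
        return text
    cut = text[:max_chars]
    # Prefer the last space so a word is not split in half.
    space = cut.rfind(" ")
    if space > 0:
        cut = cut[:space]
    return cut.rstrip() + marker
```

The explicit marker matters: without it, the model may treat a cut-off page as complete and answer from partial information.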