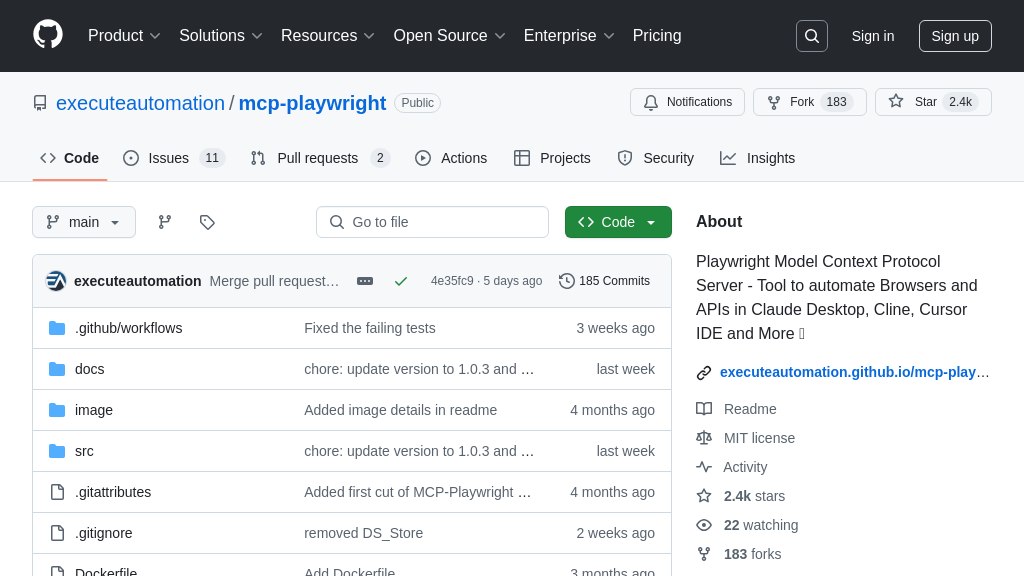

fastmcp

FastMCP: a Pythonic way to build MCP servers. This project is no longer maintained; it has been integrated into the official MCP SDK.

fastmcp Solution Overview

FastMCP is a Pythonic framework designed to streamline the creation of Model Context Protocol (MCP) servers. It empowers developers to build MCP servers rapidly with minimal boilerplate, focusing on creating tools, exposing resources, and defining prompts using clean Python code.

Key features include a high-level interface for rapid development, a simple and intuitive design for Python developers, and the aim of providing a full implementation of the core MCP specification. FastMCP lets a server expose three kinds of components to AI models: resources (data endpoints), tools (actionable functions), and prompts (reusable templates). It simplifies integration by handling connection management, protocol compliance, and message routing.

FastMCP offers multiple deployment options, including a development mode with a web interface for interactive testing, Claude Desktop integration for regular use, and direct execution for advanced scenarios. While this repository is no longer maintained, FastMCP has been added to the official MCP SDK.

fastmcp Key Capabilities

Rapid Server Development

FastMCP streamlines the creation of MCP servers by minimizing boilerplate code, allowing developers to focus on defining resources, tools, and prompts in Python. It does this through a high-level, Pythonic interface that hides the low-level details of the protocol. Developers can expose data to LLMs via resources (akin to GET endpoints), let LLMs perform actions via tools (akin to POST endpoints), and create reusable prompt templates for LLM interaction. Because the framework handles the protocol plumbing, integrating AI models with external data sources and services takes less time and effort.

For example, a developer can create a simple MCP server that exposes a company's configuration data as a resource and a tool to fetch weather information with just a few lines of code. This allows an AI model to access real-time weather data and company-specific configurations, enhancing its ability to provide context-aware responses. FastMCP leverages Python decorators and type hints to automatically handle request parsing, validation, and response serialization, further simplifying the development process.
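The decorator-and-type-hint mechanism described above can be illustrated with a small standard-library sketch. This is not FastMCP's implementation: `MiniMCP`, `call_tool`, the `config://app` URI, and the canned weather data are hypothetical names and values chosen for illustration. Only the decorator shape (`tool`, `resource`) imitates what the text describes.

```python
import inspect
import json
from typing import Any, Callable, get_type_hints


class MiniMCP:
    """Hypothetical stand-in for a FastMCP-style server, not the real class."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.tools: dict[str, Callable[..., Any]] = {}
        self.resources: dict[str, Callable[[], Any]] = {}

    def tool(self) -> Callable:
        """Decorator factory: register a function as a callable tool."""
        def register(fn: Callable[..., Any]) -> Callable[..., Any]:
            self.tools[fn.__name__] = fn
            return fn  # the function stays directly callable
        return register

    def resource(self, uri: str) -> Callable:
        """Decorator factory: register a zero-argument function under a URI."""
        def register(fn: Callable[[], Any]) -> Callable[[], Any]:
            self.resources[uri] = fn
            return fn
        return register

    def call_tool(self, name: str, arguments: dict[str, Any]) -> str:
        """Check arguments against the type hints, call the tool, return JSON."""
        fn = self.tools[name]
        hints = get_type_hints(fn)
        for pname, param in inspect.signature(fn).parameters.items():
            if pname not in arguments:
                if param.default is inspect.Parameter.empty:
                    raise ValueError(f"missing argument: {pname}")
                continue
            expected = hints.get(pname)
            # Only plain classes (str, int, ...) are checked in this sketch.
            if isinstance(expected, type) and not isinstance(arguments[pname], expected):
                raise TypeError(f"{pname} must be {expected.__name__}")
        return json.dumps(fn(**arguments))

    def read_resource(self, uri: str) -> str:
        """Return the resource registered under `uri`, serialized as JSON."""
        return json.dumps(self.resources[uri]())


mcp = MiniMCP("Demo")


@mcp.resource("config://app")
def get_config() -> dict:
    """Company configuration (hypothetical values)."""
    return {"office": "Berlin", "units": "metric"}


@mcp.tool()
def get_weather(city: str) -> dict:
    """Weather lookup; returns canned data in place of a real API call."""
    return {"city": city, "temperature_c": 21.0}


# Simulate a client request: arguments arrive as a dict, results leave as JSON.
weather_json = mcp.call_tool("get_weather", {"city": "Berlin"})
config_json = mcp.read_resource("config://app")
```

The point of the sketch is that the function signature doubles as the schema: passing `{"city": 42}` is rejected before `get_weather` runs, and the author of the tool never writes parsing or serialization code.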

Resource and Tool Definition

FastMCP provides a straightforward way to define resources and tools, which are the core components for interacting with AI models. Resources are defined using the @mcp.resource decorator, allowing developers to expose data to LLMs without significant computation or side effects. Tools, defined using the @mcp.tool decorator, enable LLMs to take actions through the server, performing computations and having side effects. Both resources and tools can accept parameters, allowing for dynamic data retrieval and action execution.

Consider a scenario where an AI model needs to access user profile information and update user settings. With FastMCP, developers can define a resource that retrieves user profile data based on a user ID and a tool that updates user settings based on provided parameters. The AI model can then use these resources and tools to personalize user interactions and automate user management tasks. FastMCP automatically handles the serialization and deserialization of data, ensuring seamless communication between the AI model and the server.
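The user-profile scenario can be sketched as follows, using only the standard library. The `users://{user_id}/profile` template syntax, the `resource` and `read_resource` helpers, and the in-memory `USERS` store are assumptions made for illustration; they show one way a parameterized resource could be routed and are not taken from FastMCP's source.

```python
import re
from typing import Any, Callable

# In-memory stand-in for a user store (hypothetical data).
USERS: dict[str, dict[str, Any]] = {
    "42": {"name": "Ada", "settings": {"theme": "light"}},
}

# (compiled pattern, handler) pairs, filled in by the decorator below.
_TEMPLATES: list = []


def resource(template: str) -> Callable:
    """Register a function under a URI template such as users://{user_id}/profile."""
    # re.split with a capturing group alternates literal text and placeholder names.
    parts = re.split(r"\{(\w+)\}", template)
    regex = "".join(
        re.escape(part) if index % 2 == 0 else f"(?P<{part}>[^/]+)"
        for index, part in enumerate(parts)
    )

    def register(fn: Callable[..., Any]) -> Callable[..., Any]:
        _TEMPLATES.append((re.compile(regex), fn))
        return fn

    return register


def read_resource(uri: str) -> Any:
    """Call the first handler whose template matches `uri`."""
    for pattern, fn in _TEMPLATES:
        match = pattern.fullmatch(uri)
        if match:
            return fn(**match.groupdict())
    raise LookupError(f"no resource matches {uri!r}")


@resource("users://{user_id}/profile")
def get_user_profile(user_id: str) -> dict:
    """Resource-style function: a read-only lookup with no side effects."""
    return {"id": user_id, "name": USERS[user_id]["name"]}


def update_settings(user_id: str, theme: str) -> dict:
    """Tool-style function: it mutates stored state, which is a side effect."""
    USERS[user_id]["settings"]["theme"] = theme
    return USERS[user_id]["settings"]
```

Here `read_resource("users://42/profile")` extracts `user_id` from the URI and passes it to the handler, while `update_settings("42", "dark")` changes stored data. That split mirrors the distinction the text draws between resources and tools.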

Contextual Awareness via Context Object

FastMCP introduces a Context object that provides tools and resources with access to MCP capabilities, enhancing their contextual awareness. This object allows tools and resources to report progress, log information, access other resources, and retrieve request metadata. The report_progress() function enables tools to provide real-time updates on long-running tasks, while the logging functions (debug(), info(), warning(), error()) facilitate debugging and monitoring. The read_resource() function allows tools to access other resources, enabling complex workflows that require data from multiple sources.

For instance, a tool that processes multiple files can use the Context object to report progress, giving users real-time feedback on the task's status. The tool can also call read_resource() to load configuration files or other data sources it needs while processing. The Context object also exposes request metadata, such as the request ID and client ID, which can be used for auditing and security purposes.
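The file-processing scenario might look like the sketch below. To keep it runnable without the package, it uses `RecordingContext`, a hypothetical stand-in that records calls instead of sending them to a client. The method names (`info`, `report_progress`, `read_resource`) follow the text, but their exact signatures, and whether each one must be awaited, are assumptions to check against the version in use.

```python
import asyncio


class RecordingContext:
    """Hypothetical stand-in for the Context object; it records every call."""

    def __init__(self, resources: dict[str, str]) -> None:
        self.resources = resources
        self.logs: list[str] = []
        self.progress: list[tuple[int, int]] = []

    def info(self, message: str) -> None:
        # A real context would forward the log message to the client.
        self.logs.append(message)

    async def report_progress(self, progress: int, total: int) -> None:
        # A real context would send a progress notification to the client.
        self.progress.append((progress, total))

    async def read_resource(self, uri: str) -> str:
        # A real context would resolve the URI through the server.
        return self.resources[uri]


async def process_files(files: list[str], ctx: RecordingContext) -> str:
    """Tool body: read each file through the context and report progress."""
    total_chars = 0
    for index, name in enumerate(files):
        ctx.info(f"Processing {name}")
        data = await ctx.read_resource(f"file://{name}")
        total_chars += len(data)
        await ctx.report_progress(index + 1, len(files))
    return f"Processed {len(files)} files ({total_chars} characters)"


# Drive the tool with two in-memory "files" (hypothetical contents).
ctx = RecordingContext({"file://a.txt": "hello", "file://b.txt": "world!"})
summary = asyncio.run(process_files(["a.txt", "b.txt"], ctx))
```

Passing the context in as a parameter keeps the tool body free of global state, and it makes the tool easy to exercise in isolation with a recording stand-in like this one.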

Development and Deployment Flexibility

FastMCP offers multiple options for running and deploying MCP servers, covering both development and regular use. Development mode, launched via fastmcp dev server.py, provides a web interface for interactive testing, detailed logs, and performance monitoring. For regular use, the fastmcp install server.py command installs the server into Claude Desktop, where it runs in an isolated environment with automatic dependency management. Direct execution via fastmcp run server.py or python server.py gives advanced users more control over the server's execution environment.

For example, during development, a developer can use development mode to test resources and tools interactively, inspect logs, and monitor performance. Once the server is ready for regular use, the fastmcp install command registers it with Claude Desktop and manages its dependencies automatically. Developers can therefore pick the option that suits each stage, from local testing to day-to-day use.