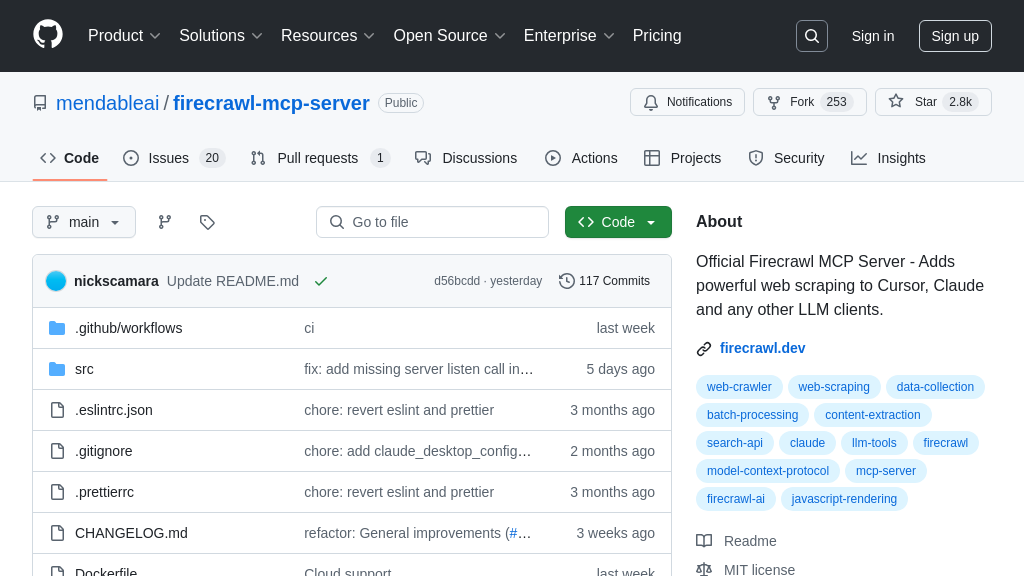

firecrawl-mcp-server

firecrawl-mcp-server: An MCP server that leverages Firecrawl for advanced web scraping and data extraction. It provides AI models with secure, structured access to web content via the Model Context Protocol (MCP).

firecrawl-mcp-server Solution Overview

Meet the firecrawl-mcp-server, a powerful MCP Server implementation designed to connect your AI models directly to the web's vast resources using Firecrawl's advanced capabilities. It acts as a standardized bridge, enabling AI agents within clients like Cursor or Claude Desktop to perform sophisticated web interactions. Key features include robust single-page scraping with JavaScript rendering, efficient batch scraping, comprehensive website crawling, integrated web search, and LLM-powered structured data extraction. It also offers a "deep research" tool that combines crawling, search, and analysis. By handling complexities such as rate limiting and retries, firecrawl-mcp-server provides reliable, structured access to web data, significantly enhancing your AI's ability to interact with and understand real-time online information. The server is launched from the command line with npx and communicates over standard MCP transports such as stdio or Server-Sent Events (SSE).
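As a rough illustration of that setup, MCP clients such as Claude Desktop and Cursor typically register a stdio server under an mcpServers key in a JSON configuration file, listing the command to launch and any environment variables it needs. The TypeScript sketch below builds such an entry and prints it. The npm package name firecrawl-mcp, the FIRECRAWL_API_KEY variable, and the entry label are taken from our reading of the project's README and should be treated as assumptions to verify; the location of the configuration file also differs between clients.

```typescript
// Shape of one stdio server entry in an MCP client config (Claude Desktop / Cursor style).
interface StdioServerEntry {
  command: string;
  args: string[];
  env: Record<string, string>;
}

interface McpClientConfig {
  mcpServers: Record<string, StdioServerEntry>;
}

// Build a config entry that launches the server via npx over stdio.
// ASSUMPTION: package "firecrawl-mcp" and env var FIRECRAWL_API_KEY, per our reading of the README.
function firecrawlConfig(apiKey: string): McpClientConfig {
  if (apiKey.trim() === "") {
    throw new Error("A Firecrawl API key is required");
  }
  return {
    mcpServers: {
      "firecrawl-mcp": {
        command: "npx",
        args: ["-y", "firecrawl-mcp"], // -y lets npx install without prompting
        env: { FIRECRAWL_API_KEY: apiKey },
      },
    },
  };
}

// Print JSON that can be merged into the client's MCP configuration file.
console.log(JSON.stringify(firecrawlConfig("fc-YOUR_API_KEY"), null, 2));
```

Keeping the key in the env block, rather than in args, means it is passed to the server process as an environment variable instead of appearing on its command line.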

firecrawl-mcp-server Key Capabilities

Advanced Web Data Acquisition

The firecrawl-mcp-server provides sophisticated web scraping and crawling capabilities directly to AI models through the Model Context Protocol. It leverages Firecrawl's core functionalities via the firecrawl_scrape, firecrawl_batch_scrape, and firecrawl_crawl tools. This allows AI models to fetch content not just from static pages but also from dynamic, JavaScript-heavy websites (e.g., built with React, Vue, or Angular) thanks to its built-in JS rendering support. Developers can instruct the AI to scrape single URLs, efficiently process multiple URLs in batches with managed rate limiting, or initiate asynchronous crawls across entire websites. Fine-grained control is available through options such as onlyMainContent (return only the page's main content), mobile or desktop browser emulation, inclusion or exclusion of specific HTML tags, and request timeouts. This eliminates the significant developer overhead of building and maintaining complex, resilient web scraping infrastructure. For AI models, this feature unlocks access to real-time, comprehensive data from virtually any website, enabling tasks like market research, content summarization, and competitive analysis based on the most current information available online.
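Scraping options like these travel as tool arguments inside an MCP tools/call request, which is a JSON-RPC 2.0 message. The sketch below uses a hypothetical helper, scrapeRequest, to build such a request for firecrawl_scrape. The argument names (formats, onlyMainContent, includeTags, excludeTags, mobile, waitFor, timeout) are recalled from the project's README and are assumptions; the authoritative schema is whatever the server reports in response to tools/list.

```typescript
// Minimal JSON-RPC 2.0 envelope that MCP uses for the "tools/call" method.
interface ToolCallRequest<A> {
  jsonrpc: "2.0";
  id: number;
  method: "tools/call";
  params: { name: string; arguments: A };
}

// Arguments for firecrawl_scrape.
// ASSUMPTION: field names as recalled from the README; confirm via "tools/list".
interface ScrapeArgs {
  url: string;
  formats?: string[];        // e.g. ["markdown"]
  onlyMainContent?: boolean; // drop navigation, headers, footers
  includeTags?: string[];    // keep only these HTML tags
  excludeTags?: string[];    // remove these HTML tags
  mobile?: boolean;          // emulate a mobile browser instead of desktop
  waitFor?: number;          // ms to let client-side JavaScript render
  timeout?: number;          // overall timeout in ms
}

// Wrap the arguments in a tools/call request for firecrawl_scrape.
function scrapeRequest(id: number, args: ScrapeArgs): ToolCallRequest<ScrapeArgs> {
  return {
    jsonrpc: "2.0",
    id,
    method: "tools/call",
    params: { name: "firecrawl_scrape", arguments: args },
  };
}

// Example: main content of a JavaScript-heavy page, fetched as a desktop browser.
const scrapeReq = scrapeRequest(1, {
  url: "https://example.com/markets",
  formats: ["markdown"],
  onlyMainContent: true,
  excludeTags: ["nav", "footer"],
  mobile: false,
  waitFor: 2000,
  timeout: 30000,
});
console.log(JSON.stringify(scrapeReq, null, 2));
```

In day-to-day use the MCP client composes these messages on the model's behalf; building one by hand is mainly useful for debugging or for writing a custom client.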

- Use Case: An AI financial analyst assistant can use firecrawl_scrape with JS rendering enabled to extract the latest stock data or news commentary from a dynamic financial portal that requires JavaScript to display information. For a broader market overview, it could use firecrawl_batch_scrape to gather quarterly report summaries from multiple company investor relations pages simultaneously.
- Technical Details: Uses the Firecrawl API for scraping and crawling, supports JavaScript rendering, and offers batch processing (firecrawl_batch_scrape) with status checks (firecrawl_check_batch_status), asynchronous website crawling (firecrawl_crawl), and configurable options for content filtering and browser simulation.
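Batch scraping and crawling are asynchronous: one call starts a job and a later call checks on it. The sketch below builds the three requests involved, using a hypothetical toolCall helper that assigns increasing JSON-RPC ids so responses can be matched to requests. The argument names are assumptions based on the README, and "batch_1" is a placeholder for whatever operation id the server actually returns.

```typescript
// Minimal JSON-RPC 2.0 envelope for MCP's "tools/call" method.
interface ToolCallRequest<A> {
  jsonrpc: "2.0";
  id: number;
  method: "tools/call";
  params: { name: string; arguments: A };
}

// Increasing JSON-RPC ids, so each response can be matched to its request.
let nextId = 1;

function toolCall<A>(tool: string, args: A): ToolCallRequest<A> {
  return { jsonrpc: "2.0", id: nextId++, method: "tools/call", params: { name: tool, arguments: args } };
}

// 1. Queue several pages at once; the server manages rate limiting for the batch.
//    ASSUMPTION: "urls" and "options" argument names, per the README.
const batchReq = toolCall("firecrawl_batch_scrape", {
  urls: ["https://example.com/investors/q1", "https://example.com/investors/q2"],
  options: { formats: ["markdown"], onlyMainContent: true },
});

// 2. The batch runs asynchronously; the reply carries an operation id.
//    "batch_1" is a HYPOTHETICAL placeholder for the id returned by step 1.
const statusReq = toolCall("firecrawl_check_batch_status", { id: "batch_1" });

// 3. Crawl a site section asynchronously, bounded by link depth and page count.
//    ASSUMPTION: argument names per the README.
const crawlReq = toolCall("firecrawl_crawl", {
  url: "https://example.com/blog/*",
  maxDepth: 2,
  limit: 100,
  allowExternalLinks: false,
});

for (const r of [batchReq, statusReq, crawlReq]) {
  console.log(r.id, r.params.name);
}
```

A client would repeat step 2 at intervals until the status reports completion; the polling interval and the status format are left out here because the source does not describe them.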

Intelligent Structured Data Extraction

This server empowers AI models with the ability to extract structured data from unstructured web content using the firecrawl_extract tool. Instead of just retrieving raw text or markdown, developers can define a desired output structure using a JSON schema and provide a natural language prompt instructing the AI on what information to extract (e.g., "Extract the product name, price, and specifications"). The underlying Firecrawl service, integrated via the MCP server, then uses a Large Language Model (LLM) to intelligently parse the content of the specified URLs (fetched using its scraping capabilities) and populate the defined schema. This significantly simplifies the data extraction process for developers, removing the need to write and maintain fragile CSS selectors or XPath queries that often break with website redesigns. For the AI model, it provides direct access to clean, organized, and immediately usable data, enhancing its ability to perform complex tasks like data aggregation, comparison, and analysis without needing extensive post-processing of raw web content. It effectively translates messy web data into actionable, structured knowledge for the AI.
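To make the schema-plus-prompt idea concrete, the sketch below expresses the product example as a JSON Schema and wraps it in a firecrawl_extract tools/call request. The JsonSchema type covers only the handful of keywords used here, and the argument names (urls, prompt, systemPrompt, schema, allowExternalLinks, enableWebSearch, includeSubdomains) are assumptions recalled from the README rather than a verified API.

```typescript
// Minimal JSON-RPC 2.0 envelope for MCP's "tools/call" method.
interface ToolCallRequest<A> {
  jsonrpc: "2.0";
  id: number;
  method: "tools/call";
  params: { name: string; arguments: A };
}

// A small subset of JSON Schema, enough to describe the extraction target.
interface JsonSchema {
  type: "object" | "array" | "string" | "number";
  properties?: Record<string, JsonSchema>;
  items?: JsonSchema;
  required?: string[];
}

// Arguments for firecrawl_extract.
// ASSUMPTION: field names as recalled from the README; verify via "tools/list".
interface ExtractArgs {
  urls: string[];
  prompt: string;
  systemPrompt?: string;
  schema?: JsonSchema;
  allowExternalLinks?: boolean; // follow links to other domains
  enableWebSearch?: boolean;    // allow web search for extra context
  includeSubdomains?: boolean;  // also consider subdomains of the given URLs
}

// Target shape: product name, price, and a list of specifications.
const productSchema: JsonSchema = {
  type: "object",
  properties: {
    name: { type: "string" },
    price: { type: "number" },
    specifications: { type: "array", items: { type: "string" } },
  },
  required: ["name", "price"],
};

function extractRequest(id: number, args: ExtractArgs): ToolCallRequest<ExtractArgs> {
  return { jsonrpc: "2.0", id, method: "tools/call", params: { name: "firecrawl_extract", arguments: args } };
}

const extractReq = extractRequest(5, {
  urls: ["https://example.com/products/widget"],
  prompt: "Extract the product name, price, and specifications",
  schema: productSchema,
  allowExternalLinks: false,
  enableWebSearch: false,
  includeSubdomains: false,
});
console.log(JSON.stringify(extractReq, null, 2));
```

Marking only name and price as required leaves room for pages that list no specifications, rather than pushing the LLM to invent them.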

- Use Case: An AI agent tasked with creating a database of local events could use firecrawl_extract with a schema defining "event_name", "date", "location", and "description" to automatically pull this information from various community websites and event listing pages, even if they have different layouts.
- Technical Details: Requires a list of URLs and a natural language prompt. Optionally accepts a JSON schema for the output structure, a system prompt for the LLM, and flags to control external link following, web search integration, or subdomain inclusion during extraction.
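Because LLM output can still drift from the requested schema, it is worth checking records before they reach the events database. The sketch below assumes the event records have already been parsed from the tool response into plain JavaScript values (the exact response shape is not specified here) and uses a TypeScript type guard to keep only records that carry all four schema fields as strings. EventRecord, isEventRecord, and collectEvents are hypothetical names for illustration.

```typescript
// One event record, mirroring the four schema fields named above.
interface EventRecord {
  event_name: string;
  date: string;
  location: string;
  description: string;
}

const EVENT_FIELDS = ["event_name", "date", "location", "description"] as const;

// Type guard: true only if the value is an object whose schema fields are all strings.
function isEventRecord(value: unknown): value is EventRecord {
  if (typeof value !== "object" || value === null) return false;
  const rec = value as Record<string, unknown>;
  return EVENT_FIELDS.every((field) => typeof rec[field] === "string");
}

// Keep well-formed records and report how many were dropped.
function collectEvents(items: unknown[]): { events: EventRecord[]; rejected: number } {
  const events = items.filter(isEventRecord);
  return { events, rejected: items.length - events.length };
}

// Illustrative input: the second record is missing two fields.
const sample: unknown[] = [
  { event_name: "Farmers Market", date: "Saturday", location: "Town Square", description: "Weekly market" },
  { event_name: "Concert", date: "Friday" },
];
const result = collectEvents(sample);
console.log(result.events.length, result.rejected); // 1 1
```

Counting rejected records, instead of silently discarding them, gives the agent a signal that a particular site's layout is confusing the extractor.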

Contextual Web Search & Research

firecrawl-mcp-server integrates powerful web search and automated research capabilities into the AI's toolkit via the firecrawl_search and firecrawl_deep_research tools. The firecrawl_search tool allows the AI model to execute standard web searches based on a query, returning a list of relevant results. Crucially, it can be configured to automatically scrape the content of these search result pages, providing the AI not just with links but with the actual information from those sources. For more complex inquiries, the firecrawl_deep_research tool enables the AI to conduct autonomous, in-depth research on a given topic. It combines web crawling, search result analysis, and LLM-driven synthesis to explore multiple relevant web pages, gather information, and generate a comprehensive analysis or report within specified constraints (like time or number of URLs). This solves the developer challenge of integrating disparate search APIs, scraping logic, and content analysis workflows. For AI models, it provides the crucial ability to actively seek out, evaluate, and synthesize current information from the broader web, enabling sophisticated question answering, report generation, and knowledge discovery beyond their static training data.
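The difference between a plain search and a search that also scrapes its results comes down to one extra argument. In the sketch below, a hypothetical searchRequest helper builds both variants for firecrawl_search; the scrapeOptions field and the other argument names are assumptions based on the README.

```typescript
// Minimal JSON-RPC 2.0 envelope for MCP's "tools/call" method.
interface ToolCallRequest<A> {
  jsonrpc: "2.0";
  id: number;
  method: "tools/call";
  params: { name: string; arguments: A };
}

// Arguments for firecrawl_search.
// ASSUMPTION: names recalled from the README; verify via "tools/list".
interface SearchArgs {
  query: string;
  limit?: number;   // maximum number of results
  lang?: string;    // result language, e.g. "en"
  country?: string; // country code, e.g. "us"
  // When present, each result page is also scraped with these options.
  scrapeOptions?: { formats: string[]; onlyMainContent?: boolean };
}

function searchRequest(id: number, args: SearchArgs): ToolCallRequest<SearchArgs> {
  return { jsonrpc: "2.0", id, method: "tools/call", params: { name: "firecrawl_search", arguments: args } };
}

const topic = "impact of recent AI regulations in the EU";

// Variant 1: the result list only.
const linksOnly = searchRequest(2, { query: topic, limit: 5, lang: "en" });

// Variant 2: the same search, plus the Markdown content of each result page.
const withContent = searchRequest(3, {
  query: topic,
  limit: 5,
  lang: "en",
  scrapeOptions: { formats: ["markdown"], onlyMainContent: true },
});

console.log(JSON.stringify(linksOnly.params.arguments));
console.log(JSON.stringify(withContent.params.arguments));
```

The scraping variant does more work per result, so the plain variant is the lighter choice when titles and URLs are enough to decide what to read next.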

- Use Case: When asked about the "impact of recent AI regulations in the EU," an AI model can use firecrawl_search to find and retrieve content from the top news articles and official sources. Alternatively, it could employ firecrawl_deep_research to perform a more thorough investigation, exploring links within initial results, analyzing different perspectives, and producing a synthesized summary of the regulatory landscape and its potential consequences.
- Technical Details: firecrawl_search accepts a query string and optional parameters for result limit, language, country, and options for scraping results. firecrawl_deep_research takes a research query and parameters such as maximum depth, time limit, and URL count, returning a final LLM-generated analysis.
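The constraints on firecrawl_deep_research (depth, time, and URL count) can be enforced on the client side before a request is sent. The sketch below clamps each value into a range and then builds the tools/call request. The bounds in BOUNDS (depth 1 to 10, 30 to 300 seconds, 1 to 1000 URLs) are recalled from the README and are ASSUMPTIONS, as are the argument names; check the schema the server advertises before relying on them.

```typescript
// Minimal JSON-RPC 2.0 envelope for MCP's "tools/call" method.
interface ToolCallRequest<A> {
  jsonrpc: "2.0";
  id: number;
  method: "tools/call";
  params: { name: string; arguments: A };
}

interface DeepResearchArgs {
  query: string;
  maxDepth: number;  // research iterations
  timeLimit: number; // seconds
  maxUrls: number;   // URLs to analyze
}

// ASSUMED bounds, recalled from the README; verify against the live tool schema.
const BOUNDS = {
  maxDepth: [1, 10],
  timeLimit: [30, 300],
  maxUrls: [1, 1000],
} as const;

// Force a value into the inclusive range [lo, hi].
function clamp(value: number, [lo, hi]: readonly [number, number]): number {
  return Math.min(hi, Math.max(lo, value));
}

function deepResearchRequest(id: number, args: DeepResearchArgs): ToolCallRequest<DeepResearchArgs> {
  return {
    jsonrpc: "2.0",
    id,
    method: "tools/call",
    params: {
      name: "firecrawl_deep_research",
      arguments: {
        query: args.query,
        // Depth and URL count are counts, so round them to whole numbers first.
        maxDepth: clamp(Math.round(args.maxDepth), BOUNDS.maxDepth),
        timeLimit: clamp(args.timeLimit, BOUNDS.timeLimit),
        maxUrls: clamp(Math.round(args.maxUrls), BOUNDS.maxUrls),
      },
    },
  };
}

// A 600-second request is pulled down to the assumed 300-second ceiling.
const research = deepResearchRequest(4, {
  query: "impact of recent AI regulations in the EU",
  maxDepth: 3,
  timeLimit: 600,
  maxUrls: 50,
});
console.log(research.params.arguments.timeLimit); // 300
```

Clamping locally turns an out-of-range request into a predictable, slightly smaller job instead of a server-side validation error in the middle of an agent's run.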

Automated llms.txt Generation

The firecrawl-mcp-server also includes the firecrawl_generate_llmstxt tool, which targets the emerging llms.txt convention. An llms.txt file is a Markdown document placed at a website's root that gives Large Language Models and other AI agents a concise, curated overview of the site: a title, a short summary, and links to its most important pages. Unlike robots.txt, it does not grant or deny crawler access; its purpose is to make a site's content easier for LLMs to find and use. By providing a website's base URL, developers or AI models can use this tool to generate a draft llms.txt automatically. The tool analyzes a limited number of the site's pages and assembles the result into the file; it can optionally produce a more detailed llms-full.txt containing the full text of those pages as well. This gives developers building AI agents a compact, LLM-friendly map of a site, and lets website owners bootstrap an llms.txt for their own domain without writing it by hand. Within the MCP framework, it promotes efficient and standardized interactions between AI clients and web resources.

- Use Case: Before initiating a large-scale crawl of a target website using firecrawl_crawl, an AI agent can first use firecrawl_generate_llmstxt to obtain a compact overview of the site's key pages, then restrict the crawl to the sections that are actually relevant. A website administrator could use this tool to quickly produce a baseline llms.txt for their domain.
- Technical Details: Requires the website's base URL. Optional parameters include maxUrls (number of pages to analyze, default 10) and showFullText (boolean to include llms-full.txt content in the response). Returns the generated llms.txt content and, optionally, the llms-full.txt content.
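A request for firecrawl_generate_llmstxt needs only the base URL, with maxUrls and showFullText as optional extras. The sketch below, built around a hypothetical llmsTxtRequest helper, fills in the default of 10 pages given above and rejects obviously malformed URLs before sending. Argument names follow the parameter list above; the response format is not modeled here.

```typescript
// Minimal JSON-RPC 2.0 envelope for MCP's "tools/call" method.
interface ToolCallRequest<A> {
  jsonrpc: "2.0";
  id: number;
  method: "tools/call";
  params: { name: string; arguments: A };
}

interface LlmsTxtArgs {
  url: string;           // site base URL
  maxUrls: number;       // pages to analyze (default 10, per the parameter list above)
  showFullText: boolean; // also return llms-full.txt content
}

function llmsTxtRequest(id: number, url: string, maxUrls = 10, showFullText = false): ToolCallRequest<LlmsTxtArgs> {
  // Cheap client-side sanity check before making the call.
  if (!/^https?:\/\//i.test(url)) {
    throw new Error(`Expected an http(s) base URL, got "${url}"`);
  }
  return {
    jsonrpc: "2.0",
    id,
    method: "tools/call",
    params: { name: "firecrawl_generate_llmstxt", arguments: { url, maxUrls, showFullText } },
  };
}

// Defaults: 10 pages, llms.txt only.
const llmsReq = llmsTxtRequest(6, "https://example.com");
// Larger sample, with llms-full.txt included in the response.
const llmsFullReq = llmsTxtRequest(7, "https://example.com", 20, true);
console.log(JSON.stringify(llmsFullReq.params.arguments));
// {"url":"https://example.com","maxUrls":20,"showFullText":true}
```

Sending maxUrls explicitly, even when it equals the default, keeps the request self-describing in logs.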