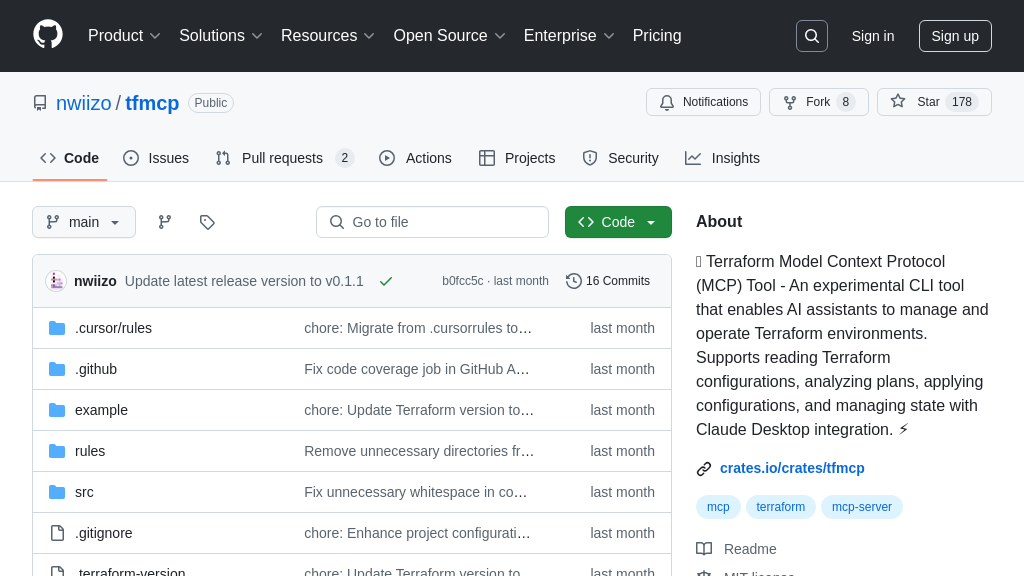

k8m

k8m: An AI-driven Kubernetes dashboard and MCP Server. Simplify cluster management with integrated AI and empower LLMs via 49+ built-in MCP tools for secure, multi-cluster Kubernetes operations.

k8m Solution Overview

k8m is an AI-driven Kubernetes dashboard and MCP Server designed to streamline cluster management for developers. Shipped as a lightweight single binary, it provides multi-cluster management, Pod operations (files, logs, shell), CRD handling, and Helm support. Its core strength is AI integration: built-in or custom LLMs assist with log analysis, resource explanation, and command recommendations. k8m also acts as an MCP Server, exposing 49 granular Kubernetes tools (e.g., scaling deployments, managing nodes, querying resources) that MCP Clients such as IDE plugins or other AI agents can call. Its permission system ensures that LLM-driven actions via MCP stay strictly within the calling user's Kubernetes access rights, enabling secure, AI-powered automation of cluster operations. MCP Clients connect through a standard SSE endpoint (default port 3619), which simplifies connecting AI models to Kubernetes infrastructure.

k8m Key Capabilities

AI-Enhanced K8s Operations

k8m integrates AI directly into the Kubernetes dashboard, simplifying cluster management and troubleshooting. It supports built-in Large Language Models (LLMs) such as Qwen2.5-Coder-7B and DeepSeek-R1-Distill-Qwen-7B, and can connect to custom private models through standard API endpoints such as OpenAI-compatible APIs. In practice, users can select text in the UI (e.g., resource definitions, logs, error messages) to get an instant AI explanation or translation, including translation of YAML attributes. k8m also provides resource guides, interprets complex kubectl describe output, offers AI-driven diagnostics for Pod logs (the "AI log diagnosis" feature), and recommends kubectl commands suited to the situation at hand. This turns the dashboard from a passive viewer into an active assistant and reduces the cognitive load on developers and operators, especially those less familiar with Kubernetes.

For instance, when a Pod produces a cryptic error log, a user can select the error and ask the integrated AI to explain the likely cause and suggest troubleshooting steps or commands, resolving the problem faster without leaving the k8m interface. Grounding the model in the context of the user's own Kubernetes environment in this way makes its answers more useful.
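Connecting a custom model over an OpenAI-compatible API generally means POSTing a chat-completions request. The sketch below shows what such a request for the "explain this error" flow might look like, using only the Python standard library. It is not k8m's implementation: the base URL, API key, model identifier, and system prompt are illustrative assumptions, and the URL construction assumes the base URL already ends in /v1, as many OpenAI-compatible servers expect.

```python
import json
import urllib.request

# Hypothetical prompt; the prompts k8m actually uses are not described in this document.
SYSTEM_PROMPT = (
    "You are a Kubernetes expert. Explain the likely cause of the error "
    "and suggest troubleshooting steps or kubectl commands."
)


def build_explain_request(base_url: str, api_key: str, model: str,
                          selected_text: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat-completions request
    asking the model to explain the text the user selected."""
    payload = {
        "model": model,
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": selected_text},
        ],
    }
    return urllib.request.Request(
        # Assumes base_url already includes the /v1 prefix.
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send_explain_request(req: urllib.request.Request) -> str:
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(req, timeout=60) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    # Illustrative values only; the model name a server accepts varies by deployment.
    req = build_explain_request(
        "http://llm.internal:8000/v1", "sk-example",
        "Qwen2.5-Coder-7B", "Back-off restarting failed container",
    )
    print(req.get_method(), req.full_url)
```

Building the request separately from sending it keeps the payload easy to inspect or log before any network call is made.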

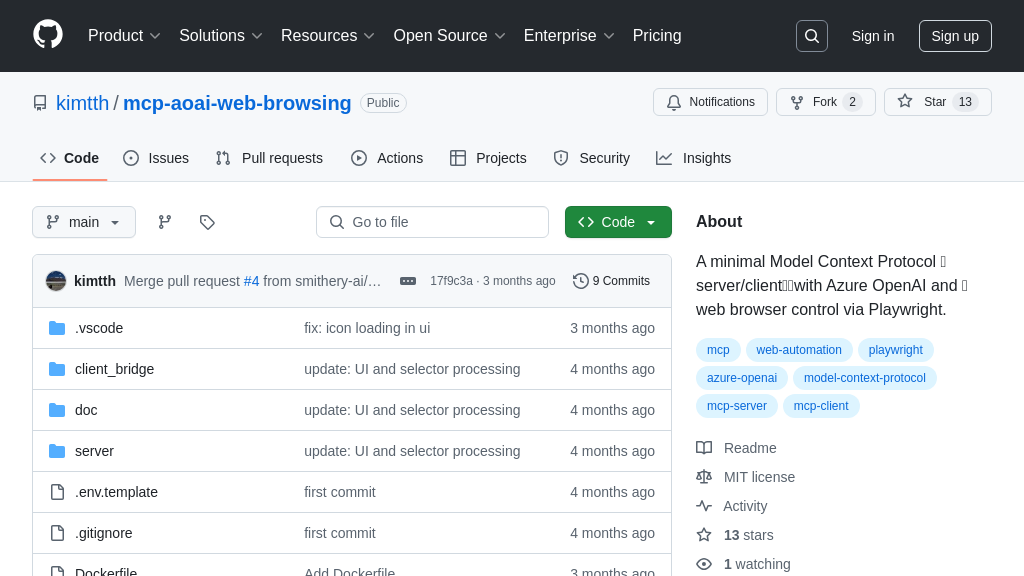

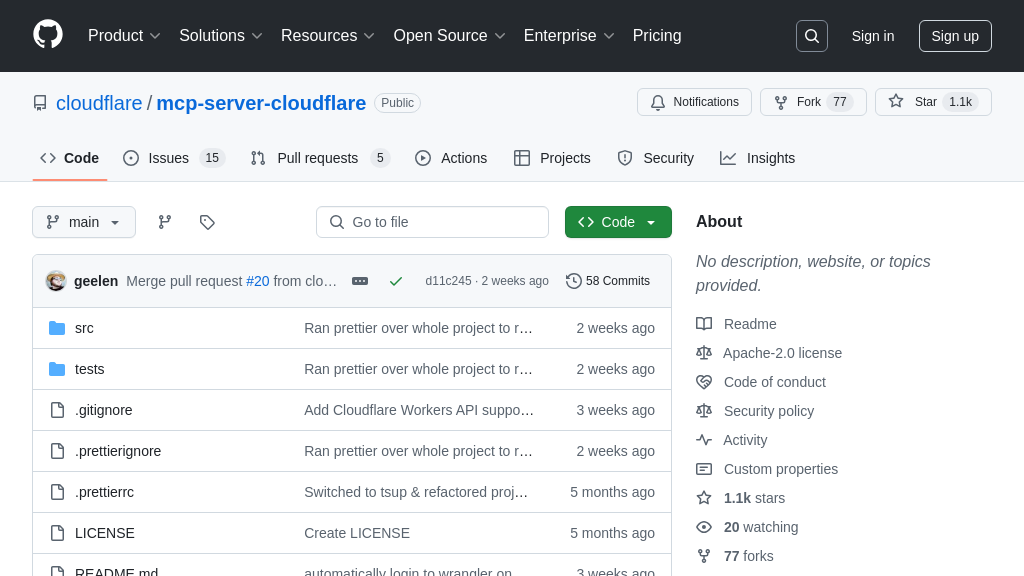

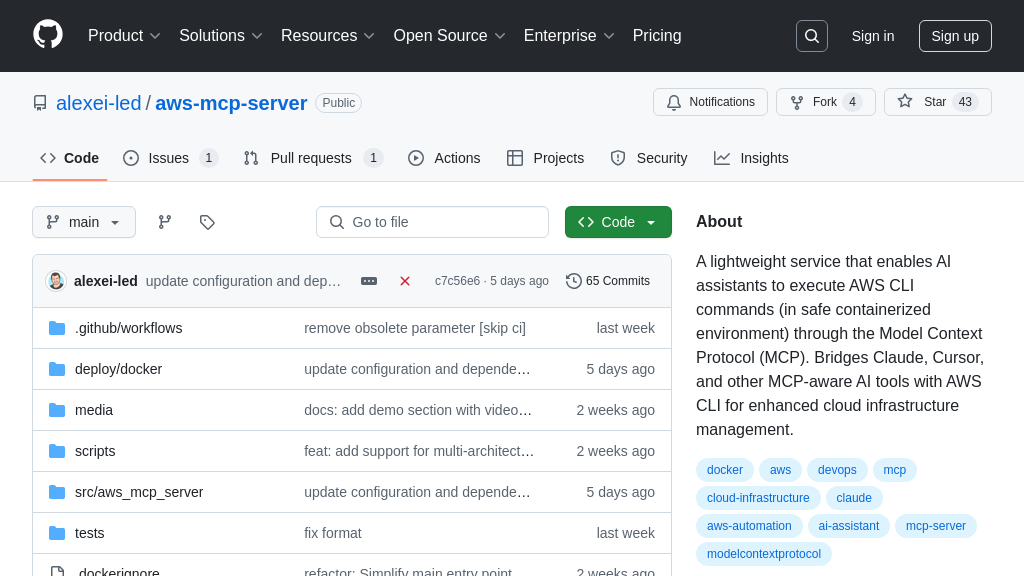

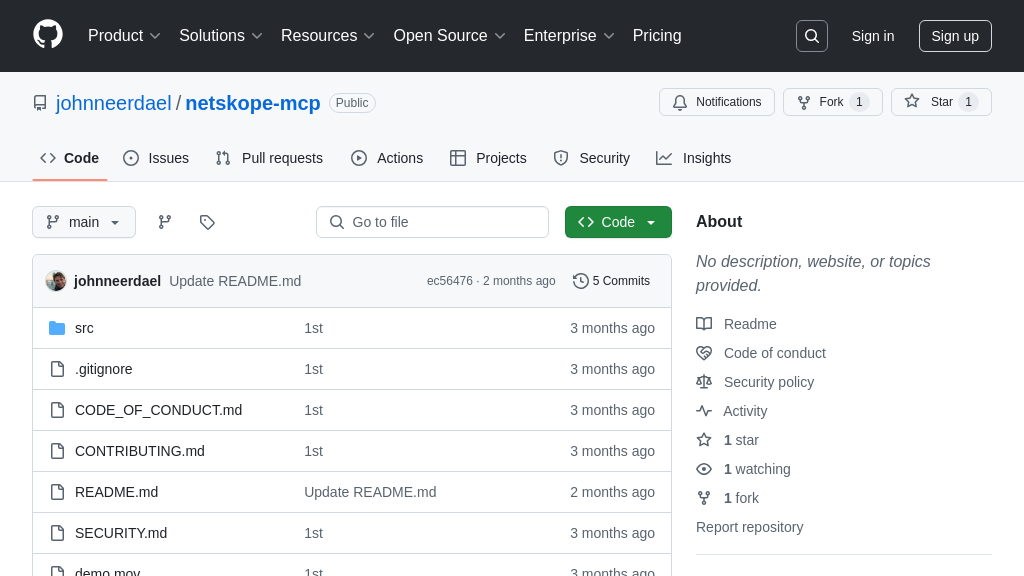

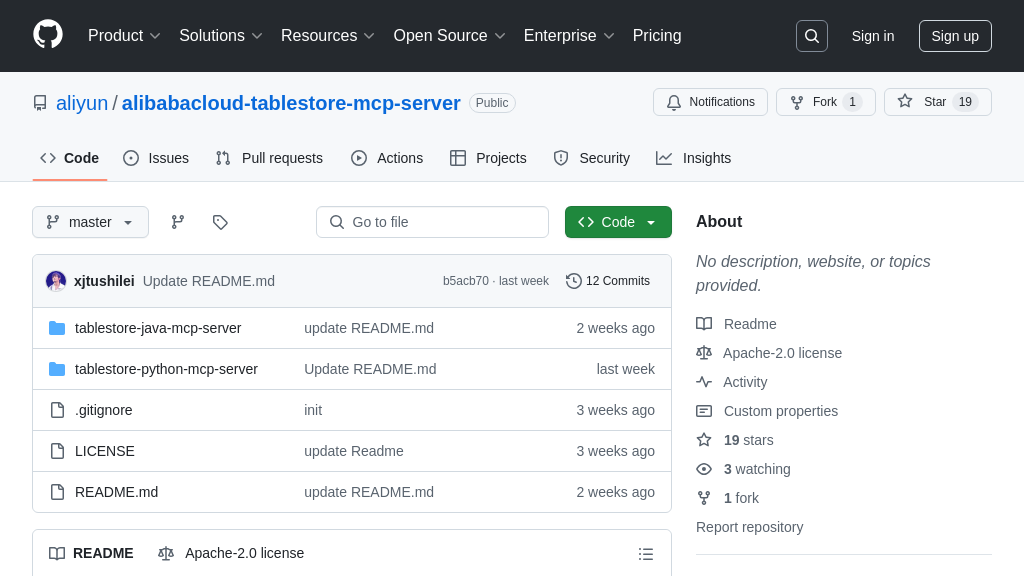

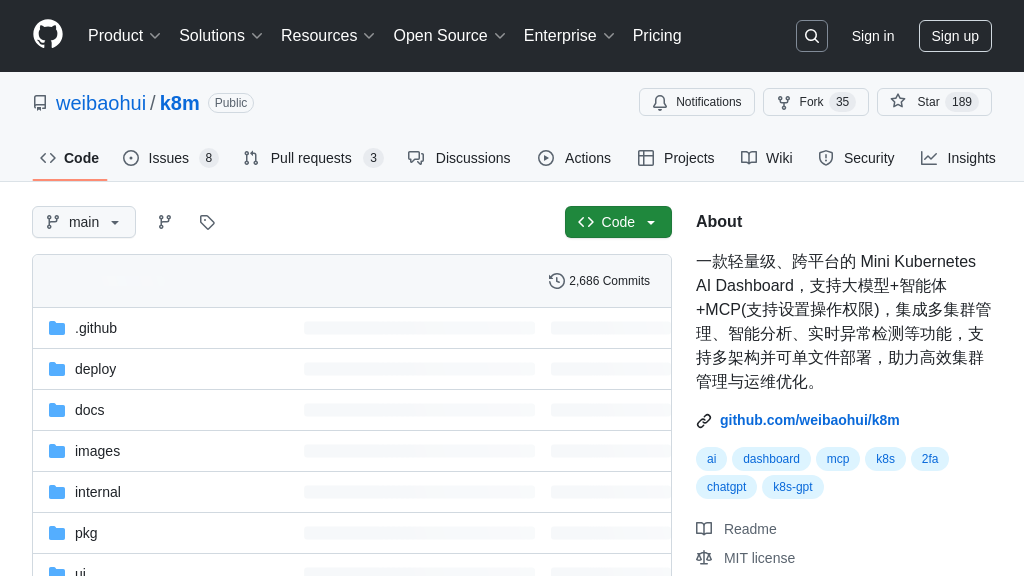

Integrated MCP Server

k8m functions as a full MCP (Model Context Protocol) Server, exposing its Kubernetes management capabilities through a standardized protocol to external AI models and tools (MCP Clients). It listens on a dedicated port (default 3619, configurable via the --mcp-server-port flag or the MCP_SERVER_PORT environment variable) and communicates over the HTTP/SSE transport at the /sse endpoint. Any MCP-compatible client, such as the AI code editors Cursor and Windsurf or a custom application, can therefore interact with the Kubernetes clusters managed by k8m. The server ships with 49 MCP tools for Kubernetes operations, covering deployment management (scaling, restarting, rollbacks), dynamic resource interaction (get, describe, delete, patch, label), node management (taint, cordon, drain), Pod operations (logs, exec, file management), YAML application, and storage/ingress configuration. Together these give AI models a ready-to-use interface for complex, multi-step cluster operations. For example, an AI assistant connected via MCP could receive the request "Scale the 'frontend' deployment in the 'production' cluster to 5 replicas," and k8m's MCP server would execute the corresponding scale_deployment tool against the correct cluster context. This makes k8m a bridge in the MCP ecosystem for AI-driven Kubernetes automation.
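The following is a minimal client-side sketch of this traffic, assuming the HTTP+SSE transport from the MCP specification: the client opens the /sse stream, reads the server's endpoint event to learn where to POST messages, and then sends JSON-RPC 2.0 requests such as tools/call. The host name, the message path in the sample event, and the argument names passed to scale_deployment are hypothetical, since k8m's actual tool schemas are not given here. A real client must also complete MCP's initialize handshake before calling any tool; that step is omitted.

```python
import json
from typing import Optional
from urllib.parse import urljoin

DEFAULT_MCP_PORT = 3619  # default port stated for k8m's MCP server


def sse_url(host: str, port: int = DEFAULT_MCP_PORT) -> str:
    """URL of the SSE stream (the /sse endpoint)."""
    return f"http://{host}:{port}/sse"


def parse_endpoint_event(sse_text: str, base: str) -> Optional[str]:
    """Return the absolute message-POST URL from the first 'endpoint'
    event in a chunk of SSE text, or None if there is none.
    Simplified parser: ignores comments, ids, and retry fields."""
    event, data = None, []
    for line in sse_text.splitlines():
        if line.startswith("event:"):
            event = line[len("event:"):].strip()
        elif line.startswith("data:"):
            data.append(line[len("data:"):].strip())
        elif line == "":  # a blank line terminates one event
            if event == "endpoint" and data:
                # The endpoint may be relative, so resolve it against the SSE URL.
                return urljoin(base, "\n".join(data))
            event, data = None, []
    if event == "endpoint" and data:
        return urljoin(base, "\n".join(data))
    return None


def tools_call(request_id: int, tool: str, arguments: dict) -> str:
    """Serialize an MCP 'tools/call' JSON-RPC 2.0 request."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool, "arguments": arguments},
    })


if __name__ == "__main__":
    base = sse_url("k8m.example.internal")
    # Hypothetical first event from the server.
    stream = "event: endpoint\ndata: /message?sessionId=abc123\n\n"
    post_url = parse_endpoint_event(stream, base)
    # Hypothetical argument names for the scale_deployment tool.
    body = tools_call(1, "scale_deployment",
                      {"cluster": "production", "name": "frontend", "replicas": 5})
    print(post_url)
    print(body)
```

The tools/call body would then be POSTed to the resolved URL, and the result would arrive as a JSON-RPC response on the SSE stream.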

Unified Permission Model

A key security and operational feature of k8m is its unified permission model, which integrates Kubernetes RBAC with MCP tool execution. Its guiding principle is "whoever uses the large model, their permissions are used to execute MCP": any action an AI model performs through the MCP interface on behalf of a user is subject to that user's existing permissions in the target cluster(s). When a user interacts with k8m, either through the UI or through an MCP client connected to k8m's server, their authenticated identity and associated Kubernetes permissions are used to authorize every MCP tool invocation. These permissions are managed within k8m, which supports user- and group-level authorization with cluster-specific roles such as read-only, exec, and admin. As a result, an AI model cannot bypass established security boundaries or perform actions the user is not authorized to do. For instance, if a developer with read-only access to the 'production' cluster asks an AI tool connected via MCP to delete a deployment there, k8m's MCP server rejects the delete_k8s_resource tool call because the user lacks the necessary permissions. This lets administrators delegate Kubernetes operations to AI securely and stay compliant without maintaining a separate permission system for AI interactions.
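The permission check can be sketched as a gate that runs before every tool invocation. This is an illustrative model only: it assumes the three roles form a hierarchy (read-only < exec < admin), that a user's effective role on a cluster is the highest one granted directly or through any group, and that unknown tools are denied by default. The required role for each tool, and the tool names get_k8s_resource and exec_in_pod, are hypothetical; only scale_deployment and delete_k8s_resource appear in the text.

```python
from dataclasses import dataclass, field
from enum import IntEnum
from typing import Dict, List, Optional


class Role(IntEnum):
    # Assumed ordering: each role includes the rights of the roles below it.
    READ_ONLY = 1
    EXEC = 2
    ADMIN = 3


# Hypothetical minimum role per tool.
REQUIRED_ROLE: Dict[str, Role] = {
    "get_k8s_resource": Role.READ_ONLY,   # hypothetical tool name
    "exec_in_pod": Role.EXEC,             # hypothetical tool name
    "scale_deployment": Role.ADMIN,
    "delete_k8s_resource": Role.ADMIN,
}


@dataclass
class User:
    name: str
    roles: Dict[str, Role] = field(default_factory=dict)  # cluster -> role
    groups: List[str] = field(default_factory=list)


class PermissionDenied(Exception):
    pass


def effective_role(user: User, cluster: str,
                   group_roles: Dict[str, Dict[str, Role]]) -> Optional[Role]:
    """Highest role on `cluster` granted to the user directly or via a group."""
    candidates = [user.roles.get(cluster)]
    candidates += [group_roles.get(g, {}).get(cluster) for g in user.groups]
    granted = [r for r in candidates if r is not None]
    return max(granted) if granted else None


def authorize_tool_call(user: User, cluster: str, tool: str,
                        group_roles: Dict[str, Dict[str, Role]]) -> None:
    """Raise PermissionDenied unless the user's own role allows the tool."""
    required = REQUIRED_ROLE.get(tool)
    if required is None:
        raise PermissionDenied(f"unknown tool {tool!r}")  # deny by default
    role = effective_role(user, cluster, group_roles)
    if role is None or role < required:
        raise PermissionDenied(
            f"{user.name} lacks {required.name} on {cluster} for {tool}")


if __name__ == "__main__":
    dev = User("dev", roles={"production": Role.READ_ONLY})
    authorize_tool_call(dev, "production", "get_k8s_resource", {})  # allowed
    try:
        authorize_tool_call(dev, "production", "delete_k8s_resource", {})
    except PermissionDenied as err:
        print("rejected:", err)
```

Denying unknown tools by default keeps a newly added tool from running before someone has decided which role it requires.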