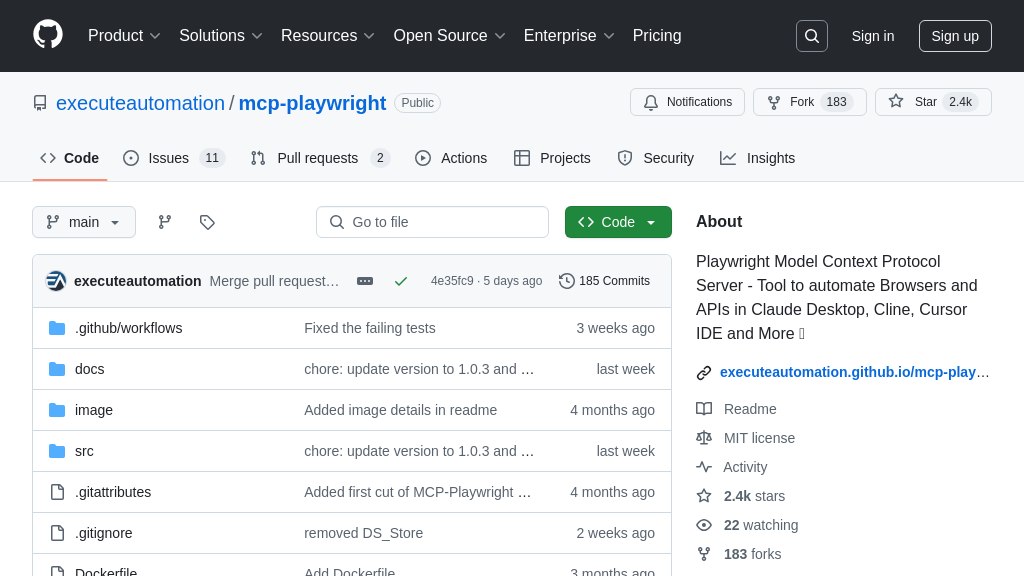

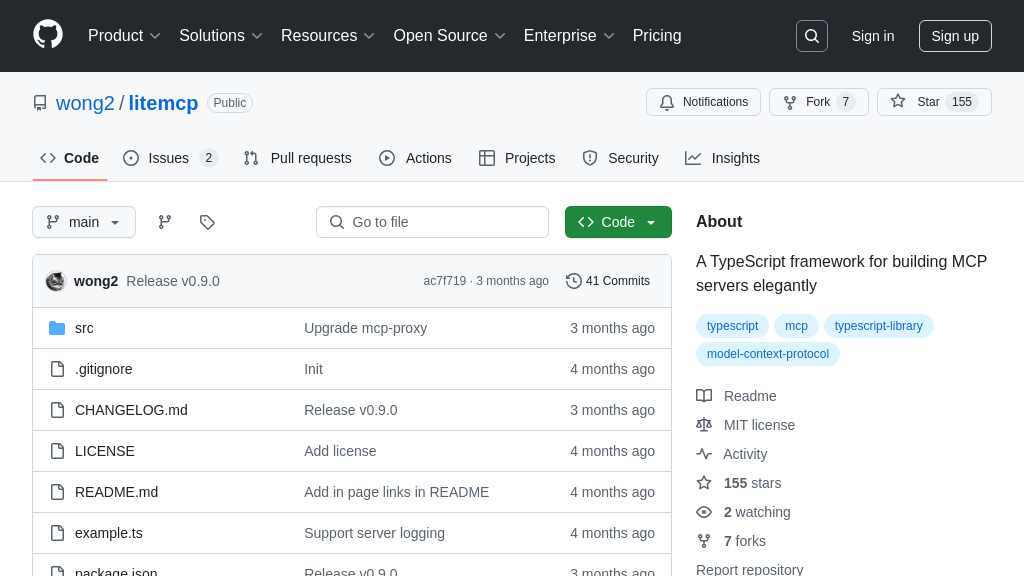

litemcp

LiteMCP: TypeScript framework simplifying MCP server creation for AI model integration.

litemcp Solution Overview

LiteMCP is a TypeScript framework for building MCP (Model Context Protocol) servers, which act as interfaces between AI models and the external world. It offers simple abstractions for defining Tools, Resources, and Prompts, making it easier to connect AI models to external data sources and services.

Key features include full TypeScript support, built-in logging and error handling, and a CLI for testing and debugging. Tools let AI models execute specific functions, Resources provide access to external data, and Prompts standardize interactions with LLMs. LiteMCP supports both standard input/output (stdio) and Server-Sent Events (SSE) transports, which gives flexibility in deployment. Because the framework handles the protocol-level integration details, developers can concentrate on the application logic of their AI-powered tools.

litemcp Key Capabilities

Simple Tool Definition

LiteMCP gives tool definitions a clear, concise structure. A single addTool call specifies the tool's name, description, expected parameters (using Zod for schema validation), and execution logic, which reduces boilerplate and keeps the available tools easy to manage and maintain. The execute function receives the validated arguments, so developers can focus on the tool's core functionality. For example, a tool could fetch data from an external API, perform a calculation, or interact with a database. Tools defined this way expose functionality to AI models in a controlled and predictable manner.

Technically, the tool definition is converted into a standardized format that the MCP client can understand, ensuring seamless communication between the AI model and the server.
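As a rough illustration of that conversion, the sketch below turns a tool definition into a descriptor with name, description, and inputSchema fields, the shape MCP uses when a client lists tools. It is deliberately self-contained and does not use LiteMCP or Zod: ToolDefinition, ParamSpec, toDescriptor, and callTool are hypothetical names, and the hand-written type check only stands in for real schema validation.

```typescript
// Hypothetical, dependency-free sketch; LiteMCP itself relies on Zod schemas.
type ParamType = "string" | "number" | "boolean";

interface ParamSpec {
  name: string;
  type: ParamType;
}

interface ToolDefinition {
  name: string;
  description: string;
  parameters: ParamSpec[]; // every parameter is treated as required here
  execute: (args: Record<string, unknown>) => unknown;
}

interface ToolDescriptor {
  name: string;
  description: string;
  inputSchema: {
    type: "object";
    properties: Record<string, { type: ParamType }>;
    required: string[];
  };
}

// Convert a definition into a JSON-Schema-style descriptor a client can list.
function toDescriptor(tool: ToolDefinition): ToolDescriptor {
  const properties: Record<string, { type: ParamType }> = {};
  const required: string[] = [];
  for (const param of tool.parameters) {
    properties[param.name] = { type: param.type };
    required.push(param.name);
  }
  return {
    name: tool.name,
    description: tool.description,
    inputSchema: { type: "object", properties, required },
  };
}

// Check raw arguments first, then pass only the checked values to execute.
function callTool(tool: ToolDefinition, rawArgs: Record<string, unknown>): unknown {
  const checked: Record<string, unknown> = {};
  for (const param of tool.parameters) {
    const value = rawArgs[param.name];
    if (typeof value !== param.type) {
      throw new Error(`Invalid argument "${param.name}": expected ${param.type}`);
    }
    checked[param.name] = value;
  }
  return tool.execute(checked);
}

const addNumbers: ToolDefinition = {
  name: "add",
  description: "Add two numbers",
  parameters: [
    { name: "a", type: "number" },
    { name: "b", type: "number" },
  ],
  // The casts are safe because callTool has already checked both types.
  execute: (args) => (args["a"] as number) + (args["b"] as number),
};
```

Calling toDescriptor(addNumbers) yields the listing entry, while callTool(addNumbers, { a: 2, b: 3 }) runs the execution logic only after the arguments pass the check.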

Flexible Resource Provisioning

LiteMCP offers a flexible way to provide resources to AI models. Resources can represent any kind of data, from file contents and screenshots to log files and database records. Each resource is identified by a unique URI and can contain either text or binary data. The addResource function lets developers define the resource's URI, name, MIME type, and a load function that retrieves the resource's content, so a wide variety of data sources can be exposed to AI models in a consistent way. For instance, a resource could provide the current weather conditions, the contents of a specific file, or the latest news headlines. Binary data is returned as a base64-encoded string, which lets AI models process images, audio, and other non-textual data.

The load function is asynchronous, so it can retrieve data from files, network services, or databases without blocking the server's event loop while the request is pending.
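The resource shape described above can be sketched without any dependencies. This is an illustrative model, not LiteMCP's implementation: ResourceDefinition, ResourceContent, readResource, the local addResource function, and the two example URIs are all hypothetical stand-ins chosen to mirror the fields named in the text (URI, name, MIME type, and an asynchronous load function).

```typescript
// Hypothetical, dependency-free sketch of the resource shape described above.
// A resource yields either text or binary data as a base64-encoded string.
type ResourceContent = { text: string } | { blob: string };

interface ResourceDefinition {
  uri: string;
  name: string;
  mimeType: string;
  load: () => Promise<ResourceContent>;
}

const resources: ResourceDefinition[] = [];

// Register a resource, enforcing that each URI is unique.
function addResource(resource: ResourceDefinition): void {
  if (resources.some((r) => r.uri === resource.uri)) {
    throw new Error(`Duplicate resource URI: ${resource.uri}`);
  }
  resources.push(resource);
}

// Look a resource up by URI and load its content on demand.
async function readResource(
  uri: string
): Promise<{ uri: string; mimeType: string; content: ResourceContent }> {
  const match = resources.filter((r) => r.uri === uri)[0];
  if (match === undefined) {
    throw new Error(`Unknown resource: ${uri}`);
  }
  const content = await match.load();
  return { uri: match.uri, mimeType: match.mimeType, content };
}

addResource({
  uri: "file:///logs/app.log",
  name: "Application Logs",
  mimeType: "text/plain",
  load: async () => ({ text: "Example log content" }),
});

addResource({
  uri: "memory://greeting.bin",
  name: "Greeting bytes",
  mimeType: "application/octet-stream",
  // "aGVsbG8=" is the base64 encoding of the ASCII bytes of "hello".
  load: async () => ({ blob: "aGVsbG8=" }),
});
```

Because load is only invoked inside readResource, content is fetched when a client asks for it rather than when the resource is registered.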

Reusable Prompt Templates

LiteMCP's prompt functionality allows developers to define reusable prompt templates and workflows that clients can easily surface to users and LLMs. This provides a powerful way to standardize and share common LLM interactions. By defining prompts within the LiteMCP server, developers can ensure consistency and control over how AI models are instructed. Each prompt can accept arguments, allowing for dynamic content injection and customization. For example, a prompt could be created to generate a summary of a document, translate text into another language, or answer a specific question. The load function dynamically constructs the prompt based on the provided arguments.

This feature is particularly useful for workflows that involve multiple steps and interactions with AI models. Because the prompt templates live on the server, they can be versioned and managed centrally, so every client picks up the same, current version of each prompt.
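A prompt template with arguments can be modeled as follows. This is a minimal sketch under stated assumptions rather than LiteMCP's own code: PromptDefinition, PromptArgument, renderPrompt, and the summarize example are hypothetical names, and the required-argument check is one plausible way to guard the load function.

```typescript
// Hypothetical, dependency-free sketch of a reusable prompt template.
interface PromptArgument {
  name: string;
  description: string;
  required?: boolean;
}

interface PromptDefinition {
  name: string;
  description: string;
  arguments: PromptArgument[];
  // Builds the final prompt text from the supplied arguments.
  load: (args: Record<string, string | undefined>) => Promise<string>;
}

// Reject calls that omit a required argument, then build the prompt.
async function renderPrompt(
  prompt: PromptDefinition,
  args: Record<string, string | undefined>
): Promise<string> {
  for (const arg of prompt.arguments) {
    if (arg.required === true && args[arg.name] === undefined) {
      throw new Error(`Missing required argument: ${arg.name}`);
    }
  }
  return prompt.load(args);
}

const summarize: PromptDefinition = {
  name: "summarize",
  description: "Summarize a document",
  arguments: [
    { name: "document", description: "Text to summarize", required: true },
    { name: "language", description: "Output language (optional)" },
  ],
  load: async (args) => {
    // Fall back to English when no language is given.
    const language = args["language"] ?? "English";
    return `Summarize the following document in ${language}:\n\n${args["document"]}`;
  },
};
```

Keeping the template text inside load means a wording change is made once on the server and reaches every client that requests the prompt by name.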

Built-in Logging and Error Handling

LiteMCP includes built-in logging and error handling capabilities, simplifying the process of monitoring and debugging MCP servers. The server.logger object provides methods for logging messages at different levels (debug, info, warn, error), allowing developers to track the server's activity and identify potential issues. These log messages can be sent to the client, providing valuable insights into the server's operation. The built-in error handling mechanism automatically catches and handles exceptions, preventing the server from crashing and providing informative error messages to the client.

For example, a tool could log the start and end times of its execution, along with any relevant parameters or results. This information can be used to track the tool's performance and identify potential bottlenecks. Similarly, the error handling mechanism can catch exceptions thrown by the tool and log them, providing valuable information for debugging.
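The pattern in that example, logging around a tool run and converting exceptions into error results, might look like the sketch below. It is an assumption-laden illustration, not LiteMCP's internals: the Logger class here only stores entries in memory (a real server would forward them to the client), and runTool and ToolResult are hypothetical names.

```typescript
// Hypothetical, dependency-free sketch of leveled logging plus error capture.
type LogLevel = "debug" | "info" | "warn" | "error";

interface LogEntry {
  level: LogLevel;
  message: string;
}

class Logger {
  // Entries are kept in memory here; a real server would send them to the client.
  readonly entries: LogEntry[] = [];

  debug(message: string): void {
    this.log("debug", message);
  }
  info(message: string): void {
    this.log("info", message);
  }
  warn(message: string): void {
    this.log("warn", message);
  }
  error(message: string): void {
    this.log("error", message);
  }

  private log(level: LogLevel, message: string): void {
    this.entries.push({ level, message });
  }
}

type ToolResult = { ok: true; value: unknown } | { ok: false; error: string };

// Run a tool, log its start and duration, and turn exceptions into results
// so that a failing tool does not bring down the caller.
function runTool(logger: Logger, name: string, execute: () => unknown): ToolResult {
  const startedAt = Date.now();
  logger.debug(`${name}: started`);
  try {
    const value = execute();
    logger.info(`${name}: finished in ${Date.now() - startedAt} ms`);
    return { ok: true, value };
  } catch (err) {
    const message = err instanceof Error ? err.message : String(err);
    logger.error(`${name}: failed: ${message}`);
    return { ok: false, error: message };
  }
}
```

Returning a tagged result instead of rethrowing keeps the failure visible to the caller and in the log while the process keeps running.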

SSE Transport Support

LiteMCP offers built-in support for Server-Sent Events (SSE) transport, in addition to the default standard input/output (stdio) transport. SSE is a lightweight mechanism that lets the server push updates to the client over a long-lived HTTP connection. This is particularly useful for applications that require continuous data streaming, such as live monitoring dashboards or real-time data analysis tools. With SSE transport configured, clients receive updates as soon as they become available, without constant polling.

The SSE endpoint can be easily configured using the start method, allowing developers to specify the endpoint URL and port number. This flexibility enables developers to integrate LiteMCP servers with existing infrastructure and applications.
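To make the transport more concrete, the sketch below shows how an SSE message is framed on the wire and how an endpoint and port combine into a URL. The framing (event and data fields, terminated by a blank line) follows the standard SSE format; everything else is an assumption for illustration. In particular, SseOptions, sseUrl, and formatSseEvent are hypothetical names, and the option shape is not taken from LiteMCP's start method.

```typescript
// Hypothetical option shape; the real start method's options may differ.
interface SseOptions {
  endpoint: string; // path the client connects to, e.g. "/sse"
  port: number;
}

// Build the URL a client would connect to for a given host.
function sseUrl(host: string, options: SseOptions): string {
  return `http://${host}:${options.port}${options.endpoint}`;
}

// Frame one message in the standard SSE wire format: an optional "event:"
// line, one "data:" line per line of payload, then a blank line.
function formatSseEvent(data: string, event?: string): string {
  const lines: string[] = [];
  if (event !== undefined) {
    lines.push(`event: ${event}`);
  }
  for (const line of data.split("\n")) {
    lines.push(`data: ${line}`);
  }
  return lines.join("\n") + "\n\n";
}

const options: SseOptions = { endpoint: "/sse", port: 8080 };
const url = sseUrl("localhost", options);
const frame = formatSseEvent(JSON.stringify({ status: "ready" }), "message");
```

The trailing blank line is what tells the client that one event has ended, which is why multi-line payloads are split into several data lines instead of being sent with raw newlines.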