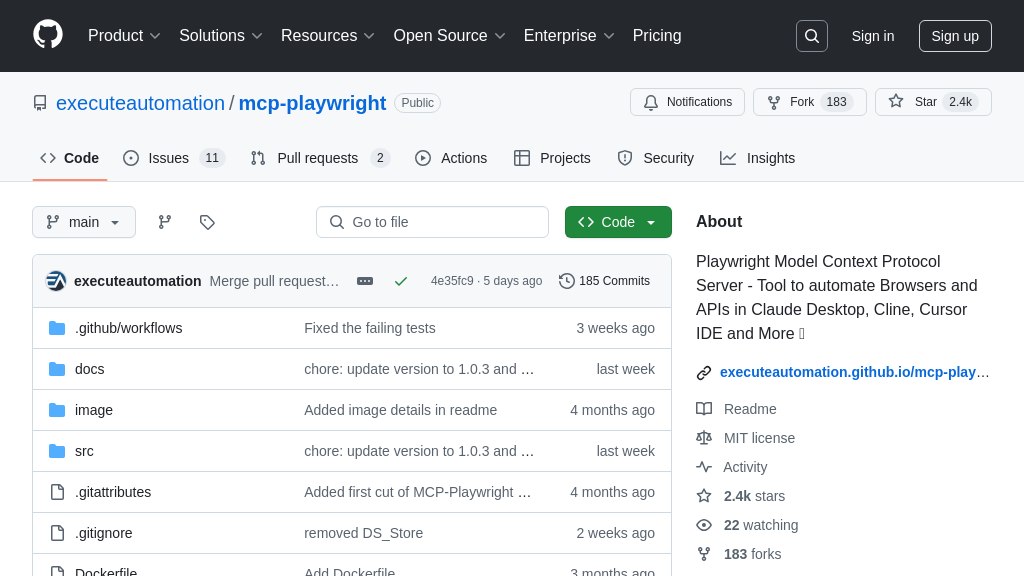

mcp-go

MCP Go: A Go library that simplifies building MCP servers, so LLMs can work with external data and tools.

mcp-go Solution Overview

MCP Go is a Go library for building Model Context Protocol (MCP) servers, which let Large Language Models (LLMs) interact with external resources. It handles the protocol details and server management, so developers can concentrate on the tools and resources they expose to LLMs.

Key features include a high-level interface for rapid development, minimal boilerplate for server creation, and a comprehensive implementation of the core MCP specification. An MCP Go server exposes data through Resources (akin to GET endpoints), functionality through Tools (akin to POST endpoints), and reusable interaction patterns through Prompts.

With MCP Go, developers can quickly build standardized, secure interfaces through which LLMs access external data and services, which addresses the problem of connecting AI models to the outside world. Servers communicate over standard input/output, so an MCP client can launch one as a subprocess, which keeps deployment simple.
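To make the standard input/output integration concrete, here is a minimal, stdlib-only sketch of the kind of message loop that a library such as MCP Go takes care of. It does not use MCP Go's API: the `serve` and `handleLine` names are hypothetical, and the sketch assumes the MCP stdio convention of one JSON-RPC 2.0 message per line. It answers only the `ping` method.

```go
package main

import (
	"bufio"
	"encoding/json"
	"fmt"
	"io"
	"os"
)

// request and response model the JSON-RPC 2.0 envelope used by MCP messages.
type request struct {
	JSONRPC string          `json:"jsonrpc"`
	ID      json.RawMessage `json:"id,omitempty"`
	Method  string          `json:"method"`
}

type rpcError struct {
	Code    int    `json:"code"`
	Message string `json:"message"`
}

type response struct {
	JSONRPC string          `json:"jsonrpc"`
	ID      json.RawMessage `json:"id"`
	Result  any             `json:"result,omitempty"`
	Error   *rpcError       `json:"error,omitempty"`
}

// handleLine (hypothetical helper) processes one message and returns the reply.
// The boolean is false when no reply is due, for example for a notification.
func handleLine(line []byte) ([]byte, bool) {
	var req request
	resp := response{JSONRPC: "2.0"}
	if err := json.Unmarshal(line, &req); err != nil {
		// -32700 is the JSON-RPC "parse error" code.
		resp.ID = json.RawMessage("null")
		resp.Error = &rpcError{Code: -32700, Message: "parse error"}
	} else if len(req.ID) == 0 {
		return nil, false // notifications carry no id and get no reply
	} else {
		resp.ID = req.ID
		switch req.Method {
		case "ping":
			resp.Result = struct{}{} // ping is answered with an empty result
		default:
			// -32601 is the JSON-RPC "method not found" code.
			resp.Error = &rpcError{Code: -32601, Message: "method not found: " + req.Method}
		}
	}
	out, err := json.Marshal(resp)
	if err != nil {
		return nil, false
	}
	return out, true
}

// serve reads one message per line from r and writes one reply per line to w.
func serve(r io.Reader, w io.Writer) error {
	scanner := bufio.NewScanner(r)
	for scanner.Scan() {
		line := scanner.Bytes()
		if len(line) == 0 {
			continue
		}
		if reply, ok := handleLine(line); ok {
			if _, err := fmt.Fprintf(w, "%s\n", reply); err != nil {
				return err
			}
		}
	}
	return scanner.Err()
}

func main() {
	// Diagnostics go to stderr so that stdout carries only protocol messages.
	if err := serve(os.Stdin, os.Stdout); err != nil {
		fmt.Fprintln(os.Stderr, "server error:", err)
		os.Exit(1)
	}
}
```

Splitting the per-message logic from the I/O loop keeps the handler easy to exercise without a real client attached.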

mcp-go Key Capabilities

Simplified Server Creation

mcp-go abstracts away the complexities of MCP, enabling developers to create servers with minimal boilerplate code. Instead of dealing with low-level protocol details, developers define resources, tools, and their associated logic. The server.NewMCPServer function provides a high-level interface for initializing an MCP server and handles request parsing, response formatting, and server lifecycle management. This reduces the time and effort required to expose data and functionality to LLM applications.

For example, a developer can quickly create a server that exposes a weather API to an LLM. They would define a "get_weather" tool with parameters like "city" and "date," and then implement a handler function that fetches weather data from an external source. mcp-go handles the communication between the LLM and the weather API, allowing the LLM to seamlessly access real-time weather information.
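The weather scenario can be sketched in plain Go to show what sits between the LLM's request and the handler. This is an illustration under stated assumptions, not mcp-go's API: the `registry`, `toolHandler`, and `getWeather` names are hypothetical, the weather lookup is a stub, and the structs mirror the `tools/call` parameters and text-content result shape defined by MCP.

```go
package main

import (
	"encoding/json"
	"errors"
	"fmt"
)

// toolHandler receives the decoded arguments of a tool call and returns text.
type toolHandler func(args map[string]any) (string, error)

// callParams mirrors the params of an MCP "tools/call" request.
type callParams struct {
	Name      string         `json:"name"`
	Arguments map[string]any `json:"arguments"`
}

// content and callResult mirror a tool result that carries text content.
type content struct {
	Type string `json:"type"`
	Text string `json:"text"`
}

type callResult struct {
	Content []content `json:"content"`
	IsError bool      `json:"isError"`
}

// registry maps tool names to handlers (hypothetical type).
type registry map[string]toolHandler

// call decodes the params, runs the named tool, and wraps the outcome.
func (r registry) call(rawParams []byte) (callResult, error) {
	var p callParams
	if err := json.Unmarshal(rawParams, &p); err != nil {
		return callResult{}, fmt.Errorf("invalid params: %w", err)
	}
	handler, ok := r[p.Name]
	if !ok {
		return callResult{}, fmt.Errorf("unknown tool %q", p.Name)
	}
	text, err := handler(p.Arguments)
	if err != nil {
		// Tool failures travel inside the result so the model can read them.
		return callResult{
			Content: []content{{Type: "text", Text: err.Error()}},
			IsError: true,
		}, nil
	}
	return callResult{Content: []content{{Type: "text", Text: text}}}, nil
}

// getWeather is a stub; a real handler would query a weather service here.
func getWeather(args map[string]any) (string, error) {
	city, ok := args["city"].(string)
	if !ok || city == "" {
		return "", errors.New(`missing required parameter "city"`)
	}
	date, _ := args["date"].(string)
	if date == "" {
		date = "today"
	}
	return fmt.Sprintf("forecast for %s on %s: (stub data)", city, date), nil
}

func main() {
	tools := registry{"get_weather": getWeather}
	res, err := tools.call([]byte(
		`{"name":"get_weather","arguments":{"city":"Oslo","date":"2025-01-01"}}`))
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	out, _ := json.Marshal(res)
	fmt.Println(string(out))
}
```

With a library such as mcp-go, only the equivalent of `getWeather` and the tool's declaration would need to be written by hand.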

Tool and Resource Definition

mcp-go provides a clear and concise way to define tools and resources, which are the fundamental building blocks of an MCP server. The mcp.NewTool and related functions allow developers to specify the name, description, and input parameters of each tool. Similarly, resources can be defined to expose data to LLMs. The library supports various data types for input parameters, including strings, numbers, and enums, allowing for flexible and well-defined interfaces. The ability to define required and optional parameters, along with descriptions, enhances the usability and discoverability of the tools and resources.

Consider a scenario where an LLM needs to access a user's profile information. A developer can define a "get_user_profile" resource with a "user_id" parameter; because MCP resources are addressed by URI, the ID would typically be carried in the resource's URI. The mcp-go library ensures that the "user_id" is properly validated and passed to the handler function, which retrieves the user's profile from a database. The LLM can then use this information to personalize its responses or perform other tasks.
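The idea of declaring typed, required, and optional parameters and checking them before the handler runs can be sketched without the library. The `param` type and `validate` function below are hypothetical and are not mcp-go's API; the "format" and "max_items" parameters are invented for illustration. The sketch assumes arguments were decoded with encoding/json, which represents JSON numbers as float64.

```go
package main

import "fmt"

// paramKind names the supported parameter types in this sketch.
type paramKind string

const (
	kindString paramKind = "string"
	kindNumber paramKind = "number"
)

// param describes one input parameter (hypothetical type).
type param struct {
	Name        string
	Kind        paramKind
	Description string
	Required    bool
	Enum        []string // allowed values for string params; empty means any
}

// contains reports whether s is one of the allowed values.
func contains(allowed []string, s string) bool {
	for _, a := range allowed {
		if a == s {
			return true
		}
	}
	return false
}

// validate checks args against the declared params and returns the first problem.
func validate(params []param, args map[string]any) error {
	for _, p := range params {
		v, present := args[p.Name]
		if !present {
			if p.Required {
				return fmt.Errorf("missing required parameter %q", p.Name)
			}
			continue // optional and absent: nothing to check
		}
		switch p.Kind {
		case kindString:
			s, ok := v.(string)
			if !ok {
				return fmt.Errorf("parameter %q must be a string", p.Name)
			}
			if len(p.Enum) > 0 && !contains(p.Enum, s) {
				return fmt.Errorf("parameter %q must be one of %v", p.Name, p.Enum)
			}
		case kindNumber:
			if _, ok := v.(float64); !ok {
				return fmt.Errorf("parameter %q must be a number", p.Name)
			}
		}
	}
	return nil
}

func main() {
	// Declaration for the profile lookup; "format" and "max_items" are invented.
	profileParams := []param{
		{Name: "user_id", Kind: kindString, Description: "ID of the user", Required: true},
		{Name: "format", Kind: kindString, Description: "Level of detail", Enum: []string{"summary", "full"}},
		{Name: "max_items", Kind: kindNumber, Description: "Upper bound on list fields"},
	}
	fmt.Println(validate(profileParams, map[string]any{"user_id": "42", "format": "full"}))
	fmt.Println(validate(profileParams, map[string]any{"format": "xml"}))
}
```

Keeping the declaration as data means the same list can drive both validation and the description shown to the LLM.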

Request Hooks for Observability

mcp-go lets developers hook into the request lifecycle through Hooks objects. By implementing callbacks for the various stages of request processing, developers can observe how their MCP servers behave. This enables telemetry such as request counts, error rates, and agent identities, and it gives developers a mechanism for monitoring and debugging servers to keep them reliable and performant.

For instance, a developer can use request hooks to log all incoming requests, track the execution time of each tool, and identify improperly formatted requests. This information can be used to optimize the server's performance, troubleshoot errors, and better understand how LLMs interact with the exposed tools and resources. Hooks are registered by passing the server.WithHooks option when creating the server.