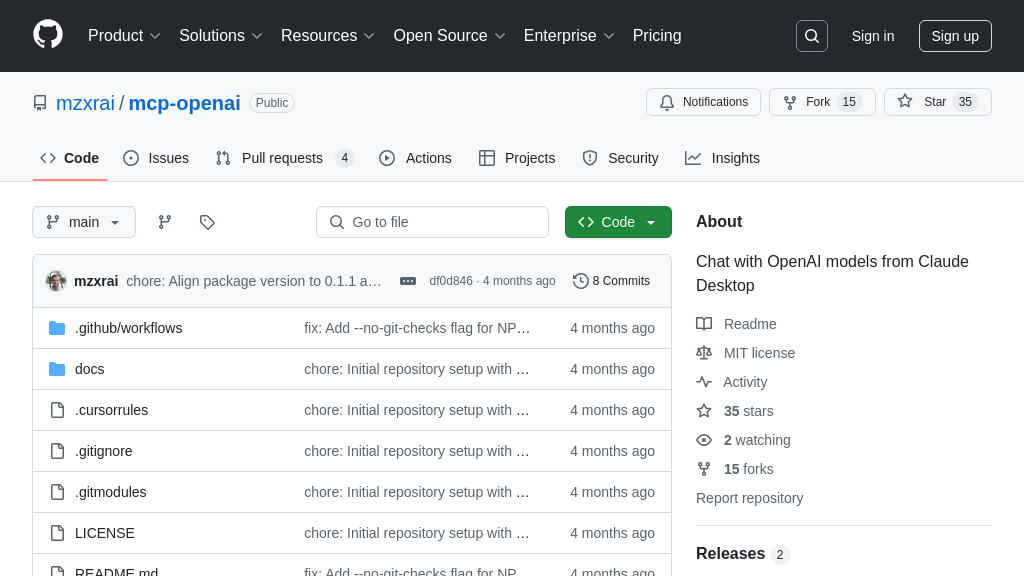

mcp-openai

mcp-openai: An MCP server that lets Claude call OpenAI models such as gpt-4o from inside your Claude workflows.

mcp-openai Solution Overview

MCP OpenAI Server is an MCP server that makes OpenAI's models available inside your Claude workflows. With it, Claude can query gpt-4o, gpt-4o-mini, o1-preview, and o1-mini on your behalf and use their answers in the conversation.

The core functionality is a simple messaging interface that sends prompts to OpenAI's chat completion API. In the mcpServers setting of your Claude Desktop application, you specify the command that launches the OpenAI MCP server and set your OpenAI API key. Once configured, you can invoke OpenAI models from within Claude using plain-language instructions, such as "Can you ask o1 what it thinks about this problem?". This lets you consult several AI models from a single interface. The server is built with Node.js and communicates with the Claude Desktop app via the Model Context Protocol (MCP).
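As a rough sketch of the configuration step described above, the snippet below builds an mcpServers entry as a TypeScript object and prints it as JSON suitable for pasting into the Claude Desktop configuration. The npm package name passed to npx, the `-y` flag, and the `OPENAI_API_KEY` variable name are assumptions made for illustration; the project's own README is the authority on the exact values.

```typescript
// Shape of the relevant part of the Claude Desktop configuration.
interface McpServerEntry {
  command: string;             // executable that launches the server
  args: string[];              // arguments passed to that executable
  env: Record<string, string>; // environment variables for the server process
}

interface ClaudeDesktopConfig {
  mcpServers: Record<string, McpServerEntry>;
}

// Assumptions (not stated in the text): the package name and the
// environment variable name below are placeholders.
const PACKAGE_NAME = "mcp-openai";
const API_KEY_VAR = "OPENAI_API_KEY";

function buildConfig(apiKey: string): ClaudeDesktopConfig {
  return {
    mcpServers: {
      "mcp-openai": {
        command: "npx",
        args: ["-y", PACKAGE_NAME], // -y skips npx's install prompt
        env: { [API_KEY_VAR]: apiKey },
      },
    },
  };
}

// Serialize the entry so it can be pasted into the config file.
const configJson = JSON.stringify(buildConfig("your-api-key-here"), null, 2);
console.log(configJson);
```

Claude Desktop reads this file at startup, so the application typically needs a restart after the entry is added.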

mcp-openai Key Capabilities

Seamless OpenAI Model Integration

The mcp-openai server connects Claude to OpenAI, so users can query OpenAI's models without leaving the Claude environment. There is no need to switch between platforms or copy text back and forth by hand. In the claude_desktop_config.json file, users register the mcp-openai server and supply its OpenAI API key; Claude can then route requests to OpenAI models through the server. Supported models include gpt-4o, gpt-4o-mini, o1-preview, and o1-mini, so users can pick the one that suits each task.

For example, a user working on a complex problem in Claude can ask "What does gpt-4o think about this?" to get a response from OpenAI's gpt-4o model without leaving the Claude interface. Claude passes the request to the server over MCP, and the server's reply appears in the same conversation.

Simplified Model Access via MCP

mcp-openai exposes OpenAI models through the standardized interface of the Model Context Protocol (MCP). The server acts as an intermediary: it receives requests from Claude, calls OpenAI's API, and returns the response. Users therefore do not have to write API calls or handle authentication headers and request formats themselves, and can focus on the task at hand.

Consider a data scientist who wants to compare the outputs of different AI models. With mcp-openai, they can switch between models such as gpt-4o and o1-preview by naming the model in their instruction to Claude, without modifying code or configuration. The request is formatted and routed over MCP, and each response is returned into the same Claude conversation.

Dynamic Model Selection

mcp-openai supports dynamic model selection: users can specify, from within Claude, which OpenAI model should handle a particular request. This makes it possible to match the model to the task and to trade off answer quality, latency, and cost. The supported models are gpt-4o, gpt-4o-mini, o1-preview, and o1-mini. The server forwards each request to the model named in the user's instruction.

For instance, a user might use gpt-4o for complex reasoning tasks and gpt-4o-mini for simpler tasks where speed is more important than accuracy. This dynamic selection is achieved through the openai_chat tool, which accepts a model argument to specify the desired model. The server then uses this information to construct the appropriate API request to OpenAI.
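To make the model argument concrete, here is a minimal sketch of the MCP `tools/call` request that a client such as Claude Desktop might send for the openai_chat tool. The JSON-RPC envelope follows the MCP specification; the `role`/`content` message fields are an assumption borrowed from OpenAI's chat format, and `buildToolCall` is a hypothetical helper, not part of the project.

```typescript
// Assumed message shape, mirroring OpenAI's chat format.
interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

// Arguments of the openai_chat tool: messages plus an optional model.
interface OpenAiChatArgs {
  messages: ChatMessage[];
  model?: string;
}

// JSON-RPC 2.0 request for the MCP "tools/call" method.
interface ToolCallRequest {
  jsonrpc: "2.0";
  id: number;
  method: "tools/call";
  params: { name: string; arguments: OpenAiChatArgs };
}

// Hypothetical helper that wraps tool arguments in a tools/call request.
function buildToolCall(id: number, args: OpenAiChatArgs): ToolCallRequest {
  return {
    jsonrpc: "2.0",
    id,
    method: "tools/call",
    params: { name: "openai_chat", arguments: args },
  };
}

// A quick task sent to the smaller model.
const fastCall = buildToolCall(1, {
  model: "gpt-4o-mini",
  messages: [{ role: "user", content: "Summarize this paragraph in one sentence." }],
});

// A harder task with no model given, leaving the choice to the server default.
const defaultCall = buildToolCall(2, {
  messages: [{ role: "user", content: "Find the flaw in this proof sketch." }],
});

console.log(JSON.stringify(fastCall, null, 2));
console.log(JSON.stringify(defaultCall, null, 2));
```

In practice Claude constructs this request itself from the user's instruction; the sketch only shows where the model name ends up.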

Technical Implementation

mcp-openai is built with Node.js, whose asynchronous I/O lets it handle concurrent requests efficiently. The server communicates with Claude Desktop via the Model Context Protocol (MCP), a standardized protocol for connecting AI applications to external tools. The core functionality is encapsulated in the openai_chat tool, which takes an array of messages and an optional model parameter as input. The tool builds a request to OpenAI's chat completion endpoint using the specified model, defaulting to gpt-4o if none is given. The server also includes basic error handling for failures in the OpenAI API. Running the server through npx removes the need for a separate install step, since npx fetches the package on demand; whether the newest release is used each time depends on the package version given in the command.
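The flow described above (default model, request construction, basic error handling) can be sketched roughly as follows. This is not the project's actual source: the function names, the check against a supported-model list, and the injected `send` transport are assumptions made so the example stays self-contained and free of network calls. The endpoint URL and Bearer header follow OpenAI's public API, and the result shape follows MCP's tool result format.

```typescript
const SUPPORTED_MODELS = ["gpt-4o", "gpt-4o-mini", "o1-preview", "o1-mini"] as const;
type SupportedModel = (typeof SUPPORTED_MODELS)[number];
const DEFAULT_MODEL: SupportedModel = "gpt-4o";

interface ChatArgs {
  messages: { role: "system" | "user" | "assistant"; content: string }[];
  model?: string;
}

interface HttpRequest {
  url: string;
  method: "POST";
  headers: Record<string, string>;
  body: string;
}

// MCP tool result: text content plus an optional error flag.
interface ToolResult {
  content: { type: "text"; text: string }[];
  isError?: boolean;
}

// Fall back to gpt-4o when no model is given; reject unknown names (assumed behavior).
function resolveModel(model?: string): SupportedModel {
  if (model === undefined) return DEFAULT_MODEL;
  const match = SUPPORTED_MODELS.find((m) => m === model);
  if (match === undefined) throw new Error(`Unsupported model: ${model}`);
  return match;
}

// Build the HTTP request for OpenAI's chat completion endpoint.
function buildChatRequest(args: ChatArgs, apiKey: string): HttpRequest {
  return {
    url: "https://api.openai.com/v1/chat/completions",
    method: "POST",
    headers: {
      "Content-Type": "application/json",
      Authorization: `Bearer ${apiKey}`,
    },
    body: JSON.stringify({ model: resolveModel(args.model), messages: args.messages }),
  };
}

// Handle one openai_chat call. `send` is a hypothetical transport that performs
// the HTTP call and resolves to the assistant's reply text.
async function handleOpenAiChat(
  args: ChatArgs,
  apiKey: string,
  send: (req: HttpRequest) => Promise<string>,
): Promise<ToolResult> {
  try {
    const text = await send(buildChatRequest(args, apiKey));
    return { content: [{ type: "text", text }] };
  } catch (err) {
    // Report the failure as a tool result instead of crashing the server.
    const message = err instanceof Error ? err.message : String(err);
    return { content: [{ type: "text", text: `OpenAI request failed: ${message}` }], isError: true };
  }
}
```

Injecting the transport keeps the request-building logic testable on its own; a real server would pass a function that performs the HTTP call and extracts the reply from OpenAI's response.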