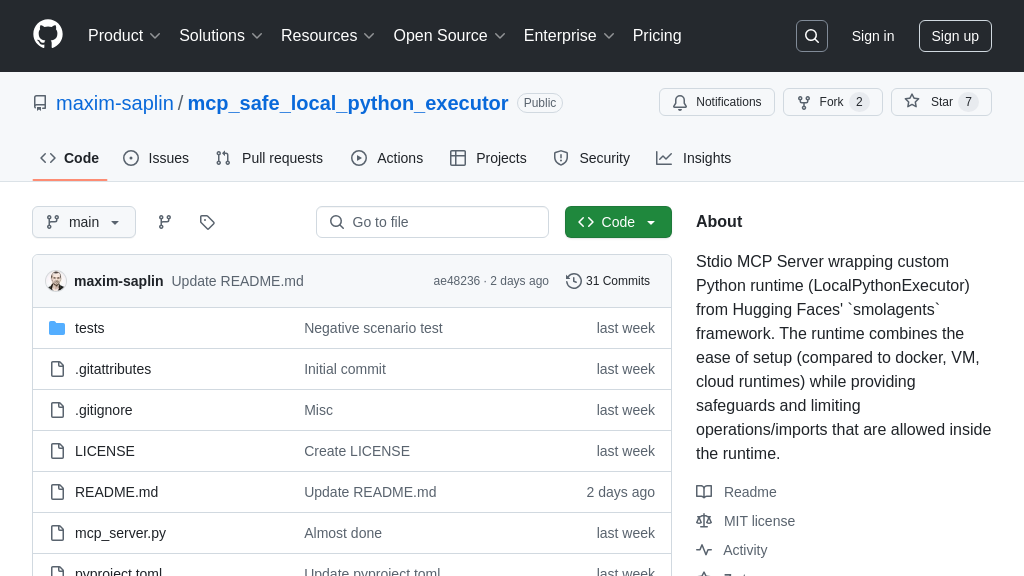

mcp_safe_local_python_executor

mcp_safe_local_python_executor: an MCP server for running LLM-generated Python code locally in a restricted interpreter, giving AI assistants a safer way to execute code.

mcp_safe_local_python_executor Solution Overview

The MCP Safe Local Python Executor is an MCP server that gives AI models a restricted Python execution environment. It is built on the LocalPythonExecutor from Hugging Face's smolagents framework, which lets Large Language Models (LLMs) run Python code locally without requiring Docker or a virtual machine. The server exposes a run_python tool over the Model Context Protocol (MCP), so MCP-compatible clients such as Claude Desktop and Cursor can use it as a built-in code interpreter.

Key features include a restricted execution environment with a limited set of importable modules and no file I/O, which is considerably safer than passing model output to Python's built-in eval(). The server runs inside a uv-managed Python virtual environment (venv), which keeps its dependencies isolated from the rest of the system. Its main strengths are ease of setup and improved security, which make it a good fit for developers who want a quick, low-risk way to add code execution to their AI workflows. To integrate it, register the server in your MCP client's configuration with the command that launches it; because it communicates over stdio rather than the network, there is no server address to configure.

mcp_safe_local_python_executor Key Capabilities

Secure Python Code Execution

The mcp_safe_local_python_executor provides a restricted environment for executing Python code generated by Large Language Models (LLMs). Rather than executing arbitrary code directly, it runs the code through Hugging Face's LocalPythonExecutor from the smolagents framework, a restricted Python interpreter that limits which modules, functions, and resources the code can reach. This reduces the risk of unauthorized access to system resources and limits what malicious code can do, although the restrictions are enforced by the interpreter rather than by operating-system isolation such as a container or VM. By contrast, Python's standard eval() runs code with the full privileges of the host process, so the executor is a much safer way to add code interpretation to LLM applications.
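To make the contrast with eval() concrete, here is a short stdlib-only illustration of what unrestricted evaluation allows. It is not part of the executor; the helper name naive_eval is hypothetical, and the payloads are deliberately harmless.

```python
# Illustration only: eval() runs with the full privileges of the host process.

def naive_eval(expression: str):
    """Evaluate untrusted text the unsafe way (never do this with model output)."""
    return eval(expression)

# A harmless payload that only reads os.name; the same route could list
# directories, read files, or start processes.
payload = "__import__('os').name"
print(naive_eval(payload))

# Stripping builtins is not a reliable fix: introspection on a plain literal
# still walks back to every class loaded in the interpreter.
restricted = eval("().__class__.__base__.__subclasses__()", {"__builtins__": {}})
print(len(restricted) > 0)
```

Because even an empty-builtins eval() leaks this much, a real mitigation has to control every name and attribute the code can reach, which is the job LocalPythonExecutor takes on.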

For example, suppose an LLM is asked to solve a difficult mathematical problem. Instead of executing the generated Python code directly, you can pass it to mcp_safe_local_python_executor, which runs it in a controlled environment and reduces the risk of harm to the system. The executor blocks file I/O and limits imports to a curated set of safe modules. This lets developers add code execution to their LLM applications with far less risk to system integrity.
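The idea of screening generated code before it runs can be sketched with Python's ast module. This is a hypothetical, simplified static check, not smolagents' implementation: LocalPythonExecutor enforces its rules while interpreting each node, and a static scan alone can be bypassed (for example with getattr and computed strings). The deny-list FORBIDDEN_NAMES and the function find_violations are assumptions for illustration.

```python
import ast

# Hypothetical deny-list for illustration; smolagents maintains its own rules.
FORBIDDEN_NAMES = {"open", "eval", "exec", "compile", "__import__", "globals", "locals"}

def find_violations(code: str) -> list[str]:
    """Return human-readable reasons why `code` should not be executed."""
    violations = []
    for node in ast.walk(ast.parse(code)):
        # Direct references such as open(...) or exec(...)
        if isinstance(node, ast.Name) and node.id in FORBIDDEN_NAMES:
            violations.append(f"use of forbidden name '{node.id}' (line {node.lineno})")
        # Dunder attribute access such as ().__class__, a common escape route
        elif isinstance(node, ast.Attribute) and node.attr.startswith("__"):
            violations.append(f"access to dunder attribute '{node.attr}' (line {node.lineno})")
    return violations

safe = "import math\nprint(math.sqrt(2))"
unsafe = "data = open('/etc/passwd').read()"
print(find_violations(safe))    # []
print(find_violations(unsafe))  # ["use of forbidden name 'open' (line 1)"]
```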

MCP-Enabled Tool Exposure

The executor exposes its functionality to LLM applications as an MCP tool, run_python. Because it acts as an MCP server that communicates over standard input/output (stdio), any MCP-compatible client, such as Claude Desktop or Cursor, can connect to it. LLMs can then call the tool for tasks that require code execution, such as data analysis, mathematical calculations, or algorithm implementation. This makes adding a code interpreter to an LLM workflow largely a matter of configuration.
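As a rough sketch of what travels over stdio, the snippet builds the JSON-RPC 2.0 tools/call message an MCP client would send to invoke run_python. It assumes the tool's single argument is named code (confirm against the server's tools/list response) and omits the initialize handshake a real client performs first; make_tool_call is a hypothetical helper.

```python
import json

def make_tool_call(request_id: int, code: str) -> str:
    """Build one newline-delimited JSON-RPC 2.0 message for the MCP stdio transport."""
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {
            "name": "run_python",
            # Assumption: the tool takes a single string argument named "code".
            "arguments": {"code": code},
        },
    }
    # stdio messages must not contain raw newlines; json.dumps escapes the
    # newlines inside `code`, and one trailing newline ends the message.
    return json.dumps(message) + "\n"

line = make_tool_call(1, "import statistics\nstatistics.mean([1, 2, 3, 4])")
print(line, end="")
```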

Imagine a user in Claude Desktop asking for a statistical analysis of some data. Through MCP, Claude Desktop invokes mcp_safe_local_python_executor to run the necessary Python code. The executor performs the calculation and returns the result to Claude Desktop, which presents it to the user. The user thus gets Python code execution without leaving their usual LLM environment. To register the executor as an available tool, the Claude Desktop configuration specifies the command and arguments that launch the mcp_server.py script.
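A minimal sketch of such a configuration entry, generated with Python so the JSON stays valid. The mcpServers/command/args layout follows Claude Desktop's claude_desktop_config.json format; the server name, the project path, and the exact uv arguments are assumptions to adapt to your checkout.

```python
import json
from pathlib import Path

# Placeholder: replace with the directory where you cloned the project.
PROJECT_DIR = Path("/path/to/mcp_safe_local_python_executor")

def claude_desktop_entry(project_dir: Path) -> dict:
    """Return an mcpServers entry that launches mcp_server.py through uv."""
    return {
        "mcpServers": {
            # The key is a display name of your choosing (hypothetical here).
            "safe-local-python-executor": {
                "command": "uv",
                # Assumed invocation: run the script from the project directory.
                "args": ["--directory", str(project_dir), "run", "mcp_server.py"],
            }
        }
    }

print(json.dumps(claude_desktop_entry(PROJECT_DIR), indent=2))
```

Merge the printed object into claude_desktop_config.json; if the file already has an mcpServers section, add only the inner entry.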

Restricted Python Environment

The mcp_safe_local_python_executor enforces a restricted Python environment to minimize security risks. The server itself runs in a Python virtual environment (venv) managed by uv, which isolates its dependencies from the system's global Python installation; the venv provides dependency isolation, not a security boundary. The security restriction comes from the import list: LLM-generated code may import only a predefined set of safe modules, namely collections, datetime, itertools, math, queue, random, re, stat, statistics, time, and unicodedata. This prevents the code from loading potentially dangerous modules or libraries.

For instance, if an LLM-generated snippet tries to import the os module to perform file system operations, the executor blocks the import, so the code cannot reach the file system that way. The generated code is therefore confined to the operations the restricted environment allows, which reduces the risk of malicious activity. Managing the venv with uv makes the environment fast to create and easy to reproduce, which improves the reliability of the setup.
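The import restriction can be approximated with a static check over Python's ast module. This is a hypothetical sketch (SAFE_MODULES and check_imports are illustrative names), not how smolagents works internally: LocalPythonExecutor checks each import while it interprets the code, and its exact error message may differ.

```python
import ast

# The module list quoted in the text above.
SAFE_MODULES = {
    "collections", "datetime", "itertools", "math", "queue", "random",
    "re", "stat", "statistics", "time", "unicodedata",
}

def check_imports(code: str, allowed: set[str] = SAFE_MODULES) -> None:
    """Raise ImportError if `code` imports anything outside `allowed`."""
    for node in ast.walk(ast.parse(code)):
        if isinstance(node, ast.Import):
            modules = [alias.name for alias in node.names]
        elif isinstance(node, ast.ImportFrom):
            # Relative imports (from . import x) have module=None; reject them too.
            modules = [node.module or "."]
        else:
            continue
        for module in modules:
            # Compare the top-level package so "os.path" is judged as "os".
            if module.split(".")[0] not in allowed:
                raise ImportError(f"Import of {module} is not allowed")

check_imports("import math\nfrom collections import Counter")  # passes silently
try:
    check_imports("import os\nos.listdir('/')")
except ImportError as exc:
    print(exc)  # Import of os is not allowed
```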

Technical Implementation

The mcp_safe_local_python_executor uses the LocalPythonExecutor from the smolagents framework as its core execution engine. LocalPythonExecutor is an interpreter written in Python for running LLM-generated code: it parses the code into an abstract syntax tree and evaluates it node by node, which lets it refuse disallowed imports and restrict access to built-in functions and modules. The executor is wrapped in an MCP server that talks to MCP clients over standard input/output (stdio). The server receives Python code from the client, executes it with LocalPythonExecutor, and returns the result to the client.
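The request/response cycle described above can be sketched as a single handler function. This is a hand-rolled illustration that assumes newline-delimited JSON-RPC 2.0 messages, the MCP tool-result shape (content plus isError), and a tool argument named code; a production server would typically use an MCP SDK instead of parsing messages by hand. stub_executor is a hypothetical stand-in for LocalPythonExecutor and does not run any code.

```python
import json
from typing import Callable

def handle_tools_call(raw: str, run_code: Callable[[str], str]) -> str:
    """Answer one JSON-RPC request addressed to the run_python tool."""
    request = json.loads(raw)
    params = request.get("params", {})
    if request.get("method") != "tools/call":
        # -32601: JSON-RPC 2.0 "Method not found"
        response = {"jsonrpc": "2.0", "id": request.get("id"),
                    "error": {"code": -32601, "message": "Method not found"}}
    elif params.get("name") != "run_python":
        # -32602: "Invalid params", used by MCP for unknown tool names
        response = {"jsonrpc": "2.0", "id": request.get("id"),
                    "error": {"code": -32602, "message": f"Unknown tool: {params.get('name')}"}}
    else:
        code = params.get("arguments", {}).get("code", "")
        try:
            text, is_error = run_code(code), False
        except Exception as exc:  # execution errors go back to the model as a tool result
            text, is_error = f"{type(exc).__name__}: {exc}", True
        response = {"jsonrpc": "2.0", "id": request["id"],
                    "result": {"content": [{"type": "text", "text": text}],
                               "isError": is_error}}
    return json.dumps(response) + "\n"

def stub_executor(code: str) -> str:
    # Hypothetical stand-in for LocalPythonExecutor; it only inspects the text.
    if "import os" in code:
        raise PermissionError("Import of os is not allowed")
    return f"would run {len(code.splitlines())} line(s)"

request = json.dumps({"jsonrpc": "2.0", "id": 1, "method": "tools/call",
                      "params": {"name": "run_python", "arguments": {"code": "x = 1\nx + 1"}}})
print(handle_tools_call(request, stub_executor), end="")
```

Reporting execution failures inside result with isError set lets the model read the error and retry, while JSON-RPC error objects are reserved for protocol problems such as an unknown method or tool.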

Using stdio as the transport simplifies deployment and integration: the MCP client launches the server as a child process and exchanges messages over its standard input and output, so no ports, network configuration, or extra networking dependencies are needed. Managing the Python virtual environment with uv keeps the executor's dependencies isolated from the system's global Python installation, which prevents version conflicts and makes the setup reproducible. Together, these choices give MCP clients a simple, comparatively safe way to run LLM-generated Python code.
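The stdio pattern itself fits in a few lines: the client starts the server as a child process and writes one JSON message per line to its stdin. In this sketch the child is a hypothetical one-shot echo script standing in for mcp_server.py, and round_trip is an illustrative helper.

```python
import json
import subprocess
import sys

# Hypothetical stand-in for mcp_server.py: read one JSON line from stdin and
# answer with an empty result carrying the same request id.
CHILD = (
    "import json, sys\n"
    "msg = json.loads(sys.stdin.readline())\n"
    "print(json.dumps({'jsonrpc': '2.0', 'id': msg['id'], 'result': {}}), flush=True)\n"
)

def round_trip(message: dict) -> dict:
    """Launch the child, send one newline-delimited message, read one reply."""
    proc = subprocess.run(
        [sys.executable, "-c", CHILD],
        input=json.dumps(message) + "\n",
        capture_output=True,
        text=True,
        timeout=10,
        check=True,
    )
    return json.loads(proc.stdout)

print(round_trip({"jsonrpc": "2.0", "id": 42, "method": "ping"}))
```

subprocess.run closes stdin after a single message; a real MCP client keeps the process open with subprocess.Popen and exchanges many messages over the same pipes.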