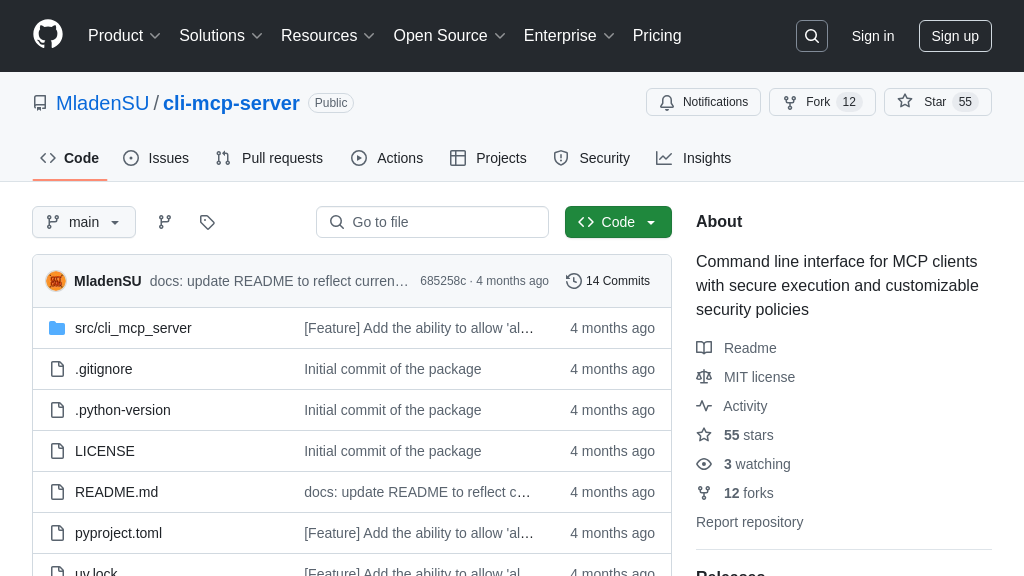

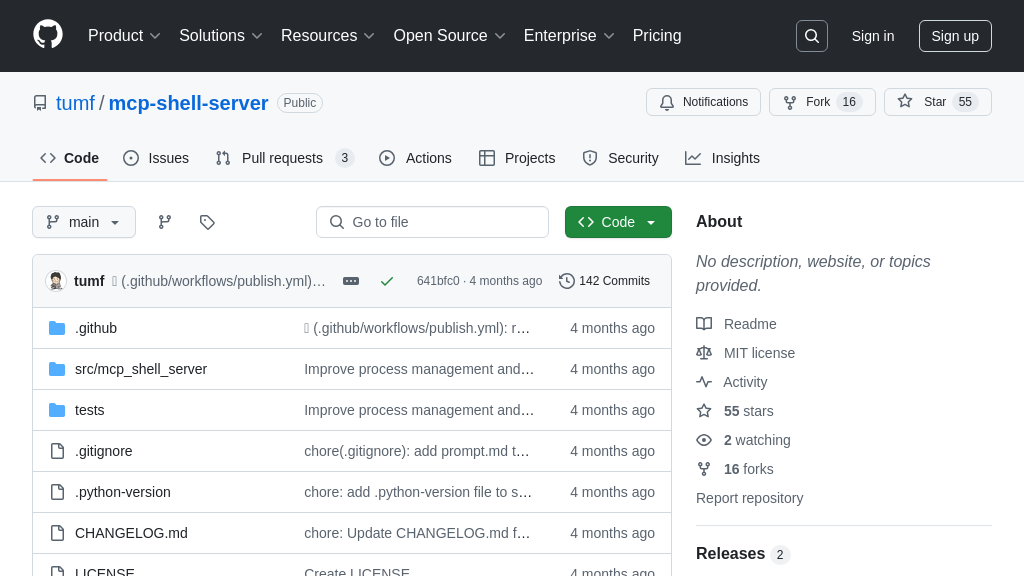

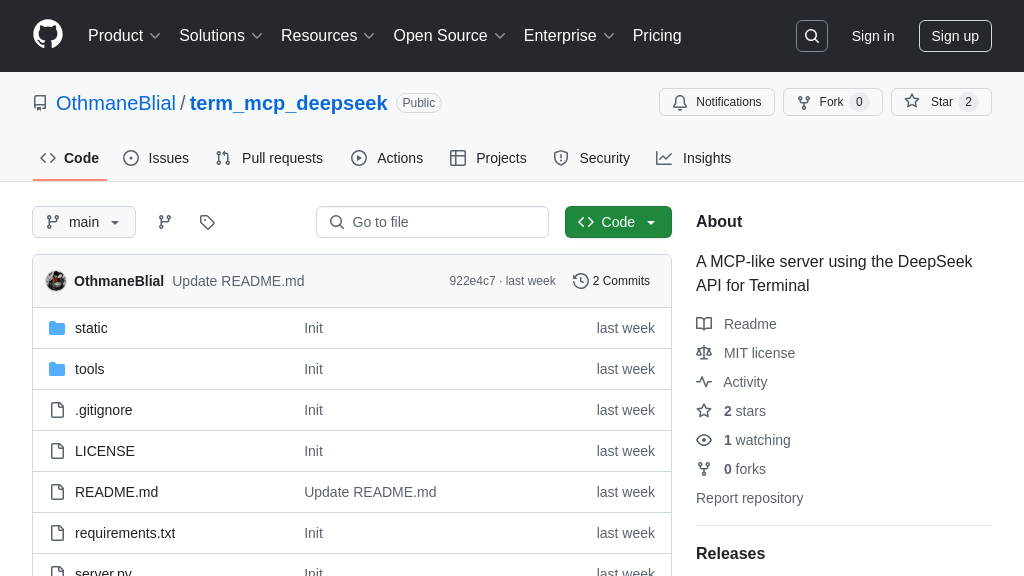

term_mcp_deepseek

term_mcp_deepseek: DeepSeek API-powered MCP server for terminal AI.

term_mcp_deepseek Solution Overview

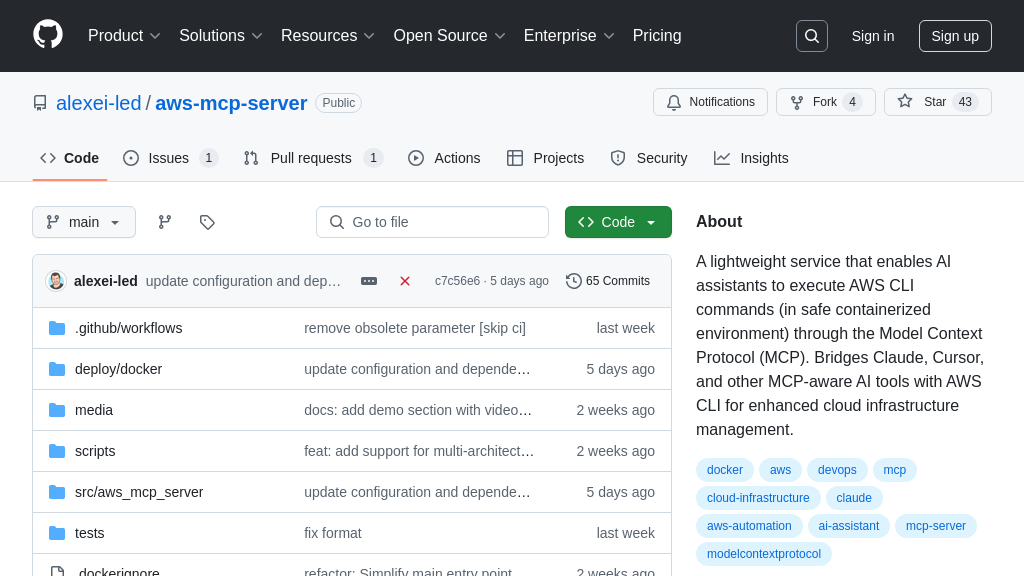

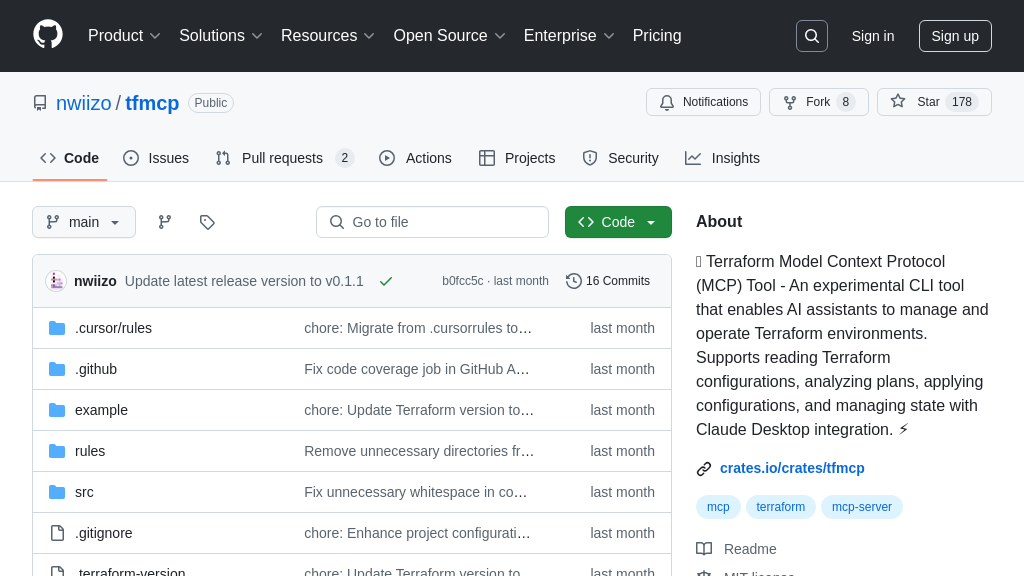

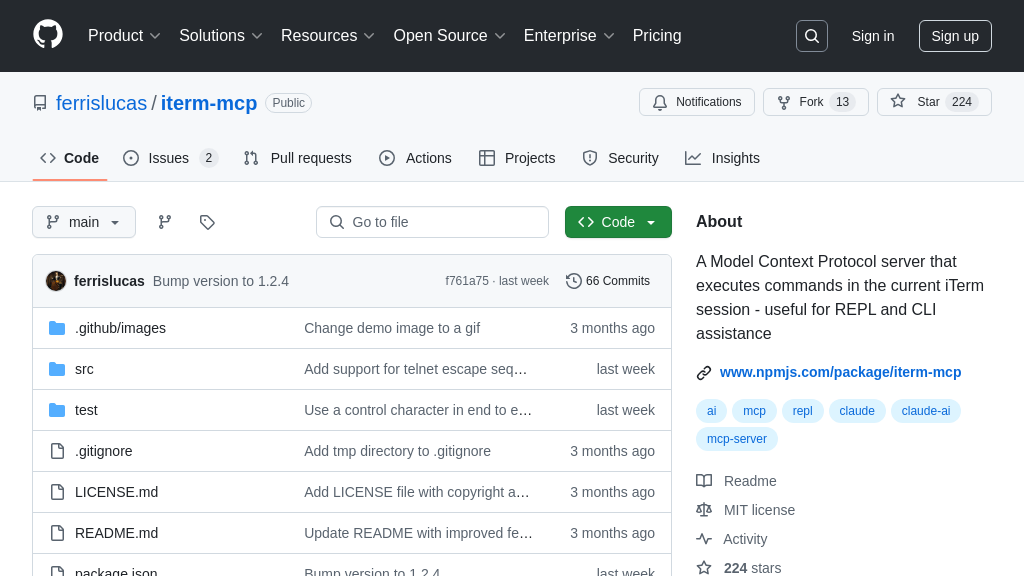

term_mcp_deepseek is a prototype server designed to explore Model Context Protocol (MCP) concepts using the DeepSeek API. Functioning as a bridge between AI models and a terminal environment, it allows AI assistants to list available tools and execute commands within a shell session.

This solution includes a web chat interface for interacting with the server. User messages are sent to the DeepSeek API, and any line in the model's reply that starts with "CMD:" is treated as a shell command. The server executes these commands in a persistent Bash session and returns the output. It also exposes /mcp/list_tools and /mcp/call_tool endpoints that mimic MCP tool discovery and invocation.

While not fully MCP compliant, term_mcp_deepseek demonstrates how an AI model can drive a terminal, for example to automate routine shell tasks in development workflows. It is built with Python and Flask and uses the pexpect library for terminal interaction.

term_mcp_deepseek Key Capabilities

Terminal Command Execution

This feature allows the DeepSeek AI model to execute shell commands within a persistent Bash session on the server. The server uses the pexpect library to manage the shell session, enabling the AI to interact with the operating system directly. When the AI generates a response containing a line starting with CMD:, the server extracts the command and executes it in the shell. The output from the command is then captured and sent back to the AI, which can use it to inform its subsequent responses. This creates a feedback loop where the AI can use the terminal to gather information, run tools, and modify the system state.
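The CMD: convention can be illustrated with a small parser. This is a minimal sketch, not the project's code: the function name extract_commands, and the choices to tolerate leading whitespace and to skip a bare "CMD:" with nothing after it, are assumptions.

```python
from typing import List

CMD_PREFIX = "CMD:"


def extract_commands(reply: str) -> List[str]:
    """Return the shell commands the model marked with a leading "CMD:".

    Assumption: leading whitespace before the prefix is allowed, and an
    empty "CMD:" line is ignored rather than executed.
    """
    commands = []
    for line in reply.splitlines():
        stripped = line.strip()
        if stripped.startswith(CMD_PREFIX):
            command = stripped[len(CMD_PREFIX):].strip()
            if command:
                commands.append(command)
    return commands
```

For example, extract_commands("Checking.\nCMD: systemctl status nginx") returns ["systemctl status nginx"]; ordinary prose lines are passed over.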

For example, an AI assistant could check the status of a service by emitting CMD: systemctl status nginx. The command's output is returned to the AI, which can then tell the user whether the service is running. This connects the AI's reasoning to the actual system it runs on, so it can carry out tasks that require direct interaction with the operating system. Under the hood, pexpect deals with the details of driving a shell process: waiting for prompts, sending input, and capturing output.
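To show why a persistent session matters (a cd or export in one command is still in effect for the next), here is a sketch that keeps a single bash process alive. Note that it swaps pexpect for the standard library's subprocess module so it runs without extra dependencies, and it detects the end of each command's output with a random sentinel line instead of matching the shell prompt. The class name PersistentShell is hypothetical.

```python
import subprocess
import uuid


class PersistentShell:
    """One long-lived bash process; cwd and variables persist between run() calls.

    Stand-in for a pexpect session: stdlib only, and a random sentinel line
    (not the shell prompt) marks where each command's output ends.
    """

    def __init__(self) -> None:
        self.proc = subprocess.Popen(
            ["bash"],
            stdin=subprocess.PIPE,
            stdout=subprocess.PIPE,
            stderr=subprocess.STDOUT,  # merge stderr so error messages are captured too
            text=True,
            bufsize=1,  # line-buffered text I/O
        )

    def run(self, command: str) -> str:
        sentinel = f"__DONE_{uuid.uuid4().hex}__"
        # Send the command, then echo the sentinel to mark the end of its output.
        self.proc.stdin.write(f"{command}\necho {sentinel}\n")
        self.proc.stdin.flush()
        output = []
        for line in self.proc.stdout:
            if sentinel in line:
                # Keep anything printed before the sentinel on the same line
                # (output that did not end with a newline).
                output.append(line.split(sentinel, 1)[0])
                break
            output.append(line)
        return "".join(output)

    def close(self) -> None:
        self.proc.stdin.close()  # EOF makes bash exit
        self.proc.wait()
        self.proc.stdout.close()
```

After run("cd /tmp"), a later run("pwd") returns "/tmp\n", which is the persistence the CMD: feedback loop relies on. A command that reads standard input would swallow the sentinel line and block this loop; pexpect's pattern matching with timeouts is better suited to such cases.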

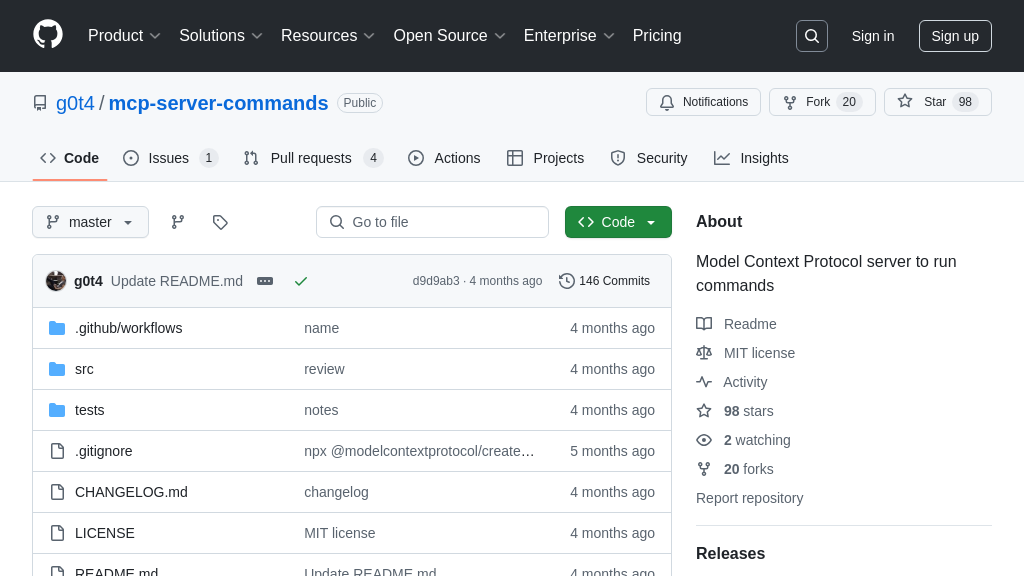

MCP-like Tool Discovery

The /mcp/list_tools endpoint mimics MCP's tool discovery mechanism: an AI model can query it to find out which tools the server offers. The server returns a JSON list of available tools, such as write_to_terminal, read_terminal_output, and send_control_character. Because the list is fetched at run time, the AI can adapt to whatever tools are present and use this information to construct appropriate call_tool requests, specifying the tool to invoke and the arguments to pass.

For example, an AI could first call /mcp/list_tools to confirm that the write_to_terminal tool is available, and then call /mcp/call_tool with the appropriate arguments to write text to the terminal. Discovery lets the AI work with the server's tools without having them hard-coded in advance. The implementation defines a set of available tools and their corresponding functions, and exposes them through the /mcp/list_tools endpoint.
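A tool list in this style might look like the following sketch. The three tool names come from the text; the descriptions, the argument names (command, lines, letter), and the inputSchema field (borrowed loosely from MCP's own tool listings) are assumptions. list_tools only builds the JSON body a Flask route could return; it is not the server's actual handler.

```python
import json
from typing import Set

# Hypothetical descriptors: names from the text, schemas assumed for illustration.
TOOLS = [
    {
        "name": "write_to_terminal",
        "description": "Write a command to the persistent shell",
        "inputSchema": {
            "type": "object",
            "properties": {"command": {"type": "string"}},
            "required": ["command"],
        },
    },
    {
        "name": "read_terminal_output",
        "description": "Read recent output from the shell",
        "inputSchema": {
            "type": "object",
            "properties": {"lines": {"type": "integer"}},
        },
    },
    {
        "name": "send_control_character",
        "description": "Send a control character such as Ctrl-C",
        "inputSchema": {
            "type": "object",
            "properties": {"letter": {"type": "string"}},
            "required": ["letter"],
        },
    },
]


def list_tools() -> str:
    """Serialized body a /mcp/list_tools handler could send back."""
    return json.dumps({"tools": TOOLS})


def available_tool_names(body: str) -> Set[str]:
    """Client side of discovery: collect the tool names from the response."""
    return {tool["name"] for tool in json.loads(body)["tools"]}
```

A client would call available_tool_names on the response and only issue call_tool requests for names it finds there.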

MCP-like Tool Invocation

The /mcp/call_tool endpoint allows AI models to invoke specific tools on the server. This endpoint receives a JSON payload containing the name of the tool to be invoked and the arguments to be passed to the tool. The server then executes the corresponding function with the provided arguments and returns the result to the AI. This mechanism enables the AI to perform specific actions on the server, such as writing to the terminal, reading terminal output, or sending control characters.

For example, to create a new directory, the AI could call /mcp/call_tool with the tool name write_to_terminal and an argument containing the command mkdir new_directory. The server would execute this command in the terminal and return the output to the AI. Because each call names one tool with explicit arguments, the AI's actions on the system stay precise and controlled. The implementation maps tool names to specific functions and validates the arguments before passing them on.
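Dispatching a call_tool request can be sketched as a lookup from tool name to function, with argument checks before the call. Everything here is hypothetical: the handlers only record what they would send instead of writing to a real shell, and the payload keys (name, arguments) and result fields (isError, content) are loosely modeled on MCP rather than taken from term_mcp_deepseek.

```python
from typing import Any, Callable, Dict, List

# Hypothetical side-effect log: handlers append what they would send to the shell.
sent_to_shell: List[str] = []


def write_to_terminal(command: str) -> str:
    sent_to_shell.append(command)  # a real handler would write to the Bash session
    return f"wrote: {command}"


def send_control_character(letter: str) -> str:
    if len(letter) != 1 or not (letter.isascii() and letter.isalpha()):
        raise ValueError("letter must be a single ASCII letter")
    # Ctrl-<letter> is the letter's ASCII code masked to 5 bits: Ctrl-C -> 0x03.
    sent_to_shell.append(chr(ord(letter.upper()) & 0x1F))
    return f"sent Ctrl-{letter.upper()}"


HANDLERS: Dict[str, Callable[..., str]] = {
    "write_to_terminal": write_to_terminal,
    "send_control_character": send_control_character,
}
# Assumed argument names, matching the handler signatures above.
REQUIRED_ARGS = {"write_to_terminal": {"command"}, "send_control_character": {"letter"}}


def call_tool(payload: Dict[str, Any]) -> Dict[str, Any]:
    """Validate a {"name": ..., "arguments": {...}} payload and run the matching handler."""
    name = payload.get("name")
    args = payload.get("arguments", {})
    if not isinstance(name, str) or name not in HANDLERS:
        return {"isError": True, "content": f"unknown tool: {name}"}
    expected = REQUIRED_ARGS[name]
    if not isinstance(args, dict) or set(args) != expected:
        return {"isError": True, "content": f"{name} expects arguments {sorted(expected)}"}
    if not all(isinstance(value, str) for value in args.values()):
        return {"isError": True, "content": "arguments must be strings"}
    try:
        return {"isError": False, "content": HANDLERS[name](**args)}
    except ValueError as exc:  # handler-level validation, e.g. a bad control letter
        return {"isError": True, "content": str(exc)}
```

Rejecting unknown tools and unexpected argument names before dispatch keeps a malformed request from ever reaching the shell.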

Chat Interface

The web-based chat interface, built with Flask and Tailwind CSS, provides a user-friendly way to interact with the server and the DeepSeek AI model. Users can type messages into the chat interface, and these messages are sent to the server. The server then sends the messages to the DeepSeek API, which generates a response. If the response contains commands (lines starting with CMD:), the server executes these commands in the terminal and includes the output in the final response sent back to the user.

For example, a user could ask the AI to list the files in the current directory. The AI might respond with a message containing the command CMD: ls -l. The server would execute this command and include the output of the ls -l command in the response displayed to the user. This feature provides a convenient way for users to interact with the AI and leverage its ability to execute terminal commands. The technical implementation involves using Flask to create the web server and handle the chat messages, and Tailwind CSS to style the user interface.
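The request flow described above can be condensed into one function. This sketch assumes the model call and the shell are injected as plain callables (ask_model, run_shell) so it runs without network access; in the server, ask_model would wrap the DeepSeek API request and run_shell the Bash session. The handle_chat name, the message format, and feeding command output back as a user message are all assumptions.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


def handle_chat(
    user_message: str,
    history: List[Message],
    ask_model: Callable[[List[Message]], str],
    run_shell: Callable[[str], str],
) -> str:
    """One chat turn: ask the model, run any CMD: lines, return reply plus output."""
    history.append({"role": "user", "content": user_message})
    reply = ask_model(history)
    history.append({"role": "assistant", "content": reply})

    results = []
    for line in reply.splitlines():
        stripped = line.strip()
        if stripped.startswith("CMD:"):
            command = stripped[len("CMD:"):].strip()
            if command:
                results.append(f"$ {command}\n{run_shell(command)}")
    if not results:
        return reply  # plain answer, nothing to execute

    report = "\n".join(results)
    # Assumption: output goes back into the history so the model sees it next turn.
    history.append({"role": "user", "content": f"Command output:\n{report}"})
    return f"{reply}\n\n{report}"
```

Injecting the two callables keeps the orchestration testable with stubs, as in the ls -l example: a fake model that replies "CMD: ls -l" and a fake shell that returns a directory listing are enough to exercise the whole turn.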