kubectl-mcp-server

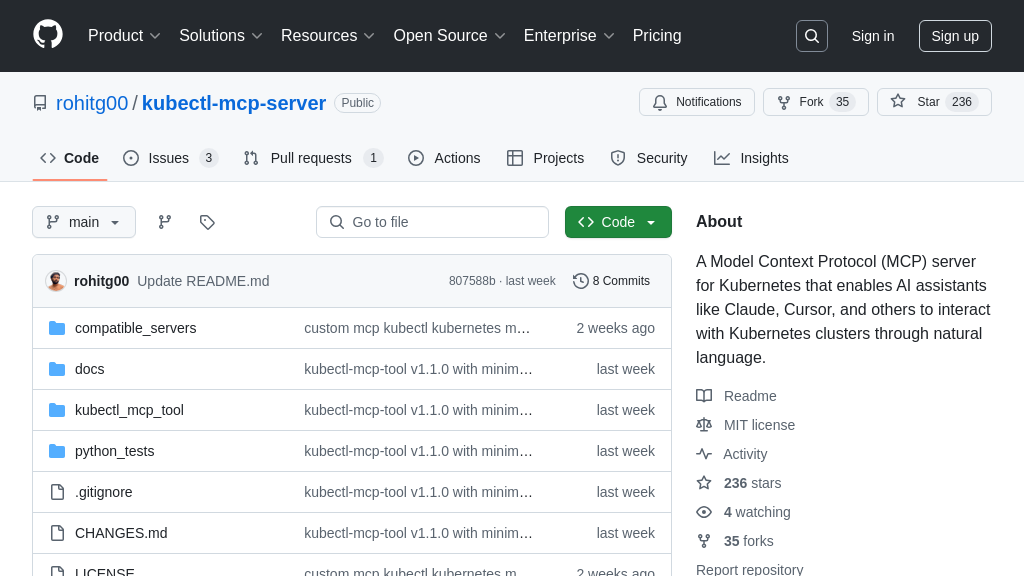

Kubectl MCP Tool: Connect AI models to Kubernetes clusters via natural language. An MCP server for seamless AI integration.

kubectl-mcp-server Solution Overview

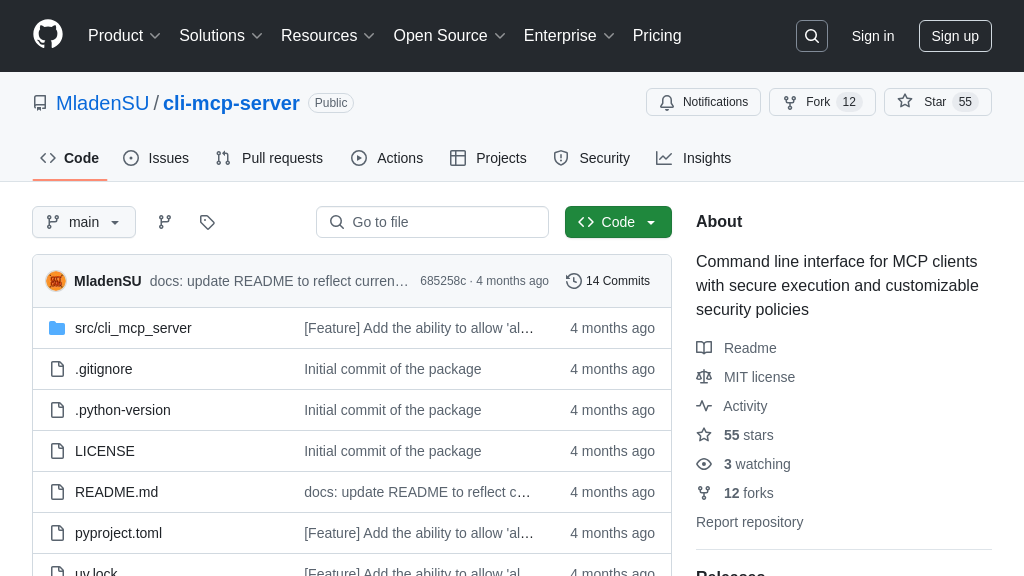

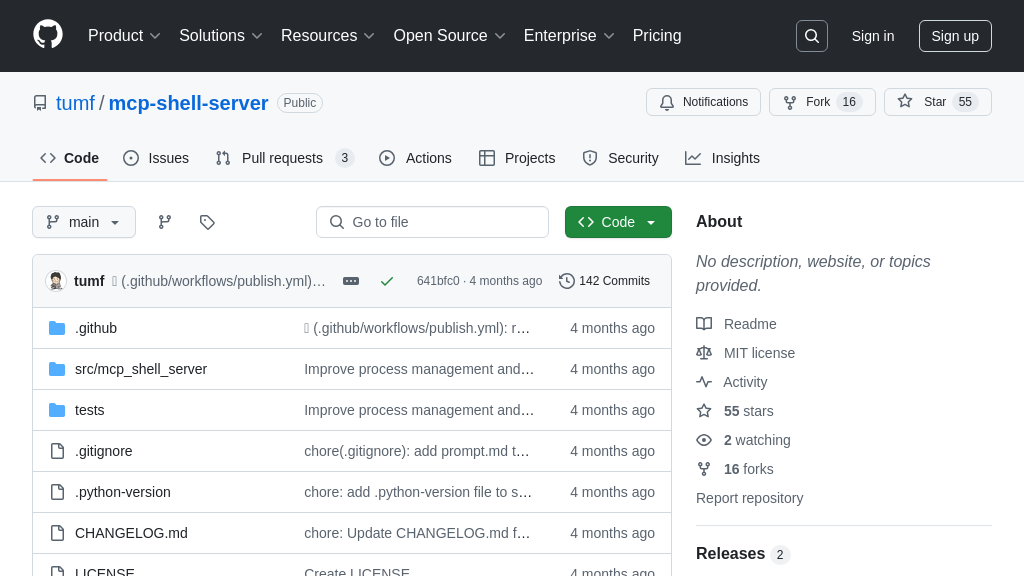

kubectl-mcp-server is an MCP (Model Context Protocol) server that connects AI assistants to Kubernetes clusters so that you can work with your infrastructure in natural language. It lets AI models perform core Kubernetes operations, such as managing pods, services, and deployments, retrieving logs, and running Helm commands. Because it translates natural language queries into kubectl commands, users do not have to compose those commands by hand.

The tool supports multiple transport protocols, including stdio and SSE, so it can work with a range of AI clients. Its main benefits are faster troubleshooting through cluster diagnostics, RBAC validation as a security check, and monitoring through resource utilization tracking. kubectl-mcp-server integrates with AI assistants such as Claude and Cursor, so developers can use them to manage Kubernetes. It is implemented in Python and installed via pip.

kubectl-mcp-server Key Capabilities

Natural Language to Kubernetes

kubectl-mcp-server translates natural language queries into kubectl commands, so users can interact with Kubernetes clusters without writing kubectl syntax. It uses NLP to work out the user's intent, constructs the matching command, and executes it against the cluster. This makes Kubernetes management accessible to users with varying levels of expertise. The server maintains context across commands, which allows follow-up questions and iterative refinement of an operation.

For example, a user can ask "List all pods in the default namespace," and the server will translate this into the equivalent kubectl get pods command. This simplifies common tasks and reduces the learning curve for new Kubernetes users. The server also supports more complex queries, such as "Create a deployment named nginx-test with 3 replicas using the nginx:latest image," which translates into a kubectl create deployment command with the specified parameters. This feature relies on the natural_language.py module for processing and interpreting user input.
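The two example queries above can be illustrated with a small pattern-based translator. This is only a sketch under stated assumptions: the pattern table and the `translate` function are hypothetical names introduced for illustration, and the actual natural_language.py module may work quite differently. The kubectl flags used (`-n`, `--image`, `--replicas`) are standard kubectl options.

```python
import re
import shlex

# Hypothetical pattern table for illustration only; it is not taken from
# natural_language.py. Each entry pairs a regex with a function that builds
# the kubectl argument list from the regex match.
_PATTERNS = [
    (
        re.compile(r"list all pods in the (?P<ns>[\w-]+) namespace", re.I),
        lambda m: ["kubectl", "get", "pods", "-n", m["ns"]],
    ),
    (
        re.compile(
            r"create a deployment named (?P<name>[\w-]+) "
            r"with (?P<n>\d+) replicas using the (?P<image>\S+) image",
            re.I,
        ),
        lambda m: [
            "kubectl", "create", "deployment", m["name"],
            f"--image={m['image']}", f"--replicas={m['n']}",
        ],
    ),
]


def translate(query: str) -> list[str]:
    """Return a kubectl argument list for a recognized query."""
    for pattern, build in _PATTERNS:
        match = pattern.search(query)
        if match:
            return build(match)
    raise ValueError(f"unrecognized query: {query!r}")


if __name__ == "__main__":
    # Print the command line that each example query maps to.
    for q in (
        "List all pods in the default namespace",
        "Create a deployment named nginx-test with 3 replicas "
        "using the nginx:latest image",
    ):
        print(shlex.join(translate(q)))
```

Returning an argument list rather than a single string keeps user-supplied names out of shell interpolation if the command is later passed to a process runner.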

Kubernetes Resource Management

This feature provides comprehensive management capabilities for Kubernetes resources, including pods, services, deployments, and nodes. It allows users to list, create, delete, describe, and manage these resources through natural language or direct command execution. The server supports Helm v3 operations, enabling the installation, upgrade, and uninstallation of Helm charts. It also offers features like namespace selection, port forwarding, scaling deployments, executing commands in containers, and managing ConfigMaps and Secrets.

For instance, a developer can use the server to scale a deployment by asking "Scale the nginx-test deployment to 5 replicas," which translates into a kubectl scale deployment command. Similarly, they can retrieve logs from a pod by asking "Get logs from the nginx-test pod," which executes kubectl logs. This feature streamlines resource management tasks and provides a unified interface for interacting with various Kubernetes objects. The core logic for this feature resides in mcp_kubectl_tool.py, which translates MCP tool calls into Kubernetes API operations.
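The scale and logs examples above correspond to kubectl invocations along the following lines. This is a minimal sketch that assumes the operations are run through the kubectl CLI; the function names are hypothetical, and mcp_kubectl_tool.py may instead call the Kubernetes API directly, as the description above suggests.

```python
import subprocess
from typing import Optional


def scale_command(deployment: str, replicas: int,
                  namespace: str = "default") -> list[str]:
    """Build the argument list for `kubectl scale deployment`."""
    if replicas < 0:
        raise ValueError("replicas must be non-negative")
    return ["kubectl", "scale", "deployment", deployment,
            f"--replicas={replicas}", "-n", namespace]


def logs_command(pod: str, namespace: str = "default",
                 tail: Optional[int] = None) -> list[str]:
    """Build the argument list for `kubectl logs`."""
    cmd = ["kubectl", "logs", pod, "-n", namespace]
    if tail is not None:
        # --tail limits output to the most recent N lines.
        cmd.append(f"--tail={tail}")
    return cmd


def run(cmd: list[str]) -> str:
    """Run a kubectl command and return its stdout.

    Passing a list (not a shell string) avoids shell interpolation.
    Requires kubectl on PATH and a reachable cluster.
    """
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return result.stdout


if __name__ == "__main__":
    print(" ".join(scale_command("nginx-test", 5)))
    print(" ".join(logs_command("nginx-test", tail=100)))
```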

Cluster Monitoring and Diagnostics

kubectl-mcp-server provides monitoring and diagnostic capabilities that give insight into cluster health, resource utilization, and pod status. It tracks resource usage via kubectl top, monitors container readiness and liveness, and provides historical performance tracking. Its diagnostic tools help troubleshoot cluster issues: they analyze errors, identify resource constraints, check configurations for mistakes, and validate liveness and readiness probes in detail.

For example, a user can check the CPU usage of all pods in a namespace by asking "Show me the CPU usage of all pods," which uses the kubectl top pod command. If a pod is failing its liveness probe, the server can provide detailed information about the probe configuration and any associated error messages. This helps users identify and resolve issues early, before they affect the stability and performance of the cluster. The monitoring functionality is implemented in the monitoring/ directory, while diagnostics are handled in diagnostics.py.

Multiple Transport Protocols

The server supports multiple transport protocols, including standard input/output (stdio) and Server-Sent Events (SSE), which lets it integrate with a wide range of AI assistants and client applications. The transport can be chosen to suit the client and the network environment. With stdio, the client typically launches the server as a local process and exchanges messages over its standard input and output, which is the simpler setup. With SSE, the server is reached over HTTP and keeps a persistent connection open so it can stream updates to the client in real time.

This allows integration with AI assistants such as Claude, Cursor, and Windsurf, each of which may prefer a different transport. The mcp_server.py module handles the transport layer, so the same server can adapt to different client requirements and remain compatible across the MCP ecosystem.
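The difference between the two transports is mostly a matter of how each message is framed on the wire. The sketch below shows one JSON-RPC 2.0 message, the format MCP uses, framed for stdio (one JSON document per line) and for SSE (an `event:`/`data:` record ending in a blank line). The helper names and the `get_pods` tool name are hypothetical and are not taken from mcp_server.py.

```python
import json


def make_request(request_id: int, method: str, params: dict) -> dict:
    """Build a JSON-RPC 2.0 request object."""
    return {"jsonrpc": "2.0", "id": request_id,
            "method": method, "params": params}


def frame_stdio(message: dict) -> str:
    """Frame a message for stdio: compact JSON followed by a newline."""
    return json.dumps(message, separators=(",", ":")) + "\n"


def frame_sse(message: dict, event: str = "message") -> str:
    """Frame a message as a Server-Sent Events record.

    An SSE record is a set of 'field: value' lines ended by a blank line.
    """
    payload = json.dumps(message, separators=(",", ":"))
    return f"event: {event}\ndata: {payload}\n\n"


if __name__ == "__main__":
    # "get_pods" is a hypothetical tool name used only for illustration.
    request = make_request(
        1, "tools/call",
        {"name": "get_pods", "arguments": {"namespace": "default"}},
    )
    print(frame_stdio(request), end="")
    print(frame_sse(request), end="")
```

The message body is identical in both cases; only the envelope changes, which is why a single server can offer both transports.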