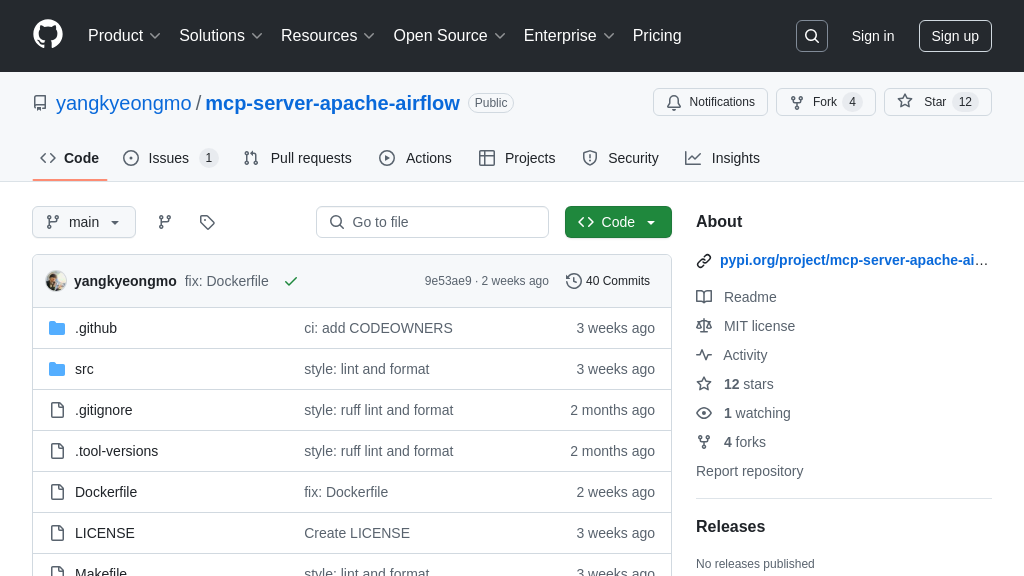

mcp-server-apache-airflow

Integrate AI models with Apache Airflow using mcp-server-apache-airflow, a Model Context Protocol (MCP) server.

mcp-server-apache-airflow Solution Overview

mcp-server-apache-airflow is an MCP server that connects Apache Airflow to MCP clients. It acts as a bridge, letting AI models use Airflow's workflow management features through a standardized MCP interface. The server wraps Airflow's REST API, so clients can manage DAGs, trigger DAG runs, manage variables, connections, and datasets, and monitor Airflow's health, all through MCP.
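The kind of REST call the server wraps can be illustrated with a minimal sketch. It assumes an Airflow 2.x webserver exposing the stable REST API under `/api/v1` with basic authentication enabled; the base URL and the `admin`/`admin` credentials are placeholders, and `build_airflow_request` is a hypothetical helper. The real server goes through the official Python client rather than raw HTTP, so treat this only as a picture of the underlying request.

```python
import base64
import json
import urllib.request

# Assumption: Airflow 2.x stable REST API with the basic_auth backend enabled.
# The base URL and default credentials below are placeholders.
AIRFLOW_BASE_URL = "http://localhost:8080/api/v1"


def build_airflow_request(method, path, body=None, username="admin", password="admin"):
    """Build (but do not send) a urllib Request for an Airflow REST endpoint."""
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    headers = {"Authorization": f"Basic {token}", "Accept": "application/json"}
    data = None
    if body is not None:
        # JSON bodies are used by the create/update endpoints.
        data = json.dumps(body).encode()
        headers["Content-Type"] = "application/json"
    return urllib.request.Request(
        AIRFLOW_BASE_URL + path, data=data, headers=headers, method=method
    )


# The health endpoint is one of the read-only calls behind health monitoring.
req = build_airflow_request("GET", "/health")
print(req.get_method(), req.full_url)
```

Calling `urllib.request.urlopen(req)` would send the request; building it separately keeps the example runnable without a live Airflow instance.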

Because it exposes Airflow through a consistent interface, mcp-server-apache-airflow removes the need to write custom code against Airflow's API. Developers can instead focus on building AI-driven workflows that use Airflow's scheduling and orchestration. The server organizes its functionality into API groups, so users can enable only the groups they need. It is built on the official Apache Airflow client library, which keeps it aligned with Airflow's API and easier to maintain. Together, these features let AI models trigger, monitor, and manage data pipelines in Airflow, giving them direct access to real-world data and workflows.
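One way to picture API-group selection is a registry that maps each group to its tools and exposes only the requested groups. This is a hypothetical sketch: the group names, tool names, and `select_tools` function are invented for illustration and are not the server's actual identifiers or command-line options.

```python
# Hypothetical registry: group and tool names are illustrative only.
TOOL_GROUPS = {
    "dag": ["list_dags", "get_dag", "pause_dag", "unpause_dag"],
    "dagrun": ["trigger_dag_run", "get_dag_run", "clear_dag_run"],
    "variable": ["list_variables", "get_variable", "set_variable"],
    "monitoring": ["get_health"],
}


def select_tools(requested_groups=None):
    """Return the tool names to expose; every group is enabled when none is requested."""
    if not requested_groups:
        requested_groups = list(TOOL_GROUPS)
    unknown = set(requested_groups) - set(TOOL_GROUPS)
    if unknown:
        raise ValueError(f"unknown API groups: {sorted(unknown)}")
    tools = []
    for group in requested_groups:
        tools.extend(TOOL_GROUPS[group])
    return tools


print(select_tools(["dag", "monitoring"]))
```

Exposing fewer groups also narrows what a connected model is allowed to do, for example limiting it to health monitoring.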

mcp-server-apache-airflow Key Capabilities

DAG Management via MCP

mcp-server-apache-airflow exposes DAG (Directed Acyclic Graph) management through the Model Context Protocol. AI models can list available DAGs, retrieve details about a specific DAG, pause or unpause DAGs, update DAG settings, delete DAGs, and read a DAG's source code. This lets a model adjust and control Airflow pipelines in response to real-time insights or changing requirements.
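The DAG operations described here map onto Airflow's DAG endpoints. The sketch assumes the Airflow 2.x stable REST API (`/api/v1`) and only builds each request as a (method, path, body) triple; the helper names and the `daily_sales_etl` DAG ID are illustrative, not the server's tool names.

```python
from urllib.parse import quote

# Assumption: Airflow 2.x stable REST API (/api/v1).
# Each helper returns (HTTP method, path, JSON body); nothing is sent here.


def _dag_path(dag_id):
    return f"/dags/{quote(dag_id, safe='')}"


def list_dags(limit=100, offset=0):
    return ("GET", f"/dags?limit={limit}&offset={offset}", None)


def get_dag(dag_id):
    return ("GET", _dag_path(dag_id), None)


def set_dag_paused(dag_id, paused):
    # update_mask restricts the PATCH to the is_paused field.
    return ("PATCH", _dag_path(dag_id) + "?update_mask=is_paused", {"is_paused": paused})


def delete_dag(dag_id):
    return ("DELETE", _dag_path(dag_id), None)


def get_dag_source(file_token):
    # file_token is read from the DAG details returned by get_dag.
    return ("GET", f"/dagSources/{file_token}", None)


print(set_dag_paused("daily_sales_etl", True))
```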

For example, an AI model monitoring data quality could pause a DAG when it detects a significant drop in quality, keeping downstream processes from consuming corrupted data, and unpause it once the issue is resolved. The server performs these actions through Airflow's REST API, so AI-driven decisions can be applied to pipelines without custom integration code.
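The data-quality example reduces to a small decision rule. In this sketch the quality score, the 0.9 threshold, and the `quality_gate` function are all hypothetical; the function returns the pause or unpause request to send, assuming Airflow 2.x's `PATCH /api/v1/dags/{dag_id}` endpoint, or `None` when no change is needed.

```python
# Hypothetical quality gate: the score source and the 0.9 threshold are
# illustrative assumptions, not part of mcp-server-apache-airflow.
QUALITY_THRESHOLD = 0.9


def quality_gate(dag_id, quality_score, currently_paused, threshold=QUALITY_THRESHOLD):
    """Return the (method, path, body) request to pause/unpause, or None if unchanged."""
    should_pause = quality_score < threshold
    if should_pause == currently_paused:
        return None  # the DAG is already in the desired state
    return ("PATCH", f"/dags/{dag_id}?update_mask=is_paused", {"is_paused": should_pause})


print(quality_gate("daily_sales_etl", 0.72, currently_paused=False))  # pause
print(quality_gate("daily_sales_etl", 0.97, currently_paused=True))   # unpause
print(quality_gate("daily_sales_etl", 0.97, currently_paused=False))  # no change
```

Checking the current paused state first avoids redundant API calls. A production rule would likely add hysteresis (separate pause and resume thresholds) so a score hovering near the threshold does not toggle the DAG repeatedly.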

Dynamic DAG Run Control

This feature lets MCP clients manage DAG runs in Apache Airflow. AI models can trigger new DAG runs, retrieve details about existing runs, update a run's state, and delete runs. This control matters for AI-driven workflows that need on-demand processing or adjustments to existing pipelines. Clearing a DAG run (resetting its task instances so they run again) and attaching notes to a run provide further control and context when managing complex workflows.

Consider an AI model that predicts customer churn. Based on its predictions, it can trigger a DAG run that executes a targeted marketing campaign, monitor the run's progress, and update the run's state based on the campaign's performance. This creates a closed loop in which AI models directly influence and manage data processing workflows. Under the hood, the server calls the Airflow API through the official Airflow client library.
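The trigger-then-monitor loop in the churn example can be sketched as a polling function. Here `fetch_state` is a hypothetical callable standing in for a `GET /api/v1/dags/{dag_id}/dagRuns/{dag_run_id}` request (Airflow 2.x stable REST API), the poll interval and timeout are arbitrary defaults, and `success` and `failed` are treated as the terminal DAG run states.

```python
import time

# Terminal DAG run states; "queued" and "running" mean the run is still in progress.
TERMINAL_STATES = {"success", "failed"}


def wait_for_dag_run(fetch_state, poll_seconds=30.0, timeout_seconds=3600.0, sleep=time.sleep):
    """Poll fetch_state() until a terminal state is seen; raise TimeoutError otherwise."""
    waited = 0.0
    while True:
        state = fetch_state()
        if state in TERMINAL_STATES:
            return state
        if waited >= timeout_seconds:
            raise TimeoutError(f"DAG run still '{state}' after {waited:.0f}s")
        sleep(poll_seconds)
        waited += poll_seconds


# Usage with a stubbed sequence of states instead of real API calls.
states = iter(["queued", "running", "running", "success"])
print(wait_for_dag_run(lambda: next(states), sleep=lambda s: None))
```

Passing `sleep` in as a parameter lets the usage example run instantly with a stubbed sequence of states; a real caller would keep the default `time.sleep`.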

Variable Management for AI Models

mcp-server-apache-airflow lets AI models manage Airflow variables through MCP: listing, creating, retrieving, updating, and deleting them. Airflow variables are key-value pairs that hold configuration parameters, API keys, or any other data that tasks within a DAG need to read. Managing them through MCP lets AI models configure data pipelines dynamically based on real-time conditions or learned insights.
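Variable management maps onto Airflow's `variables` endpoints. The sketch assumes the Airflow 2.x stable REST API (`/api/v1`) and returns each request as a (method, path, body) triple; the helper names and the `retry_config` key are illustrative. Because Airflow stores variable values as strings, non-string values are JSON-encoded before sending.

```python
import json
from urllib.parse import quote

# Assumption: Airflow 2.x stable REST API (/api/v1) variables endpoints.
# Each helper returns (HTTP method, path, JSON body); nothing is sent here.


def _as_string(value):
    # Variable values are strings; structured values are JSON-encoded.
    return value if isinstance(value, str) else json.dumps(value)


def _var_path(key):
    return f"/variables/{quote(key, safe='')}"


def list_variables(limit=100, offset=0):
    return ("GET", f"/variables?limit={limit}&offset={offset}", None)


def create_variable(key, value):
    return ("POST", "/variables", {"key": key, "value": _as_string(value)})


def get_variable(key):
    return ("GET", _var_path(key), None)


def update_variable(key, value):
    return ("PATCH", _var_path(key), {"key": key, "value": _as_string(value)})


def delete_variable(key):
    return ("DELETE", _var_path(key), None)


print(create_variable("retry_config", {"max_retries": 3}))
```

A task could read such a value back with `Variable.get("retry_config", deserialize_json=True)`.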

For instance, an AI model could update an Airflow variable that holds the threshold for anomaly detection. After analyzing historical data, it can adjust the threshold to improve anomaly detection on incoming data streams, keeping the pipeline responsive to changing data patterns. The server translates each MCP request into the corresponding Airflow API call, giving AI models a standardized interface to Airflow's variable management.
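The threshold update can be made concrete with one possible policy: the mean plus three sample standard deviations of recent values. Both the policy and the `anomaly_threshold` variable key are assumptions for illustration; the output is a `PATCH /api/v1/variables/{key}` request (Airflow 2.x stable REST API) of the kind the server issues on the model's behalf.

```python
import json
import statistics

# Hypothetical policy and variable key, chosen for illustration only.
VARIABLE_KEY = "anomaly_threshold"


def compute_threshold(history, k=3.0):
    """Mean plus k sample standard deviations of the historical values."""
    if len(history) < 2:
        raise ValueError("need at least two historical values")
    return statistics.mean(history) + k * statistics.stdev(history)


def threshold_update_request(history, k=3.0):
    value = round(compute_threshold(history, k), 3)
    # Airflow variable values are strings, so the number is serialized.
    body = {"key": VARIABLE_KEY, "value": json.dumps(value)}
    return ("PATCH", f"/variables/{VARIABLE_KEY}", body)


print(threshold_update_request([10, 12, 11, 13, 9]))
```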