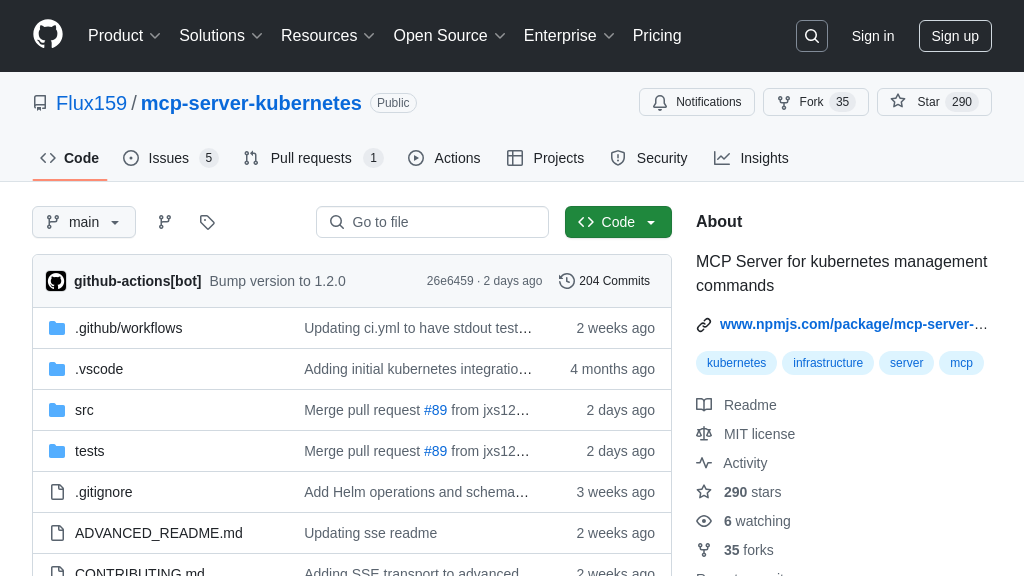

mcp-server-kubernetes

Manage Kubernetes with AI using mcp-server-kubernetes, an MCP Server for seamless AI model integration.

mcp-server-kubernetes Solution Overview

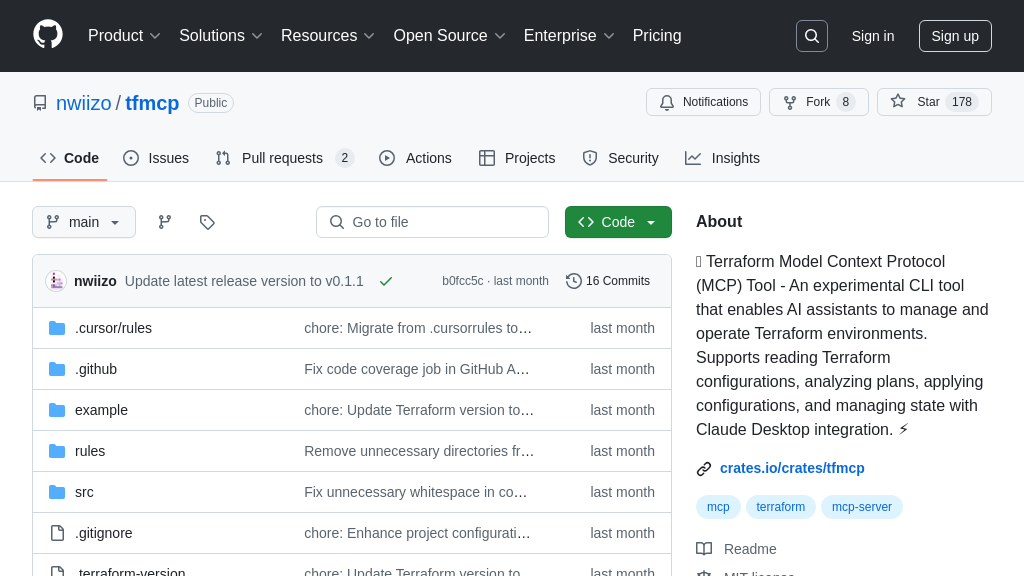

mcp-server-kubernetes is an MCP (Model Context Protocol) server that lets AI models interact with and manage Kubernetes clusters. Acting as a bridge between the model and the cluster, it lets AI models manage Kubernetes resources dynamically: list pods, services, deployments, and namespaces, create resources from custom configurations, and retrieve pod logs. It also supports Helm v3, so models can install, upgrade, and uninstall Helm charts.

Because it connects through your existing kubectl context, mcp-server-kubernetes needs no separate credential management: it uses the cluster and credentials that your current kubeconfig context already points to. Developers can connect the server to MCP clients such as Claude Desktop or mcp-chat to build applications that automate infrastructure management tasks. Its core value is AI-driven orchestration: models can scale applications, troubleshoot issues, and optimize resource utilization within Kubernetes environments. The server is written in TypeScript and integrates directly with the Kubernetes API.
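Since the server relies on your existing kubectl setup, it helps to know where kubectl looks for that setup. The sketch below encodes kubectl's documented kubeconfig lookup order: an explicit `--kubeconfig` path wins, then the `KUBECONFIG` environment variable (a list of files joined by the OS path delimiter), then the default `~/.kube/config`. The function `resolveKubeconfigPaths` is a hypothetical illustration, not code taken from mcp-server-kubernetes.

```typescript
import * as path from "node:path";
import * as os from "node:os";

// Hypothetical helper mirroring kubectl's kubeconfig lookup order.
// It only computes paths; it does not read or merge the files.
function resolveKubeconfigPaths(
  env: Record<string, string | undefined>,
  homeDir: string = os.homedir(),
  explicitPath?: string,
): string[] {
  // 1. An explicit --kubeconfig path means only that file is loaded.
  if (explicitPath) return [explicitPath];

  // 2. KUBECONFIG holds a list of files to merge, separated by ':' on
  //    Linux/macOS and ';' on Windows (path.delimiter). Empty entries are skipped.
  const fromEnv = env["KUBECONFIG"];
  if (fromEnv) {
    return fromEnv.split(path.delimiter).filter((p) => p.length > 0);
  }

  // 3. Otherwise fall back to the default file in the user's home directory.
  return [path.join(homeDir, ".kube", "config")];
}
```

For example, `resolveKubeconfigPaths(process.env)` returns the files whose merged contents, including `current-context`, would decide which cluster and credentials a kubectl invocation uses.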

mcp-server-kubernetes Key Capabilities

Kubernetes Resource Management

mcp-server-kubernetes lets AI models manage Kubernetes resources directly, closing the gap between AI-driven decisions and infrastructure orchestration. Models can list, create, describe, and delete Kubernetes primitives such as pods, services, deployments, and namespaces, which lets them adjust infrastructure in response to the data they analyze. For example, a model monitoring application performance could scale up deployment replicas during peak traffic, or create a new namespace for an isolated test environment. This direct control streamlines DevOps workflows and enables automated infrastructure management. The server uses the kubectl command-line tool, so it stays compatible with your existing Kubernetes configuration and security model: it can do only what the active context is permitted to do.
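The scaling scenario above can be sketched in two steps: decide a replica count, then turn it into a `kubectl scale` invocation. The decision rule below borrows the proportional formula used by the Kubernetes HorizontalPodAutoscaler, `desired = ceil(current * currentMetric / targetMetric)`, clamped to a min/max range (it omits the HPA's tolerance band). Both `desiredReplicas` and `kubectlScaleArgs` are hypothetical helpers for illustration, not the server's actual tool implementation.

```typescript
// Hypothetical decision rule: HPA-style proportional scaling, clamped to bounds.
function desiredReplicas(
  current: number,
  currentMetric: number, // e.g. observed requests per second per deployment
  targetMetric: number,  // the per-replica load the model is aiming for
  minReplicas = 1,
  maxReplicas = 10,
): number {
  if (current < 0 || targetMetric <= 0) {
    throw new RangeError("current must be >= 0 and targetMetric must be > 0");
  }
  const raw = Math.ceil(current * (currentMetric / targetMetric));
  return Math.min(maxReplicas, Math.max(minReplicas, raw));
}

// Builds the argument vector for `kubectl scale`. A caller would pass it to
// child_process.execFile("kubectl", args). No credentials appear here:
// kubectl takes them from the active kubeconfig context.
function kubectlScaleArgs(deployment: string, namespace: string, replicas: number): string[] {
  if (!Number.isInteger(replicas) || replicas < 0) {
    throw new RangeError("replicas must be a non-negative integer");
  }
  return ["scale", `deployment/${deployment}`, `--replicas=${replicas}`, "--namespace", namespace];
}
```

For instance, three replicas seeing three times their target load yields `desiredReplicas(3, 180, 60) === 9`, which becomes `kubectl scale deployment/web --replicas=9 --namespace prod`. Passing an argument array instead of a shell string avoids quoting and injection problems when a model supplies the names.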

Real-time Log Retrieval

This feature gives AI models access to real-time logs from individual pods, deployments, and jobs, or from all pods matching a label selector. That access is essential for monitoring application health, debugging issues, and understanding system behavior. For example, a model trained to detect anomalies in log data could continuously monitor application logs, identify likely problems, and trigger alerts or automated remediation. Because it supports several log sources, the feature offers a broad view of the cluster's operational status. Under the hood it uses the `kubectl logs` command, a familiar and reliable mechanism for log retrieval.
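The log sources listed above map naturally onto `kubectl logs` arguments: a bare pod name, a `TYPE/NAME` form such as `deployment/api` or `job/backup` (for which kubectl picks one pod of the workload), or `--selector` for every pod matching a label query. The sketch below builds those argument vectors and adds a deliberately naive error counter as a stand-in for the anomaly detection mentioned above. `LogSource`, `kubectlLogsArgs`, and `countErrorLines` are hypothetical names, not the server's API.

```typescript
// Hypothetical description of where to read logs from.
type LogSource =
  | { kind: "pod"; name: string }
  | { kind: "deployment"; name: string }
  | { kind: "job"; name: string }
  | { kind: "selector"; selector: string }; // e.g. "app=web,tier=frontend"

interface LogOptions {
  namespace: string;
  container?: string; // --container: needed when a pod has several containers
  tail?: number;      // --tail=N: last N lines (kubectl defaults to 10 per pod with a selector)
  since?: string;     // --since=10m: only logs newer than this relative duration
}

// Builds the argument vector for `kubectl logs`.
function kubectlLogsArgs(source: LogSource, opts: LogOptions): string[] {
  const args = ["logs"];
  switch (source.kind) {
    case "pod":
      args.push(source.name);
      break;
    case "deployment":
    case "job":
      // TYPE/NAME form: kubectl selects a pod belonging to the workload.
      args.push(`${source.kind}/${source.name}`);
      break;
    case "selector":
      args.push("--selector", source.selector);
      break;
  }
  args.push("--namespace", opts.namespace);
  if (opts.container) args.push("--container", opts.container);
  if (opts.tail !== undefined) args.push(`--tail=${opts.tail}`);
  if (opts.since) args.push(`--since=${opts.since}`);
  return args;
}

// Naive stand-in for anomaly detection: count lines matching an error pattern.
// The pattern must not carry the `g` flag, since RegExp.test would then keep state.
function countErrorLines(logText: string, pattern: RegExp = /\b(error|fatal|panic)\b/i): number {
  return logText.split("\n").filter((line) => pattern.test(line)).length;
}
```

A real detector would look at rates and patterns over time rather than a single keyword count; the point here is only how the retrieval side can be parameterized.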

Helm Chart Management

mcp-server-kubernetes integrates Helm v3, letting AI models deploy, upgrade, and manage applications packaged as Helm charts, including complex applications with custom configurations. For instance, an AI-driven deployment pipeline could deploy different application versions based on A/B testing results, or roll back to a previous version automatically when problems are detected. The server supports custom values, namespaces, chart version pinning, and custom repositories. Because it calls the Helm CLI, it works with existing Helm repositories and workflows.
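The Helm options listed above (custom values, namespace, chart version, custom repository) correspond to standard Helm CLI flags. The sketch below builds argument vectors for `helm upgrade --install`, which installs a release if it is absent and upgrades it otherwise, and for `helm rollback`, where omitting the revision rolls back to the previous one. `HelmReleaseSpec`, `helmUpgradeInstallArgs`, and `helmRollbackArgs` are hypothetical names; the server's own Helm tools may expose different parameters.

```typescript
// Hypothetical release description covering the options named above.
interface HelmReleaseSpec {
  release: string;
  chart: string;     // e.g. "bitnami/nginx", or a chart name combined with `repo`
  namespace: string;
  version?: string;  // chart version, passed as --version
  repo?: string;     // chart repository URL, passed as --repo
  values?: Record<string, string | number | boolean>; // passed as --set key=value
}

// One call covers both first install and later upgrades.
function helmUpgradeInstallArgs(spec: HelmReleaseSpec): string[] {
  const args = [
    "upgrade", "--install", spec.release, spec.chart,
    "--namespace", spec.namespace, "--create-namespace",
  ];
  if (spec.version) args.push("--version", spec.version);
  if (spec.repo) args.push("--repo", spec.repo);
  // Values containing commas would need escaping for --set; a more robust tool
  // could write a values file and pass it with --values instead.
  for (const [key, value] of Object.entries(spec.values ?? {})) {
    args.push("--set", `${key}=${value}`);
  }
  return args;
}

// Rolls back to `revision`, or to the previous revision when it is omitted.
function helmRollbackArgs(release: string, namespace: string, revision?: number): string[] {
  const args = ["rollback", release];
  if (revision !== undefined) args.push(String(revision));
  args.push("--namespace", namespace);
  return args;
}
```

In the A/B scenario, a pipeline could call `helmUpgradeInstallArgs` with the winning chart version and, if health checks fail afterwards, issue `helmRollbackArgs(release, namespace)` to return to the last known-good revision.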