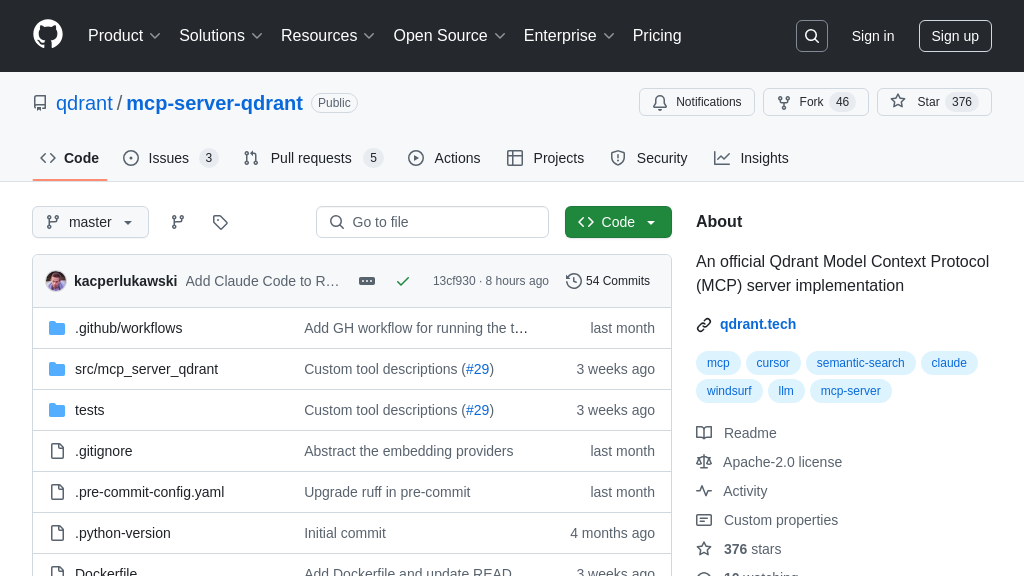

mcp-server-qdrant

mcp-server-qdrant: An MCP server for semantic memory using Qdrant. Connect LLMs to a vector database for persistent knowledge.

mcp-server-qdrant Solution Overview

mcp-server-qdrant is a Model Context Protocol (MCP) server that gives AI models semantic memory backed by the Qdrant vector search engine. It acts as a bridge, letting models store information in a Qdrant database and retrieve it later with natural-language queries.

This server offers two primary tools: qdrant-store for saving information and associated metadata, and qdrant-find for retrieving relevant information based on a query. By using vector embeddings, it enables semantic search, allowing AI models to find information based on meaning rather than exact keyword matches.
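For orientation, this is roughly what an MCP client sends when a model invokes these tools. MCP carries tool invocations as JSON-RPC 2.0 `tools/call` requests. The argument names `information`, `metadata` and `query` are assumptions based on the tool descriptions above, the `tool_call` helper is hypothetical, and all example values are made up.

```python
import json


def tool_call(request_id: int, name: str, arguments: dict) -> dict:
    """Build a JSON-RPC 2.0 'tools/call' request in the shape MCP uses."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }


# Assumed argument names: "information"/"metadata" for qdrant-store and
# "query" for qdrant-find. Check them against the schema the server
# reports via 'tools/list'.
store_request = tool_call(
    1,
    "qdrant-store",
    {
        "information": "The user prefers answers with Python examples.",
        "metadata": {"user_id": "u-42", "topic": "preferences"},
    },
)
find_request = tool_call(
    2,
    "qdrant-find",
    {"query": "Which programming language does the user like?"},
)

if __name__ == "__main__":
    print(json.dumps(store_request, indent=2))
    print(json.dumps(find_request, indent=2))
```

In practice the MCP client library builds these messages for you; the sketch only shows that storing and searching are ordinary tool calls with small JSON arguments.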

Its core value is giving AI models a persistent memory that can be searched by meaning, so they can recall facts and context from earlier interactions and use them to produce more accurate, better-grounded responses. It works with any MCP-compatible client and supports the standard I/O (stdio) and Server-Sent Events (SSE) transports. Configuration is supplied through environment variables, which keeps deployment flexible.
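A minimal sketch of reading this environment-driven configuration. The variable names `QDRANT_URL`, `QDRANT_API_KEY`, `COLLECTION_NAME` and `EMBEDDING_MODEL` are assumed to match the project's README; check them against the documentation for your version. `ServerSettings` and `load_settings` are hypothetical illustrations, not the server's own code.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional

DEFAULT_EMBEDDING_MODEL = "sentence-transformers/all-MiniLM-L6-v2"


@dataclass(frozen=True)
class ServerSettings:
    qdrant_url: Optional[str]
    qdrant_api_key: Optional[str]
    collection_name: Optional[str]
    embedding_model: str


def load_settings(env: Mapping[str, str] = os.environ) -> ServerSettings:
    """Read settings from environment variables (names assumed, see lead-in)."""
    return ServerSettings(
        qdrant_url=env.get("QDRANT_URL"),
        qdrant_api_key=env.get("QDRANT_API_KEY"),  # unset for unsecured local instances
        collection_name=env.get("COLLECTION_NAME"),
        # Fall back to the default embedding model named in this document.
        embedding_model=env.get("EMBEDDING_MODEL", DEFAULT_EMBEDDING_MODEL),
    )


if __name__ == "__main__":
    print(load_settings({"QDRANT_URL": "http://localhost:6333", "COLLECTION_NAME": "memories"}))
```

Accepting the environment as a parameter keeps the loader easy to test with a plain dictionary while still defaulting to the real process environment.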

mcp-server-qdrant Key Capabilities

Semantic Memory Storage

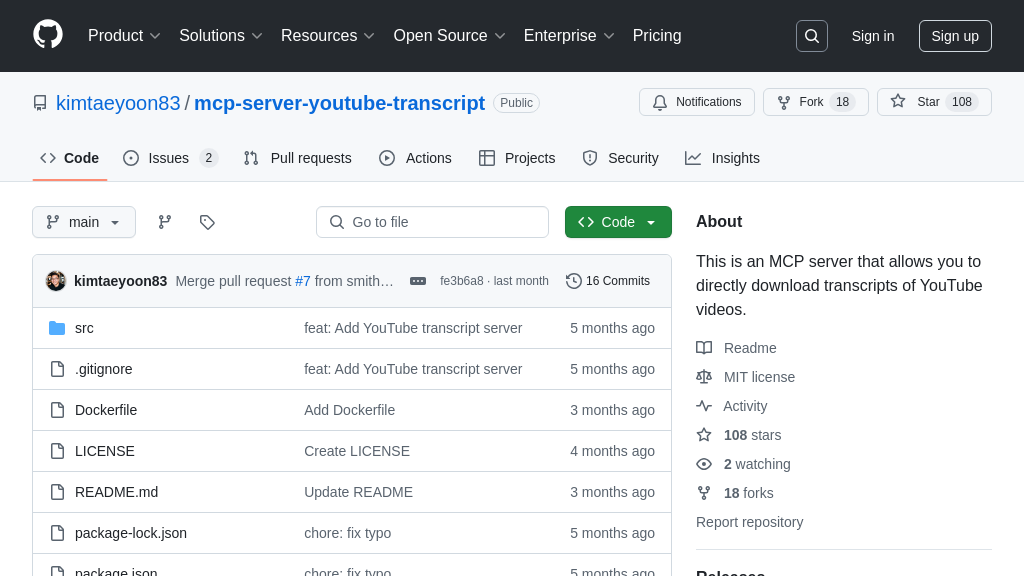

mcp-server-qdrant acts as a semantic memory layer by storing information in the Qdrant vector search engine. Its qdrant-store tool accepts the information as a string plus optional metadata as a JSON object, letting AI models offload and persist contextual data in a long-term memory store. The server encodes each input into a vector embedding with the configured embedding model (sentence-transformers/all-MiniLM-L6-v2 by default), which is what makes semantic similarity search possible. This matters for AI applications that need to remember and recall past interactions or learned knowledge.
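To make the storage step concrete, here is a toy, in-memory imitation of what qdrant-store does: embed the text, then keep the vector together with a payload holding the original text and its metadata. The hashed bag-of-words `toy_embed` is a hypothetical stand-in for a real embedding model; it only captures word overlap, not meaning. The payload keys `document` and `metadata` are also assumptions, and `ToyMemory` is not part of the server.

```python
import math
import re
import uuid
import zlib
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional

# all-MiniLM-L6-v2 produces 384-dimensional vectors; 64 keeps the toy small.
DIM = 64


def toy_embed(text: str, dim: int = DIM) -> List[float]:
    """Hypothetical embedding: hash each lowercase token into a bucket, then L2-normalise."""
    vec = [0.0] * dim
    for token in re.findall(r"[a-z0-9]+", text.lower()):
        vec[zlib.crc32(token.encode("utf-8")) % dim] += 1.0
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else vec


@dataclass
class Point:
    id: str
    vector: List[float]
    payload: Dict[str, Any]


@dataclass
class ToyMemory:
    points: List[Point] = field(default_factory=list)

    def store(self, information: str, metadata: Optional[Dict[str, Any]] = None) -> str:
        """Mimic qdrant-store: embed the text and keep text plus metadata as the payload."""
        point = Point(
            id=str(uuid.uuid4()),
            vector=toy_embed(information),
            payload={"document": information, "metadata": metadata or {}},
        )
        self.points.append(point)
        return point.id


if __name__ == "__main__":
    memory = ToyMemory()
    memory.store("User prefers dark mode", {"user_id": "u-42"})
    print(memory.points[0].payload)
```

A real deployment swaps `toy_embed` for the configured model and the list for a Qdrant collection, but the shape of each stored point (vector plus payload) is the same idea.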

For example, a chatbot could store user preferences and conversation history in Qdrant. When a user returns, the chatbot retrieves the relevant entries from the vector database to personalize the interaction. The metadata field can hold additional context, such as timestamps or user IDs. Under the hood, the server uses the FastEmbed library to generate embeddings and the Qdrant client to communicate with the Qdrant database.

Semantic Information Retrieval

mcp-server-qdrant lets AI models retrieve relevant information from the Qdrant database through semantic search. The qdrant-find tool accepts a query string and returns the matching stored entries as separate messages, so models can draw on previously stored knowledge when reasoning and making decisions. Because the search compares meaning rather than exact keywords, the results tend to be more accurate and contextually relevant.
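The retrieval step boils down to nearest-neighbour search: compare the query's embedding with every stored embedding and return the closest entries. The sketch below uses cosine similarity, a common choice for sentence embeddings, over hand-made three-dimensional vectors; the `find` function and the example data are hypothetical. Qdrant itself answers such queries with an approximate nearest-neighbour index (HNSW) rather than the linear scan shown here.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def find(
    query_vector: Sequence[float],
    stored: Dict[str, Sequence[float]],
    limit: int = 3,
) -> List[Tuple[str, float]]:
    """Rank stored entries by similarity to the query vector, best match first."""
    scored = [(doc, cosine(query_vector, vec)) for doc, vec in stored.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:limit]


# Hand-made vectors standing in for real embeddings.
stored = {
    "user likes dark mode": [0.9, 0.1, 0.0],
    "user prefers Python": [0.1, 0.95, 0.1],
    "user lives in Berlin": [0.0, 0.2, 0.95],
}
query = [0.85, 0.2, 0.05]  # pretend embedding of "what theme does the user want?"

if __name__ == "__main__":
    for doc, score in find(query, stored, limit=2):
        print(f"{score:.3f}  {doc}")
```

Each returned entry corresponds to one of the separate messages qdrant-find sends back to the model.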

Consider a code generation tool that stores code snippets together with their descriptions. When a developer asks it to generate code for a specific task, the tool can call qdrant-find to search the database for relevant snippets and use them as a starting point, saving time and effort. Internally, the query string is encoded into a vector embedding, which is then compared against the embeddings stored in Qdrant in a similarity search.

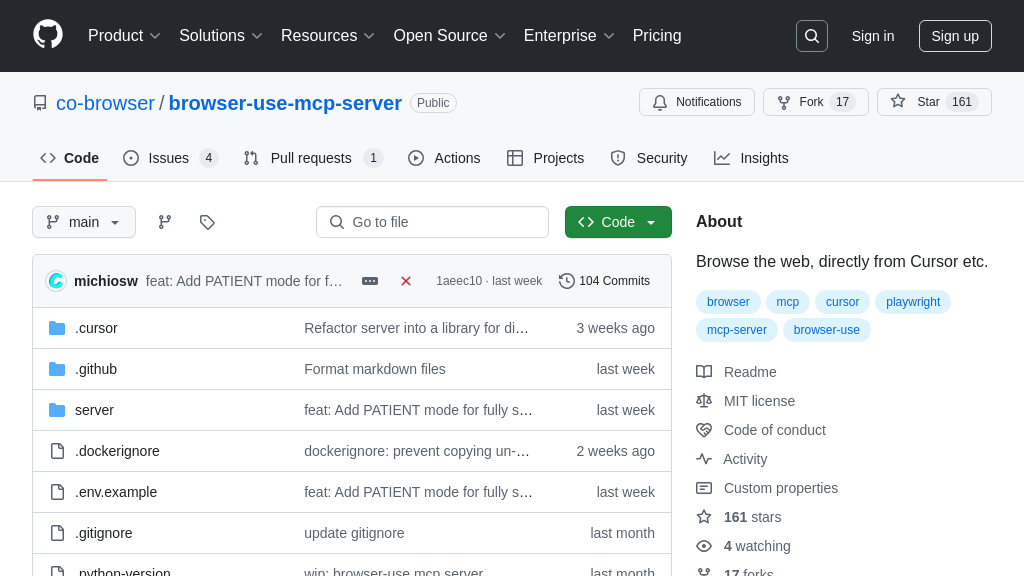

Integration with MCP Ecosystem

mcp-server-qdrant integrates with the Model Context Protocol (MCP) ecosystem, giving AI models a standardized way to interact with a vector database. Because it follows MCP, the server can be connected to MCP-compatible clients such as Claude, Cursor, and Windsurf, so developers can add semantic memory to AI applications without tying them to a specific platform or tool. It supports both the stdio and SSE transports, enabling local as well as remote connections.
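With the stdio transport, the client starts the server as a local subprocess. The sketch below generates a Claude Desktop-style `mcpServers` entry that launches the server through uvx. The server key `qdrant`, the URL and the collection name are illustrative, the environment variable names are assumed to match the project's README, and `claude_desktop_config` is a hypothetical helper.

```python
import json
from typing import Any, Dict, Optional


def claude_desktop_config(
    qdrant_url: str, collection: str, api_key: Optional[str] = None
) -> Dict[str, Any]:
    """Build an 'mcpServers' entry that runs the server over stdio via uvx."""
    env = {
        "QDRANT_URL": qdrant_url,
        "COLLECTION_NAME": collection,
        "EMBEDDING_MODEL": "sentence-transformers/all-MiniLM-L6-v2",
    }
    if api_key:
        # Only needed when the Qdrant instance requires authentication.
        env["QDRANT_API_KEY"] = api_key
    return {
        "mcpServers": {
            "qdrant": {  # arbitrary name the client shows for this server
                "command": "uvx",
                "args": ["mcp-server-qdrant"],
                "env": env,
            }
        }
    }


if __name__ == "__main__":
    print(json.dumps(claude_desktop_config("http://localhost:6333", "memories"), indent=2))
```

Generating the entry from code rather than editing JSON by hand makes it harder to leave a secret such as the API key in a shared configuration by accident.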

For instance, a developer can pair mcp-server-qdrant with Cursor to build a code search tool. By configuring the server with tool descriptions suited to code, the developer can store snippets and their descriptions in Qdrant and then search them from Cursor with natural-language queries. With the SSE transport, the server can be hosted remotely and shared by multiple developers. In short, the MCP integration reduces the work needed to connect AI models to external data sources and tools.
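A sketch of preparing such a code-search server for SSE. It assumes the `--transport sse` command-line option and the `TOOL_STORE_DESCRIPTION` / `TOOL_FIND_DESCRIPTION` environment variables described in the project's README; verify both for your version. The description texts and the `sse_launch_spec` helper are hypothetical.

```python
import os
from typing import Dict, List, Tuple

# Hypothetical tool descriptions that steer the model towards code search.
STORE_DESCRIPTION = (
    "Store a reusable code snippet. Put a natural-language description of what "
    "the code does in 'information' and the code itself in 'metadata'."
)
FIND_DESCRIPTION = (
    "Search stored code snippets by describing, in natural language, "
    "what the code should do."
)


def sse_launch_spec(qdrant_url: str, collection: str) -> Tuple[List[str], Dict[str, str]]:
    """Return (argv, env) for starting the server with the SSE transport via uvx."""
    argv = ["uvx", "mcp-server-qdrant", "--transport", "sse"]
    env = dict(os.environ)  # inherit PATH and friends so uvx can be found
    env.update(
        {
            "QDRANT_URL": qdrant_url,
            "COLLECTION_NAME": collection,
            "TOOL_STORE_DESCRIPTION": STORE_DESCRIPTION,
            "TOOL_FIND_DESCRIPTION": FIND_DESCRIPTION,
        }
    )
    return argv, env
```

Passing the result to `subprocess.run(argv, env=env)` would start the server; Cursor then connects to its SSE endpoint over HTTP. FastMCP-based servers commonly default to port 8000 with the stream at `/sse`, but treat that address as an assumption and confirm it in the server's startup output.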

Security Considerations

The mcp-server-qdrant has a known security vulnerability, identified as CVE-2024-4994, which could lead to Remote Code Execution (RCE). It is crucial to update to version 0.7.2 or later to mitigate this risk. This highlights the importance of staying up-to-date with security patches and best practices when deploying and using the mcp-server-qdrant.

Flexible Deployment Options

mcp-server-qdrant supports several deployment options, including uvx, Docker, and Smithery, so developers can pick the method that best fits their needs and infrastructure. uvx runs the server directly without a separate installation step, the provided Dockerfile builds and runs it in a container, and Smithery automates installation for specific clients such as Claude Desktop. This flexibility makes it straightforward to fit the server into existing AI development workflows.