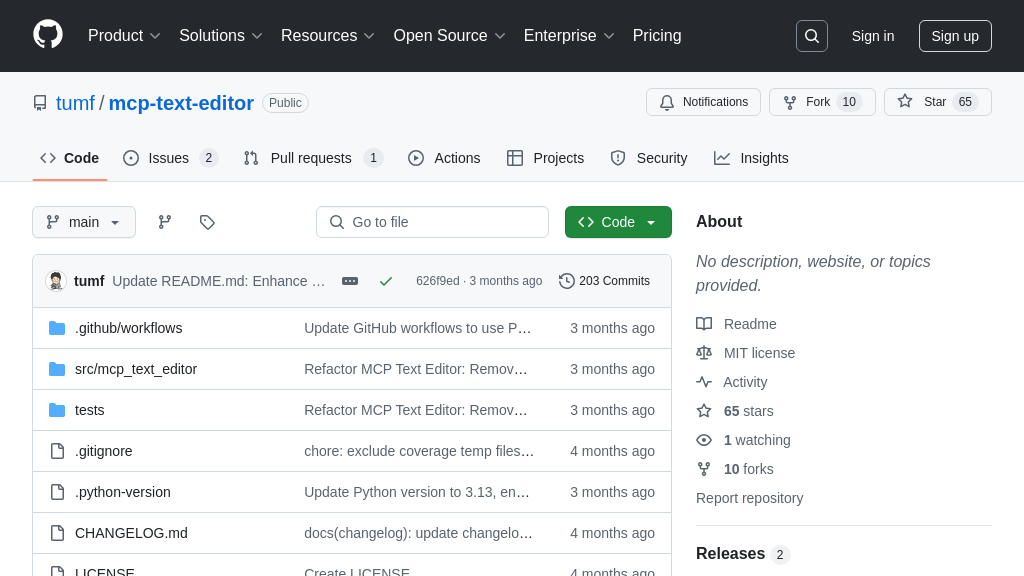

mcp-text-editor

mcp-text-editor: An MCP server for efficient LLM text file editing.

mcp-text-editor Solution Overview

The MCP Text Editor Server gives AI models line-oriented text file editing through the standardized Model Context Protocol (MCP) interface. It lets AI models read and modify text files safely and efficiently, which is particularly valuable for LLM tools where token usage is a concern.

Key features include token-efficient partial file access by line range, safe concurrent editing with hash-based validation, and support for many character encodings. AI models can read file contents by line range and apply line-based patches with conflict detection. By implementing the Model Context Protocol, the server gives AI clients a standard, reliable way to edit files, which makes it well suited to collaborative editing tools, automated text processing systems, and other scenarios that require synchronized file access. Developers can use it to build AI-driven applications that read, modify, and manage text-based data. Installation options include uvx, Smithery, or manual setup.

mcp-text-editor Key Capabilities

Line-Oriented Editing

The MCP Text Editor Server provides precise, line-based manipulation of text files, enabling AI models to make targeted changes without processing entire documents. This is useful for tasks such as code refactoring, content updates, and configuration adjustments. Because the server reads and writes specific line ranges, only the necessary parts of a file are accessed and modified. This minimizes the amount of data transferred, reducing latency and computational overhead, which matters most when working with large files or limited bandwidth. The line-oriented design also simplifies conflict resolution, because changes are localized and easier to track.
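Reading a single line range can be sketched as follows. This is a minimal illustration assuming 1-based, inclusive line numbers; `read_line_range` is a hypothetical name, not the server's actual tool. The file is still read on the server side, but only the requested slice is returned to the model.

```python
from pathlib import Path
from typing import Optional


def read_line_range(path: str, start: int, end: Optional[int] = None,
                    encoding: str = "utf-8") -> str:
    """Return lines start..end of a text file (1-based, inclusive).

    `end=None` means "through the last line". Hypothetical helper for
    illustration; it is not the server's actual tool interface.
    """
    if start < 1 or (end is not None and end < start):
        raise ValueError(f"invalid line range {start}-{end}")
    lines = Path(path).read_text(encoding=encoding).splitlines(keepends=True)
    stop = len(lines) if end is None else min(end, len(lines))
    # Only this slice goes back to the model; the rest of the file
    # never enters its context.
    return "".join(lines[start - 1:stop])
```

For a four-line file containing `a`, `b`, `c`, `d`, `read_line_range(path, 2, 3)` returns `"b\nc\n"`.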

For example, an AI model could use this feature to automatically update a configuration file by modifying specific lines containing outdated parameters. The model would first read the relevant lines, determine the necessary changes, and then use the server to replace those lines with the updated values. This targeted approach ensures that only the required modifications are made, minimizing the risk of unintended side effects.
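The read, decide, replace workflow in this example can be sketched in two hypothetical steps: locate the line to change, then replace exactly that line range. `find_line`, `replace_lines`, and the `port=` configuration key are illustrative assumptions, not part of the server's documented interface.

```python
from pathlib import Path
from typing import List, Optional


def find_line(path: str, prefix: str, encoding: str = "utf-8") -> Optional[int]:
    """Return the 1-based number of the first line starting with `prefix`, or None."""
    with open(path, encoding=encoding) as f:
        for number, line in enumerate(f, start=1):
            if line.startswith(prefix):
                return number
    return None


def replace_lines(path: str, start: int, end: int, new_lines: List[str],
                  encoding: str = "utf-8") -> None:
    """Replace lines start..end (1-based, inclusive); all other lines are kept as-is."""
    p = Path(path)
    lines = p.read_text(encoding=encoding).splitlines(keepends=True)
    if not 1 <= start <= end <= len(lines):
        raise ValueError(f"invalid range {start}-{end} for a {len(lines)}-line file")
    # Terminate each replacement line so it does not merge with the following line.
    lines[start - 1:end] = [ln if ln.endswith("\n") else ln + "\n" for ln in new_lines]
    p.write_text("".join(lines), encoding=encoding)
```

For example, `n = find_line("app.conf", "port=")` followed by `replace_lines("app.conf", n, n, ["port=9000"])` rewrites only that one line and leaves the rest of the file untouched.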

Token-Efficient Partial Access

A key feature is the ability to access specific portions of text files, defined by line ranges. This is particularly valuable for LLM-based tools, where token usage directly impacts cost and performance. By only loading the necessary parts of a file, the server minimizes the number of tokens required for processing, leading to significant savings and faster response times. The server supports retrieving multiple ranges from multiple files in a single operation, further optimizing efficiency. This feature is essential for AI models that need to analyze or modify specific sections of a file without incurring the overhead of processing the entire document.
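Batching several ranges from several files into one request could look like the following sketch. The `{path: [(start, end), ...]}` request shape and the `read_ranges` name are assumptions for illustration; the server's actual request format may differ. `itertools.islice` stops iterating once a range's last line is reached, so the tail of a large file is not processed.

```python
from itertools import islice
from typing import Dict, List, Tuple


def read_ranges(requests: Dict[str, List[Tuple[int, int]]],
                encoding: str = "utf-8") -> Dict[str, List[str]]:
    """Return the requested 1-based, inclusive line ranges for several files at once.

    `requests` maps a file path to a list of (start, end) pairs. This request
    shape is a hypothetical illustration, not the server's wire format.
    """
    results: Dict[str, List[str]] = {}
    for path, ranges in requests.items():
        chunks = []
        for start, end in ranges:
            if start < 1 or end < start:
                raise ValueError(f"invalid range {start}-{end} for {path}")
            # Skip to `start`, then stop iterating after line `end`.
            with open(path, encoding=encoding) as f:
                chunks.append("".join(islice(f, start - 1, end)))
        results[path] = chunks
    return results
```

A single call such as `read_ranges({"a.txt": [(1, 2), (4, 5)], "b.txt": [(2, 3)]})` returns three chunks from two files in one round trip.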

Consider an AI model tasked with summarizing specific sections of a large log file. Instead of loading the entire file into its context, the model can use the server to retrieve only the relevant line ranges, such as those containing error messages or specific events. This significantly reduces token consumption and processing time, making the task faster and cheaper.

Concurrent Edit Handling

The server uses hash-based validation to make concurrent editing safe. When a client reads a file, it receives a hash of the file's contents; when it later submits an edit, it sends that hash back, and the server applies the change only if the file's current hash still matches. A mismatch means another process has modified the file since the client read it, so the server rejects the edit instead of risking conflicting changes or data corruption. This ensures that every edit is based on the latest version of the file. The server's error messages include the file's current hash and content, so the client can resolve the conflict and retry.
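A minimal sketch of hash-based validation, assuming the hash is SHA-256 over the decoded file text and that a mismatch is reported together with the current hash and content. The names `content_hash`, `write_if_unchanged`, and `HashMismatchError` are hypothetical.

```python
import hashlib
from pathlib import Path


class HashMismatchError(Exception):
    """Raised when the file changed since the client last read it."""

    def __init__(self, current_hash: str, current_content: str):
        super().__init__(f"hash mismatch; current hash is {current_hash}")
        self.current_hash = current_hash
        self.current_content = current_content


def content_hash(text: str) -> str:
    # SHA-256 over the UTF-8 bytes of the text (the hash choice is an assumption).
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def write_if_unchanged(path: str, expected_hash: str, new_text: str,
                       encoding: str = "utf-8") -> str:
    """Write new_text only if the file still matches expected_hash; return the new hash."""
    p = Path(path)
    current = p.read_text(encoding=encoding)
    current_hash = content_hash(current)
    if current_hash != expected_hash:
        # Hand back the current hash and content so the client can re-read and retry.
        raise HashMismatchError(current_hash, current)
    p.write_text(new_text, encoding=encoding)
    return content_hash(new_text)
```

As written, the check and the write are two separate steps, so a real server would also need a lock (or similar) to close the small window between them.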

Imagine a collaborative coding environment where multiple AI agents are working on the same codebase. The concurrent edit handling feature ensures that each agent's changes are applied safely and consistently, even if they are modifying the same files simultaneously. If one agent attempts to modify a file that has been changed by another agent, the server will detect the conflict and prevent the changes from being applied, prompting the agent to retrieve the latest version of the file and reapply its changes.
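The two-agent scenario can be simulated in memory. `SharedFile` stands in for a file on the server and `edit_with_retry` for an agent's read, modify, write loop; both are hypothetical, and SHA-256 is again assumed as the hash.

```python
import hashlib
from dataclasses import dataclass
from typing import Callable, Tuple


def sha256(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


@dataclass
class SharedFile:
    """In-memory stand-in for a file on the server (hypothetical)."""
    text: str

    def read(self) -> Tuple[str, str]:
        return self.text, sha256(self.text)

    def write(self, expected_hash: str, new_text: str) -> bool:
        # Reject the write if the file changed since `expected_hash` was read.
        if sha256(self.text) != expected_hash:
            return False
        self.text = new_text
        return True


def edit_with_retry(f: SharedFile, transform: Callable[[str], str],
                    max_attempts: int = 3) -> bool:
    """Read, transform, write; on conflict, re-read the latest version and reapply."""
    for _ in range(max_attempts):
        text, h = f.read()
        if f.write(h, transform(text)):
            return True
    return False


if __name__ == "__main__":
    shared = SharedFile("count=1\n")
    text_a, hash_a = shared.read()  # agent A reads
    text_b, hash_b = shared.read()  # agent B reads the same version
    shared.write(hash_a, text_a + "a\n")  # A's write succeeds
    print(shared.write(hash_b, text_b + "b\n"))  # B's stale hash is rejected: False
    print(edit_with_retry(shared, lambda t: t + "b\n"))  # B re-reads and reapplies: True
```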

Flexible Encoding Support

The MCP Text Editor Server supports a wide range of character encodings, including UTF-8, Shift_JIS, and Latin1. This flexibility allows AI models to work with text files in various languages and formats, ensuring compatibility and preventing encoding-related errors. The server allows clients to specify the encoding of the file when reading or writing content, ensuring that the data is correctly interpreted and processed. This feature is crucial for applications that need to handle text files from diverse sources or legacy systems.
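Specifying the encoding explicitly on both read and write might look like this sketch; the helper names are hypothetical. Strict decoding means a wrong encoding raises `UnicodeDecodeError` rather than quietly returning corrupted text.

```python
from pathlib import Path


def read_text(path: str, encoding: str = "utf-8") -> str:
    # errors="strict" raises UnicodeDecodeError instead of silently
    # producing garbled characters when the declared encoding is wrong.
    return Path(path).read_text(encoding=encoding, errors="strict")


def write_text(path: str, text: str, encoding: str = "utf-8") -> None:
    # Encode with the same codec the file already uses so other tools can still read it.
    Path(path).write_text(text, encoding=encoding)
```

For example, `write_text(path, "café", encoding="latin-1")` produces the bytes `b"caf\xe9"`; reading the file back with `encoding="latin-1"` returns `"café"`, while `encoding="utf-8"` raises `UnicodeDecodeError`.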

For instance, an AI model might need to process text files generated by a Japanese application that uses the Shift_JIS encoding. By specifying the correct encoding when accessing the file, the model can ensure that the text is displayed and processed correctly, avoiding garbled characters or data loss. The server's encoding support simplifies the integration of AI models with existing systems and data sources.
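The Shift_JIS case can be demonstrated with bytes in memory; the sample text and the `decode_with` helper are illustrative. Decoding with a wrong single-byte codec such as Latin-1 never fails, it simply yields mojibake, which is why specifying the correct encoding matters.

```python
def decode_with(raw: bytes, encoding: str) -> str:
    """Decode raw file bytes with the caller-specified encoding.

    errors="replace" turns undecodable bytes into U+FFFD so mistakes are visible.
    """
    return raw.decode(encoding, errors="replace")


# Bytes as a Japanese application might write them to disk.
raw = "設定ファイル".encode("shift_jis")

correct = decode_with(raw, "shift_jis")  # original text recovered
as_utf8 = decode_with(raw, "utf-8")      # contains U+FFFD replacement characters
as_latin1 = decode_with(raw, "latin-1")  # no error, but silently garbled
```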

Atomic Multi-File Operations

The server supports atomic multi-file operations, allowing AI models to perform complex changes across multiple files in a single transaction. This ensures that all changes are applied consistently, preventing partial updates or data inconsistencies. If any part of the operation fails, the entire transaction is rolled back, guaranteeing data integrity. This feature is particularly useful for tasks like code refactoring, where changes need to be applied across multiple files simultaneously.

Consider an AI model tasked with renaming a function across an entire project. The atomic multi-file operation feature allows the model to update all occurrences of the function name in a single transaction. If any of the file modifications fail, the entire operation is rolled back, ensuring that the codebase remains in a consistent state. This feature simplifies complex refactoring tasks and reduces the risk of introducing errors.
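Computing the rename can be kept separate from committing it. The hypothetical `rename_identifier` below builds new contents for every affected file in memory, matching only whole identifiers, so the result can then be handed to an all-or-nothing multi-file commit.

```python
import re
from typing import Dict


def rename_identifier(sources: Dict[str, str], old: str, new: str) -> Dict[str, str]:
    """Return new contents for every file that mentions `old`, renamed to `new`.

    Only whole-identifier matches are replaced, so renaming `load` leaves
    `load_all` and `reload` untouched. Nothing is written to disk here.
    """
    pattern = re.compile(rf"\b{re.escape(old)}\b")
    changed: Dict[str, str] = {}
    for path, text in sources.items():
        # A function replacement avoids treating backslashes in `new` as escapes.
        new_text, count = pattern.subn(lambda _m: new, text)
        if count:
            changed[path] = new_text
    return changed
```

Because this is plain text substitution, it would also rename matching words inside strings and comments; a production refactoring tool would typically work on a syntax tree instead.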