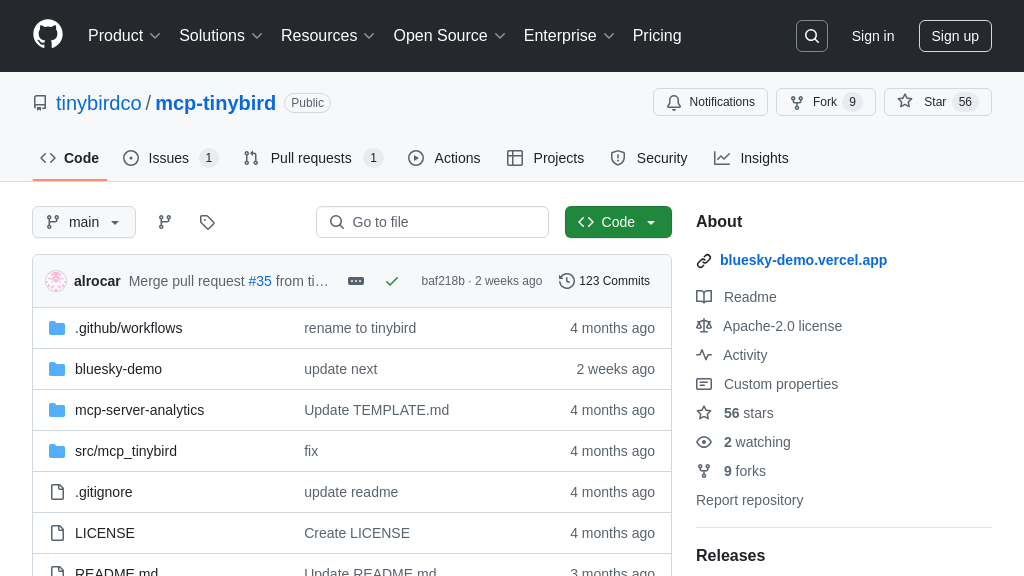

mcp-tinybird

mcp-tinybird: An MCP server bridging AI models and Tinybird for real-time data interaction and analytics.

mcp-tinybird Solution Overview

The Tinybird MCP server connects AI models to the Tinybird data platform. As an MCP server, it lets a model interact with your Tinybird Workspace, both to retrieve data and to make changes. Key features include querying Tinybird Data Sources, calling existing API Endpoints, and pushing datafiles.

This server gives AI models access to real-time analytics from Tinybird to inform their answers and decisions. It addresses a common developer challenge, integrating AI with live data, and offers a direct path to building data-driven AI applications. Because it supports both SSE and stdio modes, the Tinybird MCP server fits into both local and remote client-server setups. Its tools for listing Data Sources, requesting Pipe data, and analyzing Pipe performance bring Tinybird's core functionality into the MCP ecosystem. It can be installed via Smithery or mcp-get, which makes it straightforward for developers to give AI models access to real-time insights.

mcp-tinybird Key Capabilities

Query Tinybird Data Sources

The mcp-tinybird server lets AI models query data stored in Tinybird Data Sources directly. The AI model turns a natural-language request into a SQL query, and the server executes that query against the Tinybird Data Source through the Tinybird Query API. The results are returned to the model in a structured format, so it can work with real-time data without a separate export pipeline or an intermediate data store.

For example, an AI model could use this feature to analyze website traffic data stored in Tinybird, identify trends in user behavior, and optimize content accordingly. Asked "What are the top 5 most visited pages in the last week?", the model would write the corresponding SQL query, the mcp-tinybird server would execute it against the Tinybird Data Source, and the results would be returned to the model.

Technically, this is implemented using the run-select-query tool, which takes a SQL query as input and returns the results as a JSON object. The server uses the Tinybird API URL and Admin Token to authenticate and authorize the query.
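As a rough sketch of what happens underneath run-select-query, the Python below builds (without sending) a request to the Tinybird Query API endpoint `/v0/sql`, passing the SQL in the `q` parameter and the token as a Bearer header. The default host `https://api.tinybird.co`, the `page_views` Data Source, and its columns are assumptions for illustration, and this is not the server's actual code; the SQL is simply what a model might plausibly write for the top-pages question above.

```python
import urllib.parse
import urllib.request

# Assumption: the default Tinybird API host. Workspaces in other regions use a different host.
DEFAULT_TB_API_URL = "https://api.tinybird.co"

# SQL a model might write for "What are the top 5 most visited pages in the last week?"
# The `page_views` Data Source and its `pathname`/`timestamp` columns are hypothetical.
TOP_PAGES_SQL = (
    "SELECT pathname, count() AS visits "
    "FROM page_views "
    "WHERE timestamp >= now() - INTERVAL 7 DAY "
    "GROUP BY pathname "
    "ORDER BY visits DESC "
    "LIMIT 5"
)


def build_query_request(sql: str, token: str, api_url: str = DEFAULT_TB_API_URL) -> urllib.request.Request:
    """Build (but do not send) a GET request to the Tinybird Query API (/v0/sql).

    Assumes `sql` has no FORMAT clause of its own; FORMAT JSON is appended so the
    result comes back as structured JSON that can be handed to the model.
    """
    params = urllib.parse.urlencode({"q": f"{sql} FORMAT JSON"})
    return urllib.request.Request(
        f"{api_url.rstrip('/')}/v0/sql?{params}",
        headers={"Authorization": f"Bearer {token}"},  # token sent as a Bearer header
        method="GET",
    )


if __name__ == "__main__":
    request = build_query_request(TOP_PAGES_SQL, token="<TB_ADMIN_TOKEN>")
    print(request.get_method(), request.full_url)
    # Sending it would be urllib.request.urlopen(request), then json.load() on the response.
```

Building the request separately from sending it keeps the sketch testable offline; a real server would also need error handling for invalid SQL and expired tokens.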

Access Pre-built API Endpoints

This feature enables AI models to access pre-calculated metrics and insights exposed as API Endpoints within Tinybird. Instead of querying raw data, the AI model can request data from existing Tinybird API Endpoints, which the server calls over HTTP. This lets the model retrieve aggregated and transformed data quickly, without recomputing it from raw rows on every request and without having to write that SQL itself. It is particularly useful for frequently used metrics or complex calculations that have already been defined as API Endpoints.

Imagine an AI model designed to provide real-time marketing recommendations. Instead of calculating key performance indicators (KPIs) from raw data, it can use this feature to access pre-built Tinybird API endpoints that provide metrics such as conversion rates, customer acquisition costs, and return on ad spend. The AI model can then use these metrics to generate personalized recommendations for marketing campaigns.

The request-pipe-data tool facilitates this functionality. It takes the name of a Tinybird Pipe Endpoint and any required parameters as input, makes an HTTP request to the endpoint, and returns the data to the AI model.
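A minimal sketch of the kind of HTTP request request-pipe-data issues, assuming the standard Tinybird Endpoint URL shape `/v0/pipes/<pipe_name>.json` with Endpoint parameters passed in the query string. The `marketing_kpis` Pipe and its `date_from`/`date_to` parameters are hypothetical, and the request is built but not sent.

```python
import urllib.parse
import urllib.request

# Assumption: the default Tinybird API host.
DEFAULT_TB_API_URL = "https://api.tinybird.co"


def build_pipe_request(
    pipe_name: str, params: dict, token: str, api_url: str = DEFAULT_TB_API_URL
) -> urllib.request.Request:
    """Build (but do not send) a GET request to a published Tinybird Pipe Endpoint."""
    # The .json suffix selects JSON output; Endpoint parameters go in the query string.
    url = f"{api_url.rstrip('/')}/v0/pipes/{urllib.parse.quote(pipe_name)}.json"
    if params:
        url = f"{url}?{urllib.parse.urlencode(params)}"
    return urllib.request.Request(
        url,
        headers={"Authorization": f"Bearer {token}"},
        method="GET",
    )


if __name__ == "__main__":
    # Hypothetical Pipe exposing marketing KPIs, filtered by a date range.
    request = build_pipe_request(
        "marketing_kpis",
        {"date_from": "2024-01-01", "date_to": "2024-01-31"},
        token="<TB_TOKEN>",
    )
    print(request.full_url)
```

Because the Endpoint's SQL already lives in Tinybird, the model only supplies the Pipe name and parameter values.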

Push Datafiles to Tinybird

The mcp-tinybird server allows AI models to push datafiles directly into a Tinybird Workspace. Datafiles are the local definition files for Tinybird resources (such as `.datasource` and `.pipe` files), and pushing one creates or updates the corresponding Data Source or Pipe in the Workspace. This is useful when the AI model designs a new Data Source or Pipe, for example to hold data it generates or to transform existing data, and needs that resource to exist in Tinybird for further analysis or visualization, without manual setup.

For instance, an AI model could write a datafile describing a Data Source for synthetic test data and push it to create that Data Source in the Workspace. Alternatively, it could write a Pipe datafile that transforms existing data and push it to make the transformation available for real-time analysis. The push-datafile tool creates the remote Data Source or Pipe in the Tinybird Workspace from a local datafile. The Filesystem MCP can be used to save the files generated by the MCP server.
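To make "datafile" concrete, here is a small Python helper that renders a minimal `.datasource` datafile (a `SCHEMA` block plus MergeTree engine settings) of the kind push-datafile could take as input. The `synthetic_events` columns are hypothetical, and real datafiles often add JSONPaths, partitioning, and other settings; treat this as a sketch, not the server's own generator.

```python
# Each column is (name, ClickHouse type). Names and types here are hypothetical.
SYNTHETIC_EVENTS_COLUMNS = [
    ("timestamp", "DateTime"),
    ("user_id", "String"),
    ("event", "LowCardinality(String)"),
]


def render_datasource(columns: list[tuple[str, str]], sorting_key: str) -> str:
    """Render a minimal .datasource datafile: a SCHEMA block plus MergeTree engine settings."""
    schema = ",\n".join(f"    `{name}` {col_type}" for name, col_type in columns)
    return (
        "SCHEMA >\n"
        f"{schema}\n"
        "\n"
        'ENGINE "MergeTree"\n'
        f'ENGINE_SORTING_KEY "{sorting_key}"\n'
    )


if __name__ == "__main__":
    # The Filesystem MCP (or plain file I/O) could save this as synthetic_events.datasource.
    print(render_datasource(SYNTHETIC_EVENTS_COLUMNS, sorting_key="timestamp"))
```

Note that the file only defines the Data Source; ingesting rows into it is a separate step.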

Comprehensive Toolset for Data Interaction

The mcp-tinybird server provides a rich set of tools that enable AI models to interact with Tinybird in various ways. These tools include functionalities such as listing data sources and pipes, retrieving schema information, running SQL queries, appending insights, accessing Tinybird documentation, saving events, and analyzing pipe performance. This comprehensive toolset provides AI models with the flexibility and control needed to effectively leverage Tinybird for data analysis and decision-making.

For example, an AI model could use the list-data-sources tool to discover the Data Sources available in the Tinybird Workspace, the get-data-source tool to retrieve the schema of a specific Data Source, and the run-select-query tool to execute a custom SQL query against it. The analyze-pipe tool can then help identify performance improvements for existing Pipes.
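Under MCP, each of these steps is a JSON-RPC 2.0 `tools/call` request from the client to the server. The sketch below builds the three messages for the discovery workflow just described. The argument names (`datasource_id`, `select_query`) are assumptions; a client should read the real ones from each tool's `inputSchema`, as returned by `tools/list`.

```python
import json


def tool_call(request_id: int, name: str, arguments: dict) -> dict:
    """Build an MCP JSON-RPC 2.0 `tools/call` request."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }


def build_discovery_workflow(datasource: str, sql: str) -> list[dict]:
    """Requests for: list Data Sources, inspect one schema, then run a query against it."""
    steps = [
        ("list-data-sources", {}),
        ("get-data-source", {"datasource_id": datasource}),  # argument name is an assumption
        ("run-select-query", {"select_query": sql}),  # argument name is an assumption
    ]
    return [tool_call(i, name, args) for i, (name, args) in enumerate(steps, start=1)]


if __name__ == "__main__":
    # In a live session the model issues each call only after reading the previous result.
    for message in build_discovery_workflow("page_views", "SELECT count() FROM page_views"):
        print(json.dumps(message))
```

Listing the steps statically is a simplification: the model decides which Data Source to inspect and what SQL to write based on the earlier responses.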

Integration with Standard I/O and SSE

The mcp-tinybird server supports both standard input/output (stdio) and Server-Sent Events (SSE) modes for communicating with MCP clients. Stdio mode suits local setups where the client launches the server as a subprocess, such as desktop applications and local development, while SSE mode suits deployments where the client connects to the server over HTTP, such as web applications or a shared remote server. This dual-mode support makes it straightforward to fit the mcp-tinybird server into existing AI workflows.

For example, the Claude Desktop application can launch the mcp-tinybird server and communicate with it over stdio, while a web application can connect to a running server over SSE. The same tools are available in both modes; the choice depends on where the client and server run and how they are deployed.
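The two modes differ mainly in how messages are framed on the wire. As a sketch based on the MCP specification's stdio and HTTP+SSE transports (2024-11-05 revision), the Python below shows the same JSON-RPC message written as one newline-delimited line for stdio versus as an SSE `message` event. MCP SDKs normally handle this framing, and the helper names here are hypothetical.

```python
import json


def frame_stdio(message: dict) -> bytes:
    """Frame a JSON-RPC message for the stdio transport: one UTF-8 JSON object per line."""
    # json.dumps escapes newlines inside strings, so the only raw newline is the delimiter.
    return (json.dumps(message, separators=(",", ":")) + "\n").encode("utf-8")


def frame_sse(message: dict, event: str = "message") -> str:
    """Frame a JSON-RPC message as a Server-Sent Events event for the HTTP+SSE transport."""
    return f"event: {event}\ndata: {json.dumps(message, separators=(',', ':'))}\n\n"


if __name__ == "__main__":
    request = {"jsonrpc": "2.0", "id": 1, "method": "tools/list"}
    print(frame_stdio(request))
    print(frame_sse(request))
```

In the HTTP+SSE transport, only server-to-client messages travel as SSE events; the client sends its requests with HTTP POST.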