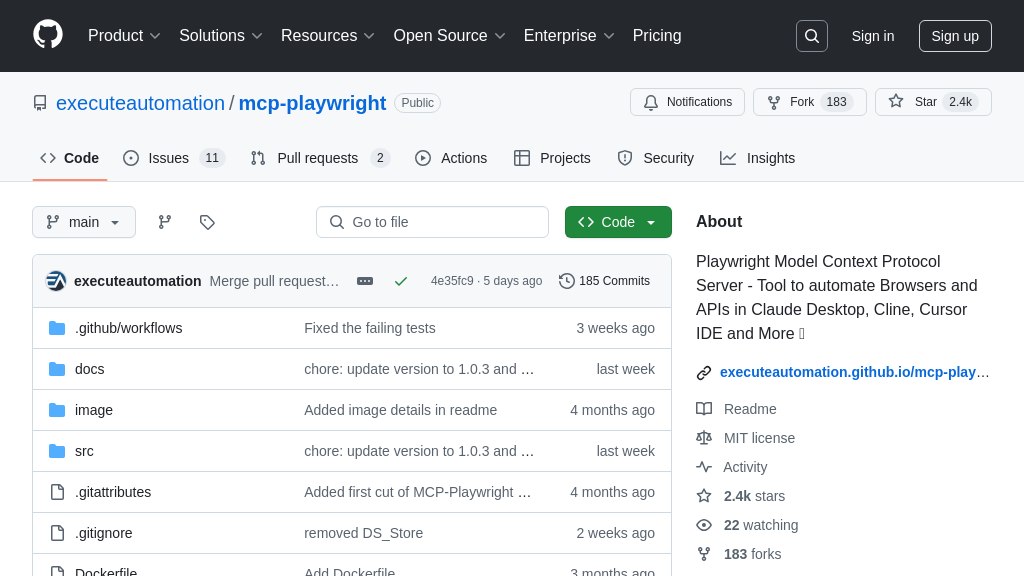

mcp-use

mcp-use is an open-source MCP (Model Context Protocol) client library that lets developers connect any LLM to any MCP server, so they can build custom agents without locking themselves into a single vendor's models or tools.

mcp-use Solution Overview

mcp-use is an open-source MCP client library that connects any Large Language Model (LLM) supported by LangChain to any MCP server. It implements the client side of the MCP architecture, which lets developers build custom AI agents that call the external tools and data sources MCP servers expose. Because it is open source, it avoids the vendor lock-in that often comes with proprietary clients. Setup uses a straightforward JSON-based configuration, and HTTP connections are supported, which simplifies integration with MCP servers reachable over the web. Developers can therefore pair the LLM of their choice with the growing set of MCP resources without proprietary constraints. Installation and configuration steps are covered in the project documentation.

mcp-use Key Capabilities

Universal MCP Connectivity

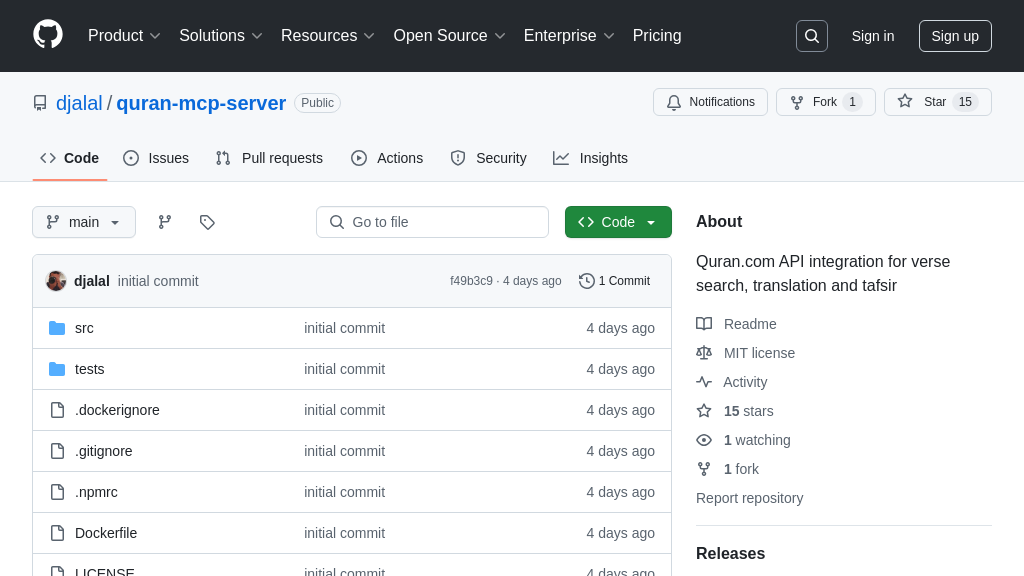

mcp-use acts as a bridge within the MCP ecosystem: it connects any Large Language Model (LLM) supported by the LangChain framework to any compliant MCP server. It abstracts away the details of the Model Context Protocol, so developers can focus on building agents rather than on low-level communication. Because it follows the MCP standard, it interoperates with any compliant server and translates between the LLM and the tools or resources that server exposes. This decouples the choice of LLM from the choice of MCP tools: developers can mix and match components freely, and no LLM is tied to a proprietary or limited tool set. The library handles the request/response cycles defined by MCP, managing the flow of information between the model and the external system behind the server.

- Usage Scenario: A developer building a research assistant application using an open-source LLM like Llama 3 via LangChain can use

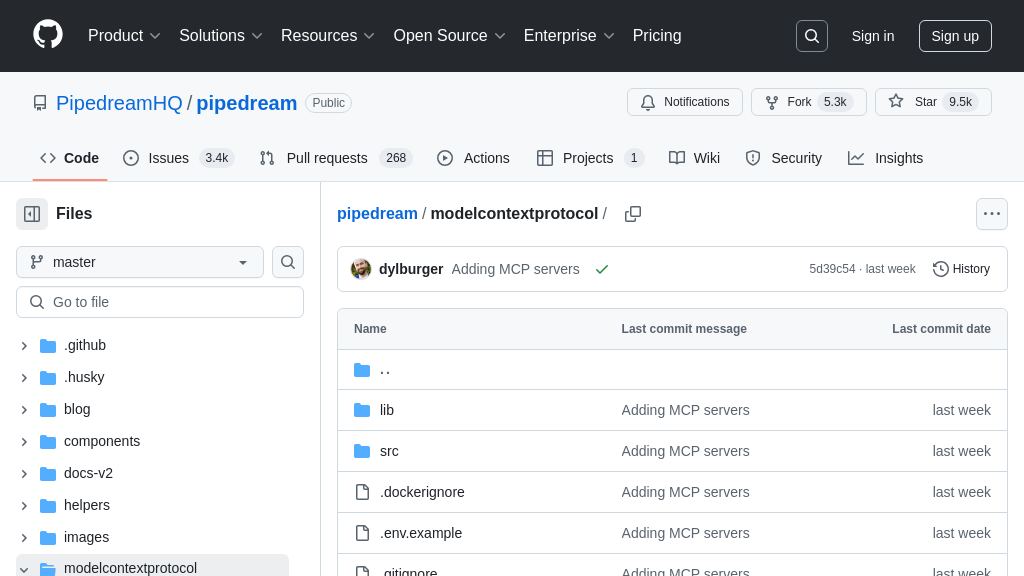

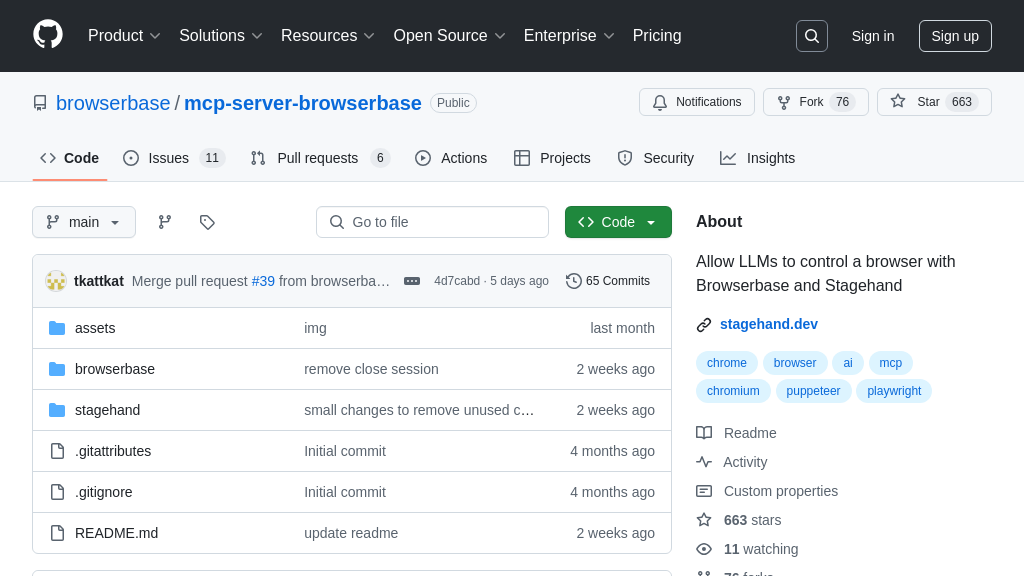

mcp-useto connect their agent to an enterprise MCP server hosting internal document databases and search tools, without needing to modify the agent's core logic significantly when switching tools or LLM providers. - Technical Detail: Implements the client-side logic of the MCP client-server architecture, capable of communicating with any server exposing an MCP-compliant endpoint.
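On the wire, the request/response cycle described above is JSON-RPC 2.0, as defined by the MCP specification: a client lists a server's tools with `tools/list` and invokes one with `tools/call`. mcp-use builds and parses these messages internally, so application code never writes them by hand; the sketch below only illustrates what a client exchanges with a server. `McpMessageBuilder`, `extract_text`, and the `search_documents` tool are hypothetical names, not part of mcp-use, and the `initialize` handshake a real client performs first is omitted.

```python
from __future__ import annotations

import itertools
import json
from typing import Any


class McpMessageBuilder:
    """Builds MCP client requests as JSON-RPC 2.0 strings (hypothetical, illustrative only)."""

    def __init__(self) -> None:
        # JSON-RPC matches responses to requests by id, so each request gets a fresh one.
        self._ids = itertools.count(1)

    def request(self, method: str, params: dict[str, Any] | None = None) -> str:
        msg: dict[str, Any] = {"jsonrpc": "2.0", "id": next(self._ids), "method": method}
        if params is not None:
            msg["params"] = params
        return json.dumps(msg)

    def list_tools(self) -> str:
        # Ask the server which tools it exposes.
        return self.request("tools/list")

    def call_tool(self, name: str, arguments: dict[str, Any]) -> str:
        # Invoke one tool by name with the JSON arguments the LLM chose.
        return self.request("tools/call", {"name": name, "arguments": arguments})


def extract_text(response: str) -> str:
    """Return the text parts of a tools/call response; raise on a JSON-RPC error."""
    msg = json.loads(response)
    if "error" in msg:
        raise RuntimeError(msg["error"].get("message", "unknown JSON-RPC error"))
    parts = msg["result"].get("content", [])
    # Tool results are a list of typed content items; keep only the text ones.
    return "\n".join(p["text"] for p in parts if p.get("type") == "text")


builder = McpMessageBuilder()
print(builder.list_tools())
# {"jsonrpc": "2.0", "id": 1, "method": "tools/list"}
print(builder.call_tool("search_documents", {"query": "Q3 revenue"}))  # hypothetical tool
```

Because the LLM only ever sees tool names, descriptions, and results, swapping the model or the server does not change this message shape, which is what makes the decoupling described above possible.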

Open Source Flexibility

As an open-source MCP client library, mcp-use has clear advantages over closed-source or platform-specific alternatives. The main one is freedom from vendor lock-in: developers and organizations can use, inspect, modify, and redistribute the library under its open-source license, without depending on a single provider's ecosystem. That transparency matters in security-conscious environments where code must be audited. Open development also invites community contributions, which can bring faster bug fixes, new features, and broader compatibility driven by user needs rather than a vendor's roadmap. Developers can contribute improvements directly or tailor the library to their own requirements, for example by adding custom logging, integrating their own authentication methods, or tuning performance for a particular workload. Closed systems, by contrast, limit users to the features and integrations the vendor provides and often carry licensing costs.

- Usage Scenario: A startup developing a novel AI agent can leverage

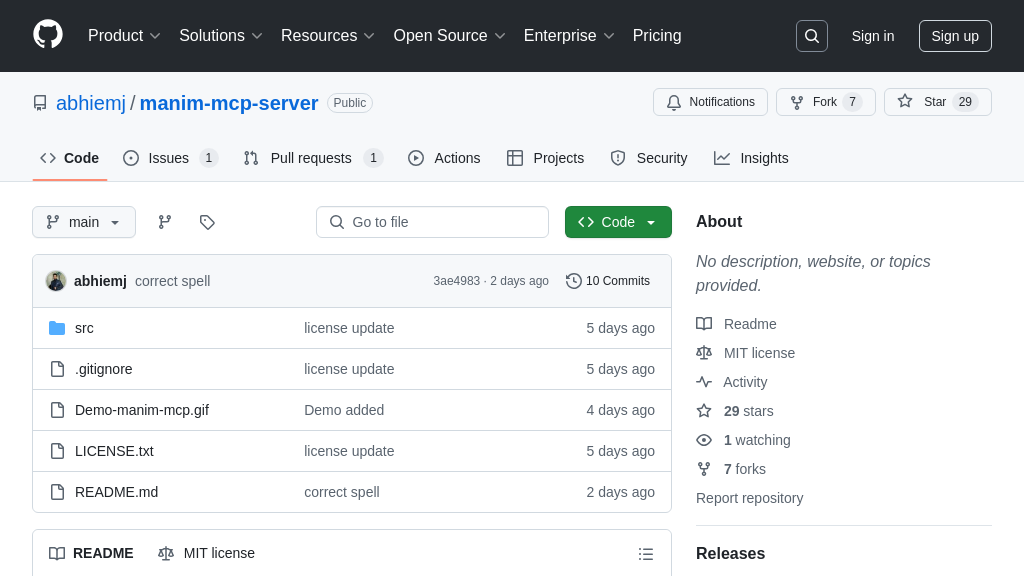

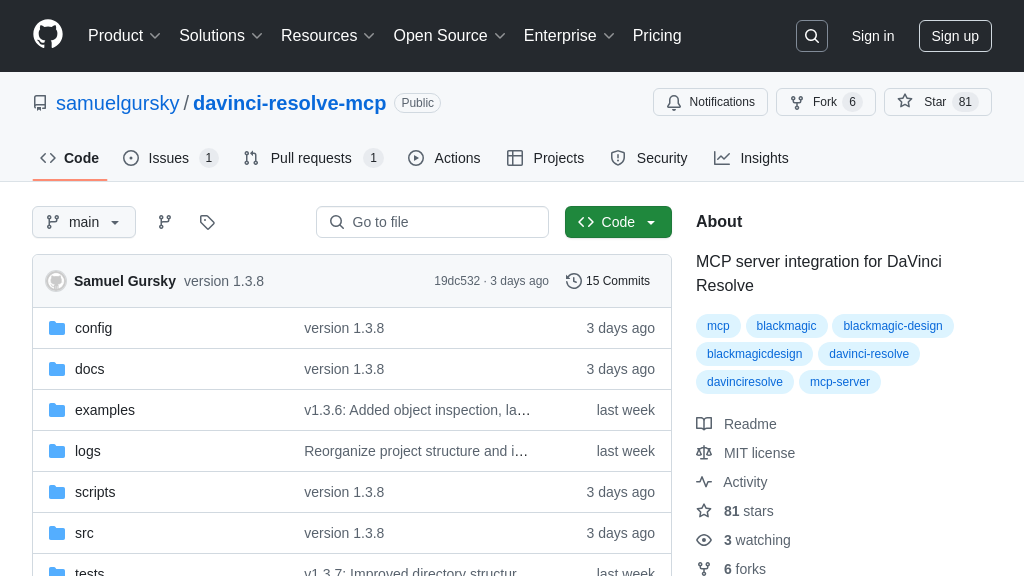

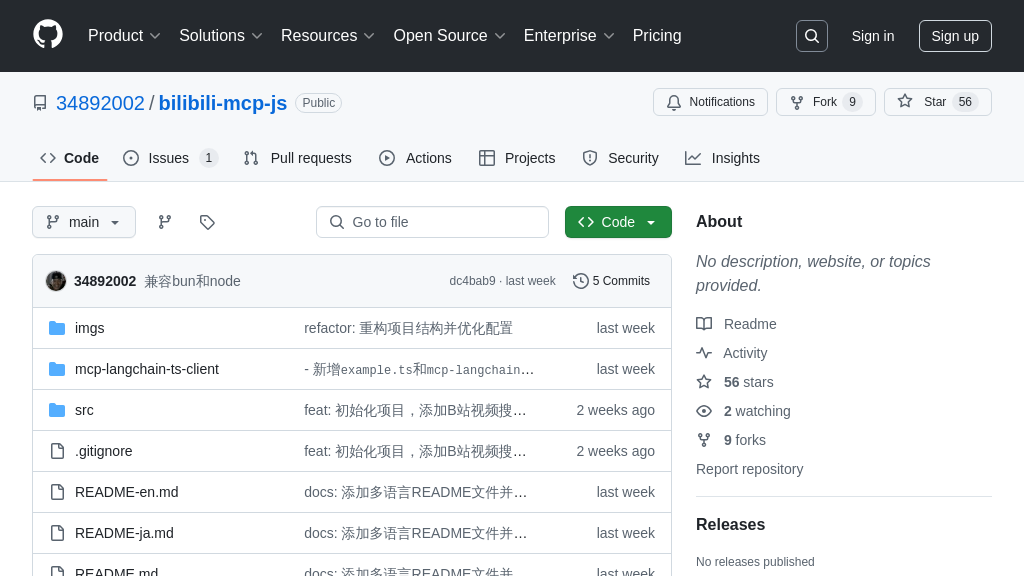

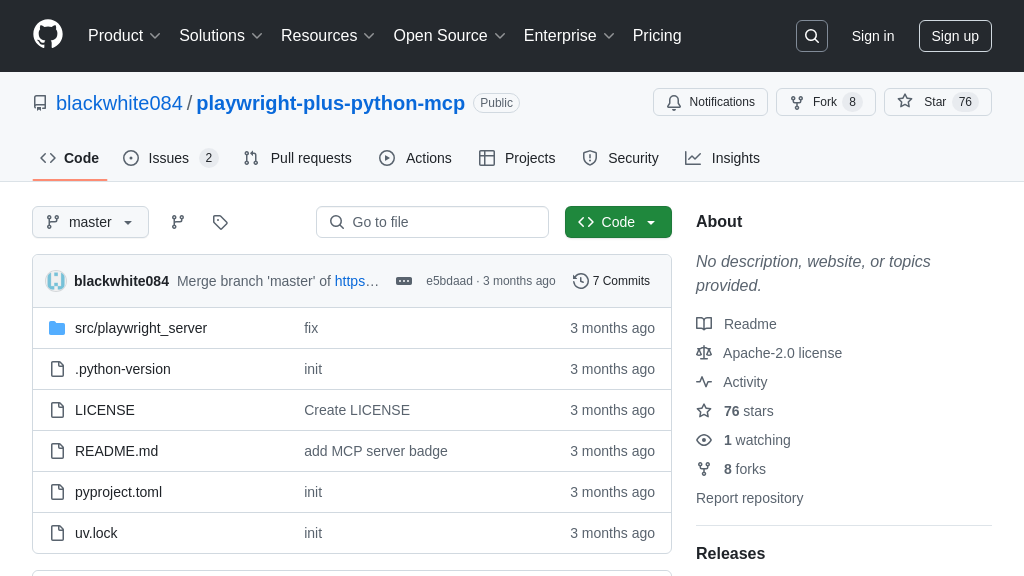

mcp-useas the foundation for tool interaction. If they require a specialized communication protocol feature not yet supported, they can fork the repository, implement the feature themselves, and potentially contribute it back to the main project, benefiting the wider community. - Technical Detail: The codebase is publicly available (typically via platforms like GitHub), allowing for direct inspection, modification, and contribution under an open-source license.

Simplified Server Integration

mcp-use prioritizes ease of use with a straightforward configuration mechanism for connecting to MCP servers. Developers integrate their LangChain-based LLM applications with MCP resources declaratively, mainly through JSON configuration files. This lowers the barrier to entry: basic connections do not require deep knowledge of the underlying protocol. For a remote server, the configuration typically specifies the server's address and port. The library explicitly supports MCP servers running over HTTP, the common standard for web services, which eases integration into existing web application or microservice architectures. Because setup is simple, developers can quickly give their models access to external tools and data sources and shorten the development cycle for context-aware agents.

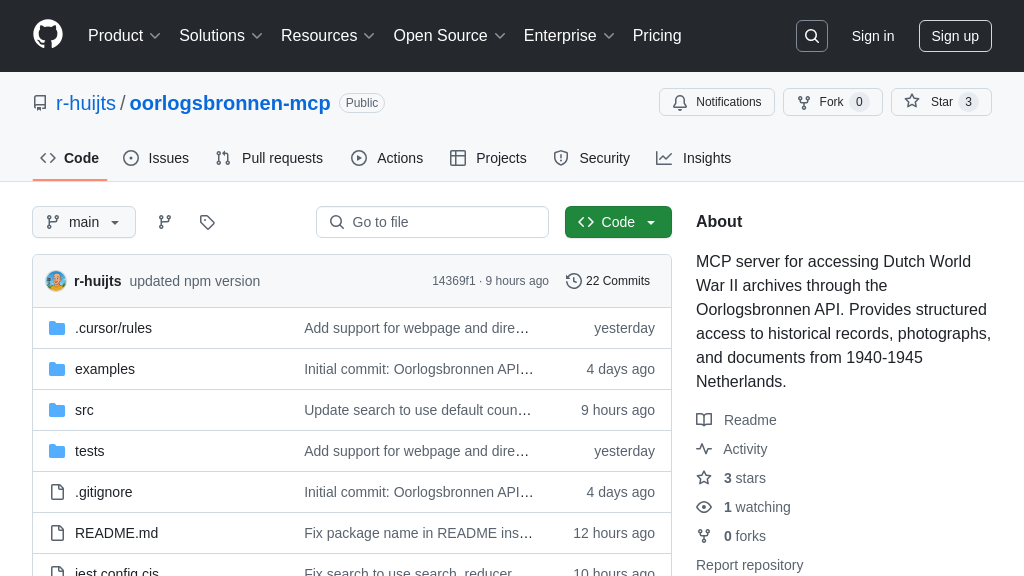

- Usage Scenario: A data scientist wants a LangChain agent to access a real-time financial data feed exposed by an MCP server running at http://mcp.financialdata.com:8080. With mcp-use, they create a configuration file specifying this URL (including the port), and the library establishes the connection and routes the LLM's tool requests to the server.
- Technical Detail: Uses JSON files for configuration parameters such as server endpoint URLs, and includes built-in support for the HTTP/SSE transport common in MCP server implementations.
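The configuration step in this scenario can be sketched with only the Python standard library. The sketch assumes the `mcpServers` layout shown in mcp-use's README, where each named server entry carries a `url` for HTTP connections; confirm the exact schema against the current documentation. `build_http_config`, `write_config`, and the server name `financial_data` are hypothetical, and the URL is the example endpoint from the scenario, whose real path depends on the server.

```python
from __future__ import annotations

import json
from pathlib import Path
from urllib.parse import urlparse


def build_http_config(servers: dict[str, str]) -> dict:
    """Build an mcpServers-style config mapping server names to HTTP endpoints (assumed schema)."""
    entries = {}
    for name, url in servers.items():
        parsed = urlparse(url)
        # Catch malformed endpoints here rather than when the agent first connects.
        if parsed.scheme not in ("http", "https") or not parsed.hostname:
            raise ValueError(f"{name}: expected an absolute http(s) URL, got {url!r}")
        entries[name] = {"url": url}
    return {"mcpServers": entries}


def write_config(config: dict, path: Path) -> None:
    """Serialize the config as indented JSON so it stays easy to review by hand."""
    path.write_text(json.dumps(config, indent=2), encoding="utf-8")


# Hypothetical server name; the URL is the example endpoint from the scenario.
config = build_http_config({"financial_data": "http://mcp.financialdata.com:8080"})
print(json.dumps(config, indent=2))
```

The resulting file is what would be handed to mcp-use's client loader (the project README shows `MCPClient.from_config_file`; check it against the installed version). Validating URLs while generating the config surfaces typos before the agent tries to connect.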