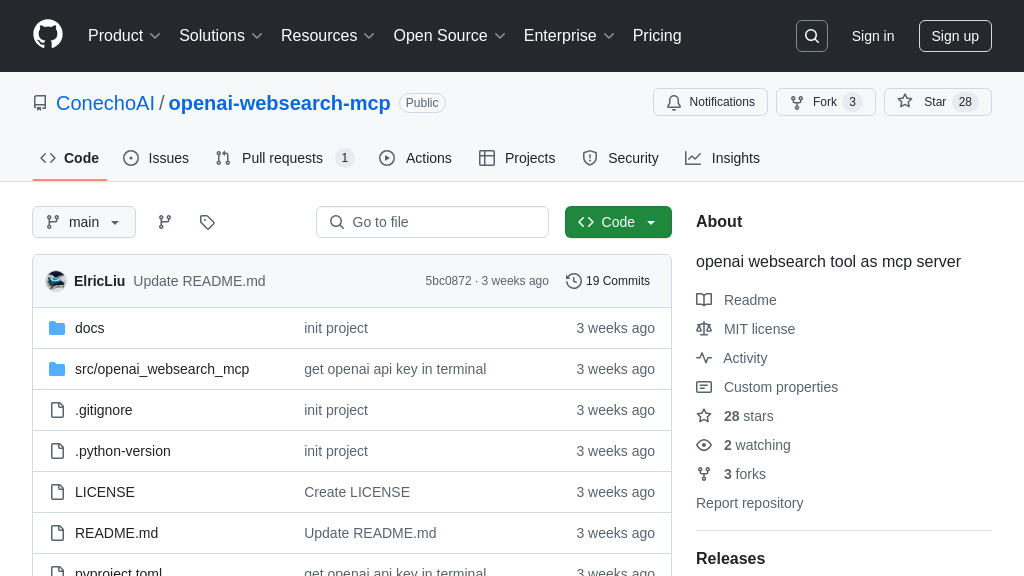

openai-websearch-mcp

The openai-websearch-mcp server gives AI models access to real-time web search through OpenAI, so their responses can draw on current information.

openai-websearch-mcp Solution Overview

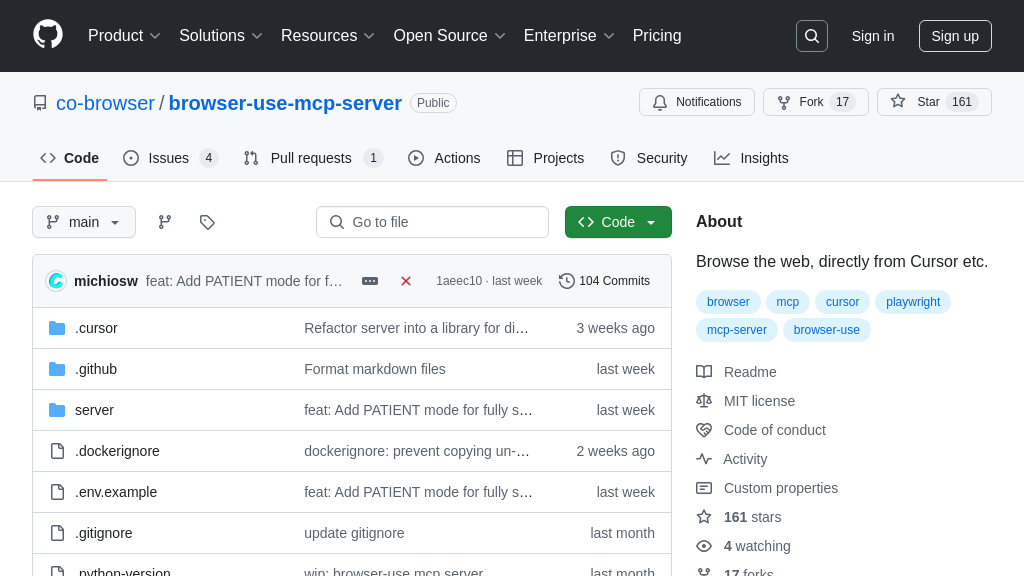

openai-websearch-mcp is an MCP server that equips AI models with real-time web search through OpenAI's web search functionality. It integrates with MCP-compliant clients such as Claude.app and the Zed editor, letting AI assistants draw on up-to-date information beyond their training data.

The server addresses the problem of AI models lacking current information by providing a web_search tool. The tool accepts parameters for search context size and user location, which allow more relevant and localized results. Developers can install and configure the server with uv or pip, and a one-click installation option is available for Claude.

The core value is that AI models can give better-informed, more accurate responses. The server communicates over standard input/output, which keeps it compatible with the rest of the MCP ecosystem.

openai-websearch-mcp Key Capabilities

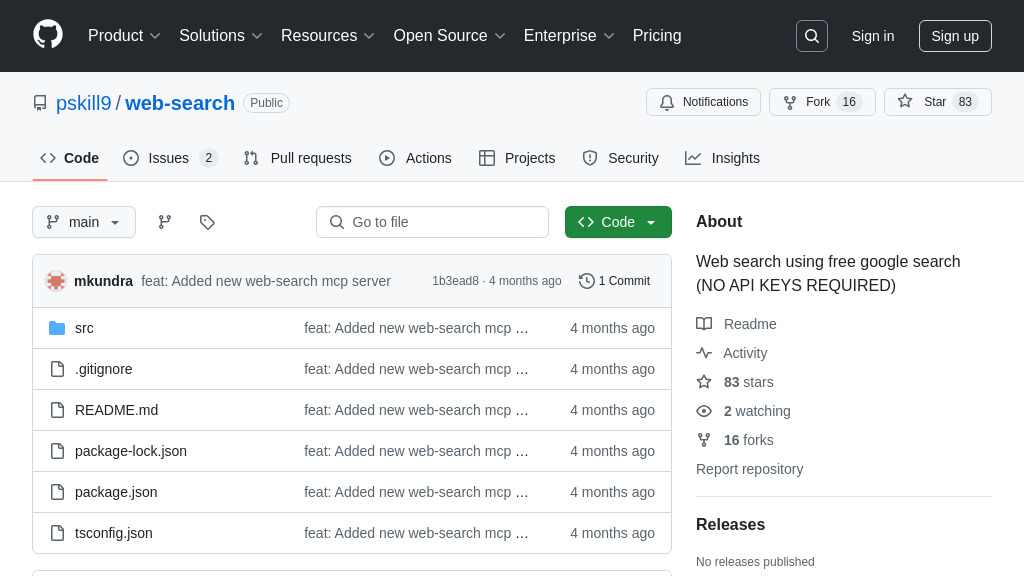

Real-time Web Search Access

openai-websearch-mcp lets AI models use OpenAI's web search functionality directly within a conversation, augmenting their existing knowledge with up-to-date information retrieved from the internet. When a user asks a question that requires current information, the AI model can call the web_search tool to query the web, analyze the search results, and incorporate the findings into its response. This helps keep the AI's answers accurate, relevant, and in line with the latest developments.

For example, if a user asks "What is the current weather in London?", the AI can use the web_search tool to retrieve the latest weather information from a reliable source and answer accurately. The search_context_size argument controls how much search-result context is used, which lets the AI balance speed against relevance.
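As a rough illustration of how search_context_size might be chosen and checked before a call, here is a minimal Python sketch. The helper name `web_search_options` is hypothetical, and the accepted values `low`, `medium`, and `high` (with `medium` as the default) are an assumption based on OpenAI's web search tool options, not something this page confirms.

```python
from typing import Any, Dict

# Assumed set of accepted values, mirroring OpenAI's web search tool options.
ALLOWED_CONTEXT_SIZES = ("low", "medium", "high")


def web_search_options(search_context_size: str = "medium") -> Dict[str, Any]:
    """Return validated options for a web_search call (hypothetical helper)."""
    if search_context_size not in ALLOWED_CONTEXT_SIZES:
        raise ValueError(
            f"search_context_size must be one of {ALLOWED_CONTEXT_SIZES}, "
            f"got {search_context_size!r}"
        )
    return {"search_context_size": search_context_size}


# A quick factual lookup such as the weather can use the smallest context.
weather_options = web_search_options("low")
```

A smaller context size would typically favor speed, while a larger one gives the model more search material to work with.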

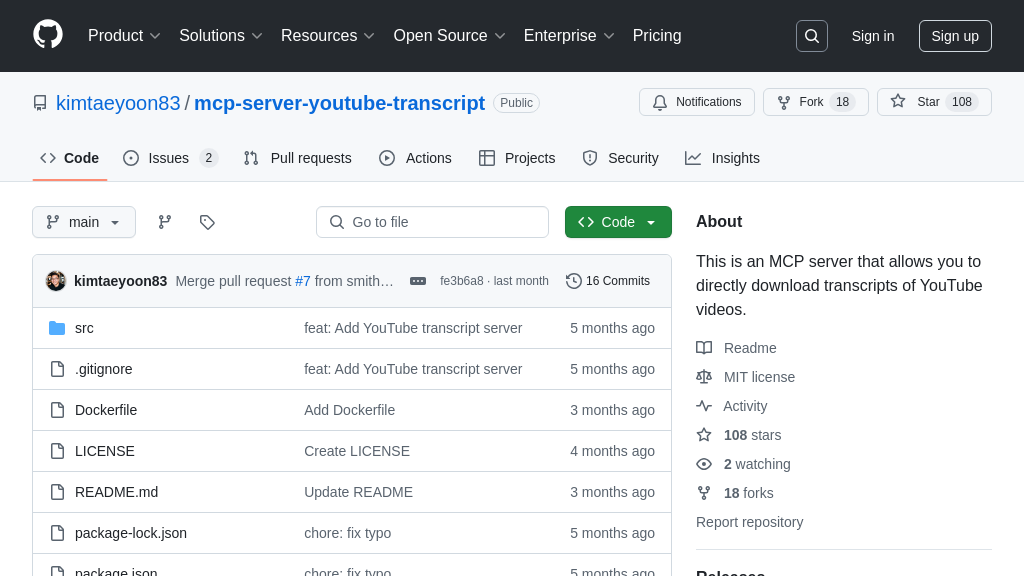

Up-to-Date Information Retrieval

AI models are trained on static datasets, so their knowledge stops at a cutoff date. This MCP server works around that limitation by integrating with OpenAI's web search, so responses can incorporate recent events, data, and trends. This is particularly valuable where information changes rapidly, such as news, financial markets, or scientific discoveries.

Imagine a user asking "What are the latest stock prices for Tesla?" Without real-time web search, the AI would be limited to its training data, which may be outdated. With openai-websearch-mcp, the AI can query the web and return the most current prices available. The user_location parameter enables location-aware searches, which improves the relevance of results.
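To make the request shape concrete, the sketch below builds an MCP `tools/call` message for the web_search tool with a user_location. The JSON-RPC envelope follows the MCP specification; the argument name `input` for the query and the exact `user_location` fields (`type`, `city`, `country`) are assumptions modeled on OpenAI's web search options, and the helper name is hypothetical.

```python
import json


def make_web_search_call(request_id: int, query: str, city: str, country: str) -> str:
    """Serialize a tools/call request as one line of JSON (hypothetical helper)."""
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {
            "name": "web_search",
            "arguments": {
                # Assumed argument name for the search query.
                "input": query,
                # Assumed location shape: an approximate city and country code.
                "user_location": {
                    "type": "approximate",
                    "city": city,
                    "country": country,
                },
            },
        },
    }
    # One JSON object per line suits a stdin/stdout transport.
    return json.dumps(message)


line = make_web_search_call(1, "latest Tesla stock price", "New York", "US")
```

In practice an MCP client library would construct and send this message, so a sketch like this is mainly useful for understanding or debugging the traffic.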

Seamless MCP Integration

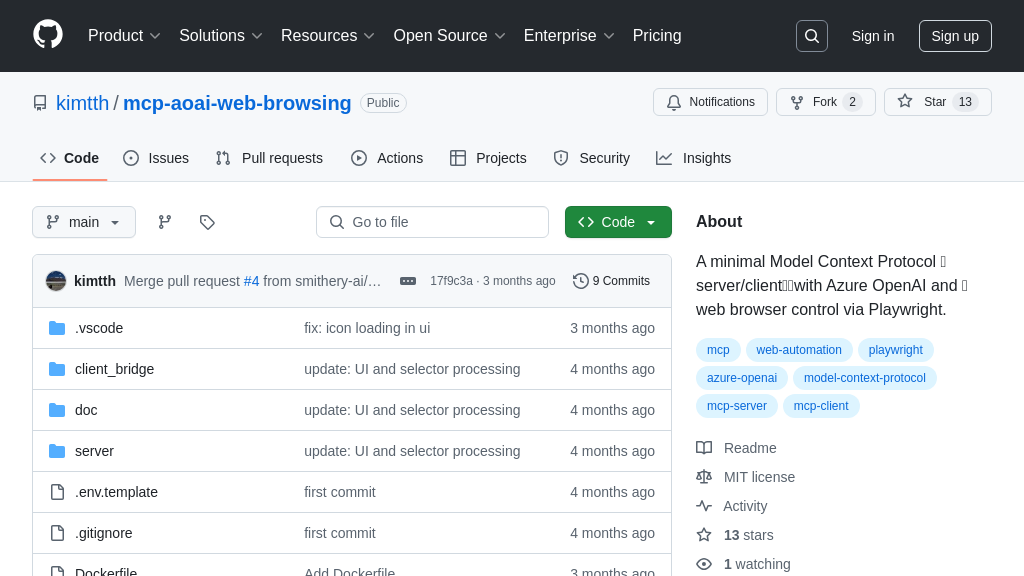

openai-websearch-mcp is designed as a Model Context Protocol (MCP) server, so it works with any MCP-compliant client. Developers can add web search to their AI applications without implementing the underlying search mechanisms themselves. The MCP standard provides a consistent interface between the AI model and the web search service, which simplifies development and reduces the risk of compatibility issues.

The server can be integrated into applications such as Claude.app or the Zed editor by adding a short configuration block that specifies the command and arguments needed to run the server. This lets developers add web search capabilities to their AI tools without significant code changes. Using uvx further simplifies installation and execution.
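The configuration block described above might look like the output of the following Python sketch. It assumes the Claude-style `mcpServers` layout, that the package is runnable as `uvx openai-websearch-mcp`, and that the API key is passed through the `OPENAI_API_KEY` environment variable; other clients such as Zed use a different top-level key, so treat this as illustrative rather than as the project's official configuration.

```python
import json
from typing import Any, Dict


def build_client_config(api_key: str = "your-api-key-here") -> Dict[str, Any]:
    """Build an MCP client configuration entry (assumed Claude-style layout)."""
    return {
        "mcpServers": {
            "openai-websearch-mcp": {
                # uvx runs the package without a separate install step.
                "command": "uvx",
                "args": ["openai-websearch-mcp"],
                # Placeholder value; supply a real key in your own config.
                "env": {"OPENAI_API_KEY": api_key},
            }
        }
    }


config_text = json.dumps(build_client_config(), indent=2)
print(config_text)
```

The printed JSON can be pasted into the client's MCP configuration file, with the placeholder replaced by a real key.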

Technical Implementation

The openai-websearch-mcp server is implemented in Python and uses the OpenAI API for web search. It exposes a web_search tool that AI models can call, and it handles communication with the OpenAI API, including authentication and request formatting. The search context size and user location can be configured to tune the search results. The server can be run with either uvx or pip, which suits different development environments. For debugging, the project's instructions use the MCP inspector to verify that the server works and to troubleshoot issues.
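The request formatting step could plausibly resemble the sketch below, which assembles keyword arguments for the OpenAI Responses API with the `web_search_preview` tool. This is not the server's actual source: the function names, the default model `gpt-4o`, and the way options are mapped are all assumptions made for illustration.

```python
from typing import Any, Dict, Optional


def build_responses_request(
    query: str,
    search_context_size: str = "medium",
    user_location: Optional[Dict[str, str]] = None,
    model: str = "gpt-4o",  # assumed default; the server may use another model
) -> Dict[str, Any]:
    """Assemble keyword arguments for client.responses.create (hypothetical helper)."""
    tool: Dict[str, Any] = {
        "type": "web_search_preview",
        "search_context_size": search_context_size,
    }
    if user_location is not None:
        # OpenAI expects an approximate location, e.g. city and country.
        tool["user_location"] = {"type": "approximate", **user_location}
    return {"model": model, "tools": [tool], "input": query}


def run_search(client: Any, request: Dict[str, Any]) -> str:
    """Send the request with an OpenAI client and return the response text."""
    response = client.responses.create(**request)
    return response.output_text


request = build_responses_request(
    "What is the current weather in London?",
    search_context_size="low",
    user_location={"city": "London", "country": "GB"},
)
```

With the `openai` package installed and `OPENAI_API_KEY` set, `run_search(OpenAI(), request)` would perform the search; passing the client in as a parameter keeps the formatting logic testable without network access.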