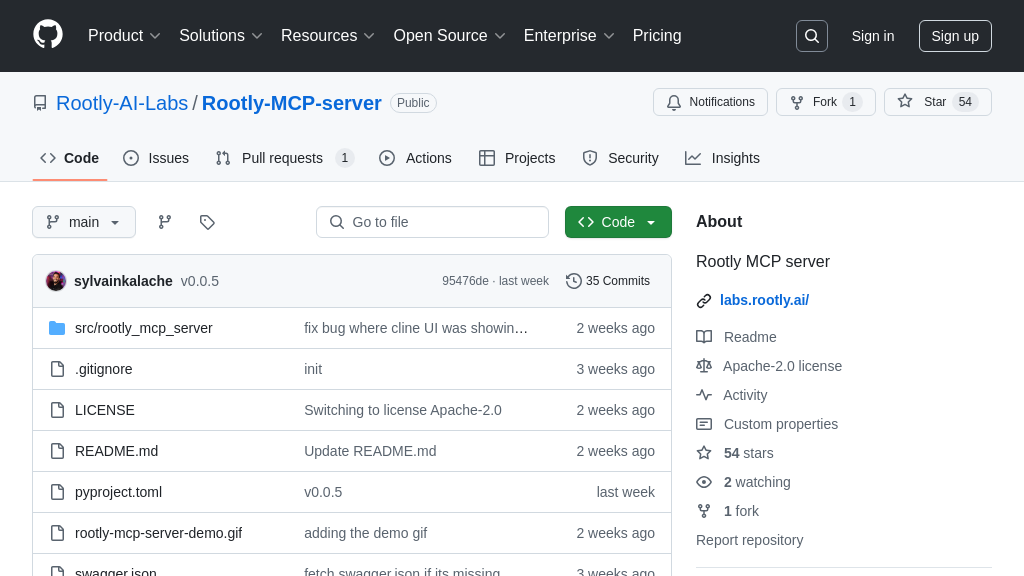

Rootly-MCP-server

Rootly-MCP-server: Resolve incidents in your IDE using AI. An MCP server by Rootly AI Labs.

Rootly-MCP-server Solution Overview

Rootly-MCP-server is an MCP (Model Context Protocol) server that connects AI models to the Rootly API, so incidents can be investigated and resolved from within MCP-compatible editors. It generates MCP tools dynamically from Rootly's OpenAPI specification, giving users access to Rootly's incident management capabilities without leaving their IDE. To avoid overflowing an AI model's context window, the server applies default pagination to incident endpoints. It also limits which API paths are exposed to the AI agent, which improves security and keeps the agent focused on incident work.

The server follows MCP's client-server architecture: the editor acts as the MCP client, and Rootly-MCP-server relays tool calls to the Rootly API. The stated aim is to let developers resolve production incidents in under a minute without leaving their IDE. Getting started requires a Rootly API token and a short server configuration entry in an MCP-compatible editor. The result is a shorter incident management workflow for developers who want to apply AI to system reliability and operations.

Rootly-MCP-server Key Capabilities

Dynamic Tool Generation

Rootly-MCP-server automatically creates MCP tools from Rootly's OpenAPI specification, so AI models can call Rootly's incident management features without developers defining each tool by hand. The server parses the OpenAPI spec and exposes the relevant API endpoints as MCP tools, which reduces the overhead of integrating Rootly into AI-powered workflows. For example, an AI model can use the generated tools to retrieve incident details. Operations such as updating incident status or adding comments are available only when the corresponding API paths are exposed. The server is implemented in Python and uses libraries such as FastAPI to parse the OpenAPI spec and create the corresponding MCP tool definitions.
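The idea of deriving tools from an OpenAPI document can be illustrated with a minimal sketch. This is not the server's actual code: the function names `tool_name` and `generate_tools`, the naming scheme, and the `SAMPLE_SPEC` document are all hypothetical. The only assumption taken from MCP itself is that a tool is described by a name, a description, and a JSON Schema for its inputs.

```python
from typing import Any, Dict, List

HTTP_METHODS = {"get", "post", "put", "patch", "delete"}


def tool_name(method: str, path: str) -> str:
    """Build a tool name from method and path (hypothetical naming scheme)."""
    parts = [segment.strip("{}") for segment in path.strip("/").split("/") if segment]
    return "_".join([method.lower(), *parts])


def generate_tools(spec: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Turn each OpenAPI operation into a tool definition dict."""
    tools = []
    for path, operations in spec.get("paths", {}).items():
        for method, operation in operations.items():
            if method.lower() not in HTTP_METHODS:
                continue  # skip non-operation keys such as "parameters"
            properties: Dict[str, Any] = {}
            required: List[str] = []
            for param in operation.get("parameters", []):
                properties[param["name"]] = param.get("schema", {"type": "string"})
                if param.get("required"):
                    required.append(param["name"])
            tools.append(
                {
                    "name": tool_name(method, path),
                    "description": operation.get("summary", f"{method.upper()} {path}"),
                    "inputSchema": {
                        "type": "object",
                        "properties": properties,
                        "required": required,
                    },
                }
            )
    return tools


# A tiny, made-up OpenAPI fragment used only for illustration.
SAMPLE_SPEC = {
    "paths": {
        "/incidents": {"get": {"summary": "List incidents"}},
        "/incidents/{incident_id}/alerts": {
            "get": {
                "summary": "List alerts for an incident",
                "parameters": [
                    {
                        "name": "incident_id",
                        "in": "path",
                        "required": True,
                        "schema": {"type": "string"},
                    }
                ],
            }
        },
    }
}

if __name__ == "__main__":
    for tool in generate_tools(SAMPLE_SPEC):
        print(tool["name"], "-", tool["description"])
```

Because the tool list is computed from the spec, adding an endpoint to the spec is enough to make a new tool appear; no per-endpoint code is needed.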

Contextual Incident Pagination

To avoid overwhelming AI models with data, Rootly-MCP-server applies default pagination to incident endpoints. By default it returns 10 items per page, so the model receives a manageable chunk of information rather than a full incident list. Without pagination, a large list could exceed the model's context window and lead to incomplete or inaccurate analysis. For instance, when an AI model requests the list of open incidents, the server returns only the first 10, along with information on how to retrieve subsequent pages. This behavior is implemented in the server's Python code, in the handling of /incidents endpoint requests, where pagination parameters are applied to the Rootly API calls.
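A minimal sketch of how default pagination might be applied before a request is forwarded is shown here. It is an illustration under stated assumptions, not the server's implementation: the helper `with_default_pagination` is hypothetical, and the query parameter names `page[size]` and `page[number]` are assumed for the example and should be checked against the Rootly API documentation. Only the default of 10 items per page comes from the description above.

```python
from typing import Any, Dict, Optional

DEFAULT_PAGE_SIZE = 10  # default page size described above


def with_default_pagination(
    path: str, params: Optional[Dict[str, Any]] = None
) -> Dict[str, Any]:
    """Return query parameters with pagination defaults for incident endpoints.

    Parameter names are assumptions made for this sketch.
    """
    merged = dict(params or {})  # copy so the caller's dict is not mutated
    if path.startswith("/incidents"):
        # setdefault keeps any value the caller supplied explicitly
        merged.setdefault("page[size]", DEFAULT_PAGE_SIZE)
        merged.setdefault("page[number]", 1)
    return merged


if __name__ == "__main__":
    print(with_default_pagination("/incidents"))
    print(with_default_pagination("/incidents", {"page[number]": 2}))
```

Using `setdefault` means the AI agent can still ask for a later page or a different page size, while requests that say nothing about pagination are capped at the default.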

API Path Security Limiting

Rootly-MCP-server restricts which API paths are exposed to the AI agent, both to improve security and to keep the model focused. By default, only the /incidents and /incidents/{incident_id}/alerts endpoints are exposed, which reduces the risk of unintended or malicious actions and prevents the model from reaching sensitive data or performing unauthorized operations in Rootly. A smaller set of paths also simplifies the model's decision-making, since it only sees the endpoints most relevant to incident resolution. For example, the model can retrieve incident details and associated alerts but cannot modify user permissions or access other administrative functions. The allowed_paths variable in src/rootly_mcp_server/server.py defines the permitted API paths, providing a single control point for managing access.
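The allowlist approach can be sketched as a filter applied to the OpenAPI document before any tools are generated. This is a hedged illustration only: the function `filter_spec_paths`, the `ALLOWED_PATHS` constant, and the sample spec are hypothetical, and the real `allowed_paths` variable in server.py may have a different shape. The two default paths are the ones named above.

```python
from typing import Any, Dict, Iterable

# Default paths named in the text; the list form is an assumption.
ALLOWED_PATHS = ["/incidents", "/incidents/{incident_id}/alerts"]


def filter_spec_paths(
    spec: Dict[str, Any], allowed_paths: Iterable[str] = ALLOWED_PATHS
) -> Dict[str, Any]:
    """Return a copy of the spec containing only allowlisted paths."""
    allowed = set(allowed_paths)
    filtered = dict(spec)  # shallow copy; the original spec is left unchanged
    filtered["paths"] = {
        path: operations
        for path, operations in spec.get("paths", {}).items()
        if path in allowed  # exact match on the path template
    }
    return filtered


# A made-up spec fragment for illustration.
FULL_SPEC = {
    "openapi": "3.0.0",
    "paths": {
        "/incidents": {"get": {"summary": "List incidents"}},
        "/incidents/{incident_id}/alerts": {"get": {"summary": "List alerts"}},
        "/users": {"get": {"summary": "List users"}},
    },
}

if __name__ == "__main__":
    print(sorted(filter_spec_paths(FULL_SPEC)["paths"]))
```

Filtering the spec up front means a path that is not on the list never becomes a tool, so the agent cannot call it even if prompted to.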