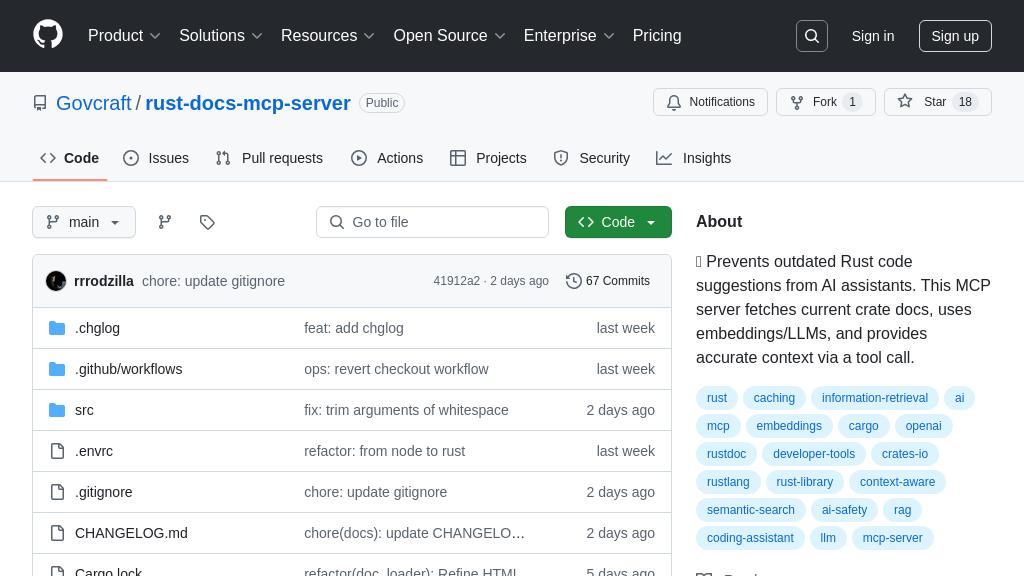

rust-docs-mcp-server

Enhance AI coding with rust-docs-mcp-server: up-to-date Rust crate documentation for accurate code generation.

rust-docs-mcp-server Solution Overview

The Rust Docs MCP Server gives AI coding assistants up-to-date knowledge of specific Rust crates. As an MCP server, it addresses a common problem: AI models rely on outdated information and therefore make inaccurate code suggestions. The server fetches the documentation for a specified crate, generates embeddings for it, and exposes a query_rust_docs tool.

When invoked, the tool answers questions about the crate's API and usage, drawing directly from the current documentation. It first uses semantic search to find the most relevant part of the documentation, then uses an LLM to summarize that part into a concise answer. Grounding answers in the documentation improves the accuracy and relevance of AI-generated code, reducing manual corrections and speeding up development. The server caches documentation and embeddings so that later runs start faster. By offering a focused, current knowledge source, the Rust Docs MCP Server helps AI coding assistants keep pace with the rapidly evolving Rust ecosystem. It communicates with clients over standard input/output (stdio) using the Model Context Protocol.

rust-docs-mcp-server Key Capabilities

Accurate Crate Knowledge

The rust-docs-mcp-server provides AI coding assistants with up-to-date information about specific Rust crates. It addresses a common problem: AI models rely on outdated training data and so produce incorrect or irrelevant code suggestions, especially in fast-moving ecosystems like Rust's. Each server instance focuses on a single crate, so the AI can consult current API details and usage patterns taken directly from that crate's documentation. This targeted approach improves the accuracy and reliability of AI-generated code and reduces the need for manual corrections. For example, if a developer is using the reqwest crate, the AI can query the server for the current way to make HTTP requests, so the generated code matches the crate version the server was configured with rather than whatever version appeared in the model's training data.

Semantic Documentation Search

This server uses semantic search to locate the documentation section most relevant to a given query. When an AI coding assistant asks a question about a crate, the server generates an embedding of the question using OpenAI's text-embedding-3-small model. It then compares this embedding with pre-computed embeddings of the crate's documentation chunks and selects the chunk with the highest semantic similarity. Because the comparison is based on meaning rather than exact wording, it goes beyond simple keyword matching and returns more contextually relevant results. For instance, if a developer asks "How do I handle errors with Serde?", the semantic search can identify documentation sections that discuss error handling in Serde even if the exact words "handle errors" do not appear.
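The comparison step can be sketched as follows. The description does not name the similarity measure, so this sketch assumes cosine similarity, a common choice for text embeddings. The DocChunk type, the function names, and the toy 3-dimensional vectors are hypothetical stand-ins; real text-embedding-3-small vectors have 1,536 dimensions.

```rust
/// A documentation chunk paired with its pre-computed embedding (hypothetical type).
struct DocChunk {
    path: String,
    embedding: Vec<f32>,
}

/// Cosine similarity between two equal-length vectors.
/// Returns 0.0 if either vector has zero magnitude.
fn cosine_similarity(a: &[f32], b: &[f32]) -> f32 {
    assert_eq!(a.len(), b.len(), "embedding dimensions must match");
    let dot: f32 = a.iter().zip(b).map(|(x, y)| x * y).sum();
    let norm_a = a.iter().map(|x| x * x).sum::<f32>().sqrt();
    let norm_b = b.iter().map(|x| x * x).sum::<f32>().sqrt();
    if norm_a == 0.0 || norm_b == 0.0 {
        return 0.0;
    }
    dot / (norm_a * norm_b)
}

/// Returns the chunk most similar to the query embedding, with its score,
/// or None if there are no chunks.
fn best_match<'a>(query: &[f32], chunks: &'a [DocChunk]) -> Option<(&'a DocChunk, f32)> {
    chunks
        .iter()
        .map(|c| (c, cosine_similarity(query, &c.embedding)))
        .max_by(|a, b| a.1.total_cmp(&b.1))
}

fn main() {
    // Toy vectors; a real server would load these from its embedding cache.
    let chunks = vec![
        DocChunk { path: "serde/de/trait.Error.html".into(), embedding: vec![0.9, 0.1, 0.0] },
        DocChunk { path: "serde/ser/trait.Serialize.html".into(), embedding: vec![0.1, 0.9, 0.2] },
    ];
    // Pretend embedding of "How do I handle errors with Serde?".
    let query = [0.8, 0.2, 0.1];
    if let Some((chunk, score)) = best_match(&query, &chunks) {
        println!("best match: {} (score {:.3})", chunk.path, score);
    }
}
```

Because the document embeddings are pre-computed, a query under this sketch costs one embedding call plus a linear scan over the chunks, which stays cheap for a single crate's documentation.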

LLM-Powered Summarization

To provide concise, informative answers, the rust-docs-mcp-server uses LLM summarization. After semantic search identifies the most relevant documentation chunk, the server sends the user's question and the content of that chunk to OpenAI's gpt-4o-mini-2024-07-18 model, prompting it to answer based solely on the provided context. This keeps the answer grounded in the documentation and reduces the risk of the LLM hallucinating or falling back on general information. The resulting answer is returned to the MCP client, giving the AI coding assistant a clear, focused response. For example, if the documentation chunk describes a specific method's parameters, the LLM can concisely summarize the method's purpose and the expected input for each parameter.
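A minimal sketch of how the question and the selected chunk might be packaged for the model is shown below. Only the model name comes from the description above; the ChatMessage and SummaryRequest types, the function name, and the prompt wording are hypothetical, and the actual HTTP call to OpenAI is omitted so the example stays dependency-free.

```rust
/// One message in a chat-style LLM request (hypothetical type).
#[derive(Debug)]
struct ChatMessage {
    role: &'static str,
    content: String,
}

/// Provider-agnostic description of the summarization request (hypothetical type).
#[derive(Debug)]
struct SummaryRequest {
    model: &'static str,
    messages: Vec<ChatMessage>,
}

/// Model name as stated in the description.
const SUMMARY_MODEL: &str = "gpt-4o-mini-2024-07-18";

/// Builds a request asking the model to answer `question` using only `context`
/// (the best-matching documentation chunk). The prompt text is illustrative,
/// not the server's actual prompt.
fn build_summary_request(crate_name: &str, question: &str, context: &str) -> SummaryRequest {
    let system = format!(
        "You are an expert on the Rust crate `{crate_name}`. \
         Answer the user's question using ONLY the documentation provided. \
         If the documentation does not contain the answer, say so."
    );
    let user = format!("Documentation:\n{context}\n\nQuestion: {question}");
    SummaryRequest {
        model: SUMMARY_MODEL,
        messages: vec![
            ChatMessage { role: "system", content: system },
            ChatMessage { role: "user", content: user },
        ],
    }
}

fn main() {
    let req = build_summary_request(
        "reqwest",
        "How do I set a request timeout?",
        "ClientBuilder::timeout enables a total request timeout ...",
    );
    println!("model: {}", req.model);
    for msg in &req.messages {
        println!("[{}] {}", msg.role, msg.content);
    }
}
```

Putting the "answer only from this documentation" instruction in the system message and the chunk in the user message is one common way to keep answers grounded; the server's real prompt may be structured differently.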

Caching for Efficiency

The rust-docs-mcp-server implements a caching mechanism to minimize the overhead of documentation retrieval and embedding generation. When the server is run for the first time with a specific crate version and feature set, it downloads the documentation, generates embeddings, and stores them in the XDG data directory. Subsequent runs will load this cached data, significantly reducing startup time and eliminating the need to repeatedly query the OpenAI API for embeddings. The cache is organized by crate name, version requirement, and a hash of the enabled features, ensuring that different configurations are cached separately. This caching strategy makes the server efficient and cost-effective, especially for frequently used crates. If the cache is missing, corrupted, or cannot be decoded, the server will automatically regenerate the documentation and embeddings.
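The cache lookup might look roughly like the sketch below. The directory layout, file name, and helper names are hypothetical; only the grouping by crate name, version requirement, and feature hash, and the fallback to regeneration when the cache is missing or undecodable, come from the description. The sketch uses std's DefaultHasher for brevity, and toy strings stand in for serialized documentation and embeddings.

```rust
use std::collections::hash_map::DefaultHasher;
use std::env;
use std::fs;
use std::hash::{Hash, Hasher};
use std::io;
use std::path::{Path, PathBuf};

/// XDG data directory: $XDG_DATA_HOME if set and non-empty,
/// otherwise $HOME/.local/share (the XDG Base Directory default).
fn xdg_data_home() -> Option<PathBuf> {
    match env::var_os("XDG_DATA_HOME") {
        Some(dir) if !dir.is_empty() => Some(PathBuf::from(dir)),
        _ => env::var_os("HOME").map(|home| PathBuf::from(home).join(".local/share")),
    }
}

/// Hashes the enabled feature set. Features are sorted and de-duplicated first,
/// so ["a", "b"] and ["b", "a"] map to the same cache entry.
/// NOTE: DefaultHasher output is not guaranteed stable across Rust releases;
/// a persistent cache would want a stable hash (e.g. SHA-256) instead.
fn features_hash(features: &[&str]) -> String {
    let mut sorted: Vec<&str> = features.to_vec();
    sorted.sort_unstable();
    sorted.dedup();
    let mut hasher = DefaultHasher::new();
    sorted.hash(&mut hasher);
    format!("{:016x}", hasher.finish())
}

/// Hypothetical layout:
/// <data>/rust-docs-mcp-server/<crate>/<version_req>/<features_hash>/embeddings.bin
fn cache_file(data_home: &Path, crate_name: &str, version_req: &str, features: &[&str]) -> PathBuf {
    data_home
        .join("rust-docs-mcp-server")
        .join(crate_name)
        .join(version_req)
        .join(features_hash(features))
        .join("embeddings.bin")
}

/// Returns the cached value if the file exists and decodes; otherwise calls
/// `regenerate`, writes the result to the cache, and returns it.
fn load_or_regenerate<T>(
    path: &Path,
    decode: impl Fn(&[u8]) -> Option<T>,
    encode: impl Fn(&T) -> Vec<u8>,
    regenerate: impl FnOnce() -> T,
) -> io::Result<T> {
    if let Ok(bytes) = fs::read(path) {
        if let Some(value) = decode(&bytes) {
            return Ok(value); // cache hit
        }
        // File exists but is corrupt or undecodable: fall through and rebuild.
    }
    let value = regenerate();
    if let Some(parent) = path.parent() {
        fs::create_dir_all(parent)?;
    }
    fs::write(path, encode(&value))?;
    Ok(value)
}

fn main() -> io::Result<()> {
    println!("default data dir: {:?}", xdg_data_home());
    // Demo under the temp dir so the real XDG data directory is untouched.
    let path = cache_file(&env::temp_dir().join("cache-demo"), "serde", "1.0", &["derive"]);
    let _ = fs::remove_file(&path); // start from an empty cache
    let decode = |b: &[u8]| String::from_utf8(b.to_vec()).ok();
    let encode = |s: &String| s.as_bytes().to_vec();
    // First call misses and regenerates; second call is served from disk.
    let first = load_or_regenerate(&path, decode, encode, || "embeddings v1".to_string())?;
    let second = load_or_regenerate(&path, decode, encode, || "never used".to_string())?;
    assert_eq!(first, second);
    println!("cache file: {}", path.display());
    Ok(())
}
```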

MCP Integration and Tooling

The server integrates into the MCP ecosystem by providing a standardized tool called query_rust_docs. This tool lets AI coding assistants ask specific questions about the crate's API or usage and receive answers derived directly from the current documentation. The server communicates using the Model Context Protocol over standard input/output (stdio). The input schema for query_rust_docs requires a "question" field; the output is a text response containing the LLM's answer, generated from the relevant documentation context and prefixed with "From <crate_name> docs:". The server also exposes a resource, crate://<crate_name>, that returns the name of the Rust crate this server instance is configured for. Together, the tool and the resource give AI coding assistants direct access to the server's knowledge base.
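The tool's contract can be illustrated with a small std-only sketch. The required "question" field, the "From <crate_name> docs:" prefix, and the crate://<crate_name> URI come from the description above; the schema's description text, the validation helper, the function names, and the space after the colon are assumptions, and the JSON-RPC message handling over stdio is left out.

```rust
/// Input schema for query_rust_docs as JSON Schema. Only the required
/// "question" field is from the description; the description text is illustrative.
const QUERY_RUST_DOCS_SCHEMA: &str = r#"{
  "type": "object",
  "properties": {
    "question": {
      "type": "string",
      "description": "A specific question about the crate's API or usage."
    }
  },
  "required": ["question"]
}"#;

/// URI of the resource that reports which crate this instance serves.
fn crate_resource_uri(crate_name: &str) -> String {
    format!("crate://{crate_name}")
}

/// Prefixes the LLM's answer to form the tool's text output.
fn format_tool_output(crate_name: &str, answer: &str) -> String {
    format!("From {crate_name} docs: {answer}")
}

/// Hypothetical validation step: rejects an empty or whitespace-only question
/// before any embedding or LLM call is made.
fn validate_question(question: &str) -> Result<&str, String> {
    let q = question.trim();
    if q.is_empty() {
        Err("missing required field: question".into())
    } else {
        Ok(q)
    }
}

fn main() {
    println!("{}", QUERY_RUST_DOCS_SCHEMA);
    println!("{}", crate_resource_uri("reqwest"));
    match validate_question("  How do I set a timeout?  ") {
        Ok(q) => println!("{}", format_tool_output("reqwest", &format!("(answer to: {q})"))),
        Err(e) => eprintln!("{e}"),
    }
}
```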