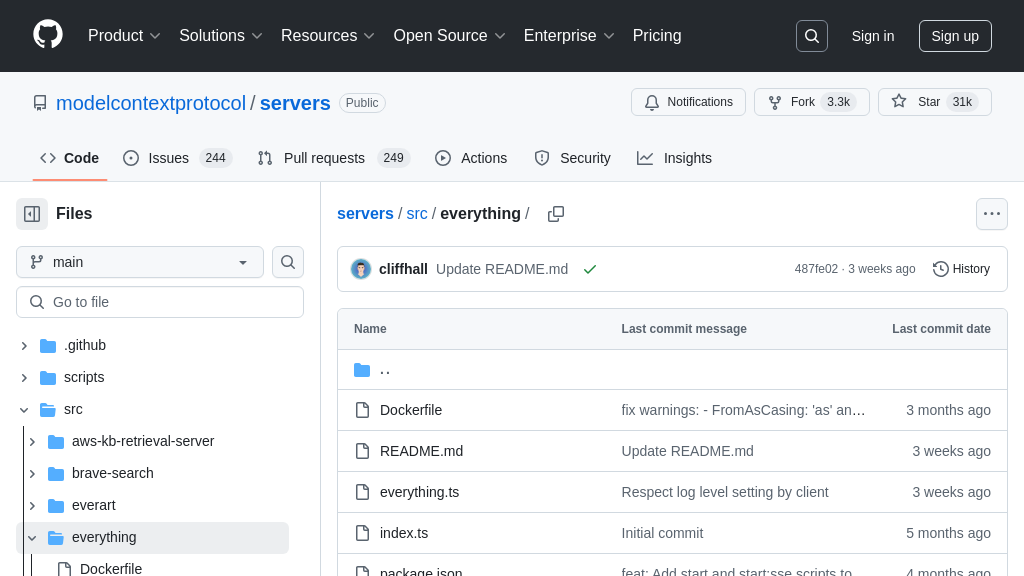

server-everything

server-everything is an MCP test server that showcases all MCP protocol features for client developers. It is a test fixture and is not intended for practical use.

server-everything Solution Overview

server-everything is an MCP test server built to exercise the full range of MCP protocol capabilities. Developers building MCP clients can use it to test and validate their implementations. Its tools include simple echo functions, arithmetic operations, long-running processes that emit progress notifications, and LLM sampling.
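To make the tool interaction concrete, here is a minimal sketch of the JSON-RPC 2.0 request a client might send to invoke a tool through MCP's tools/call method. The tool name "echo" and its "message" argument are assumptions for illustration; a client should read the real names and input schemas from the server's tool listing.

```python
import itertools
import json

# Monotonically increasing request ids, as JSON-RPC expects unique ids per request.
_ids = itertools.count(1)


def make_tool_call(name: str, arguments: dict) -> dict:
    """Build a JSON-RPC 2.0 request for the MCP tools/call method."""
    return {
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }


# "echo" and "message" are assumed names, used here only as an example.
request = make_tool_call("echo", {"message": "hello"})
print(json.dumps(request, indent=2))
```

The sketch only builds the message. Sending it over stdio or another transport, and handling the response, is left to the client under test.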

Beyond tools, it offers resources with pagination, subscription support, and templating, alongside example prompts that demonstrate argument handling and multi-turn conversations. The server also sends random log messages and demonstrates content annotations. By testing against this server, developers can check that their MCP clients handle each of these features in line with the MCP standard. It can be run with npx @modelcontextprotocol/server-everything.

server-everything Key Capabilities

Comprehensive MCP Feature Showcase

server-everything is a test server that demonstrates the full range of MCP protocol capabilities through a suite of tools, resources, and prompts. Client developers can test their implementations against each feature to confirm compatibility and build an understanding of the protocol. With features such as LLM sampling, resource handling with pagination and updates, and annotated messages, it serves as a sandbox for exploring and validating MCP client behavior.

Dynamic Resource Management

server-everything demonstrates dynamic resource management in MCP. It serves 100 test resources, split evenly between plaintext and binary formats and accessible via URI patterns. The resources support pagination, so clients can retrieve them in manageable chunks. The server also demonstrates resource updates, automatically pushing changes to subscribed clients every 5 seconds. This shows how MCP handles real-time data updates, which matters when AI models need access to frequently changing data such as stock prices or sensor readings. The resource templates further demonstrate the flexibility of MCP in defining resource structures.
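The pagination behavior can be sketched as a client loop that follows a cursor until the server stops returning one. The list_resources function below is a local stand-in for a real resources/list call, not the server's code. The page size of 10, the test://resource/{n} URIs, and the rule that even-numbered resources are plaintext are all assumptions made for illustration.

```python
from typing import Optional

PAGE_SIZE = 10  # assumed page size; the text does not state one

# Stand-in for the 100 test resources. URIs and the even/odd split are hypothetical.
RESOURCES = [
    {
        "uri": f"test://resource/{n}",
        "mimeType": "text/plain" if n % 2 == 0 else "application/octet-stream",
    }
    for n in range(1, 101)
]


def list_resources(cursor: Optional[str] = None) -> dict:
    """Simulate one paginated resources/list response."""
    start = int(cursor) if cursor else 0
    result = {"resources": RESOURCES[start:start + PAGE_SIZE]}
    if start + PAGE_SIZE < len(RESOURCES):
        # A nextCursor is included only while more pages remain.
        result["nextCursor"] = str(start + PAGE_SIZE)
    return result


def fetch_all() -> list:
    """Follow nextCursor until it is absent, collecting every resource."""
    collected, cursor = [], None
    while True:
        result = list_resources(cursor)
        collected.extend(result["resources"])
        cursor = result.get("nextCursor")
        if cursor is None:
            return collected


all_resources = fetch_all()
plaintext = [r for r in all_resources if r["mimeType"] == "text/plain"]
```

A real client should treat the cursor as an opaque string and pass it back unchanged. The integer offset used here is only a convenience for the simulation.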

Advanced Prompt Handling

The server provides both simple and complex prompts to illustrate how MCP manages interactions with AI models. The simple_prompt demonstrates a basic message exchange, while the complex_prompt showcases argument handling, including required and optional parameters such as temperature and style. This helps client developers understand how to structure prompts and handle different types of inputs. The complex_prompt also returns a multi-turn conversation with images, demonstrating multi-modal interactions. This is particularly valuable for AI applications that need fine control over prompt parameters and must handle diverse content types.
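A client can check supplied arguments against a prompt's declared arguments before requesting it. The following is a minimal sketch under assumptions: the prompt definition is written by hand rather than fetched from the server, and treating temperature as required and style as optional is a guess, since the text does not say which is which. The check_arguments helper is hypothetical.

```python
# Hand-written prompt definition; which argument is required is an assumption.
COMPLEX_PROMPT = {
    "name": "complex_prompt",
    "arguments": [
        {"name": "temperature", "required": True},
        {"name": "style", "required": False},
    ],
}


def check_arguments(prompt: dict, supplied: dict) -> list:
    """Return a list of problems; an empty list means the arguments are acceptable."""
    declared = {arg["name"]: arg for arg in prompt["arguments"]}
    problems = []
    for name, spec in declared.items():
        if spec.get("required") and name not in supplied:
            problems.append(f"missing required argument: {name}")
    for name in supplied:
        if name not in declared:
            problems.append(f"unknown argument: {name}")
    return problems


ok = check_arguments(COMPLEX_PROMPT, {"temperature": "0.7"})
missing = check_arguments(COMPLEX_PROMPT, {"style": "formal"})
```

Returning a list of problems instead of raising on the first one lets a client report every issue to the user at once.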

Annotated Message Demonstration

server-everything demonstrates how annotations provide metadata about content in MCP. The annotatedMessage tool generates messages with varying annotations, including priority levels and audience targeting (user, assistant). Error messages are marked with high priority and are visible to both the user and the assistant, while debug messages have low priority and are intended primarily for the assistant. This shows how annotations can control the way a client presents and handles content. For example, a client could use the priority annotation to decide which messages to display to the user, or filter messages by intended audience, allowing more context-aware interactions between AI models and users.
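The filtering described above can be sketched as a small client-side function. This is an illustration under assumptions, not the server's or any SDK's code: the sample messages, the numeric priority values, the 0.5 threshold, and the visible_to_user helper are all hypothetical.

```python
def visible_to_user(items: list, min_priority: float = 0.5) -> list:
    """Keep items addressed to the user whose priority meets the threshold."""
    shown = []
    for item in items:
        annotations = item.get("annotations", {})
        # Design choice for this sketch: a missing audience means everyone,
        # and a missing priority is treated as the lowest priority.
        audience = annotations.get("audience", ["user", "assistant"])
        priority = annotations.get("priority", 0.0)
        if "user" in audience and priority >= min_priority:
            shown.append(item)
    return shown


# Sample content items with assumed annotation values.
messages = [
    {
        "type": "text",
        "text": "Error: operation failed",
        "annotations": {"priority": 1.0, "audience": ["user", "assistant"]},
    },
    {
        "type": "text",
        "text": "Debug: cache hit",
        "annotations": {"priority": 0.3, "audience": ["assistant"]},
    },
]

shown = visible_to_user(messages)
```

With these sample values, only the error message passes the filter, because the debug message is both below the threshold and not addressed to the user.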