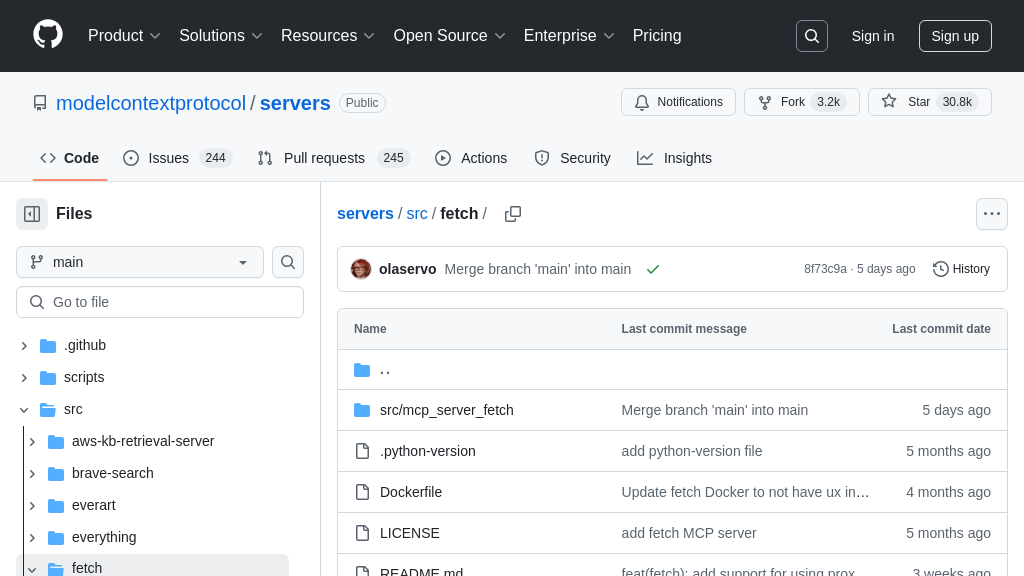

server-fetch

server-fetch is a Model Context Protocol (MCP) server that lets AI models fetch web content, converting HTML to markdown so the models can consume it easily.

server-fetch Solution Overview

server-fetch is an MCP server that lets AI models retrieve and process web content. It gives LLMs access to up-to-date information beyond their training data by fetching content from URLs and converting the HTML into more digestible markdown. Its core tool, fetch, takes a URL plus optional start_index and max_length parameters, so a model can read a specific portion of a page instead of the whole document.
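For a concrete picture of what a fetch call carries, this sketch builds the JSON-RPC 2.0 `tools/call` message that an MCP client sends when a model invokes the tool. In practice an MCP client library assembles this message for you; the helper name `build_fetch_call`, the example URL, and the window size of 5000 are illustrative assumptions, not values taken from server-fetch.

```python
import json


def build_fetch_call(request_id: int, url: str, start_index: int = 0,
                     max_length: int = 5000) -> str:
    """Serialize a JSON-RPC 2.0 'tools/call' request that invokes the fetch tool."""
    request = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {
            "name": "fetch",
            "arguments": {
                "url": url,
                "start_index": start_index,  # character offset to start from
                "max_length": max_length,    # upper bound on characters returned
            },
        },
    }
    return json.dumps(request)


# Ask for the second 5000-character window of a (hypothetical) article.
print(build_fetch_call(1, "https://example.com/article", start_index=5000))
```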

The server plugs into the MCP client-server architecture: an MCP client launches it and exposes the fetch tool to the model, which can then request web content as needed. Because all web access goes through one standard interface, the same server supports tasks such as research, content summarization, and real-time information retrieval. It can be installed with pip or uv, and it offers options for robots.txt compliance, user-agent configuration, and proxy settings.
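A minimal sketch of how an MCP client might be told to launch the server, assuming the package is published as `mcp-server-fetch` (runnable directly with `uvx`) and, after a pip install, importable as the module `mcp_server_fetch`. The `mcpServers` layout follows the JSON configuration used by Claude Desktop-style clients; other clients may use a different format, and the helper name `fetch_server_config` is hypothetical.

```python
import json


def fetch_server_config(launcher: str = "uvx") -> dict:
    """Return a client configuration entry that starts server-fetch over stdio.

    launcher="uvx" runs the published package without a separate install step;
    launcher="pip" assumes `pip install mcp-server-fetch` has already been run.
    """
    if launcher == "uvx":
        command, args = "uvx", ["mcp-server-fetch"]
    elif launcher == "pip":
        command, args = "python", ["-m", "mcp_server_fetch"]
    else:
        raise ValueError(f"unknown launcher: {launcher!r}")
    return {"mcpServers": {"fetch": {"command": command, "args": args}}}


print(json.dumps(fetch_server_config("uvx"), indent=2))
```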

server-fetch Key Capabilities

Web Content Fetching as Markdown

The core function of server-fetch is to retrieve content from a given URL and convert the HTML into simplified markdown. Raw HTML is full of markup, scripts, and styling that waste a model's context and obscure the actual text; the server fetches the page, cleans out that clutter, and returns a readable markdown structure that a language model can use directly. This matters for any AI application that needs up-to-date information or has to analyze web-based content.
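To show what HTML-to-markdown conversion involves, here is a deliberately small converter built only on Python's standard `html.parser`. It is a swapped-in illustration, not server-fetch's own implementation: it handles just headings, paragraphs, links, and list items, and it discards `<script>` and `<style>` content, whereas real pages need far more robust extraction. The names `MarkdownSketch` and `html_to_markdown` are hypothetical.

```python
import re
from html.parser import HTMLParser
from typing import Optional


class MarkdownSketch(HTMLParser):
    """Toy HTML-to-markdown converter: headings, paragraphs, links, list items."""

    HEADINGS = {"h1": "# ", "h2": "## ", "h3": "### "}
    SKIP = {"script", "style"}  # tags whose text content is discarded

    def __init__(self) -> None:
        super().__init__()
        self.out = []            # markdown fragments, joined at the end
        self.skip_depth = 0      # > 0 while inside <script> or <style>
        self.href: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self.skip_depth += 1
        elif tag in self.HEADINGS:
            self.out.append("\n\n" + self.HEADINGS[tag])
        elif tag in ("p", "ul", "ol"):
            self.out.append("\n\n")
        elif tag == "li":
            self.out.append("\n- ")
        elif tag == "a":
            self.href = dict(attrs).get("href")
            self.out.append("[")

    def handle_endtag(self, tag):
        if tag in self.SKIP:
            self.skip_depth = max(0, self.skip_depth - 1)
        elif tag == "a":
            self.out.append(f"]({self.href})" if self.href else "]")
            self.href = None

    def handle_data(self, data):
        if self.skip_depth:
            return
        text = re.sub(r"\s+", " ", data)  # collapse runs of whitespace
        # Drop a leading space right after a block marker or an opening bracket.
        if not self.out or self.out[-1].endswith((" ", "\n", "[")):
            text = text.lstrip()
        if text:
            self.out.append(text)


def html_to_markdown(html: str) -> str:
    parser = MarkdownSketch()
    parser.feed(html)
    parser.close()
    lines = [line.strip() for line in "".join(parser.out).splitlines()]
    return re.sub(r"\n{3,}", "\n\n", "\n".join(lines)).strip()


page = (
    "<html><head><style>p{color:red}</style></head><body>"
    "<h1>Release notes</h1><p>Read <a href='/docs'>the docs</a> first.</p>"
    "<script>track()</script><ul><li>Faster fetch</li><li>Proxy support</li></ul>"
    "</body></html>"
)
print(html_to_markdown(page))
```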

For example, an AI model tasked with summarizing recent news could use server-fetch to retrieve articles from several news websites, then process the markdown output to identify key points and write a concise summary. Because the fetch tool truncates long responses to at most max_length characters, the start_index argument specifies where the next extraction should begin. This lets a model read a long page in chunks until it finds the information it needs.
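The chunked reading that start_index enables can be modelled as character-offset slicing over the converted markdown. This sketch assumes the server returns `content[start_index:start_index + max_length]`; the helper names `read_window` and `read_all` are hypothetical, the whole page is held in memory for simplicity, and any truncation notice the real server appends is not reproduced.

```python
from typing import List, Optional, Tuple


def read_window(content: str, start_index: int = 0,
                max_length: int = 5000) -> Tuple[str, Optional[int]]:
    """Return one window of already-converted content plus the next start_index.

    The second element is None once the window reaches the end of the content.
    """
    if start_index < 0 or max_length <= 0:
        raise ValueError("start_index must be >= 0 and max_length must be > 0")
    chunk = content[start_index:start_index + max_length]
    end = start_index + len(chunk)
    return chunk, (end if end < len(content) else None)


def read_all(content: str, max_length: int = 5000) -> List[str]:
    """Walk the content window by window, as a model would with repeated fetch calls."""
    chunks: List[str] = []
    start: Optional[int] = 0
    while start is not None:
        chunk, start = read_window(content, start, max_length)
        chunks.append(chunk)
    return chunks


print(read_all("abcdefghij", max_length=4))  # ['abcd', 'efgh', 'ij'] (offsets 0, 4, 8)
```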

Configurable Content Extraction

The fetch tool exposes three optional parameters that shape what comes back. max_length caps the number of characters returned, so a single response does not flood the model's context window. start_index sets the character offset at which extraction begins, letting the model continue where a previous response stopped or jump to a later part of the page. raw returns the page content as-is, without markdown conversion, for cases where the original markup matters. Together, these options let the caller tailor the extracted content to the task at hand.
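One way a client could check these parameters before sending a request is a small validated data class. The constraints below (an http(s) URL, a positive max_length, a non-negative start_index) and the default window of 5000 characters are assumptions for illustration; the server enforces its own limits, which may differ. `FetchArguments` is a hypothetical name.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FetchArguments:
    """Hypothetical client-side model of the fetch tool's parameters."""

    url: str
    max_length: int = 5000   # illustrative default window size, in characters
    start_index: int = 0     # character offset where extraction begins
    raw: bool = False        # True skips markdown conversion

    def __post_init__(self) -> None:
        # Reject obviously bad input before a request is sent.
        if not self.url.startswith(("http://", "https://")):
            raise ValueError("url must be an http(s) URL")
        if self.max_length <= 0:
            raise ValueError("max_length must be positive")
        if self.start_index < 0:
            raise ValueError("start_index must not be negative")

    def to_arguments(self) -> dict:
        """Return the dict that would go in the 'arguments' field of a tool call."""
        return {
            "url": self.url,
            "max_length": self.max_length,
            "start_index": self.start_index,
            "raw": self.raw,
        }


# Read 2000 characters of raw content, starting 4000 characters into the page.
args = FetchArguments("https://example.com/specs", max_length=2000,
                      start_index=4000, raw=True)
print(args.to_arguments())
```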

Consider an AI model that needs specific data from a long product description page. Once it knows roughly where the relevant section begins (for instance, from an earlier truncated response), it can set start_index to that offset and choose a max_length just large enough to cover the section. The model then skips irrelevant text and spends fewer tokens, which keeps its context focused on the data it was asked to extract.
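This targeted extraction can be simulated on an in-memory string: scan the content window by window, as repeated fetch calls would, and report the offset where a section heading first appears, which is the value a follow-up call would pass as start_index. The function `find_section` and the heading text are hypothetical; consecutive windows overlap by one character less than the heading length so that a heading split across a window boundary is still found.

```python
from typing import Optional, Tuple


def find_section(content: str, heading: str,
                 max_length: int = 2000) -> Optional[Tuple[int, str]]:
    """Scan content in max_length windows and return (offset, window) for `heading`.

    `offset` is where `heading` first occurs, i.e. the start_index a follow-up
    fetch call would use; the returned window is max_length characters from there.
    Returns None if the heading never appears.
    """
    start = 0
    # Overlap windows by len(heading) - 1 characters so a heading that straddles
    # a window boundary still falls entirely inside the next window.
    step = max(1, max_length - (len(heading) - 1))
    while start < len(content):
        window = content[start:start + max_length]
        pos = window.find(heading)
        if pos != -1:
            offset = start + pos
            return offset, content[offset:offset + max_length]
        start += step
    return None


page = "intro " * 1000 + "## Specifications\nWeight: 2 kg"
offset, section = find_section(page, "## Specifications")
print(offset, repr(section))
```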

Robots.txt and User-Agent Customization

By default, server-fetch obeys a website's robots.txt file for requests the model initiates through the tool, though not for requests the user initiates directly; this keeps autonomous fetching within the limits site owners publish. The check can be disabled with the --ignore-robots-txt argument. The server also lets you customize the User-Agent header, which identifies the source of requests and can help with complying with website policies. By default, it sends different user-agent strings for model-initiated and user-initiated requests, so site operators can tell the two apart.
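The robots.txt and user-agent decision can be sketched with Python's standard `urllib.robotparser`. This is not server-fetch's code: it assumes, as the upstream fetch server does by default, that robots.txt is consulted only for model-initiated requests, and the two user-agent strings and the helper `may_fetch` are placeholders rather than the server's real defaults.

```python
from typing import Tuple
from urllib.robotparser import RobotFileParser

# Placeholder user-agent strings, not the server's real defaults.
MODEL_UA = "ExampleFetcher/1.0 (Autonomous)"
USER_UA = "ExampleFetcher/1.0 (User-Specified)"


def may_fetch(robots_txt: str, url: str, model_initiated: bool,
              ignore_robots_txt: bool = False) -> Tuple[bool, str]:
    """Return (allowed, user_agent) for a request.

    Assumption: robots.txt is only consulted for model-initiated requests,
    and ignore_robots_txt turns the check off entirely.
    """
    user_agent = MODEL_UA if model_initiated else USER_UA
    if ignore_robots_txt or not model_initiated:
        return True, user_agent
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse robots.txt text already downloaded
    return parser.can_fetch(user_agent, url), user_agent


robots = "User-agent: *\nDisallow: /private/\n"
print(may_fetch(robots, "https://example.com/private/report", model_initiated=True))
print(may_fetch(robots, "https://example.com/blog/post", model_initiated=True))
```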

For instance, a developer might set a custom user-agent that names their AI application and links to a page describing it. Website administrators can then see who is sending the requests and why, and decide whether to allow, rate-limit, or block them.
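A custom user-agent simply changes the `User-Agent` header on each outgoing HTTP request. This sketch prepares such a request with the standard library without sending it; the application name, version, and contact URL are made up, and `build_request` is a hypothetical helper. With server-fetch itself the string is supplied as a launch option (the upstream README documents a `--user-agent` argument), not set in code.

```python
import urllib.request

# Hypothetical application identifier with a contact URL, so site administrators
# can see who is sending the traffic and how to reach them.
CUSTOM_UA = "AcmeResearchAssistant/2.3 (+https://acme.example/bot-info)"


def build_request(url: str, user_agent: str = CUSTOM_UA) -> urllib.request.Request:
    """Prepare, but do not send, a GET request carrying the given User-Agent."""
    return urllib.request.Request(url, headers={"User-Agent": user_agent})


req = build_request("https://example.com/pricing")
# urllib stores header names via str.capitalize(), hence "User-agent" here.
print(req.get_header("User-agent"))
```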

Proxy Support

The server can be configured to route its requests through a proxy with the --proxy-url argument. This is useful on networks where outbound traffic must pass through a proxy, for reaching websites that are blocked in certain regions, or for keeping the server's own IP address hidden from the sites it fetches.
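As a minimal sketch, launch options such as the proxy setting end up as extra entries in the argument list an MCP client uses to start the server. The helper `fetch_server_args`, the `uvx` launcher, the `mcpServers` layout (borrowed from Claude Desktop-style configuration), and the proxy address are assumptions for illustration; only the `--proxy-url` and `--ignore-robots-txt` flag names are the ones this overview describes.

```python
import json
from typing import List, Optional


def fetch_server_args(proxy_url: Optional[str] = None,
                      ignore_robots_txt: bool = False) -> List[str]:
    """Build the uvx argument list that launches server-fetch with optional flags."""
    args = ["mcp-server-fetch"]
    if ignore_robots_txt:
        args.append("--ignore-robots-txt")
    if proxy_url:
        args += ["--proxy-url", proxy_url]  # route outbound requests via the proxy
    return args


config = {
    "mcpServers": {
        "fetch": {
            "command": "uvx",
            # Placeholder proxy address; substitute your own.
            "args": fetch_server_args(proxy_url="http://proxy.internal.example:3128"),
        }
    }
}
print(json.dumps(config, indent=2))
```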

Integration Advantages

Because server-fetch implements the MCP standard, any MCP-compatible client can use it to give a model web access. It can be installed with uv or pip, and its command-line options make it straightforward to deploy and customize. Debugging tools such as the MCP inspector simplify development and troubleshooting. Together, these reduce the effort of adding web content fetching to an AI application.