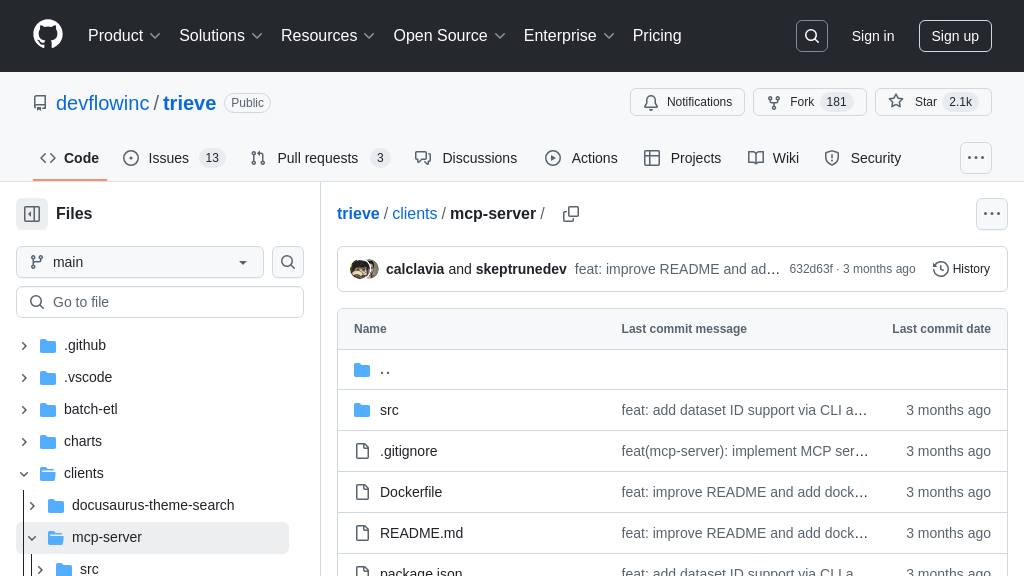

Trieve

Trieve MCP Server: Connect AI agents to Trieve datasets for powerful semantic search and data interaction.

Trieve Solution Overview

Trieve MCP Server connects AI models to Trieve's search capabilities. As a Model Context Protocol (MCP) server, it lets AI agents search and interact with Trieve datasets through a standardized interface. Developers can use it to add Trieve's semantic, full-text, and hybrid search to their AI applications.

The server exposes Trieve datasets as MCP resources that AI models can query on demand. Key features include dataset listing, advanced filtering, and customizable result highlighting. Grounding a model's answers in up-to-date, relevant data from Trieve can improve their accuracy. The server can be configured through environment variables or command-line arguments, and it integrates with MCP clients such as Claude Desktop. It is written in TypeScript, which gives its codebase static type checking.
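The paragraph above says the server reads its settings from environment variables or command-line arguments. The sketch below shows one common way to combine the two: command-line flags win over environment variables. The variable names (TRIEVE_API_KEY, TRIEVE_ORGANIZATION_ID) and flag names (--api-key, --organization-id) are hypothetical placeholders, not the server's documented interface.

```typescript
// Hypothetical configuration resolver. Variable and flag names are
// illustrative assumptions, not the Trieve MCP Server's documented interface.
interface TrieveConfig {
  apiKey: string;
  organizationId: string;
}

// Reads "--name value" pairs from an argv array into a map.
function parseFlags(argv: string[]): Map<string, string> {
  const flags = new Map<string, string>();
  for (let i = 0; i < argv.length; i++) {
    const arg = argv[i];
    if (arg.startsWith("--") && i + 1 < argv.length) {
      flags.set(arg.slice(2), argv[i + 1]);
      i++; // skip the value that was just consumed
    }
  }
  return flags;
}

// Command-line flags take precedence over environment variables.
function resolveConfig(
  argv: string[],
  env: Record<string, string | undefined>,
): TrieveConfig {
  const flags = parseFlags(argv);
  const apiKey = flags.get("api-key") ?? env.TRIEVE_API_KEY;
  const organizationId =
    flags.get("organization-id") ?? env.TRIEVE_ORGANIZATION_ID;
  if (!apiKey || !organizationId) {
    throw new Error("Missing Trieve API key or organization ID");
  }
  return { apiKey, organizationId };
}
```

In a Node.js entry point this would typically be called as resolveConfig(process.argv.slice(2), process.env). Letting flags override the environment is a common convention: it makes one-off overrides possible without editing a shell profile.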

Trieve Key Capabilities

Semantic Search Across Datasets

The server's core function is to let AI models run semantic searches over specified Trieve datasets. Semantic search matches the meaning and context of a query, so it can return relevant results even when they do not contain the exact keywords. The search tool accepts a query string and a dataset ID and passes them to Trieve's backend. The search type defaults to semantic, but it can be set to full-text, hybrid, or BM25. Models can therefore retrieve information by conceptual similarity, or use keyword-oriented matching when that suits a query better.
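The search tool's inputs described above (a query string, a dataset ID, and an optional search type that defaults to semantic) can be validated with a small normalization step. This is a minimal sketch. The argument field names (query, datasetId, searchType) are assumptions, not the server's actual tool schema.

```typescript
// The four search modes named in the text; "semantic" is the default.
type SearchType = "semantic" | "fulltext" | "hybrid" | "bm25";

const SEARCH_TYPES: readonly SearchType[] = ["semantic", "fulltext", "hybrid", "bm25"];

// Raw tool arguments as an MCP client might send them (field names assumed).
interface SearchToolArgs {
  query: string;
  datasetId: string;
  searchType?: string;
}

interface SearchInput {
  query: string;
  datasetId: string;
  searchType: SearchType;
}

function isSearchType(value: string): value is SearchType {
  return (SEARCH_TYPES as readonly string[]).includes(value);
}

// Validates the arguments and fills in the default search type.
function normalizeSearchArgs(args: SearchToolArgs): SearchInput {
  const query = args.query.trim();
  if (query === "") throw new Error("query must not be empty");
  if (args.datasetId === "") throw new Error("datasetId is required");
  const searchType = args.searchType ?? "semantic";
  if (!isSearchType(searchType)) {
    throw new Error(`unsupported search type: ${searchType}`);
  }
  return { query, datasetId: args.datasetId, searchType };
}
```

Rejecting unknown search types up front gives the model a clear error message it can correct. Otherwise the bad value would be forwarded to the backend.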

For example, an AI assistant could search a company's internal knowledge base for "customer churn prediction" and receive documents about related factors, even if those documents never use that exact phrase. This is particularly useful for question-answering systems and chatbots that must infer the intent behind user queries. Under the hood, the server sends the query and dataset ID to the Trieve API, which returns a ranked list of relevant results.
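The request the server sends to Trieve can be sketched as below. The endpoint path (/api/chunk/search), the Authorization and TR-Dataset headers, and the search_type body field are modeled on Trieve's public REST API as assumed here. Check them against the official API reference before relying on them. The function only builds the request, so it runs without network access.

```typescript
// Request shape modeled on Trieve's search endpoint. The URL, header names,
// and body fields are assumptions to verify against the API reference.
interface SearchRequest {
  url: string;
  init: { method: "POST"; headers: Record<string, string>; body: string };
}

function buildSearchRequest(
  apiKey: string,
  datasetId: string,
  query: string,
  searchType: "semantic" | "fulltext" | "hybrid" | "bm25" = "semantic",
  baseUrl = "https://api.trieve.ai",
): SearchRequest {
  return {
    url: `${baseUrl}/api/chunk/search`,
    init: {
      method: "POST",
      headers: {
        "Content-Type": "application/json",
        Authorization: apiKey,
        "TR-Dataset": datasetId, // selects which dataset to search
      },
      body: JSON.stringify({ query, search_type: searchType }),
    },
  };
}
```

A caller would pass the result to fetch (const { url, init } = buildSearchRequest(...); await fetch(url, init)) and read the ranked results from the JSON response. Keeping request construction separate from the network call makes it easy to test.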

Standardized Dataset Access

The server gives AI models a standardized way to access Trieve datasets through the Model Context Protocol (MCP). Each dataset is exposed as a resource with a URI of the form trieve://datasets/{dataset-id}, so a model can identify and connect to the data source it needs. This standardization simplifies integration and lets developers add Trieve to their AI workflows quickly. The MCP server sits between the AI model and the Trieve API and handles the communication between them.
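The trieve://datasets/{dataset-id} URI scheme described above maps cleanly to a pair of helper functions. This sketch is illustrative only. Percent-encoding the ID is a defensive choice made here and is not confirmed behavior of the actual server.

```typescript
const URI_PREFIX = "trieve://datasets/";

// Builds the resource URI for a dataset, e.g. trieve://datasets/abc-123.
function datasetUri(datasetId: string): string {
  return URI_PREFIX + encodeURIComponent(datasetId);
}

// Extracts the dataset ID from a resource URI. Returns null when the URI
// does not follow the trieve://datasets/{dataset-id} pattern.
function parseDatasetUri(uri: string): string | null {
  if (!uri.startsWith(URI_PREFIX)) return null;
  const rest = uri.slice(URI_PREFIX.length);
  if (rest === "" || rest.includes("/")) return null;
  try {
    return decodeURIComponent(rest);
  } catch {
    return null; // malformed percent-encoding
  }
}
```

Returning null instead of throwing lets a server that handles several URI schemes try each parser in turn.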

Suppose an AI model needs to analyze customer feedback stored in a Trieve dataset. Using the dataset's URI, the model requests the data from the Trieve MCP server, which retrieves it from Trieve and returns it to the model in a structured format. No custom integration code is needed. Technically, the MCP server handles resource read requests for trieve://datasets/{dataset-id} URIs and uses the Trieve API to fetch the corresponding dataset.
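A resource read handler along the lines described above might look like the following. The result shape ({ contents: [{ uri, mimeType, text }] }) follows the MCP resources/read response. The DatasetInfo fields are hypothetical, and the fetcher is injected so the Trieve API call can be swapped out, for example in tests.

```typescript
// Hypothetical dataset summary; the fields Trieve actually returns may differ.
interface DatasetInfo {
  id: string;
  name: string;
}

// Shape of an MCP resources/read result carrying one text item.
interface ReadResourceResult {
  contents: { uri: string; mimeType: string; text: string }[];
}

type DatasetFetcher = (datasetId: string) => Promise<DatasetInfo>;

const PREFIX = "trieve://datasets/";

// Wraps dataset metadata as JSON text for the model.
function toReadResult(uri: string, dataset: DatasetInfo): ReadResourceResult {
  return {
    contents: [{ uri, mimeType: "application/json", text: JSON.stringify(dataset) }],
  };
}

// Resolves a trieve://datasets/{dataset-id} URI with the injected fetcher,
// which in a real server would wrap the Trieve API call.
async function readDatasetResource(
  uri: string,
  fetchDataset: DatasetFetcher,
): Promise<ReadResourceResult> {
  if (!uri.startsWith(PREFIX) || uri.length === PREFIX.length) {
    throw new Error(`unknown resource: ${uri}`);
  }
  const datasetId = uri.slice(PREFIX.length);
  const dataset = await fetchDataset(datasetId);
  return toReadResult(uri, dataset);
}
```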

Flexible Search Configuration

The search tool exposes several options for tuning the results returned to AI models: the search type (semantic, fulltext, hybrid, or BM25), advanced filters, highlight customization, and pagination. Choosing the search type lets developers pick the retrieval method best suited to their data and queries, while filters narrow the results to items that meet specific criteria.
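The four tuning knobs listed above can be gathered into one options object and translated into a request body. The body field names (search_type, filters, highlight_options.highlight_results, page, page_size) are assumed to follow Trieve's search API and should be verified before use. The defaults (page 1, 10 results per page, highlighting on) are choices made for this sketch only.

```typescript
type SearchType = "semantic" | "fulltext" | "hybrid" | "bm25";

// One filter condition: match any listed value, or fall within a range.
interface FieldCondition {
  field: string;
  match_any?: (string | number)[];
  range?: { gte?: number; lte?: number; gt?: number; lt?: number };
}

interface SearchOptions {
  searchType?: SearchType;
  filters?: { must?: FieldCondition[]; must_not?: FieldCondition[]; should?: FieldCondition[] };
  highlight?: boolean;
  page?: number;
  pageSize?: number;
}

// Converts camelCase options into an assumed snake_case request body.
function toRequestBody(query: string, opts: SearchOptions = {}): Record<string, unknown> {
  const page = opts.page ?? 1; // sketch default
  const pageSize = opts.pageSize ?? 10; // sketch default
  if (!Number.isInteger(page) || page < 1) throw new Error("page must be a positive integer");
  if (!Number.isInteger(pageSize) || pageSize < 1) throw new Error("pageSize must be a positive integer");
  const body: Record<string, unknown> = {
    query,
    search_type: opts.searchType ?? "semantic",
    page,
    page_size: pageSize,
    highlight_options: { highlight_results: opts.highlight ?? true },
  };
  if (opts.filters) body.filters = opts.filters; // omit when no filtering is requested
  return body;
}
```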

For instance, a developer building a recommendation system might use filters to retrieve only products within a certain price range or category. Highlight options can emphasize the most relevant passages in each result, making it easier for the AI model to extract key information. Pagination lets the model work through large result sets in smaller chunks. All of these parameters are passed through to the Trieve API, which applies them to the search query.
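The recommendation example above (products in one category and a price band, fetched page by page) might be expressed like this. The must/match_any/range filter shape mirrors Trieve's filter format as assumed here. The field names "tag_set" and "metadata.price" are illustrative only and depend on how the dataset was indexed.

```typescript
// Assumed filter shape; field names are hypothetical and dataset-specific.
interface Condition {
  field: string;
  match_any?: string[];
  range?: { gte?: number; lte?: number };
}

interface Filters {
  must: Condition[];
}

// Both conditions must hold: the product is in the category and its
// price lies in [minPrice, maxPrice].
function productFilter(category: string, minPrice: number, maxPrice: number): Filters {
  if (minPrice > maxPrice) throw new Error("minPrice must not exceed maxPrice");
  return {
    must: [
      { field: "tag_set", match_any: [category] },
      { field: "metadata.price", range: { gte: minPrice, lte: maxPrice } },
    ],
  };
}

// Lists the 1-based page numbers needed to cover `total` results, so a
// client can fetch a large result set in fixed-size chunks.
function pageNumbers(total: number, pageSize: number): number[] {
  if (pageSize <= 0) throw new Error("pageSize must be positive");
  const pages = Math.ceil(total / pageSize);
  return Array.from({ length: pages }, (_, i) => i + 1);
}
```

In practice the total is often unknown up front. A client can instead keep requesting the next page until a page comes back with fewer than pageSize results.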

Integration with Claude Desktop

Trieve MCP Server can be used from Claude Desktop, Anthropic's desktop application for Claude, which acts as an MCP client. The project provides a configuration snippet for Claude Desktop's claude_desktop_config.json. The snippet specifies the command and arguments that start the server and the environment variables required for authentication. With it, developers can quickly connect Claude to their Trieve datasets and prototype applications that use Trieve's search.
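The configuration entry described above can be generated programmatically. The top-level mcpServers key and the per-server command, args, and env fields are what Claude Desktop reads. The package name (trieve-mcp-server) and environment variable names used here are placeholders. Replace them with the values from the Trieve MCP Server documentation.

```typescript
// Shape of one server entry in claude_desktop_config.json.
interface McpServerEntry {
  command: string;
  args: string[];
  env: Record<string, string>;
}

interface ClaudeDesktopConfig {
  mcpServers: Record<string, McpServerEntry>;
}

function trieveDesktopConfig(apiKey: string, organizationId: string): ClaudeDesktopConfig {
  return {
    mcpServers: {
      trieve: {
        command: "npx",
        args: ["-y", "trieve-mcp-server"], // hypothetical package name
        env: {
          TRIEVE_API_KEY: apiKey, // hypothetical variable names
          TRIEVE_ORGANIZATION_ID: organizationId,
        },
      },
    },
  };
}

// Pretty-printed JSON, ready to merge into claude_desktop_config.json.
console.log(JSON.stringify(trieveDesktopConfig("<your-api-key>", "<your-org-id>"), null, 2));
```

Passing credentials through env keeps them out of the argument list. Claude Desktop starts the server with those variables set.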

Consider a developer who wants an assistant that answers questions about a company's products using data stored in Trieve. By adding the Trieve configuration to Claude Desktop, they can connect Claude to those datasets and start testing right away. Claude uses the search tool as needed to filter and break down a request, and may issue several searches depending on the task. Technically, Claude Desktop launches the Trieve MCP server as a separate process and communicates with it over standard input and output using the Model Context Protocol.
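For servers configured with a command, Claude Desktop uses MCP's stdio transport. Each message is a JSON-RPC 2.0 object written on its own line to the child process's standard input or output. The sketch below frames two tools/call requests, as Claude might issue when it breaks a question into several searches, and splits a buffered stream back into messages. The tool name "search" and its argument names are assumptions for illustration.

```typescript
// JSON-RPC 2.0 request as used by MCP.
interface JsonRpcRequest {
  jsonrpc: "2.0";
  id: number;
  method: string;
  params?: Record<string, unknown>;
}

// Serializes one message for the stdio transport. JSON.stringify escapes
// newlines inside strings, so each message occupies exactly one line.
function frame(message: JsonRpcRequest): string {
  return JSON.stringify(message) + "\n";
}

// Splits buffered text into complete messages. The trailing partial line,
// if any, is returned so it can be prepended to the next chunk.
function splitMessages(buffer: string): { messages: unknown[]; rest: string } {
  const lines = buffer.split("\n");
  const rest = lines.pop() ?? "";
  const messages = lines
    .filter((line) => line.trim() !== "")
    .map((line) => JSON.parse(line));
  return { messages, rest };
}

// Two searches against one dataset (tool and argument names are hypothetical).
const calls: JsonRpcRequest[] = [
  { jsonrpc: "2.0", id: 1, method: "tools/call",
    params: { name: "search", arguments: { query: "product warranty terms", datasetId: "ds-1" } } },
  { jsonrpc: "2.0", id: 2, method: "tools/call",
    params: { name: "search", arguments: { query: "return policy", datasetId: "ds-1" } } },
];
const wire = calls.map(frame).join("");
```

Carrying the partial line forward matters because a pipe can deliver a message split across two reads.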