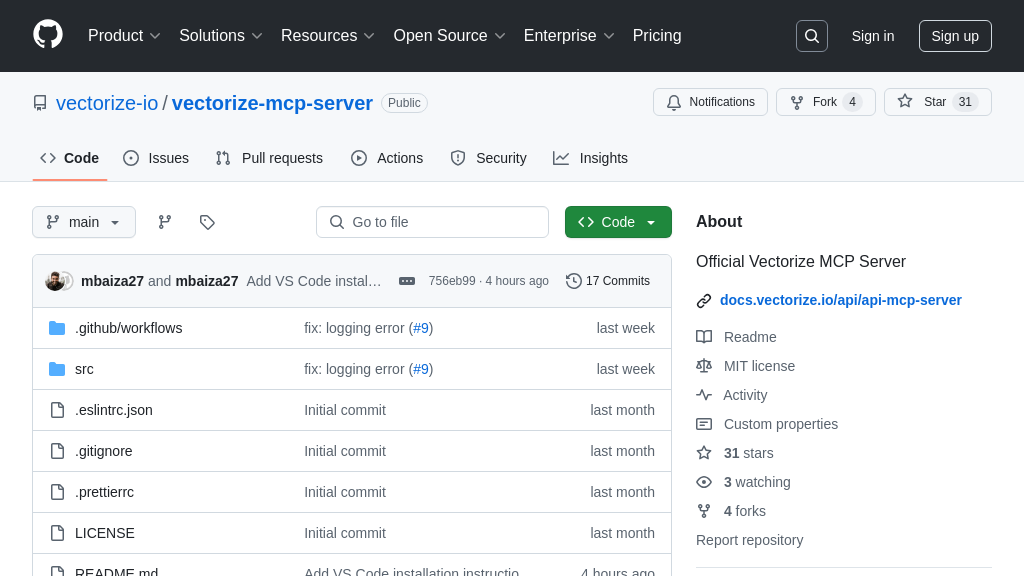

vectorize-mcp-server

Integrate AI models with Vectorize using the Vectorize MCP Server for advanced vector retrieval and text extraction.

vectorize-mcp-server Solution Overview

The Vectorize MCP Server gives AI developers a way to add vector retrieval and text extraction to their models. As an MCP server, it exposes Vectorize's features through the Model Context Protocol, a standardized interface that MCP-compatible AI clients can call directly.

The server offers three tools. One retrieves relevant documents via vector search, one extracts text from various file types and chunks it into Markdown, and one generates in-depth research reports using a Vectorize pipeline. With the server in place, an AI model can ground its answers in the documents stored in Vectorize, which makes for more informed, context-aware applications. The server can be installed with npx or configured manually, so developers can add it to existing workflows without writing direct API integrations.

vectorize-mcp-server Key Capabilities

Vector Search Retrieval

The core function of the vectorize-mcp-server is to let AI models run vector searches on the Vectorize platform. It does this through the "retrieve" tool, which accepts a question and a parameter "k" giving the number of documents to return. The server queries Vectorize using the configured credentials and pipeline ID and returns the k documents most similar to the question by vector similarity. This gives an AI model a knowledge base from which it can pull contextually relevant information before reasoning or responding. For example, a model answering questions about a company's financial performance can use this tool to retrieve the relevant financial documents from Vectorize, then use them as context for a more informed and accurate response.
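
To make "the k documents most similar to the question" concrete, here is a minimal TypeScript sketch of the ranking step, assuming every document already has an embedding vector. It uses cosine similarity, a common choice for this kind of search. The `Doc` type, the `cosine` and `topK` helpers, and the toy vectors are hypothetical, and this is not Vectorize's internal implementation.

```typescript
// Illustrative sketch of top-k retrieval by cosine similarity.
// NOT Vectorize's implementation: it only shows what ranking documents
// by vector similarity means once text has been embedded.

interface Doc {
  id: string;
  embedding: number[];
}

// Cosine similarity: dot(a, b) / (|a| * |b|); returns 0 if either vector is all zeros.
function cosine(a: number[], b: number[]): number {
  if (a.length !== b.length) {
    throw new Error("vectors must have the same dimension");
  }
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  if (normA === 0 || normB === 0) return 0;
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Score every document against the query vector, sort descending, keep the k best.
function topK(query: number[], docs: Doc[], k: number): { id: string; score: number }[] {
  return docs
    .map((d) => ({ id: d.id, score: cosine(query, d.embedding) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, k);
}

// Toy 3-dimensional "embeddings"; real embeddings are far larger.
const docs: Doc[] = [
  { id: "q3-earnings", embedding: [0.9, 0.1, 0.0] },
  { id: "hr-handbook", embedding: [0.0, 0.2, 0.9] },
  { id: "annual-report", embedding: [0.8, 0.3, 0.1] },
];

const hits = topK([1, 0.2, 0], docs, 2);
console.log(hits.map((h) => h.id)); // q3-earnings first, then annual-report
```

In the actual retrieve tool, the Vectorize platform handles embedding and ranking; the client only supplies the question and k.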

Document Extraction and Chunking

The vectorize-mcp-server provides the "extract" tool, which lets AI models extract text from various document types and chunk it into Markdown. The tool accepts a base64-encoded document and its content type as input. The server passes the document to Vectorize's text extraction, which converts it to text and splits that text into smaller, Markdown-formatted chunks. This is particularly useful for large documents that exceed the context window of many AI models: chunking lets the model work through the document in segments that each fit within that window. A practical application is converting PDF reports into Markdown and feeding the chunks to a language model for summarization or question answering.
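
Because the extract tool expects the file as base64 text alongside its content type, a client has to encode the raw bytes first. The sketch below shows one way to prepare those arguments in TypeScript. The `base64Document` key name and the `buildExtractArgs` helper are assumptions for illustration; check the server's published tool schema for the exact argument names.

```typescript
// Sketch of preparing arguments for the "extract" tool, which takes a
// base64-encoded document plus its content type.
// ASSUMPTION: the key name "base64Document" is illustrative; verify it
// against the server's tool schema.

const B64 = "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/";

// Standard base64 (RFC 4648) with "=" padding, encoding 3 bytes into 4 characters.
function toBase64(bytes: Uint8Array): string {
  let out = "";
  for (let i = 0; i < bytes.length; i += 3) {
    const b0 = bytes[i];
    const b1 = i + 1 < bytes.length ? bytes[i + 1] : 0;
    const b2 = i + 2 < bytes.length ? bytes[i + 2] : 0;
    const triple = (b0 << 16) | (b1 << 8) | b2;
    out += B64[(triple >> 18) & 63];
    out += B64[(triple >> 12) & 63];
    // Pad with "=" when the final group has fewer than 3 input bytes.
    out += i + 1 < bytes.length ? B64[(triple >> 6) & 63] : "=";
    out += i + 2 < bytes.length ? B64[triple & 63] : "=";
  }
  return out;
}

interface ExtractArgs {
  base64Document: string; // assumed key name
  contentType: string; // MIME type, e.g. "application/pdf"
}

// Hypothetical helper: pairs the encoded bytes with their MIME type.
function buildExtractArgs(bytes: Uint8Array, contentType: string): ExtractArgs {
  return { base64Document: toBase64(bytes), contentType };
}

// The "%PDF" header bytes stand in for a real file read from disk.
const args = buildExtractArgs(new Uint8Array([0x25, 0x50, 0x44, 0x46]), "application/pdf");
console.log(args.base64Document); // prints JVBERg==
```

The hand-rolled encoder keeps the example independent of any particular runtime; in Node.js, `Buffer.from(bytes).toString("base64")` produces the same standard base64 string.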

Deep Research Generation

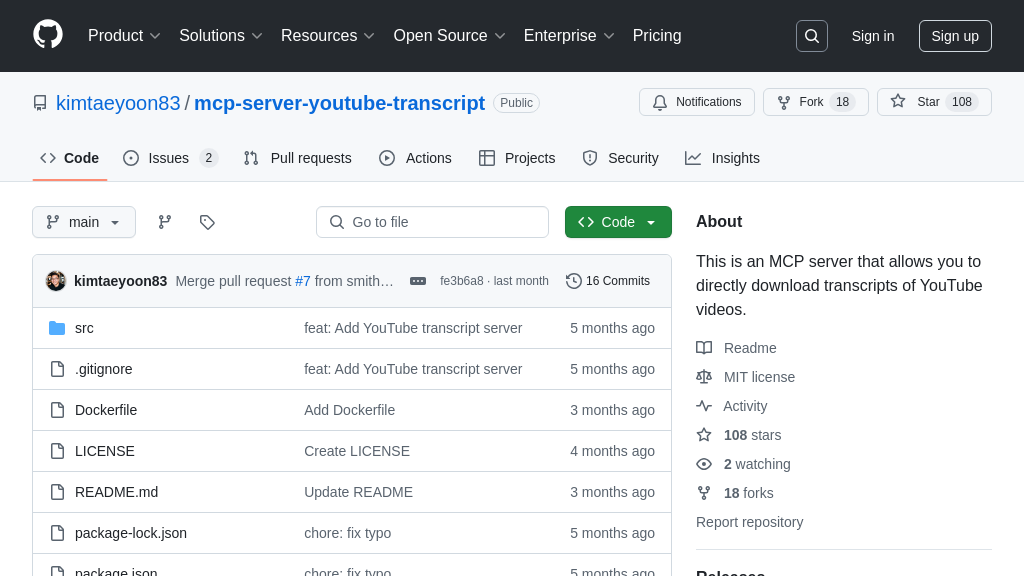

The "deep-research" tool lets AI models start comprehensive research tasks on the Vectorize platform. The tool accepts a query and a boolean flag, "webSearch". When it is invoked, the server asks Vectorize to run a deep research operation on the query, including web search results when "webSearch" is set. Vectorize gathers information from multiple sources and synthesizes it into a coherent report, which suits tasks that require in-depth analysis. For instance, an AI model could use this tool to generate a financial status report about a company that draws on both internal documents and external web resources.
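
MCP clients invoke server tools with a JSON-RPC 2.0 `tools/call` request that names the tool and passes its arguments. Here is a minimal TypeScript sketch of such a request for deep-research, assuming the argument names `query` and `webSearch` from the description above. The request id and the `toolCall` helper are illustrative and not part of the server.

```typescript
// Sketch of a JSON-RPC 2.0 "tools/call" request, the message shape MCP
// clients use to invoke a server tool. The id value and the helper name
// are illustrative assumptions.

interface ToolCallRequest {
  jsonrpc: "2.0";
  id: number;
  method: "tools/call";
  params: {
    name: string;
    arguments: Record<string, unknown>;
  };
}

// Hypothetical helper: wraps tool arguments in the MCP request envelope.
function toolCall(id: number, name: string, args: Record<string, unknown>): ToolCallRequest {
  return { jsonrpc: "2.0", id, method: "tools/call", params: { name, arguments: args } };
}

const request = toolCall(7, "deep-research", {
  query: "Generate a financial status report about the company",
  webSearch: true, // also include web search results, not only internal documents
});

// Over the stdio transport, each message is sent as one line of JSON.
const wire = JSON.stringify(request);
console.log(wire);
```

The same envelope works for the other tools; only `name` and `arguments` change.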

Integration Advantages

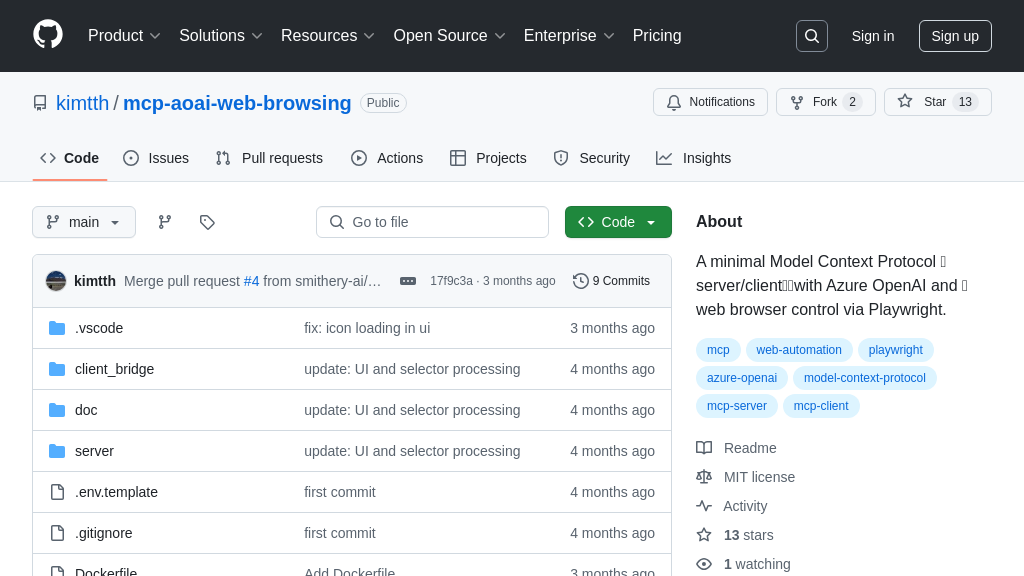

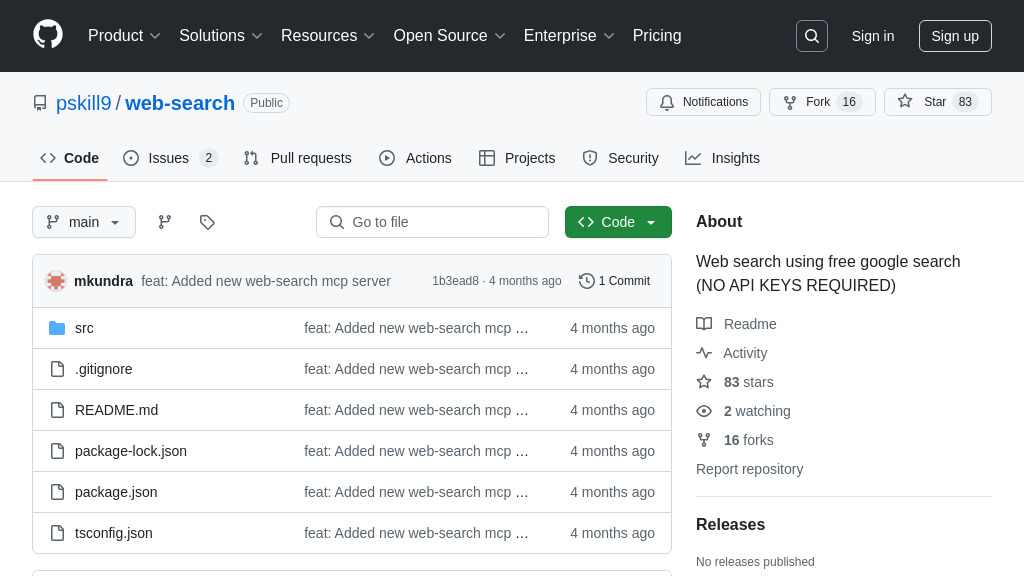

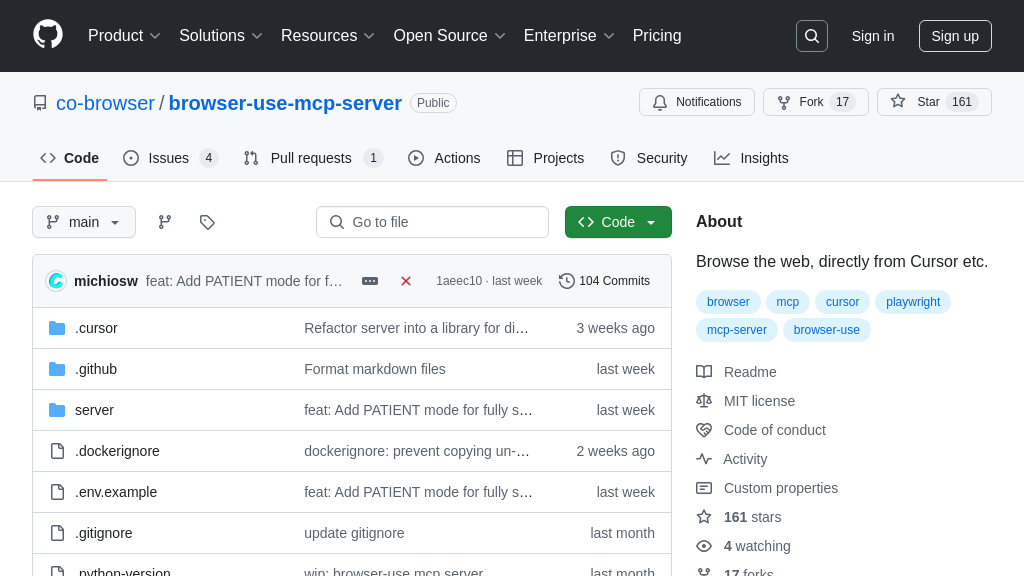

Because it follows the MCP standard, the vectorize-mcp-server gives AI models a consistent and secure interface to Vectorize's vector retrieval and text extraction capabilities. Developers do not need to write a custom integration for each model, which simplifies connecting AI models to external data sources. The server supports both standard input/output (stdio) and HTTP/SSE transports, so it works with a wide range of AI clients and server environments. Ready-made configuration options, such as the VS Code installation and the Claude, Windsurf, Cursor, and Cline configurations, further simplify deployment into existing workflows.
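
Clients such as Claude Desktop typically register MCP servers in an `mcpServers` JSON object that gives the command to launch and the environment to pass to it. The TypeScript sketch below generates such an entry for launching the server through npx over stdio. The package name `@vectorize-io/vectorize-mcp-server`, the environment variable names, and the `vectorizeConfig` helper are assumptions; verify the names against the project's README before use.

```typescript
// Sketch of generating an "mcpServers" entry for an MCP client config.
// ASSUMPTIONS: the package name and environment variable names below are
// illustrative; confirm them against the server's README.

interface McpServerEntry {
  command: string;
  args: string[];
  env: Record<string, string>;
}

interface McpClientConfig {
  mcpServers: Record<string, McpServerEntry>;
}

// Hypothetical helper: builds a stdio launch config that runs the server via npx.
function vectorizeConfig(orgId: string, token: string, pipelineId: string): McpClientConfig {
  return {
    mcpServers: {
      vectorize: {
        command: "npx",
        args: ["-y", "@vectorize-io/vectorize-mcp-server@latest"], // assumed package name
        env: {
          VECTORIZE_ORG_ID: orgId, // assumed variable names
          VECTORIZE_TOKEN: token,
          VECTORIZE_PIPELINE_ID: pipelineId,
        },
      },
    },
  };
}

// Placeholder values; keep real tokens out of version control.
const config = vectorizeConfig("your-org-id", "your-token", "your-pipeline-id");
console.log(JSON.stringify(config, null, 2));
```

The printed JSON can be pasted into the client's configuration file. The organization ID, token, and pipeline ID are the credentials the server uses when it queries Vectorize.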