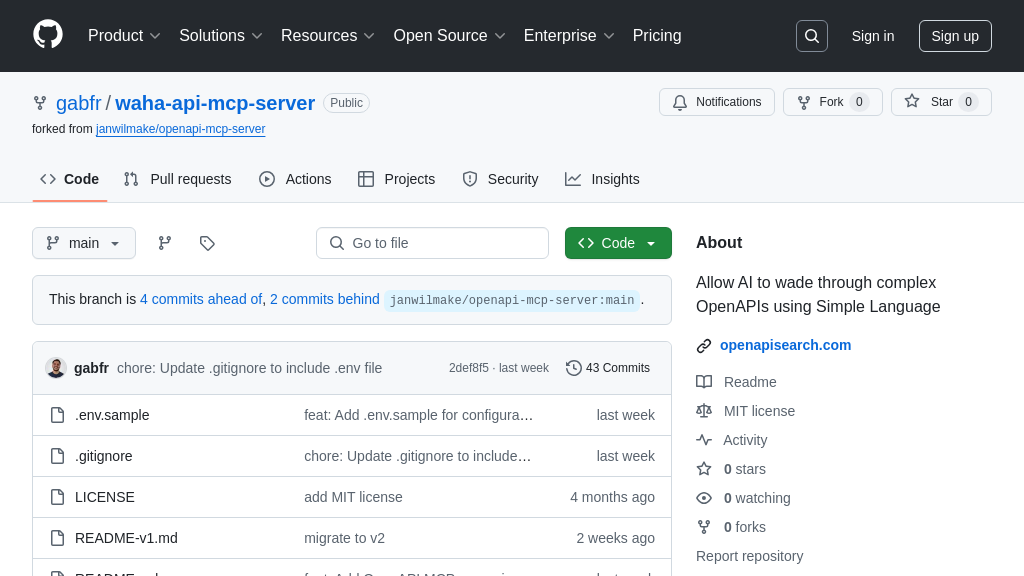

waha-api-mcp-server

waha-api-mcp-server: Connect OpenAPI specs to AI models like Claude as MCP tools.

waha-api-mcp-server Solution Overview

The waha-api-mcp-server is an MCP server that connects AI models such as Claude to APIs described by OpenAPI specifications. It parses an OpenAPI YAML file and generates one MCP tool per API operation, so the model can work with the API from a natural-language conversation. When the model invokes a generated tool, the server fills in the path parameters, query parameters, and request body, then makes the live API call.

Because each API operation is exposed as a tool, the model does not need to know the underlying endpoints. The server is configured through environment variables and supports HTTP/SSE transport. It integrates with Claude Desktop and returns each API response to the model for analysis. Developers can use it to connect AI models to services that are described by an OpenAPI spec without writing a custom integration for each one.

waha-api-mcp-server Key Capabilities

OpenAPI to MCP Translation

The waha-api-mcp-server's core function is to translate OpenAPI specifications into MCP-compatible tools. It parses OpenAPI YAML files and extracts each API operation along with its parameters (path, query, request body) and description. For each operation, it generates a corresponding MCP tool that AI models like Claude can discover and call. The model works with a tool name, a description, and a set of inputs rather than with raw endpoints.

For example, if an OpenAPI spec defines an endpoint for creating a user with parameters like username and email, the server will create an MCP tool that Claude can use by providing the necessary parameters in natural language. Claude can then invoke this tool, and the server will handle the API call.
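The user-creation example can be sketched in Python as follows. This is an illustration under stated assumptions, not the server's actual code: `operation_to_tool` is a hypothetical helper, the spec is assumed to be already loaded into a dict, `$ref` resolution is omitted, and exposing the request body under a single `body` property is a design choice of this sketch. The output uses the `name`, `description`, and `inputSchema` fields of an MCP tool definition.

```python
import re
from typing import Any


def operation_to_tool(path: str, method: str, operation: dict[str, Any]) -> dict[str, Any]:
    """Convert one OpenAPI operation into an MCP-style tool definition (hypothetical helper)."""
    properties: dict[str, Any] = {}
    required: list[str] = []

    # Path and query parameters become top-level input properties.
    for param in operation.get("parameters", []):
        if param.get("in") not in ("path", "query"):
            continue
        schema = dict(param.get("schema", {"type": "string"}))
        if "description" in param:
            schema["description"] = param["description"]
        properties[param["name"]] = schema
        # Path parameters are always required in OpenAPI.
        if param.get("required") or param["in"] == "path":
            required.append(param["name"])

    # Assumption of this sketch: a JSON request body is exposed as one "body" property.
    body = operation.get("requestBody", {})
    body_schema = body.get("content", {}).get("application/json", {}).get("schema")
    if body_schema is not None:
        properties["body"] = body_schema
        if body.get("required"):
            required.append("body")

    # Fall back to a name derived from method and path when operationId is missing.
    name = operation.get("operationId") or re.sub(r"\W+", "_", f"{method} {path}").strip("_")
    return {
        "name": name,
        "description": operation.get("summary") or operation.get("description", ""),
        "inputSchema": {"type": "object", "properties": properties, "required": required},
    }


# Hypothetical "create user" operation, as it would appear under paths./users.post.
create_user = {
    "operationId": "createUser",
    "summary": "Create a user",
    "requestBody": {
        "required": True,
        "content": {"application/json": {"schema": {
            "type": "object",
            "properties": {"username": {"type": "string"}, "email": {"type": "string"}},
            "required": ["username", "email"],
        }}},
    },
}
tool = operation_to_tool("/users", "post", create_user)
```

Here `tool` describes a `createUser` tool whose input schema requires a `body` object with `username` and `email`, which is the information a model needs to fill in the call.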

Dynamic API Interaction

The server lets AI models call live APIs. When a model invokes an MCP tool generated from an OpenAPI specification, the server builds the request for the corresponding endpoint, setting the headers, parameters, and request body according to the OpenAPI definition. It then sends the request and returns the API's response to the model. This gives the model access to real-time data and services that are not part of its internal knowledge.

Consider a scenario where Claude needs to retrieve the current weather conditions for a specific city. The server, having parsed an OpenAPI spec for a weather API, can translate Claude's request into a properly formatted API call, retrieve the weather data, and return it to Claude for analysis and response generation.
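A minimal sketch of that request-building step, using only the Python standard library. The function `build_request`, the weather host, the path, and the parameter names are all hypothetical; the real server's request logic may differ. The sketch constructs the request without sending it.

```python
import json
from typing import Any
from urllib.parse import quote, urlencode
from urllib.request import Request


def build_request(base_url: str, method: str, path_template: str,
                  parameters: list[dict[str, Any]], arguments: dict[str, Any]) -> Request:
    """Turn tool arguments into an HTTP request for one OpenAPI operation (hypothetical helper)."""
    path = path_template
    query: dict[str, Any] = {}
    for param in parameters:
        name = param["name"]
        if name not in arguments:
            if param.get("required") or param["in"] == "path":
                raise ValueError(f"missing required parameter: {name}")
            continue
        if param["in"] == "path":
            # Percent-encode the value so it is safe inside a path segment.
            path = path.replace("{" + name + "}", quote(str(arguments[name]), safe=""))
        elif param["in"] == "query":
            query[name] = arguments[name]

    url = base_url.rstrip("/") + path
    if query:
        url += "?" + urlencode(query)

    headers = {"Accept": "application/json"}
    data = None
    if "body" in arguments:
        # Assumption of this sketch: the request body is always sent as JSON.
        data = json.dumps(arguments["body"]).encode("utf-8")
        headers["Content-Type"] = "application/json"
    return Request(url, data=data, headers=headers, method=method.upper())


# Hypothetical weather operation: GET /cities/{city}/weather?units=...
weather_params = [
    {"name": "city", "in": "path", "required": True},
    {"name": "units", "in": "query"},
]
req = build_request("https://weather.example.com", "get", "/cities/{city}/weather",
                    weather_params, {"city": "New York", "units": "metric"})
```

Passing `req` to `urllib.request.urlopen` would send the call; the response body is what the server would hand back to the model.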

Streamlined AI Integration

The waha-api-mcp-server gives AI models a single, standardized interface to external APIs. Because every API operation is exposed as an MCP tool, the model does not need to understand how each API is implemented. The server acts as an intermediary that handles the HTTP communication, which reduces the work needed to connect a model to a new service and lets developers concentrate on application logic instead of integration details.

For instance, integrating Claude with a CRM system becomes easier. The CRM's OpenAPI spec is parsed, and Claude can then use tools like "createContact" or "updateContact" without needing to know the specific API endpoints or authentication methods. The server handles these details, allowing Claude to focus on the task at hand.
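One way to picture this indirection is a lookup table from tool names to HTTP operations. The sketch below is an assumption about how such a mapping could look, not a description of the server's internals; `ToolRegistry`, `Route`, and the CRM paths are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    """HTTP method and path template behind a tool name."""
    method: str
    path: str


class ToolRegistry:
    """Maps tool names to the API operations they stand for (hypothetical class)."""

    def __init__(self) -> None:
        self._routes: dict[str, Route] = {}

    def register(self, tool_name: str, method: str, path: str) -> None:
        # Tool names must be unique so that a call is never ambiguous.
        if tool_name in self._routes:
            raise ValueError(f"duplicate tool name: {tool_name}")
        self._routes[tool_name] = Route(method.upper(), path)

    def resolve(self, tool_name: str) -> Route:
        try:
            return self._routes[tool_name]
        except KeyError:
            raise LookupError(f"unknown tool: {tool_name}") from None

    def names(self) -> list[str]:
        return sorted(self._routes)


# Hypothetical CRM operations; the model only ever sees the tool names.
registry = ToolRegistry()
registry.register("createContact", "post", "/contacts")
registry.register("updateContact", "patch", "/contacts/{contactId}")
route = registry.resolve("updateContact")
```

The model asks for `updateContact`; only the server knows that this means a `PATCH` to `/contacts/{contactId}`.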

Technical Implementation: OpenAPI Parsing Engine

The OpenAPI parsing engine reads and interprets OpenAPI specification files in YAML format. It extracts the paths, operations, parameters, and data types, and uses them to generate the corresponding MCP tools. To be useful across real-world APIs, the engine needs to cope with different OpenAPI versions and with specs of varying complexity. It also needs validation and error handling, so that a malformed or unsupported spec is rejected with a clear error instead of producing broken tools.
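The traversal and validation described above might look like the following sketch. It assumes the YAML file has already been loaded into a Python dict (for example with PyYAML's `yaml.safe_load`), accepts only OpenAPI 3.x, and does not resolve `$ref` entries. `iter_operations` and `SpecError` are hypothetical names introduced for illustration.

```python
from typing import Any, Iterator

# Operation keys allowed on an OpenAPI 3 path item.
HTTP_METHODS = ("get", "put", "post", "delete", "options", "head", "patch", "trace")


class SpecError(ValueError):
    """Raised when the document does not look like a usable OpenAPI 3 spec."""


def _param_key(param: dict[str, Any]) -> tuple[str, str]:
    # A parameter is identified by its name and location together.
    if "$ref" in param:
        raise SpecError("$ref parameters are not resolved in this sketch")
    try:
        return param["name"], param["in"]
    except KeyError as exc:
        raise SpecError(f"parameter is missing {exc.args[0]!r}") from None


def iter_operations(spec: dict[str, Any]) -> Iterator[tuple[str, str, dict[str, Any]]]:
    """Yield (path, method, operation) for every operation in the spec."""
    version = str(spec.get("openapi", ""))
    if not version.startswith("3."):
        raise SpecError(f"unsupported or missing 'openapi' version: {version!r}")
    paths = spec.get("paths")
    if not isinstance(paths, dict):
        raise SpecError("'paths' must be a mapping")

    for path, item in paths.items():
        if not isinstance(item, dict):
            raise SpecError(f"path item for {path!r} must be a mapping")
        shared = item.get("parameters", [])
        for method in HTTP_METHODS:
            operation = item.get(method)
            if operation is None:
                continue
            # Operation-level parameters override path-level ones with the same key.
            merged = {_param_key(p): p for p in shared}
            merged.update({_param_key(p): p for p in operation.get("parameters", [])})
            yield path, method, {**operation, "parameters": list(merged.values())}


# Small hypothetical spec with one shared path parameter and one override.
spec = {
    "openapi": "3.0.3",
    "paths": {"/pets/{petId}": {
        "parameters": [{"name": "petId", "in": "path", "required": True,
                        "schema": {"type": "string"}}],
        "get": {"operationId": "getPet"},
        "delete": {"operationId": "deletePet",
                   "parameters": [{"name": "petId", "in": "path", "required": True,
                                   "schema": {"type": "integer"}}]},
    }},
}
operations = list(iter_operations(spec))
```

Each yielded operation carries its full parameter list, so a later tool-generation step does not need to look back at the path item.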