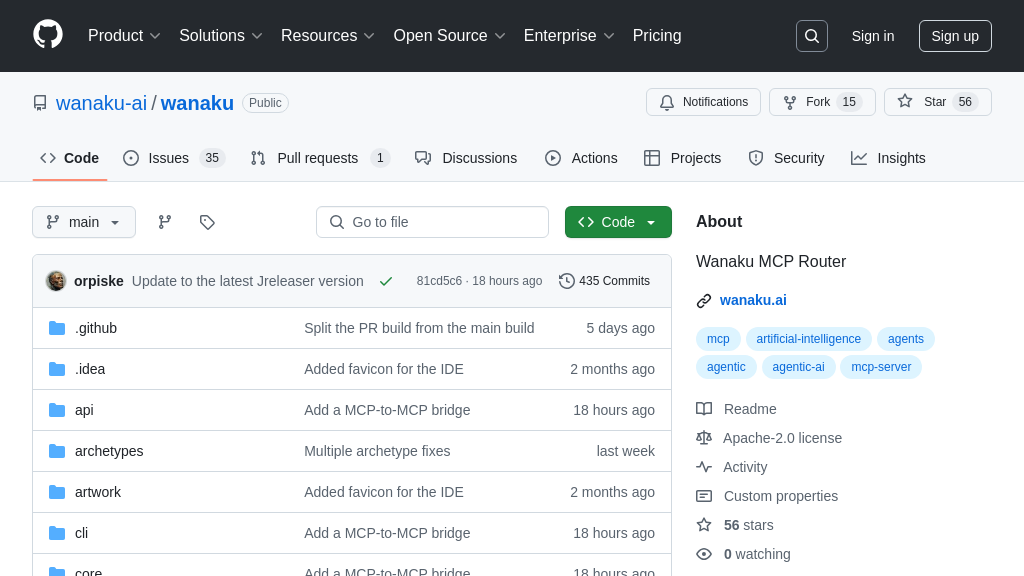

wanaku

Wanaku: An MCP Router connecting AI models and external resources via the Model Context Protocol.

Wanaku Solution Overview

Wanaku is an MCP Router (Server) designed to streamline communication between AI models and external resources, following the open Model Context Protocol (MCP). Acting as a central hub, Wanaku provides context to Large Language Models (LLMs), so that AI-powered applications can reach external data through one MCP server instead of a separate integration for every source.

Its key feature is routing requests according to the MCP standard, which keeps integrations interoperable and simplifies connecting diverse data sources. Because Wanaku sits between AI models and those sources, models see a single, unified interface instead of many direct connections. This gives models relevant, real-time context while sparing developers the work of managing multiple data connections themselves. Wanaku is built primarily in Java and is intended as a robust, scalable way to manage context in AI applications.

Wanaku Key Capabilities

MCP Routing & Orchestration

Wanaku acts as a central router within the MCP ecosystem, directing requests between AI models and the external resources they need. This orchestration matters for AI applications that draw on many data sources and services. Wanaku provides a unified interface for defining routing rules and policies, so that each request reaches the resource that can serve it and the resulting data flows back to the model that asked for it.

For example, in a customer service application, Wanaku could route a user query to a knowledge base for initial information retrieval, then to a sentiment analysis model to gauge the customer's emotion, and finally to a customer relationship management (CRM) system to update the customer's record. Each step reaches its backing service through Wanaku rather than through a dedicated point-to-point integration. The technical implementation likely involves routing rules based on request content, metadata, or model capabilities, which would let routing decisions adapt to each request.
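The routing described above can be pictured as a lookup from a tool name to the service that executes it. The sketch below assumes exactly that and nothing more; `ToolRouter`, `RoutingDemo`, and the tool names are hypothetical illustrations, not Wanaku's API. In MCP terms, the client (usually driven by the model) chooses which tool to call, and the router only resolves that name to a backend.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.Function;

// Hypothetical sketch: route tool invocations by name to backend handlers.
// Not Wanaku's actual API.
class RoutingDemo {
    public static void main(String[] args) {
        ToolRouter router = new ToolRouter();
        // Each registration binds a tool name to the service that actually executes it.
        router.register("knowledge-base-search", q -> "KB results for: " + q);
        router.register("sentiment-analysis", q -> q.contains("angry") ? "negative" : "neutral");
        router.register("crm-update", q -> "CRM record updated: " + q);

        System.out.println(router.route("knowledge-base-search", "reset password")); // prints "KB results for: reset password"
        System.out.println(router.route("sentiment-analysis", "I am angry"));         // prints "negative"
    }
}

/** Maps tool names to backends; unknown names are rejected rather than guessed. */
class ToolRouter {
    private final Map<String, Function<String, String>> routes = new LinkedHashMap<>();

    void register(String toolName, Function<String, String> backend) {
        routes.put(toolName, backend);
    }

    String route(String toolName, String argument) {
        Function<String, String> backend = routes.get(toolName);
        if (backend == null) {
            throw new IllegalArgumentException("No route for tool: " + toolName);
        }
        return backend.apply(argument);
    }
}
```

A plain map keeps the sketch small; content- or metadata-based rules, as the text suggests, would replace the exact-name lookup with a predicate check.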

Standardized Context Provision

Wanaku enforces the Model Context Protocol (MCP), so interactions between AI models and external resources follow one standardized format. This standardization is what makes components interoperable: developers can combine different AI models and data sources with far fewer compatibility concerns. The result is a more plug-and-play approach to AI development, which can shorten development time and reduce integration costs.

Consider an organization that uses LLMs from several vendors. Because each model's client talks to Wanaku over MCP, the context each model receives arrives in the same standardized form, whatever vendor-specific requirements the model has. This removes much of the custom integration code otherwise written for each model. The technical implementation likely involves validating incoming and outgoing messages against the MCP schema and transforming data where needed to keep it compliant.
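MCP messages are JSON-RPC 2.0 objects, so one part of the validation described above can be sketched as an envelope check. This minimal sketch assumes the message has already been parsed into a `Map` (the Java standard library has no JSON parser) and checks only the JSON-RPC envelope, not the full MCP schema; `McpEnvelopeValidator` is a hypothetical name, not part of Wanaku.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Hypothetical sketch: check the JSON-RPC 2.0 envelope of an already-parsed MCP message.
class ValidationDemo {
    public static void main(String[] args) {
        Map<String, Object> good = Map.of("jsonrpc", "2.0", "id", 1, "method", "tools/list");
        Map<String, Object> bad = Map.of("jsonrpc", "1.0", "method", "");
        System.out.println(McpEnvelopeValidator.validate(good)); // prints "[]"
        System.out.println(McpEnvelopeValidator.validate(bad));  // lists two problems
    }
}

final class McpEnvelopeValidator {
    private McpEnvelopeValidator() {}

    /** Returns a list of problems; an empty list means the envelope looks well-formed. */
    static List<String> validate(Map<String, Object> msg) {
        List<String> errors = new ArrayList<>();
        if (!"2.0".equals(msg.get("jsonrpc"))) {
            errors.add("jsonrpc must be \"2.0\"");
        }
        boolean hasMethod = msg.containsKey("method");
        boolean hasResult = msg.containsKey("result");
        boolean hasError = msg.containsKey("error");
        if (hasMethod) {
            // Requests and notifications both carry a method name.
            Object method = msg.get("method");
            if (!(method instanceof String) || ((String) method).isEmpty()) {
                errors.add("method must be a non-empty string");
            }
        } else if (hasResult == hasError) {
            // Responses must carry exactly one of result or error.
            errors.add("response must contain exactly one of result or error");
        }
        if (msg.containsKey("id")) {
            Object id = msg.get("id");
            if (!(id instanceof String || id instanceof Integer || id instanceof Long)) {
                errors.add("id must be a string or integer");
            }
        } else if (!hasMethod) {
            // Notifications may omit the id, but responses must echo it.
            errors.add("response must carry the id of its request");
        }
        return errors;
    }
}
```

Returning a list instead of throwing on the first problem lets a router log every defect in a malformed message at once.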

Abstraction of Data Sources

Wanaku abstracts away the details of interacting with different data sources and services. Developers talk to Wanaku through MCP and do not need to learn the specific protocols or APIs of each individual data source. This lets them focus on building AI-powered features instead of on data integration, while Wanaku handles the underlying communication and data transformation behind a consistent interface.

Imagine an AI-powered research assistant that draws on academic databases, news articles, and social media feeds. Instead of integrating each API separately, the assistant requests the data it needs through Wanaku, which forwards each request to the appropriate source and returns the result. This abstraction is likely achieved through adapters or connectors that translate MCP requests into the protocol each data source requires.
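The adapter idea can be sketched as one interface with one implementation per source type. This illustration rests on assumptions not stated in the text: resources are addressed by URI, and each adapter owns one URI scheme. `DataSourceAdapter`, `AdapterRegistry`, and the `news`/`papers` schemes are hypothetical.

```java
import java.net.URI;
import java.util.HashMap;
import java.util.Map;

// Hypothetical sketch of per-source adapters behind one interface; not Wanaku's actual code.
class AdapterDemo {
    public static void main(String[] args) {
        AdapterRegistry registry = new AdapterRegistry();
        registry.add(new NewsFeedAdapter());
        registry.add(new PaperArchiveAdapter());
        // The caller only supplies a URI; the registry picks the adapter by scheme.
        System.out.println(registry.read(URI.create("news://tech/latest")));    // prints "headlines from tech/latest"
        System.out.println(registry.read(URI.create("papers://library/1234"))); // prints "paper record library/1234"
    }
}

/** Hypothetical connector contract: each adapter handles one URI scheme. */
interface DataSourceAdapter {
    String scheme();
    String read(URI location);
}

class NewsFeedAdapter implements DataSourceAdapter {
    public String scheme() { return "news"; }
    public String read(URI location) {
        // A real adapter would call a news provider's API here.
        return "headlines from " + location.getAuthority() + location.getPath();
    }
}

class PaperArchiveAdapter implements DataSourceAdapter {
    public String scheme() { return "papers"; }
    public String read(URI location) {
        // A real adapter would query an academic database here.
        return "paper record " + location.getAuthority() + location.getPath();
    }
}

/** Dispatches a request to the adapter registered for the URI's scheme. */
class AdapterRegistry {
    private final Map<String, DataSourceAdapter> adapters = new HashMap<>();

    void add(DataSourceAdapter adapter) {
        adapters.put(adapter.scheme(), adapter);
    }

    String read(URI uri) {
        DataSourceAdapter adapter = adapters.get(uri.getScheme());
        if (adapter == null) {
            throw new IllegalArgumentException("No adapter for scheme: " + uri.getScheme());
        }
        return adapter.read(uri);
    }
}
```

Adding a new source then means writing one more adapter class; callers of `AdapterRegistry.read` do not change.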

Technical Implementation: MCP-to-MCP Bridge

Wanaku includes an MCP-to-MCP bridge that connects different MCP-compliant systems. The bridge is useful for distributed AI applications whose components run in different environments or are managed by different teams: those components can communicate over MCP itself, which keeps them interoperable and simplifies integration. This makes AI applications built on the MCP ecosystem more flexible and easier to scale.

For instance, suppose one team builds a data ingestion pipeline that provides context to AI models, while another team builds the AI models themselves. As long as both systems adhere to the MCP standard, the MCP-to-MCP bridge lets the two teams work independently and carries context data from the ingestion pipeline to the models. The technical implementation likely involves establishing a communication channel between the two MCP systems and translating messages as needed to keep them compatible.
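One plausible shape for such a bridge is a proxy that re-exports a downstream server's tools under a namespace and forwards calls to it. The sketch assumes that design; `McpClient`, `InMemoryMcpServer`, `McpBridge`, and the `ingest.` prefix are hypothetical, and the in-memory server stands in for a real MCP transport. Wanaku's own bridge may be structured quite differently.

```java
import java.util.List;
import java.util.Map;
import java.util.function.Function;
import java.util.stream.Collectors;

// Hypothetical sketch of an MCP-to-MCP bridge as a namespacing proxy; not Wanaku's actual code.
class BridgeDemo {
    public static void main(String[] args) {
        Map<String, Function<String, String>> tools = Map.of("fetch-context", a -> "context for " + a);
        McpClient ingestion = new InMemoryMcpServer(tools);
        McpBridge bridge = new McpBridge("ingest", ingestion);
        System.out.println(bridge.listTools());                                  // prints "[ingest.fetch-context]"
        System.out.println(bridge.callTool("ingest.fetch-context", "order-42")); // prints "context for order-42"
    }
}

/** Hypothetical minimal view of an MCP client session. */
interface McpClient {
    List<String> listTools();
    String callTool(String name, String arguments);
}

/** Stand-in for a remote MCP server; a real bridge would reach it over an MCP transport. */
class InMemoryMcpServer implements McpClient {
    private final Map<String, Function<String, String>> tools;

    InMemoryMcpServer(Map<String, Function<String, String>> tools) {
        this.tools = tools;
    }

    public List<String> listTools() {
        return tools.keySet().stream().sorted().collect(Collectors.toList());
    }

    public String callTool(String name, String arguments) {
        Function<String, String> tool = tools.get(name);
        if (tool == null) {
            throw new IllegalArgumentException("Unknown tool: " + name);
        }
        return tool.apply(arguments);
    }
}

/** Re-exports a downstream server's tools under a namespace and forwards calls to it. */
class McpBridge {
    private final String prefix;
    private final McpClient downstream;

    McpBridge(String namespace, McpClient downstream) {
        this.prefix = namespace + ".";
        this.downstream = downstream;
    }

    List<String> listTools() {
        return downstream.listTools().stream().map(t -> prefix + t).collect(Collectors.toList());
    }

    String callTool(String name, String arguments) {
        if (!name.startsWith(prefix)) {
            throw new IllegalArgumentException("Not a bridged tool: " + name);
        }
        // Strip the namespace before forwarding so the downstream server sees its own tool name.
        return downstream.callTool(name.substring(prefix.length()), arguments);
    }
}
```

Prefixing is one simple way to keep tool names from two independently managed servers from colliding when they are exposed together.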