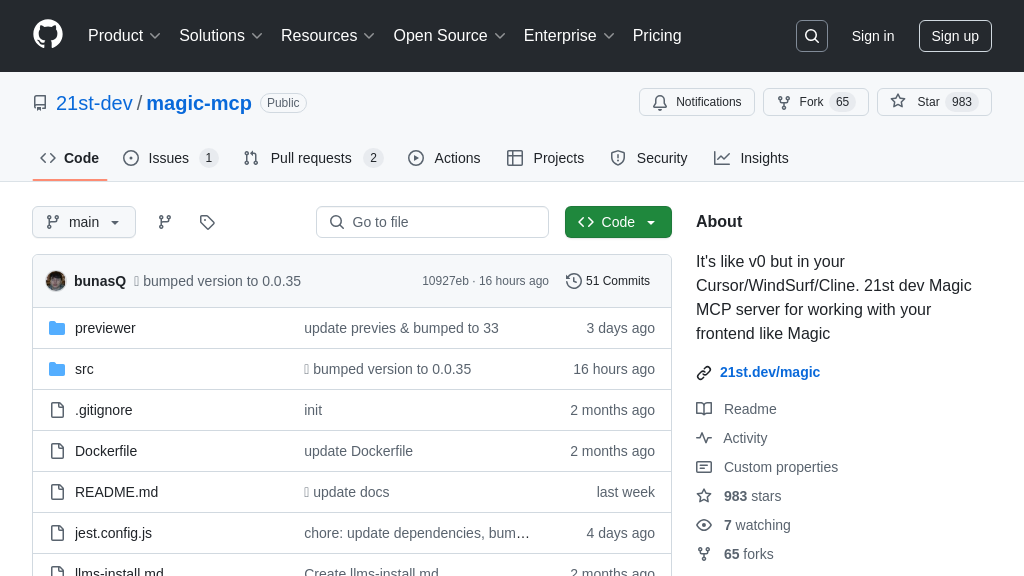

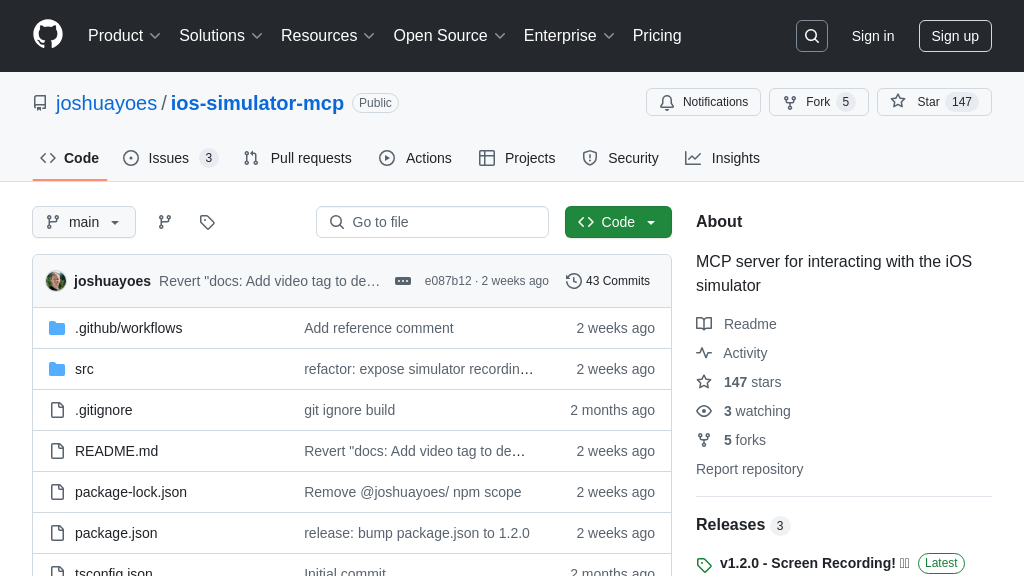

ios-simulator-mcp

ios-simulator-mcp: An MCP server for AI-driven iOS simulator control, enabling automated UI testing and QA workflows.

ios-simulator-mcp Solution Overview

ios-simulator-mcp is an MCP server that connects AI models to iOS simulators for automated UI testing and QA. It lets AI agents interact directly with a simulator: retrieving simulator IDs, reading UI element descriptions, performing taps and swipes, and entering text. This makes it possible to validate the UI immediately after a feature is implemented, checking for consistency and correct behavior.

The server can also take screenshots and record video of the simulator screen, providing visual documentation of test results. With ios-simulator-mcp, developers can automate QA steps in agent mode: verifying UI elements, confirming text input, and validating swipe actions. The server follows the standard MCP client-server architecture and is built on Node.js and Facebook's IDB tool. The goal is to reduce manual UI testing effort and shorten the development cycle.

ios-simulator-mcp Key Capabilities

UI Element Inspection

ios-simulator-mcp lets AI models inspect the simulator's UI by returning a description of every accessibility element currently on screen, so the AI can understand the structure and content of the application's interface. The server uses Facebook's IDB tool to query the simulator's accessibility APIs and extract information such as element type, text label, and position. It returns this information to the AI model in a structured format, allowing the model to reason about the UI and plan its next actions.

For example, an AI agent could use this feature to verify that a specific button is present on the screen and that its label matches the expected value. This is crucial for automated QA testing, ensuring UI consistency across different builds and devices. The AI can also use the element descriptions to dynamically adapt its interaction strategy based on the current state of the application.
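
The button check described above can be sketched in TypeScript. This is a minimal illustration, not the server's actual output format: the `UiElement` and `ElementFrame` shapes, their field names, and the `findElement` helper are all assumptions made for this example.

```typescript
// Hypothetical, simplified shape of one accessibility element.
// The real description returned by the server may use different field names.
interface ElementFrame { x: number; y: number; width: number; height: number; }
interface UiElement { type: string; label: string; frame: ElementFrame; }

// Return the first element that matches both type and label, or undefined.
function findElement(elements: UiElement[], type: string, label: string): UiElement | undefined {
  for (const el of elements) {
    if (el.type === type && el.label === label) {
      return el;
    }
  }
  return undefined;
}

// Sample data standing in for a parsed UI description.
const sampleElements: UiElement[] = [
  { type: "StaticText", label: "Welcome", frame: { x: 20, y: 80, width: 200, height: 24 } },
  { type: "Button", label: "Settings", frame: { x: 20, y: 600, width: 120, height: 44 } },
];

// A QA check passes when the expected button is present with the expected label.
const settingsButton = findElement(sampleElements, "Button", "Settings");
const missingButton = findElement(sampleElements, "Button", "Log out");
```

Here `settingsButton` holds the matching element and `missingButton` is `undefined`, which an agent could report as a failed assertion.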

Coordinate-Based Interaction

AI models can act on the iOS simulator at specific screen coordinates. The server supports taps, text input, and swipe gestures: the AI specifies the coordinates for a tap, the text to enter into a text field, or the start and end points of a swipe. This is essential for automating tasks that need precise control over the UI, such as navigating menus, filling out forms, and triggering specific events.

Consider a scenario where an AI agent needs to navigate to a specific settings page within an application. It can use the UI element inspection feature to identify the coordinates of the settings button and then use the coordinate-based interaction feature to tap on that button, effectively navigating to the desired page. This allows for end-to-end testing of application workflows, ensuring that all features are functioning correctly.
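
One way to turn an inspected element into a tap target is to aim for the center of its frame. The sketch below assumes a frame given as `x`, `y`, `width`, and `height` in screen points; the `SimGesture` request shapes and the helper names are hypothetical and do not reflect the server's real tool parameters.

```typescript
interface ElementFrame { x: number; y: number; width: number; height: number; }
interface ScreenPoint { x: number; y: number; }

// Hypothetical request shapes; the server's real tool names and parameters may differ.
type SimGesture =
  | { kind: "tap"; at: ScreenPoint }
  | { kind: "swipe"; from: ScreenPoint; to: ScreenPoint };

// The center of a frame is a safe tap target for most controls.
function centerOf(frame: ElementFrame): ScreenPoint {
  return { x: frame.x + frame.width / 2, y: frame.y + frame.height / 2 };
}

function tapOn(frame: ElementFrame): SimGesture {
  return { kind: "tap", at: centerOf(frame) };
}

// Screen y grows downward, so swiping up means decreasing y.
function swipeUp(from: ScreenPoint, distance: number): SimGesture {
  return { kind: "swipe", from: from, to: { x: from.x, y: from.y - distance } };
}

// Frame of a settings button as it might appear in a UI description.
const settingsFrame: ElementFrame = { x: 20, y: 600, width: 120, height: 44 };
const tapGesture = tapOn(settingsFrame);
const swipeGesture = swipeUp({ x: 200, y: 500 }, 300);
```

Computing coordinates from the inspected frame, rather than hard-coding them, keeps the interaction valid when layout changes between builds or device sizes.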

Screen Recording

ios-simulator-mcp can record video of the iOS simulator screen. The AI can start and stop a recording, and the video is saved to a file in the user's Downloads directory. This is useful for debugging and auditing, since developers can visually inspect how the application behaves under different conditions. The AI can trigger recordings based on specific events or conditions to capture the relevant context for analysis.

For instance, an AI agent could automatically record a video of the simulator screen when it encounters an unexpected error or crash. Developers can then use the video to diagnose the root cause and implement a fix. Screen recording also helps produce automated demos and tutorials that show the application in action. The default save location is ~/Downloads/simulator_recording_$DATE.mp4.
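
The start/stop flow and the default file name can be modeled as follows. This is a sketch under stated assumptions: the text does not say how `$DATE` is formatted, so a millisecond timestamp is assumed here, and `RecorderState`, `startRecording`, and `stopRecording` are hypothetical names that only track state without touching the simulator.

```typescript
// Assumption: $DATE is rendered as a millisecond timestamp.
// The source text does not state the actual format.
function recordingPath(downloadsDir: string, startedAt: Date): string {
  return `${downloadsDir}/simulator_recording_${startedAt.getTime()}.mp4`;
}

// Hypothetical recorder state: the file being written, or null when idle.
interface RecorderState { activeFile: string | null; }

// Starting while a recording is already active leaves the state unchanged.
function startRecording(state: RecorderState, downloadsDir: string, now: Date): RecorderState {
  if (state.activeFile !== null) {
    return state;
  }
  return { activeFile: recordingPath(downloadsDir, now) };
}

// Stopping returns the idle state together with the finished file, if any.
function stopRecording(state: RecorderState): { state: RecorderState; savedFile: string | null } {
  return { state: { activeFile: null }, savedFile: state.activeFile };
}

const idleRecorder: RecorderState = { activeFile: null };
const activeRecorder = startRecording(idleRecorder, "/Users/me/Downloads", new Date(0));
const stoppedRecorder = stopRecording(activeRecorder);
```

An agent following this model would call the start step before a risky test step and the stop step afterwards, keeping the saved file only when the step fails.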