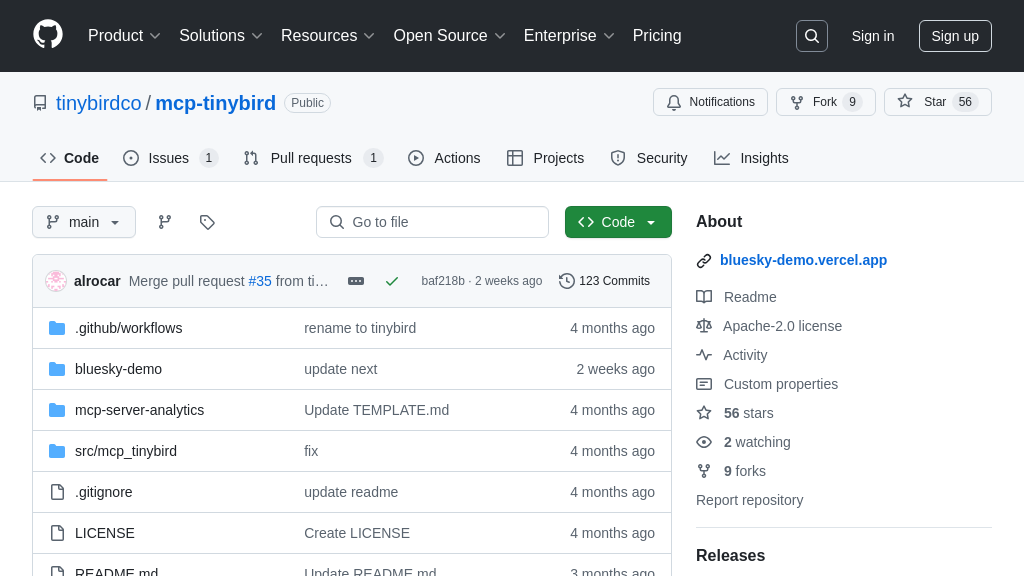

last9-mcp-server

Last9 MCP Server: Real-time production context for AI agents, enabling faster code fixes. Integrates with Claude, Cursor, and Windsurf.

last9-mcp-server Solution Overview

Last9 MCP Server is a Model Context Protocol (MCP) server that brings real-time production context into AI-assisted development workflows. It connects to Last9's observability platform and gives AI agents access to logs, metrics, and traces, so they can diagnose problems and fix code faster and keep applications more reliable.

This server implements several MCP tools, including get_exceptions, get_service_graph, get_logs, get_drop_rules, and add_drop_rule. With them, AI models can query exceptions, inspect the upstream and downstream dependencies of a service, retrieve logs by service or severity, and manage log filtering rules. It works with MCP clients such as Cursor, VS Code (GitHub Copilot), and the Claude desktop app, giving the assistant production context directly inside the development environment.
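MCP clients discover these tools through a `tools/list` request and invoke them with JSON-RPC 2.0 `tools/call` requests. The Python sketch below only illustrates the shape of such a call; the `lookback_minutes` argument is a hypothetical name, and the real argument schema for each tool should be taken from the server's `tools/list` response.

```python
import itertools
import json

# JSON-RPC 2.0 requests that expect a response carry an id; a counter keeps them unique.
_request_ids = itertools.count(1)


def tool_call(name: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }
    return json.dumps(message)


# Hypothetical argument name; take the real schema from the server's tools/list response.
request = tool_call("get_exceptions", {"lookback_minutes": 60})
print(request)
```

In practice the MCP client (Cursor, VS Code, Claude) builds and sends these messages itself; the sketch is only meant to show what the AI model is asking the server for.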

By giving AI models access to real-time production data, the Last9 MCP Server bridges the gap between development and operations. It can be installed via Homebrew or npm and is configured by setting environment variables for authentication and API access. The result is faster debugging, better-informed performance work, and a shorter development cycle.
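As a minimal sketch of the environment-variable setup, the Python snippet below checks that the required variables are present and builds an `mcpServers` entry of the kind Claude Desktop and Cursor read from their MCP configuration files. The variable names `LAST9_AUTH_TOKEN` and `LAST9_BASE_URL`, the server key `last9`, and the `last9-mcp` command are all assumptions for illustration; use the names given in the server's own installation instructions.

```python
import json

# Hypothetical variable names; replace them with the ones the server actually documents.
REQUIRED_VARS = ("LAST9_AUTH_TOKEN", "LAST9_BASE_URL")


def build_client_config(command: str, env: dict) -> dict:
    """Build an `mcpServers` entry, failing fast if a required variable is missing."""
    missing = [name for name in REQUIRED_VARS if not env.get(name)]
    if missing:
        raise ValueError(f"missing environment variables: {', '.join(missing)}")
    return {
        "mcpServers": {
            "last9": {  # hypothetical server key
                "command": command,  # hypothetical binary name
                "env": {name: env[name] for name in REQUIRED_VARS},
            }
        }
    }


# Placeholder values; in practice you might pass dict(os.environ) instead.
example_env = {"LAST9_AUTH_TOKEN": "<your-token>", "LAST9_BASE_URL": "https://example.invalid"}
config = build_client_config("last9-mcp", example_env)
print(json.dumps(config, indent=2))
```

Failing early on a missing variable is a design choice: an MCP server that starts without credentials tends to surface the problem later as opaque tool errors inside the AI client.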

last9-mcp-server Key Capabilities

Real-time Production Context Retrieval

The Last9 MCP server gives AI models real-time production context by exposing logs, metrics, and traces from the Last9 platform. With this context, an AI agent can reason about the current state of applications and infrastructure while generating, debugging, or optimizing code. The server acts as a secure bridge between the AI model and Last9's observability data, which it retrieves through the Last9 API, so developers no longer have to gather that context by hand and feed it to the AI. For example, when an AI suggests a fix for an exception, it can call the get_logs tool to pull the logs recorded around the time of the exception and use them to narrow down the root cause. This shortens debugging and makes AI-driven code suggestions more accurate.
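One way an agent might scope a get_logs call "around the time of the exception" is to center a fixed window on the exception's timestamp. The sketch below assumes a symmetric five-minute window and uses hypothetical argument names (`start_time_iso`, `end_time_iso`); it is not the tool's documented schema.

```python
from datetime import datetime, timedelta, timezone


def log_window(exception_time: datetime, before_min: int = 5, after_min: int = 5) -> dict:
    """Build hypothetical get_logs time-range arguments centered on an exception."""
    if exception_time.tzinfo is None:
        raise ValueError("exception_time must be timezone-aware")
    start = exception_time - timedelta(minutes=before_min)
    end = exception_time + timedelta(minutes=after_min)
    # Argument names are assumptions, not the tool's documented schema.
    return {
        "start_time_iso": start.astimezone(timezone.utc).isoformat(),
        "end_time_iso": end.astimezone(timezone.utc).isoformat(),
    }


seen_at = datetime(2024, 5, 1, 12, 0, tzinfo=timezone.utc)
print(log_window(seen_at))
```

Requiring a timezone-aware timestamp avoids silently querying the wrong window when the exception time and the log store use different local offsets.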

Automated Exception Analysis

The get_exceptions and get_service_graph tools let AI models analyze exceptions automatically. get_exceptions returns the server-side exceptions recorded within a specified time range, so the AI can identify and prioritize the most critical issues. get_service_graph then describes the upstream and downstream services related to a specific exception, along with their throughput, which shows how the exception affects the wider system and which dependencies are involved. The server uses span names to correlate exceptions with service dependencies. For instance, if an AI is tasked with resolving a performance bottleneck, it can combine these tools to trace the bottleneck to its source and see which other services it affects. This speeds up debugging and lets the AI propose more targeted fixes.
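To illustrate the kind of reasoning these two tools support, the sketch below picks the (service, span name) pair with the most exceptions and then splits that service's direct dependencies into upstream callers and downstream callees, busiest first. The record shapes, the `Edge` type, and the requests-per-minute unit are assumptions; the actual tool responses may be structured differently.

```python
from collections import Counter
from typing import NamedTuple


class Edge(NamedTuple):
    caller: str
    callee: str
    throughput_rpm: float  # assumed unit: requests per minute on this edge


def most_frequent_span(exceptions: list[dict]) -> tuple[str, str]:
    """Return the (service, span_name) pair with the most exceptions."""
    if not exceptions:
        raise ValueError("no exceptions to rank")
    counts = Counter((e["service"], e["span_name"]) for e in exceptions)
    (service, span_name), _count = counts.most_common(1)[0]
    return service, span_name


def neighbors(edges: list[Edge], service: str) -> dict:
    """Split a service's direct dependencies into callers and callees, busiest first."""
    upstream = sorted((e for e in edges if e.callee == service), key=lambda e: e.throughput_rpm, reverse=True)
    downstream = sorted((e for e in edges if e.caller == service), key=lambda e: e.throughput_rpm, reverse=True)
    return {
        "upstream": [(e.caller, e.throughput_rpm) for e in upstream],
        "downstream": [(e.callee, e.throughput_rpm) for e in downstream],
    }


exceptions = [
    {"service": "checkout", "span_name": "POST /orders"},
    {"service": "checkout", "span_name": "POST /orders"},
    {"service": "search", "span_name": "GET /items"},
]
edges = [
    Edge("frontend", "checkout", 1200.0),
    Edge("mobile-api", "checkout", 300.0),
    Edge("checkout", "payments", 900.0),
    Edge("checkout", "inventory", 1500.0),
]
service, span_name = most_frequent_span(exceptions)
print(service, span_name, neighbors(edges, service))
```

Ranking by count is only one prioritization heuristic; weighting by the throughput of affected callers would favor failures that hit the busiest paths.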

Log Filtering and Analysis

The get_logs tool retrieves logs within a specified time range, filtered by service name, severity level, or both. Filtering lets an AI agent focus on the most relevant log data, cutting noise and making analysis more efficient. With targeted queries, the AI can spot patterns, anomalies, and potential issues that manual inspection might miss. For example, it could fetch all error logs for a specific service from the last hour to pinpoint the cause of a recent failure, then base its troubleshooting and optimization recommendations on that evidence.
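The filtering semantics described above can be approximated locally, assuming each log record carries a timezone-aware `timestamp`, a `service`, and a `severity` field. This is a hypothetical client-side sketch for illustration, not how get_logs filters on the server; here severity is matched case-insensitively and the time range is treated as half-open.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def filter_logs(
    logs: list[dict],
    start: datetime,
    end: datetime,
    service: Optional[str] = None,
    severity: Optional[str] = None,
) -> list[dict]:
    """Keep records in [start, end) that match the optional service and severity filters."""
    return [
        record
        for record in logs
        if start <= record["timestamp"] < end
        and (service is None or record["service"] == service)
        and (severity is None or record["severity"].lower() == severity.lower())
    ]


now = datetime(2024, 5, 1, 12, 0, tzinfo=timezone.utc)
logs = [
    {"timestamp": now - timedelta(minutes=10), "service": "checkout", "severity": "ERROR", "body": "payment timeout"},
    {"timestamp": now - timedelta(minutes=20), "service": "checkout", "severity": "INFO", "body": "order placed"},
    {"timestamp": now - timedelta(hours=2), "service": "checkout", "severity": "ERROR", "body": "stale error"},
    {"timestamp": now - timedelta(minutes=5), "service": "search", "severity": "ERROR", "body": "index miss"},
]
# "All error logs for the checkout service in the last hour."
recent_errors = filter_logs(logs, now - timedelta(hours=1), now, service="checkout", severity="error")
print([r["body"] for r in recent_errors])  # ['payment timeout']
```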

Control Plane Integration for Log Management

The get_drop_rules and add_drop_rule tools integrate with the Last9 Control Plane, letting AI models manage log ingestion and filtering. get_drop_rules retrieves the existing drop rules, which determine which logs are filtered out before they reach Last9. add_drop_rule creates a new drop rule that filters out logs matching defined criteria; rules can match on attributes and resource attributes, giving granular control over what is ingested. For example, an AI model could analyze log data, identify patterns of irrelevant or redundant logs, and automatically create drop rules to exclude them, reducing storage costs and improving query performance. This way, only the most valuable data is ingested and analyzed.
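A drop rule can be pictured as a set of conditions on a log's attributes and resource attributes. The sketch below assumes a hypothetical rule shape in which every condition must match (logical AND) for a log to be dropped; the `DropRule` fields and the matching semantics are illustrative assumptions, not the Control Plane's actual rule format.

```python
from dataclasses import dataclass, field


@dataclass
class DropRule:
    """Hypothetical drop-rule shape: every listed key/value must match for a log to be dropped."""

    name: str
    attributes: dict[str, str] = field(default_factory=dict)
    resource_attributes: dict[str, str] = field(default_factory=dict)

    def __post_init__(self) -> None:
        # With AND semantics, a rule without conditions would match every log.
        if not self.attributes and not self.resource_attributes:
            raise ValueError(f"drop rule {self.name!r} has no conditions")

    def matches(self, log: dict) -> bool:
        attrs = log.get("attributes", {})
        resource = log.get("resource_attributes", {})
        return all(attrs.get(k) == v for k, v in self.attributes.items()) and all(
            resource.get(k) == v for k, v in self.resource_attributes.items()
        )


def apply_drop_rules(logs: list[dict], rules: list[DropRule]) -> list[dict]:
    """Return only the logs that no rule drops."""
    return [log for log in logs if not any(rule.matches(log) for rule in rules)]


rules = [
    DropRule(
        name="drop-checkout-healthchecks",
        attributes={"http.target": "/healthz"},
        resource_attributes={"service.name": "checkout"},
    )
]
logs = [
    {"attributes": {"http.target": "/healthz"}, "resource_attributes": {"service.name": "checkout"}},
    {"attributes": {"http.target": "/orders"}, "resource_attributes": {"service.name": "checkout"}},
    {"attributes": {"http.target": "/healthz"}, "resource_attributes": {"service.name": "search"}},
]
kept = apply_drop_rules(logs, rules)
print(len(kept))  # 2
```

Rejecting a rule with no conditions is a deliberate safeguard in this sketch: an AI agent that creates rules automatically should not be able to drop all logs by accident.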