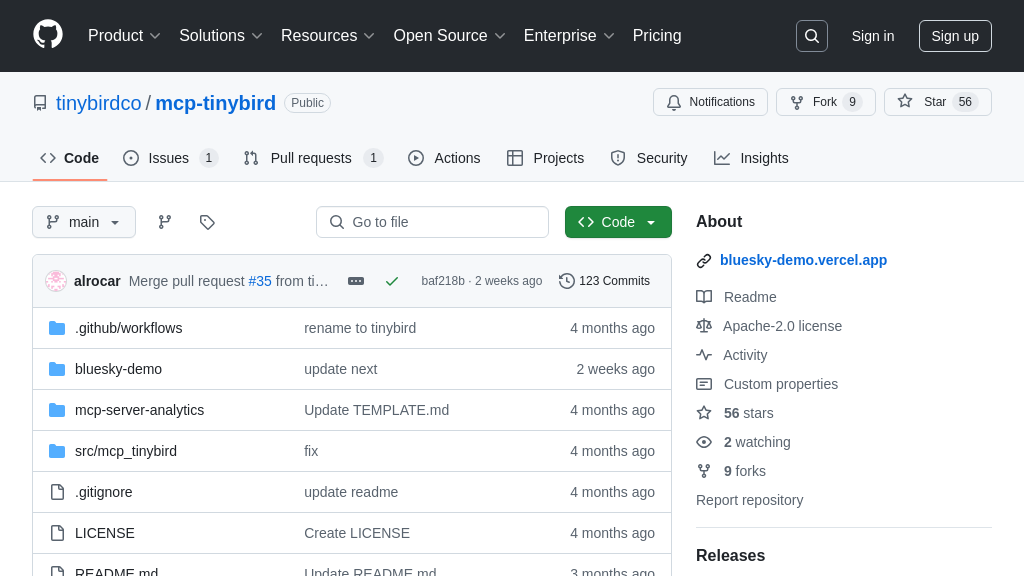

logfire-mcp

Logfire MCP Server: Connect LLMs to your OpenTelemetry data for AI-powered application insights and optimization.

logfire-mcp Solution Overview

Logfire MCP Server connects AI models to the application telemetry stored in Logfire. As a Model Context Protocol (MCP) server, it lets Large Language Models (LLMs) analyze distributed traces, retrieve metrics, and execute SQL queries against your OpenTelemetry data.

The server exposes tools such as find_exceptions, which counts exceptions by file; find_exceptions_in_file, which returns detailed trace information for a single file; and arbitrary_query, which runs custom SQL queries. With direct access to this data, an AI model can reason about application behavior, help diagnose issues, and surface useful insights.

The server works with MCP clients such as Cursor, Claude Desktop, and Cline, and communicates over standard input/output (stdio) or HTTP with Server-Sent Events (SSE). It can be launched with uvx and configured with a Logfire read token. Its main value is AI-assisted observability: models can query telemetry directly to help identify and investigate application issues.
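As a sketch of what a client configuration can look like, the snippet below builds an `mcpServers` entry that tells an MCP client to launch the server with `uvx logfire-mcp` and pass the read token as a `--read-token` argument. The `mcpServers` layout is assumed to match the JSON format used by clients such as Cursor and Claude Desktop; the file location, the `logfire` server name, and the placeholder token are assumptions to check against your client's documentation.

```python
import json


def build_mcp_config(read_token: str, server_name: str = "logfire") -> dict:
    """Return an MCP client config that launches logfire-mcp through uvx.

    Assumes the {"mcpServers": {name: {"command": ..., "args": [...]}}}
    layout used by clients such as Cursor and Claude Desktop.
    """
    return {
        "mcpServers": {
            server_name: {
                # The client spawns this process and talks to it over stdio.
                "command": "uvx",
                "args": ["logfire-mcp", f"--read-token={read_token}"],
            }
        }
    }


if __name__ == "__main__":
    # Placeholder token: replace with a real Logfire read token.
    print(json.dumps(build_mcp_config("YOUR_READ_TOKEN"), indent=2))
```

Putting the token in `args` is the simplest form; if your client supports an `env` field for a server entry, supplying the token through the environment instead keeps it off the command line.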

logfire-mcp Key Capabilities

Trace-Based Exception Analysis

Logfire MCP provides tools for analyzing exceptions recorded in OpenTelemetry traces. The find_exceptions tool aggregates exception counts by file, so developers can quickly see which parts of the codebase fail most often. The find_exceptions_in_file tool returns detailed trace information for the exceptions in a specific file, including the timestamp, message, exception type, function name, line number, and relevant attributes, which helps pinpoint the root cause of an error and the context in which it occurred. For example, a developer might use find_exceptions to see that "app/api.py" has a high exception count, then use find_exceptions_in_file to examine the individual errors and traces in that file. Because these results are structured, an AI model can use them to suggest fixes or to automate parts of a debugging workflow.
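The two-step workflow above (count exceptions per file, then drill into one file) can be sketched with plain SQL. This is not the server's implementation: it uses an in-memory SQLite table with a hypothetical, simplified `records` schema (the columns `filepath`, `function`, `lineno`, and `is_exception` here are assumptions), whereas Logfire's real schema and SQL dialect differ.

```python
import sqlite3


def make_demo_db() -> sqlite3.Connection:
    """Create an in-memory table of sample spans (hypothetical schema)."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        """CREATE TABLE records (
            start_timestamp TEXT, filepath TEXT, function TEXT, lineno INTEGER,
            is_exception INTEGER, exception_type TEXT, message TEXT)"""
    )
    conn.executemany(
        "INSERT INTO records VALUES (?, ?, ?, ?, ?, ?, ?)",
        [
            ("2024-05-01T10:00:00", "app/api.py", "get_user", 42, 1, "KeyError", "missing 'id'"),
            ("2024-05-01T10:05:00", "app/api.py", "get_user", 42, 1, "KeyError", "missing 'id'"),
            ("2024-05-01T10:07:00", "app/db.py", "connect", 17, 1, "TimeoutError", "db timeout"),
            ("2024-05-01T10:08:00", "app/api.py", "list_users", 88, 0, None, "ok"),
        ],
    )
    return conn


def find_exceptions(conn: sqlite3.Connection, limit: int = 100) -> list[tuple[str, int]]:
    """Count exceptions per file, most affected files first."""
    return conn.execute(
        """SELECT filepath, COUNT(*) AS n
           FROM records
           WHERE is_exception = 1 AND filepath IS NOT NULL
           GROUP BY filepath
           ORDER BY n DESC, filepath
           LIMIT ?""",
        (limit,),
    ).fetchall()


def find_exceptions_in_file(conn: sqlite3.Connection, filepath: str, limit: int = 10) -> list[tuple]:
    """Return the most recent exceptions raised in one file."""
    return conn.execute(
        """SELECT start_timestamp, message, exception_type, function, lineno
           FROM records
           WHERE is_exception = 1 AND filepath = ?
           ORDER BY start_timestamp DESC
           LIMIT ?""",
        (filepath, limit),
    ).fetchall()


if __name__ == "__main__":
    db = make_demo_db()
    print(find_exceptions(db))
    print(find_exceptions_in_file(db, "app/api.py"))
```

The first query surfaces "app/api.py" as the hotspot; the second returns its individual exceptions, newest first, with the function and line number attached.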

Arbitrary SQL Query Execution

The arbitrary_query tool executes custom SQL queries against the OpenTelemetry traces and metrics stored in Logfire. This lets users extract exactly the information they need and build reports tailored to their use case, using common SQL operations such as filtering, aggregation, and joins. For instance, a user could query the average response time of a specific service over a given period, or list the most frequent error messages. For an AI model, this turns Logfire into a flexible data source for complex analysis, trend detection, and actionable insights. Under the hood, the server sends each query to Logfire through its API and returns the results in a structured format.
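The two example questions above translate into ordinary SQL. The sketch below runs them against an in-memory SQLite table with a hypothetical `records` schema (`service_name`, `duration` in seconds, `is_exception`, `message` are assumed columns) and returns rows as dictionaries to mimic a structured result. Logfire's SQL dialect is not SQLite, so syntax and column names may need adjusting.

```python
import sqlite3

# Average response time per service within a time window.
AVG_DURATION_BY_SERVICE = """
SELECT service_name, AVG(duration) AS avg_duration, COUNT(*) AS spans
FROM records
WHERE start_timestamp >= '2024-05-01T00:00:00'
GROUP BY service_name
ORDER BY avg_duration DESC
"""

# Most frequent error messages across all recorded exceptions.
TOP_ERROR_MESSAGES = """
SELECT message, COUNT(*) AS occurrences
FROM records
WHERE is_exception = 1
GROUP BY message
ORDER BY occurrences DESC, message
LIMIT 5
"""


def run_query(conn: sqlite3.Connection, sql: str) -> list[dict]:
    """Run a SQL query and return each row as a dict keyed by column name."""
    cur = conn.execute(sql)
    columns = [d[0] for d in cur.description]
    return [dict(zip(columns, row)) for row in cur.fetchall()]


def make_demo_db() -> sqlite3.Connection:
    """Build a toy records table (hypothetical schema, duration in seconds)."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE records (start_timestamp TEXT, service_name TEXT,"
        " duration REAL, is_exception INTEGER, message TEXT)"
    )
    conn.executemany(
        "INSERT INTO records VALUES (?, ?, ?, ?, ?)",
        [
            ("2024-04-30T23:00:00", "api", 5.000, 0, "GET /users"),  # outside window
            ("2024-05-01T09:00:00", "api", 0.120, 0, "GET /users"),
            ("2024-05-01T09:01:00", "api", 0.180, 0, "GET /users"),
            ("2024-05-01T09:02:00", "api", 0.300, 1, "connection reset"),
            ("2024-05-01T09:03:00", "worker", 1.500, 1, "connection reset"),
            ("2024-05-01T09:04:00", "worker", 2.500, 1, "job timed out"),
        ],
    )
    return conn


if __name__ == "__main__":
    db = make_demo_db()
    print(run_query(db, AVG_DURATION_BY_SERVICE))
    print(run_query(db, TOP_ERROR_MESSAGES))
```

Returning dictionaries rather than bare tuples keeps column names attached to values, which makes the result easier for a model to interpret without a separate header.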

OpenTelemetry Schema Retrieval

The get_logfire_records_schema tool retrieves the schema of the OpenTelemetry data stored in Logfire. The schema describes the structure and data types of the stored trace and metric data, which is essential for writing correct SQL queries and interpreting their results. For example, a data scientist can check the available fields and their types before writing a complex query. An AI model benefits in the same way: knowing the exact column names and types lets it generate queries that run correctly, and therefore produce more accurate and relevant insights.
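To illustrate why a schema helps before querying, the sketch below introspects a table, renders it as a `CREATE TABLE` statement that could be placed in a model's context, and checks which requested columns do not exist. It uses SQLite's `PRAGMA table_info` on a hypothetical three-column `records` table; it is not how get_logfire_records_schema works internally, and Logfire's real schema has many more columns.

```python
import sqlite3


def describe_schema(conn: sqlite3.Connection, table: str) -> str:
    """Render a table's columns as a CREATE TABLE statement for an LLM prompt."""
    # Each PRAGMA table_info row is (cid, name, type, notnull, dflt_value, pk).
    # The table name is interpolated directly, so it must come from trusted code.
    rows = conn.execute(f"PRAGMA table_info({table})").fetchall()
    if not rows:
        raise ValueError(f"unknown table: {table}")
    columns = ",\n".join(f"    {row[1]} {row[2]}" for row in rows)
    return f"CREATE TABLE {table} (\n{columns}\n);"


def missing_columns(conn: sqlite3.Connection, table: str, wanted: list[str]) -> list[str]:
    """Return the requested column names that the table does not have."""
    known = {row[1] for row in conn.execute(f"PRAGMA table_info({table})")}
    return [name for name in wanted if name not in known]


if __name__ == "__main__":
    db = sqlite3.connect(":memory:")
    # Hypothetical columns for illustration only.
    db.execute("CREATE TABLE records (start_timestamp TEXT, message TEXT, duration REAL)")
    print(describe_schema(db, "records"))
    print(missing_columns(db, "records", ["message", "span_name"]))
```

Checking column names up front lets a model catch a misremembered field before sending a query, rather than after the query fails.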

Integration Advantages

Logfire MCP integrates with MCP clients such as Cursor, Claude Desktop, and Cline. Configuration examples are available for each client, so users can connect their AI models to Logfire telemetry with little setup. The server can be started manually with uvx or launched and managed automatically by the MCP client. The Logfire read token and base URL can be supplied either as environment variables or as command-line arguments, so users can choose how the token is provided, for example keeping it in the environment rather than on a command line. This lowers the barrier for developers who want to use Logfire telemetry inside their existing AI workflows.
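The sketch below shows one common way to accept a setting either as a command-line flag or as an environment variable, with the flag taking precedence. It is not the server's actual code: the flag names `--read-token` and `--base-url`, the variables `LOGFIRE_READ_TOKEN` and `LOGFIRE_BASE_URL`, and the flag-over-variable precedence are assumptions to verify against the project's README. A base URL of `None` stands for "use the default endpoint".

```python
import argparse
import os
from typing import Mapping, Optional, Sequence


def parse_settings(
    argv: Optional[Sequence[str]] = None,
    env: Optional[Mapping[str, str]] = None,
) -> argparse.Namespace:
    """Resolve the read token and base URL: a CLI flag wins over an env var."""
    env = os.environ if env is None else env
    parser = argparse.ArgumentParser(prog="logfire-mcp")
    # Environment values become defaults, so an explicit flag overrides them.
    parser.add_argument(
        "--read-token",
        default=env.get("LOGFIRE_READ_TOKEN"),
        help="Logfire read token (default: $LOGFIRE_READ_TOKEN)",
    )
    parser.add_argument(
        "--base-url",
        default=env.get("LOGFIRE_BASE_URL"),
        help="Logfire API base URL (default: $LOGFIRE_BASE_URL)",
    )
    args = parser.parse_args(argv)
    if not args.read_token:
        # parser.error prints the message and exits with status 2.
        parser.error("pass --read-token or set LOGFIRE_READ_TOKEN")
    return args


if __name__ == "__main__":
    # Example: token from the environment, base URL from the command line.
    demo = parse_settings(
        ["--base-url", "https://logfire.example.com"],
        {"LOGFIRE_READ_TOKEN": "YOUR_READ_TOKEN"},
    )
    print(demo.base_url)
```

Using environment values as argparse defaults keeps the precedence rule in one place: anything passed explicitly on the command line replaces the default.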