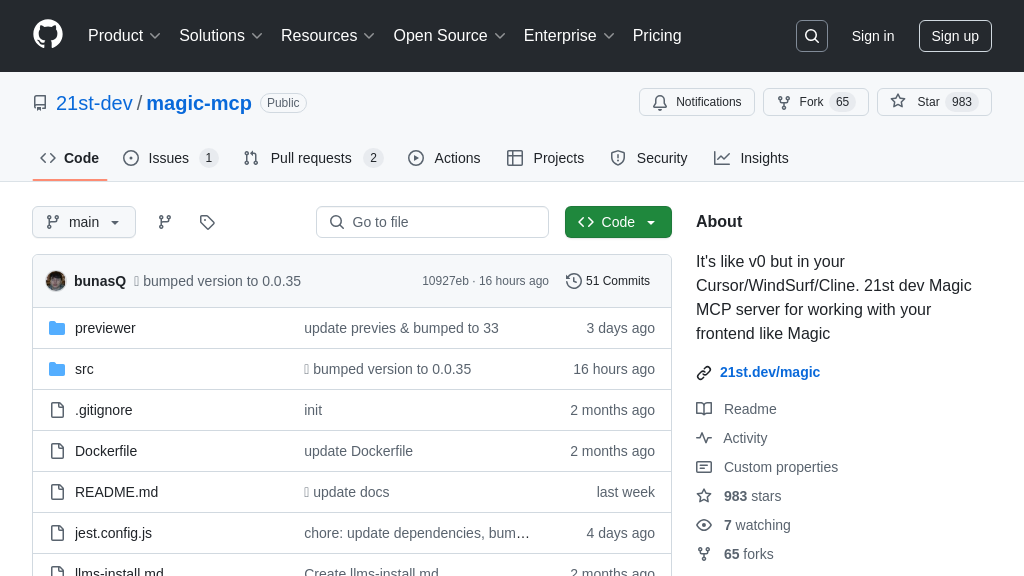

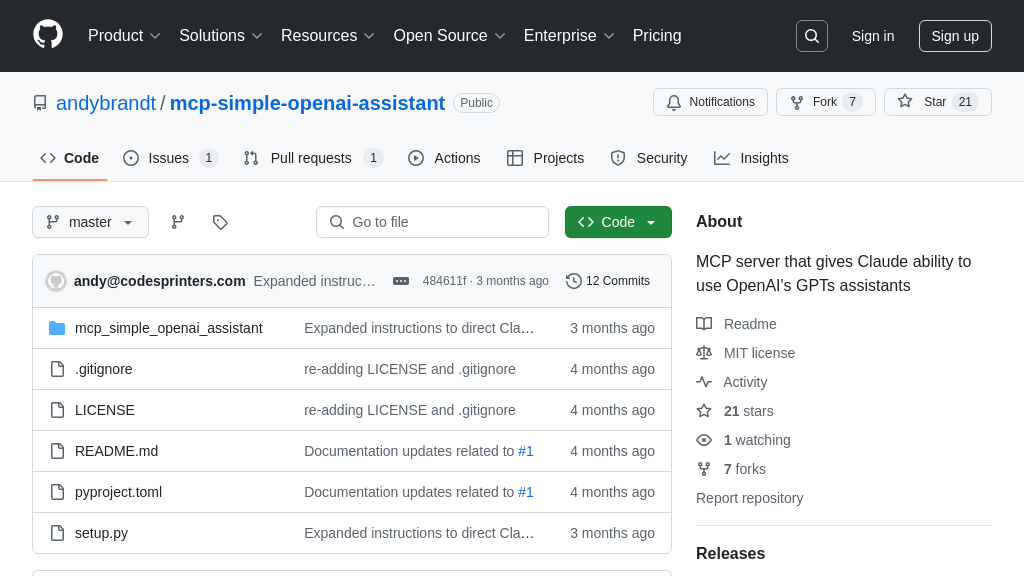

mcp-simple-openai-assistant

An MCP server that lets AI models such as Claude interact with OpenAI Assistants through a standardized protocol.

mcp-simple-openai-assistant Solution Overview

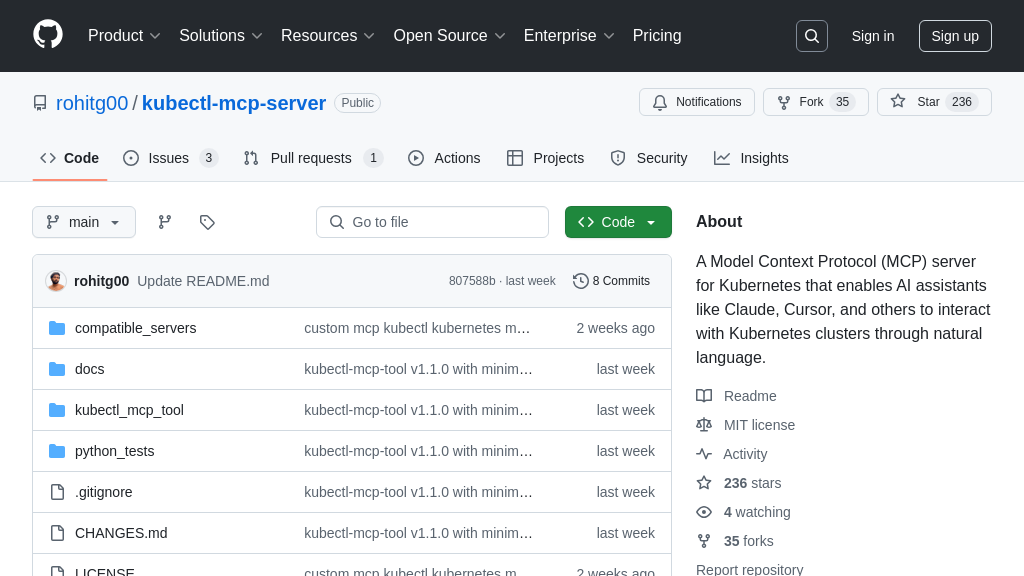

mcp-simple-openai-assistant is an MCP server that connects OpenAI assistants to other AI tools, such as Claude. Through the Model Context Protocol (MCP), those tools can create OpenAI assistants and converse with them, bridging two otherwise separate AI ecosystems. Its key features are creating, managing, and conversing with OpenAI assistants.

The server avoids client timeouts during long OpenAI processing by splitting each exchange into two stages. One call starts the processing, and a later call retrieves the response. The server handles all communication with the OpenAI API, including assistant, thread, and message management, so developers do not have to integrate with that API directly. Its core value is giving AI models like Claude access to OpenAI assistants through a standardized MCP interface. The server is installed with pip and configured by supplying an OpenAI API key.
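
A minimal sketch of the configuration step follows. It assumes the key is supplied through the `OPENAI_API_KEY` environment variable, which is the variable the official OpenAI Python SDK reads by default. Whether this server reads the same variable is an assumption here, and the function name `load_openai_api_key` is hypothetical.

```python
import os
from typing import Mapping, Optional


def load_openai_api_key(env: Optional[Mapping[str, str]] = None) -> str:
    """Return the OpenAI API key, failing fast if it has not been configured."""
    # Assumption: the key lives in the OPENAI_API_KEY environment variable.
    source = os.environ if env is None else env
    key = source.get("OPENAI_API_KEY", "").strip()
    if not key:
        raise RuntimeError("OPENAI_API_KEY is not set; the server cannot reach the OpenAI API")
    return key
```

Checking the key once at startup surfaces a missing key immediately, rather than on the first tool call.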

mcp-simple-openai-assistant Key Capabilities

OpenAI Assistant Creation

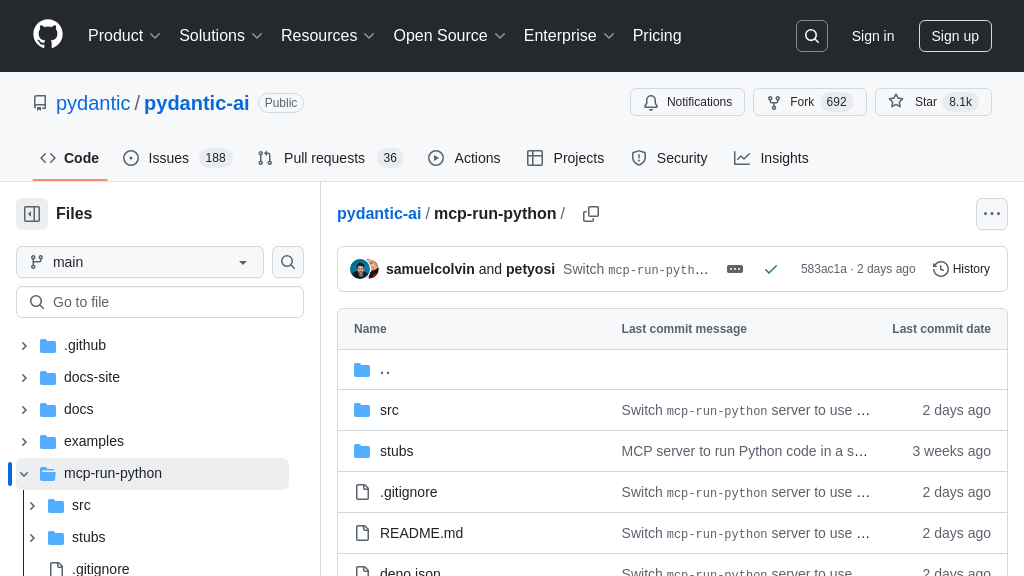

The mcp-simple-openai-assistant server lets AI models like Claude create new OpenAI assistants programmatically. This means specialized assistants can be generated on demand for specific tasks or user needs. In a standardized MCP request, the model specifies the assistant's instructions, model, and tools, and the server makes the corresponding OpenAI API calls. No manual setup in the OpenAI platform is required, which simplifies deploying and managing multiple assistants.

For example, Claude could create an assistant for summarizing legal documents. It would send the server a request with suitable instructions and a retrieval tool enabled. The new assistant can be used immediately, without manual intervention. Internally, the server translates the MCP request into the corresponding OpenAI API call for assistant creation.
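
One way to picture the translation step is a pure function that turns MCP tool arguments into the JSON body of an Assistants API `POST /v1/assistants` request. This is a sketch only: the argument shape (`model`, `name`, `instructions`, `tools`) and the function name are hypothetical, not the server's actual interface. In version 2 of the Assistants API, the tool the text calls "retrieval" is named `file_search`, so the sketch maps one name to the other. The model name is a placeholder.

```python
from typing import Any

# Tool types accepted by this sketch; "retrieval" is the older name of "file_search".
SUPPORTED_TOOLS = {"code_interpreter", "file_search"}
TOOL_ALIASES = {"retrieval": "file_search"}


def build_create_assistant_body(args: dict[str, Any]) -> dict[str, Any]:
    """Translate hypothetical MCP tool arguments into a POST /v1/assistants body."""
    model = args.get("model")
    if not model:
        # The Assistants API requires a model for every assistant.
        raise ValueError("'model' is required")
    body: dict[str, Any] = {"model": model}

    # Optional text fields are copied only when present.
    for key in ("name", "instructions"):
        if args.get(key):
            body[key] = args[key]

    # Normalize tool names and wrap each one as {"type": ...}.
    tools = []
    for tool in args.get("tools", []):
        tool_type = TOOL_ALIASES.get(tool, tool)
        if tool_type not in SUPPORTED_TOOLS:
            raise ValueError(f"unsupported tool: {tool!r}")
        tools.append({"type": tool_type})
    if tools:
        body["tools"] = tools
    return body


# Example: a legal-summary assistant with retrieval enabled.
body = build_create_assistant_body({
    "model": "gpt-4o",
    "name": "Legal summarizer",
    "instructions": "Summarize legal documents in plain language.",
    "tools": ["retrieval"],
})
print(body)
```

With the official Python SDK, a body like this could be passed as keyword arguments, for example `client.beta.assistants.create(**body)`.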

Thread and Message Management

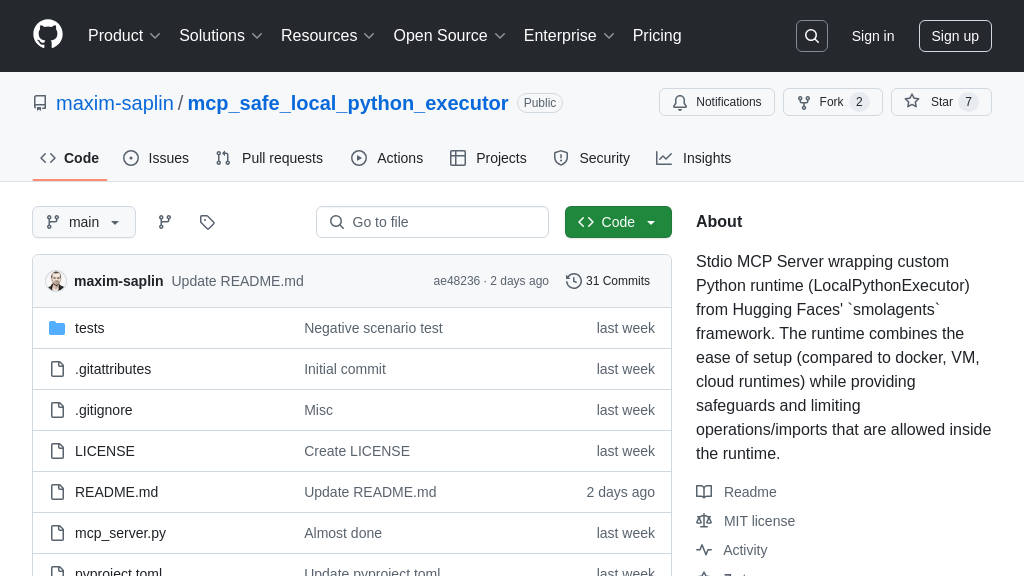

This feature lets AI models start and manage conversations with OpenAI assistants. The server creates conversation threads and sends messages to them, hiding the details of the OpenAI API so the model can focus on the content of the exchange rather than the mechanics. It also retrieves the assistant's responses and returns them to the model in a structured format.

Suppose Claude needs information from a specific domain. It can create a new thread with an OpenAI assistant specialized in that domain and send it a series of questions. The server manages the exchange, delivering each message to the right thread and returning the assistant's replies. Internally, this involves tracking thread IDs, building message payloads, and handling the asynchronous nature of the OpenAI API.
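
The following sketch makes "managing thread IDs and message payloads" concrete. It only builds the sequence of Assistants API requests a server could issue for one question: create a thread, add a user message, start a run, and later read the newest message. The paths and body fields follow the public OpenAI REST API; version 2 also expects an `OpenAI-Beta: assistants=v2` header, which is omitted here. The `ApiCall` type, the function names, and the IDs are hypothetical, and nothing is actually sent.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class ApiCall:
    """One HTTP request to the OpenAI Assistants API (not sent in this sketch)."""
    method: str
    path: str
    body: dict[str, Any] = field(default_factory=dict)


def create_thread_call() -> ApiCall:
    # An empty thread; the API response contains the new thread ID.
    return ApiCall("POST", "/v1/threads")


def send_message_calls(thread_id: str, assistant_id: str, text: str) -> list[ApiCall]:
    # Add the user's message to the thread, then start a run so the assistant answers it.
    return [
        ApiCall("POST", f"/v1/threads/{thread_id}/messages", {"role": "user", "content": text}),
        ApiCall("POST", f"/v1/threads/{thread_id}/runs", {"assistant_id": assistant_id}),
    ]


def latest_reply_call(thread_id: str) -> ApiCall:
    # Newest message first, limited to one: the assistant's reply once the run completes.
    return ApiCall("GET", f"/v1/threads/{thread_id}/messages?order=desc&limit=1")


# Hypothetical IDs for illustration.
sequence = [
    create_thread_call(),
    *send_message_calls("thread_abc", "asst_123", "Summarize recent rulings."),
    latest_reply_call("thread_abc"),
]
for call in sequence:
    print(call.method, call.path, call.body)
```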

Two-Stage Response Handling

MCP clients can time out while an OpenAI assistant is still working, so mcp-simple-openai-assistant retrieves responses in two stages. The first call sends a message to the assistant and starts processing. A second call, possibly several minutes later, retrieves the response. This works around a limitation of current MCP clients, which lack a keep-alive mechanism for long-running operations.
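
From the caller's side, the two stages turn one long wait into a series of short calls. The sketch below assumes the second-stage call is exposed as a function that returns the reply text, or `None` while the assistant is still working. It retries after a fixed delay, up to a bounded number of attempts. `wait_for_reply` and its parameters are hypothetical, and injecting `sleep` keeps the example testable without real waiting.

```python
import time
from typing import Callable, Optional


def wait_for_reply(
    check: Callable[[], Optional[str]],
    delay_s: float = 30.0,
    max_attempts: int = 10,
    sleep: Callable[[float], None] = time.sleep,
) -> Optional[str]:
    """Call a short 'check response' operation until it yields text or attempts run out."""
    for attempt in range(max_attempts):
        reply = check()  # each call returns quickly, so no single call hits a client timeout
        if reply is not None:
            return reply
        if attempt < max_attempts - 1:
            sleep(delay_s)  # wait before asking again
    return None  # still not finished; the caller can decide to try later


# Example with a fake second-stage call that succeeds on the third try.
replies = iter([None, None, "Analysis complete."])
waits: list[float] = []
result = wait_for_reply(lambda: next(replies), delay_s=30.0, max_attempts=5, sleep=waits.append)
print(result, waits)
```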

For instance, if Claude asks the OpenAI assistant to perform a complex data analysis, the first request starts the analysis. After a reasonable delay, Claude sends a second request to retrieve the results. Because neither request waits for the full analysis to finish, Claude does not time out. Internally, the server stores the thread ID and tracks the state of the request, so it can fetch the response once it is available.
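
The following sketch shows the state the server might keep between the two calls, assuming one active run per thread. `PendingRuns`, `start`, and `check` are hypothetical names. The `get_status` and `get_reply` callables stand in for real OpenAI API calls, namely retrieving a run and listing the thread's messages. The set of terminal statuses reflects the run statuses documented for the Assistants API.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

# Run statuses after which a run makes no further progress.
TERMINAL = {"completed", "failed", "cancelled", "expired", "incomplete"}


@dataclass
class PendingRuns:
    """Hypothetical in-memory record of the active run on each thread."""
    runs: dict[str, str] = field(default_factory=dict)  # thread_id -> run_id

    def start(self, thread_id: str, run_id: str) -> str:
        # Stage 1: remember the run and return at once, so the MCP call finishes quickly.
        self.runs[thread_id] = run_id
        return f"Run {run_id} started on {thread_id}; check back later."

    def check(
        self,
        thread_id: str,
        get_status: Callable[[str, str], str],
        get_reply: Callable[[str], str],
    ) -> Optional[str]:
        # Stage 2: look up the stored run and ask for its current status.
        run_id = self.runs.get(thread_id)
        if run_id is None:
            raise KeyError(f"no pending run for {thread_id}")
        status = get_status(thread_id, run_id)
        if status not in TERMINAL:
            return None  # not finished yet (e.g. queued or in_progress); retry later
        del self.runs[thread_id]  # the run is over, so forget it
        if status != "completed":
            return f"Run ended with status: {status}"
        return get_reply(thread_id)


# Example with fake API calls: the first check finds the run still in progress.
store = PendingRuns()
print(store.start("thread_abc", "run_1"))
statuses = iter(["in_progress", "completed"])
fake_status = lambda thread_id, run_id: next(statuses)
fake_reply = lambda thread_id: "Here are the results."
print(store.check("thread_abc", fake_status, fake_reply))  # None: still running
print(store.check("thread_abc", fake_status, fake_reply))
```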

Integration Advantages

mcp-simple-openai-assistant integrates with AI models through the Model Context Protocol (MCP). Because it follows the MCP standard, it offers a consistent and predictable interface to OpenAI assistants. Any AI model or tool in the MCP ecosystem can therefore use those assistants without specific knowledge of the OpenAI API. This standardized interface simplifies building and deploying AI applications that rely on external knowledge sources and specialized tools. It also improves interoperability and reduces the complexity of combining different AI components.
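
Here is what a "consistent and predictable interface" looks like on the wire. An MCP server advertises each tool with a name, a description, and a JSON Schema `inputSchema`, and clients invoke the tool with a JSON-RPC 2.0 `tools/call` request. The sketch below builds such a request and first checks the required arguments against the schema. The `create_assistant` tool and its schema are hypothetical, not this server's actual definitions.

```python
import json
from typing import Any

# Hypothetical tool descriptor, in the shape MCP servers return from "tools/list".
CREATE_ASSISTANT_TOOL: dict[str, Any] = {
    "name": "create_assistant",
    "description": "Create a new OpenAI assistant with the given instructions.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "name": {"type": "string"},
            "instructions": {"type": "string"},
            "model": {"type": "string"},
        },
        "required": ["name", "instructions"],
    },
}


def tools_call_request(tool: dict[str, Any], arguments: dict[str, Any], request_id: int) -> str:
    """Serialize a JSON-RPC 2.0 'tools/call' request for the given tool."""
    # Only the schema's "required" list is checked here, not full JSON Schema validation.
    required = tool["inputSchema"].get("required", [])
    missing = [key for key in required if key not in arguments]
    if missing:
        raise ValueError(f"missing required arguments: {missing}")
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool["name"], "arguments": arguments},
    })


request = tools_call_request(
    CREATE_ASSISTANT_TOOL,
    {"name": "Legal summarizer", "instructions": "Summarize legal documents."},
    1,
)
print(request)
```

Because every MCP tool is described and invoked the same way, a client that can build this request for one tool can call any other without tool-specific code.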