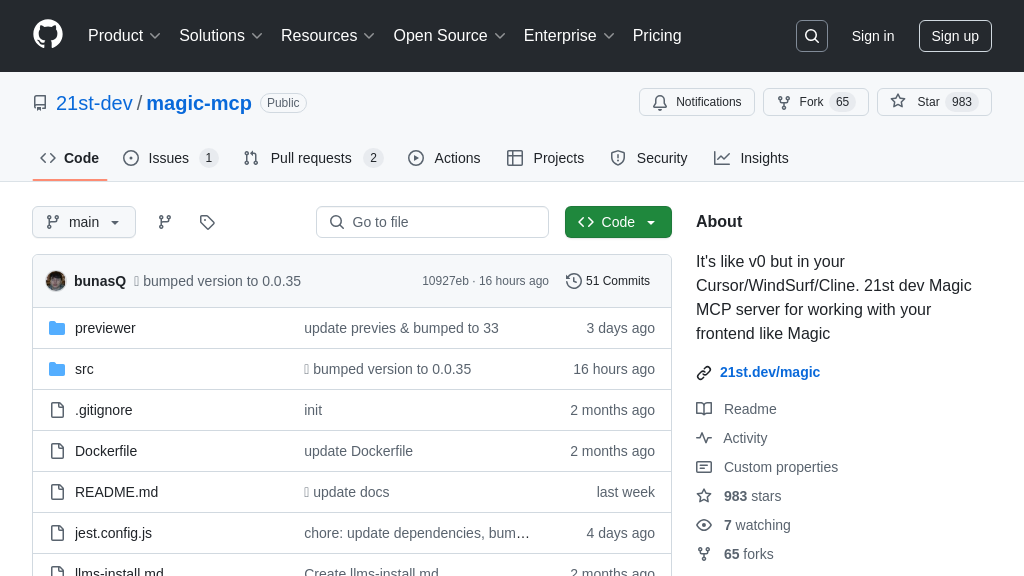

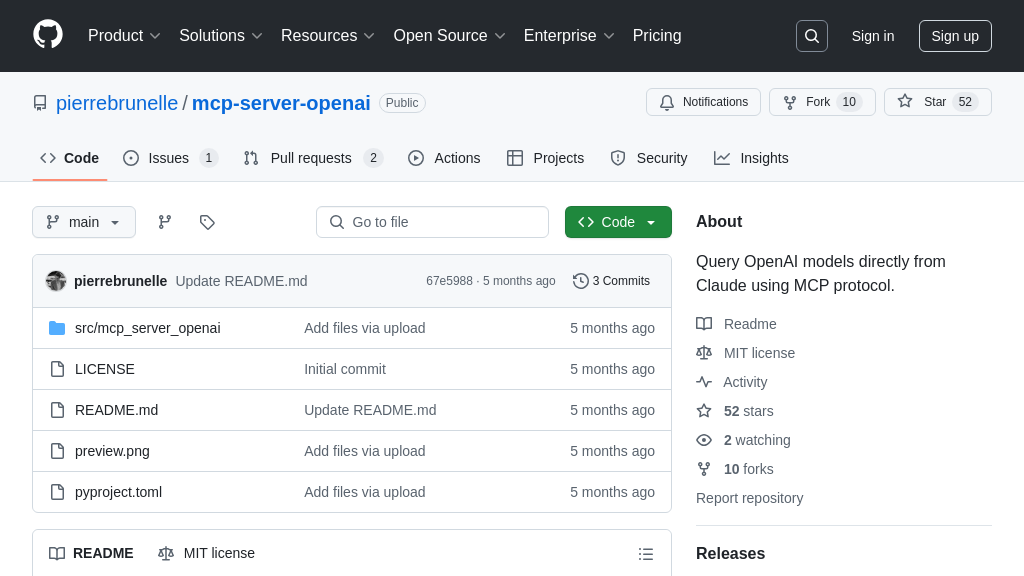

mcp-server-openai

An MCP (Model Context Protocol) server that lets Claude and other MCP clients call OpenAI models through a single, standardized interface.

mcp-server-openai Solution Overview

mcp-server-openai is an MCP server that sits between MCP clients and the OpenAI API. Applications such as Claude send requests over the standardized Model Context Protocol (MCP), and the server forwards them to OpenAI models, so the client never talks to the OpenAI API directly. In this way it acts as a bridge for using models from different providers within the MCP ecosystem.

Key features are MCP-compliant communication, a Python implementation installable with pip, and a short setup: developers add the server to their claude_desktop_config.json file, and Claude can then use OpenAI's models. This avoids a hand-written API integration in each client. The core value is standardized access to AI models, which simplifies development workflows and promotes interoperability.

mcp-server-openai Key Capabilities

Secure OpenAI Model Access

mcp-server-openai acts as a gateway through which MCP clients such as Claude reach OpenAI's models over the standardized MCP interface, so the client needs no direct connection to the OpenAI API. The server holds the OpenAI API key and uses it to authenticate the requests it forwards to OpenAI. This matters when sensitive data is involved or when access to OpenAI models must be tightly controlled. For example, a financial analysis application built on Claude can use OpenAI's language models for sentiment analysis without storing or managing an OpenAI API key in the Claude application itself. The key stays isolated in the server's own controlled environment.
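A minimal sketch of the key-isolation idea, assuming the server reads its key from an `OPENAI_API_KEY` environment variable. The helper name `load_api_key` is hypothetical and not taken from the project's source; the point is only that the key is resolved on the server side and never supplied by the client.

```python
import os
from typing import Mapping, Optional


def load_api_key(env: Optional[Mapping[str, str]] = None) -> str:
    """Return the OpenAI API key from the server's own environment.

    Hypothetical helper: the key is read server-side only and is never
    accepted from, or sent back to, the MCP client.
    """
    env = os.environ if env is None else env
    key = env.get("OPENAI_API_KEY", "").strip()
    if not key:
        # Fail fast at startup instead of forwarding unauthenticated requests.
        raise RuntimeError("OPENAI_API_KEY is not set in the server environment")
    return key
```

Passing the environment as a parameter keeps the function easy to test without touching the real process environment.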

Standardized AI Model Integration

The server promotes interoperability by exposing OpenAI models through a standardized interface. Because it adheres to MCP, any MCP-compliant application can use OpenAI models regardless of its underlying architecture or programming language, which simplifies adding OpenAI's capabilities to an existing AI stack. Consider a company that uses AI models from several providers: with mcp-server-openai it can bring OpenAI models into its workflow without writing custom integration code for each one. This reduces development time and complexity and keeps the infrastructure flexible.
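To make the "standardized interface" concrete: MCP messages are JSON-RPC 2.0, and a client invokes a server tool with the `tools/call` method. The sketch below builds such a request. The tool name `ask-openai` and its `query` argument are assumptions used for illustration, not a confirmed part of this server's interface.

```python
import json
from typing import Any, Dict


def build_tool_call(request_id: int, tool_name: str, arguments: Dict[str, Any]) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


# Hypothetical tool name and arguments, for illustration only.
request = build_tool_call(1, "ask-openai", {"query": "Summarize this paragraph."})
```

Any MCP client produces a message of this shape whatever language it is written in, which is why no per-client integration code is needed.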

MCP Protocol Compliance

mcp-server-openai communicates over MCP, which gives consistent interaction between the server and any MCP-compliant client, such as Claude. The server handles the protocol details, so developers can focus on the core functionality of their AI applications. Compliance also eases maintenance: if a new version of MCP is released, mcp-server-openai can be updated to support it and remain compatible with MCP-compliant clients, reducing the risk of compatibility issues later.
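Version compatibility in MCP is settled during initialization: the client proposes a protocol version, and the server either echoes it back or answers with a version it does support, preferably its newest. The following is a sketch of that rule under stated assumptions; `negotiate_version` is a hypothetical helper, and this is not how mcp-server-openai is known to implement it.

```python
from typing import Sequence


def negotiate_version(requested: str, supported: Sequence[str]) -> str:
    """Pick the protocol version a server should answer with.

    If the client's requested version is supported, echo it back;
    otherwise fall back to the newest version the server supports.
    MCP versions are date strings (YYYY-MM-DD), so lexical order
    matches chronological order.
    """
    if not supported:
        raise ValueError("server must support at least one protocol version")
    if requested in supported:
        return requested
    return max(supported)
```

Supporting a new protocol release then amounts to extending the `supported` list, while clients on older versions keep working.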

Simplified Configuration

Configuration is straightforward, as the claude_desktop_config.json example demonstrates. The configuration file specifies the command that runs the server, any arguments it needs, and environment variables such as the OpenAI API key. A developer can therefore deploy the server on a local machine or in a cloud environment by editing the configuration file and starting the server, which lowers the barrier to entry and keeps development and testing iterations short.
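The three configuration fields named above (command, arguments, environment variables) can be sketched as follows. Claude Desktop reads server entries from the `mcpServers` object in claude_desktop_config.json. The entry name `openai-server`, the module path in `args`, and the placeholder key are assumptions for illustration; consult the project's own instructions for the exact values.

```python
import json
from typing import Dict, List


def make_server_entry(command: str, args: List[str], env: Dict[str, str]) -> dict:
    """Build one server entry: the command, its arguments, and its environment."""
    return {"command": command, "args": args, "env": env}


config = {
    "mcpServers": {
        # Hypothetical entry name; any unique label can be used here.
        "openai-server": make_server_entry(
            command="python",
            args=["-m", "mcp_server_openai"],  # assumed module path
            env={"OPENAI_API_KEY": "your-key-here"},  # placeholder, not a real key
        )
    }
}

# Text suitable for pasting into claude_desktop_config.json.
config_json = json.dumps(config, indent=2)
print(config_json)
```

Because the key is passed through `env`, it lives in the server's process environment and not in the prompts or data handled by the client.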