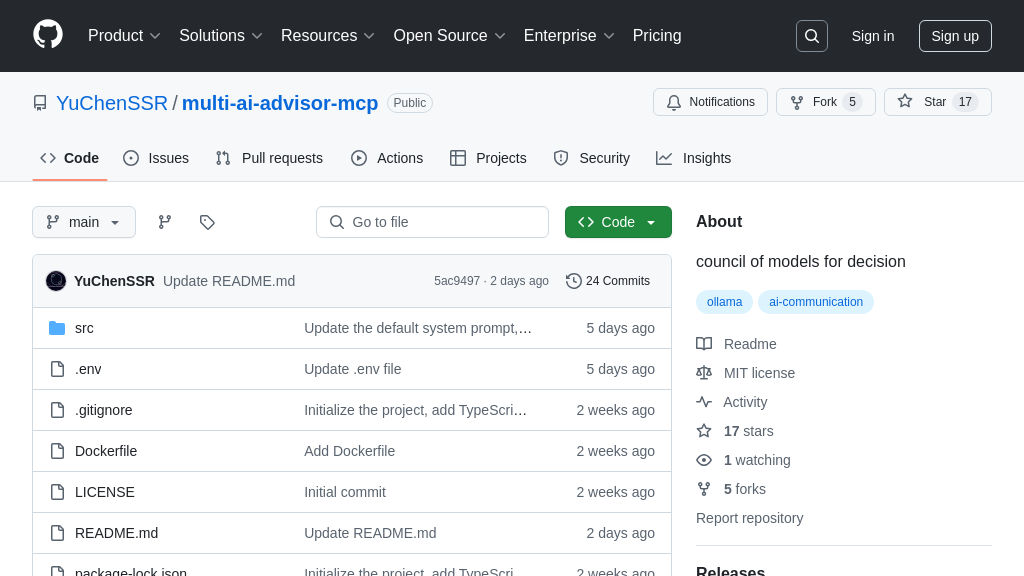

multi-ai-advisor-mcp

Multi-AI-Advisor-MCP: An MCP server for diverse AI insights via multiple Ollama models, enhancing Claude's responses.

multi-ai-advisor-mcp Solution Overview

Multi-Model Advisor (multi-ai-advisor-mcp) is a Model Context Protocol (MCP) server that gives an AI assistant several perspectives on the same question. It queries multiple Ollama models and combines their responses in a "council of models" approach, so that an assistant such as Claude can synthesize the different viewpoints into a more comprehensive answer.

The server lets developers assign a distinct role to each model through customizable system prompts and list the Ollama models available on their system. It integrates with Claude for Desktop so that Claude can draw on the other models when giving advice. It is built with Node.js, communicates over standard input/output, and can be extended with HTTP/SSE. Its core value is that the calling AI receives several differently framed opinions to weigh before it answers, which can lead to more informed and nuanced output. It can be installed through Smithery or set up manually.

multi-ai-advisor-mcp Key Capabilities

Multi-Model Concurrent Query

The core function of multi-ai-advisor-mcp is querying multiple Ollama-hosted models with the same question in parallel. Instead of relying on a single model's perspective, it draws on several models, each of which may have been trained or fine-tuned on different data or for different tasks. Querying them in parallel broadens the range of insights and can reduce the influence of any one model's bias. The system then aggregates the responses so that the caller sees a diverse set of viewpoints on the same query. This is particularly useful when a thorough answer requires considering multiple angles, as in complex problem-solving or creative brainstorming.

For example, a user might ask, "What are the potential risks and benefits of implementing a new AI-driven marketing strategy?" The multi-ai-advisor-mcp could query one model specialized in risk assessment, another in market trend analysis, and a third in ethical considerations. The combined responses would offer a more balanced and thorough evaluation than any single model could provide.

Technically, this is handled by the query-models tool, which orchestrates parallel requests to the Ollama API, one for each configured model.
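The parallel fan-out described above can be sketched as follows. This is a minimal illustration, not the server's actual code: the names `Advisor`, `buildGenerateBody`, `queryAdvisors`, and `FetchLike` are hypothetical, and the base URL assumes Ollama's default local port. The request shape follows Ollama's `/api/generate` endpoint with streaming disabled.

```typescript
// Sketch: send one question to several Ollama models in parallel.
// All identifiers here are illustrative assumptions, not the project's API.

interface Advisor {
  model: string;        // Ollama model tag (example value: "gemma3:1b")
  systemPrompt: string; // role or persona for this model
}

interface AdvisorReply {
  model: string;
  ok: boolean;
  text: string; // model output, or an error description when ok is false
}

// The small part of fetch this sketch relies on, so a stub can be injected.
type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string },
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>;

// Build the JSON body for Ollama's /api/generate endpoint (non-streaming).
function buildGenerateBody(question: string, advisor: Advisor) {
  return {
    model: advisor.model,
    prompt: question,
    system: advisor.systemPrompt,
    stream: false,
  };
}

async function queryAdvisors(
  question: string,
  advisors: Advisor[],
  fetchImpl: FetchLike,
  baseUrl = "http://localhost:11434", // assumed default Ollama address
): Promise<AdvisorReply[]> {
  // Start every request before awaiting any of them, so they run concurrently.
  const requests = advisors.map(async (advisor): Promise<AdvisorReply> => {
    try {
      const res = await fetchImpl(`${baseUrl}/api/generate`, {
        method: "POST",
        headers: { "Content-Type": "application/json" },
        body: JSON.stringify(buildGenerateBody(question, advisor)),
      });
      if (!res.ok) throw new Error(`HTTP ${res.status}`);
      const data = (await res.json()) as { response?: string };
      return { model: advisor.model, ok: true, text: data.response ?? "" };
    } catch (err) {
      // One failing model should not discard the other advisors' answers.
      return { model: advisor.model, ok: false, text: String(err) };
    }
  });
  // Results come back in the same order as the advisors array.
  return Promise.all(requests);
}
```

Catching errors per request is a design choice in this sketch: a model that is missing or slow to load yields a failed entry instead of rejecting the whole batch. In Node.js 18 or later, the built-in `fetch` should be usable as `fetchImpl`.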

Customizable Model Roles

Each model in multi-ai-advisor-mcp can be assigned a distinct role or persona through a customizable system prompt. This lets developers shape each model's behavior and output so that the combined responses are targeted as well as diverse. With specific roles defined, the system simulates a "council of advisors" in which each member contributes a particular expertise or perspective, which adds depth to the insights available for decision-making.

Imagine a scenario where a company is developing a new product. Using multi-ai-advisor-mcp, they could assign roles such as "market analyst," "user experience expert," and "technical feasibility assessor" to different models. Each model would then analyze the product concept from its assigned perspective, providing targeted feedback that can be synthesized into a comprehensive product development strategy.

The system prompts are configured via environment variables (e.g., GEMMA_SYSTEM_PROMPT, LLAMA_SYSTEM_PROMPT), allowing for easy customization without modifying the core code.
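As a rough sketch of how environment-based prompts might be resolved: the variable names `GEMMA_SYSTEM_PROMPT` and `LLAMA_SYSTEM_PROMPT` come from the text above, while the `RoleSpec` type, the `resolveSystemPrompts` helper, the model tags, and the fallback prompt are assumptions made for illustration.

```typescript
// Sketch: look up a system prompt per model from environment-style variables.
// Only the *_SYSTEM_PROMPT variable names come from the text; the rest is assumed.

type EnvVars = Record<string, string | undefined>;

const FALLBACK_PROMPT = "You are a helpful AI assistant."; // hypothetical default

interface RoleSpec {
  model: string;     // Ollama model tag (example values below)
  envPrefix: string; // prefix of the <PREFIX>_SYSTEM_PROMPT variable
}

function resolveSystemPrompts(
  roles: RoleSpec[],
  env: EnvVars,
): Record<string, string> {
  const resolved: Record<string, string> = {};
  for (const { model, envPrefix } of roles) {
    const value = env[`${envPrefix}_SYSTEM_PROMPT`]?.trim();
    // Unset or blank variables fall back to the default prompt.
    resolved[model] = value ? value : FALLBACK_PROMPT;
  }
  return resolved;
}

// Example: only the Gemma prompt is set, so the Llama model gets the fallback.
const resolvedPrompts = resolveSystemPrompts(
  [
    { model: "gemma3:1b", envPrefix: "GEMMA" },
    { model: "llama3.2:1b", envPrefix: "LLAMA" },
  ],
  { GEMMA_SYSTEM_PROMPT: "You are a creative advisor. Keep answers brief." },
);
console.log(resolvedPrompts);
```

In a real Node.js process, `process.env` could be passed as the second argument in place of the literal object used here.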

Seamless Claude Integration

multi-ai-advisor-mcp is designed to integrate with Claude for Desktop, giving Claude access to the responses of multiple Ollama models. Users can apply the "council of advisors" approach directly within their existing Claude workflow, without switching between tools or platforms. Once Claude is configured to recognize the multi-ai-advisor-mcp server, the advisor can be invoked from within a conversation.

For instance, a user working on a complex research project within Claude can use multi-ai-advisor-mcp to gather diverse perspectives on a particular topic. By prompting Claude to use the multi-model advisor, the user can receive synthesized responses from multiple models, each offering a unique viewpoint that enriches the research process.

Integration is achieved by adding the MCP server information to Claude's configuration file (claude_desktop_config.json), specifying the command and arguments needed to launch the multi-ai-advisor-mcp server.
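The shape of that configuration entry can be illustrated with a small helper that merges a server definition into an existing config object without dropping servers that are already registered. The `mcpServers`, `command`, and `args` keys follow the Claude for Desktop configuration format; the helper name `addMcpServer`, the server name, the other server, and the file path are placeholders assumed for this sketch.

```typescript
// Sketch: add an MCP server entry to the contents of claude_desktop_config.json.
// The server name and paths below are placeholders, not values from the project.

interface McpServerEntry {
  command: string; // executable used to launch the server
  args: string[];  // arguments passed to that executable
}

interface ClaudeDesktopConfig {
  mcpServers?: Record<string, McpServerEntry>;
  [otherKey: string]: unknown; // keep any unrelated settings untouched
}

function addMcpServer(
  config: ClaudeDesktopConfig,
  serverName: string,
  entry: McpServerEntry,
): ClaudeDesktopConfig {
  // Return a new object so the caller's original config is not mutated.
  return {
    ...config,
    mcpServers: { ...(config.mcpServers ?? {}), [serverName]: entry },
  };
}

const updatedConfig = addMcpServer(
  { mcpServers: { "other-server": { command: "npx", args: ["some-other-server"] } } },
  "multi-model-advisor",
  {
    command: "node",
    args: ["/absolute/path/to/multi-ai-advisor-mcp/build/index.js"],
  },
);

// Prints the JSON that would be written back to the config file.
console.log(JSON.stringify(updatedConfig, null, 2));
```

The absolute path must point at wherever the server's entry script actually lives on the machine. Claude for Desktop generally needs a restart before it picks up a changed configuration file.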

Dynamic Model Discovery

The list-available-models tool lets users discover which Ollama models are available on their system. This matters when configuring multi-ai-advisor-mcp, because it shows which models are currently installed and ready to use, so users can pick the most appropriate ones for their needs. Because the list is retrieved on demand, the configuration can keep pace as models are added or removed.

For example, a developer might use the list-available-models tool to check if a newly installed model is correctly recognized by the system. This allows them to quickly troubleshoot any configuration issues and ensure that the model is ready to be used in the multi-model querying process.

This tool directly interacts with the Ollama API to retrieve the list of available models, providing an up-to-date view of the system's capabilities.
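A minimal sketch of such a lookup, assuming Ollama's `/api/tags` endpoint, which returns a JSON object with a `models` array whose entries carry a `name` field. The helper names `extractModelNames`, `listAvailableModels`, and `missingModels` are hypothetical, and the HTTP call is injected so that the parsing logic can be exercised without a running Ollama instance.

```typescript
// Sketch: list installed Ollama models and spot configured models that are missing.
// Function names are illustrative; the base URL assumes Ollama's default port.

// Pull model names out of a /api/tags response, tolerating malformed payloads.
function extractModelNames(payload: unknown): string[] {
  if (typeof payload !== "object" || payload === null) return [];
  const models = (payload as { models?: unknown }).models;
  if (!Array.isArray(models)) return [];
  const names: string[] = [];
  for (const entry of models) {
    const candidate = (entry as { name?: unknown } | null)?.name;
    if (typeof candidate === "string") names.push(candidate);
  }
  return names.sort();
}

// A function that performs a GET request and resolves to the parsed JSON body.
type GetJson = (url: string) => Promise<unknown>;

async function listAvailableModels(
  getJson: GetJson,
  baseUrl = "http://localhost:11434", // assumed default Ollama address
): Promise<string[]> {
  return extractModelNames(await getJson(`${baseUrl}/api/tags`));
}

// Report configured models that are not installed, e.g. for troubleshooting.
function missingModels(configured: string[], installed: string[]): string[] {
  return configured.filter((model) => installed.indexOf(model) === -1);
}

// Example with a canned response in place of a live Ollama server.
const cannedResponse = {
  models: [{ name: "llama3.2:1b" }, { name: "gemma3:1b" }],
};
const installedModels = extractModelNames(cannedResponse);
console.log(missingModels(["gemma3:1b", "deepseek-r1:1.5b"], installedModels));
```

Comparing the configured model list against the installed one is the kind of check the troubleshooting scenario above describes: a newly installed model that does not appear in the list points to an installation or naming problem.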