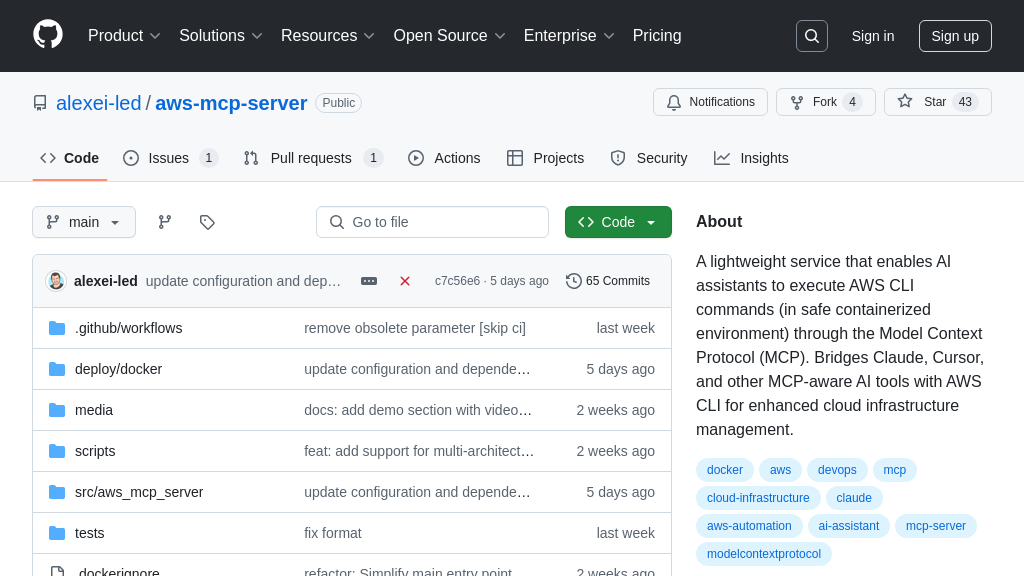

website-downloader

Website Downloader: An MCP server for downloading entire websites, enabling AI models to access and process web data offline.

website-downloader Solution Overview

Website Downloader is an MCP server that gives AI models access to entire websites. It uses wget to download a site recursively, preserving its original structure and converting links so the copy works locally. AI models can then analyze the content offline, extract data from it, or use the site structure for training purposes.

Key features include customizable download depth, automatic inclusion of CSS, images, and other page requisites, and domain restriction to keep the download focused. Because the site is fetched once and stored locally, AI models can work from a complete copy instead of scraping pages in real time, which simplifies data ingestion for web analysis and content understanding. The server is added to MCP through a short configuration entry, so it fits any AI project that needs website data.

website-downloader Key Capabilities

Complete Website Downloading

The website-downloader MCP server uses wget to download entire websites, including associated files such as CSS, images, and JavaScript, so the local copy closely matches the original site. The server downloads pages recursively, following links to a specified depth. The default depth is infinite, which mirrors the entire site structure. This matters for AI models that need a site's full content and structure for tasks like content analysis, data extraction, or training language models. For example, an AI model designed to summarize news articles from a specific website could use this tool to download the entire archive as training data. The depth parameter controls the scope of the download and prevents excessive data retrieval when only a portion of the site is needed.
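The depth behavior described above can be sketched as a small helper that maps a depth setting to wget's recursion flags. The function name `recursionArgs` and the convention that a missing depth means "unlimited" are assumptions made for illustration; the flags themselves (`--recursive`, `--level`, `--page-requisites`) are standard wget options, but the exact set the server passes is not confirmed here.

```javascript
// Hypothetical helper: maps a depth setting to wget's recursion flags.
// The name and the "missing depth means unlimited" rule are assumptions.
function recursionArgs(depth) {
  // wget accepts "inf" as an unlimited recursion level.
  const level = depth === undefined || depth === null ? "inf" : String(depth);
  if (level !== "inf" && !/^\d+$/.test(level)) {
    throw new RangeError(`depth must be a non-negative integer, got: ${depth}`);
  }
  return [
    "--recursive",       // follow links starting from the given page
    `--level=${level}`,  // stop after this many link hops
    "--page-requisites", // also fetch the CSS, images, and scripts each page needs
  ];
}

console.log(recursionArgs(2).join(" "));
// prints "--recursive --level=2 --page-requisites"
```

A depth of 1 would fetch the start page and the pages it links to directly, which is often enough when only one section of a site is needed.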

Local Link Conversion

A key feature of the website-downloader is its ability to convert links within the downloaded website so that they work locally. Hyperlinks, image sources, and other resource references that point to downloaded files are rewritten to point to those files on the local file system. This is essential for AI models that need to navigate the downloaded website as if it were still live on the internet. For instance, an AI model designed to check page structure or identify broken links can use this feature to simulate a user moving through the site. Without this conversion, the model would follow links that lead back to the live site or to paths that do not exist locally, and could not properly analyze the website's structure and content. The conversion keeps the downloaded pages and their assets navigable offline, giving the model a realistic environment to work in.
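To make the idea concrete, the sketch below models the kind of rewrite that link conversion performs: given the URL of a saved page and a link found in it, it computes the relative path from one local file to the other. This is an illustrative model only. wget performs the conversion internally, and `toLocalLink` and the assumed `<hostname>/<url path>` mirror layout are hypothetical names and simplifications, not part of wget or the server.

```javascript
const path = require("node:path");

// Illustrative model of link conversion (hypothetical, not wget's code):
// returns the relative link a saved page needs to reach another saved file.
function toLocalLink(fromPageUrl, targetUrl) {
  const from = new URL(fromPageUrl);
  const target = new URL(targetUrl, from); // also resolves relative hrefs
  // Assumed mirror layout: <hostname>/<url path>
  const fromFile = path.posix.join(from.hostname, from.pathname);
  const targetFile = path.posix.join(target.hostname, target.pathname);
  return path.posix.relative(path.posix.dirname(fromFile), targetFile);
}

console.log(toLocalLink("https://example.com/foo/doc.html", "/bar/img.gif"));
// prints "../bar/img.gif"
```

Relative links of this kind keep working when the whole mirror directory is moved or copied, which is why a converted site can be opened from any location on disk.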

Domain Restriction

The website-downloader restricts downloads to the same domain as the initial URL, using wget's built-in domain restriction flags. This keeps the tool from downloading content from external websites that the target site links to, so the AI model only processes content relevant to the specified website. For example, if an AI model is designed to analyze a specific company's website, restricting the download to that domain excludes irrelevant third-party content such as advertisements or social media feeds. Focusing the download on the intended content improves both the accuracy and the efficiency of the model's analysis.
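One way such a restriction could be derived from the initial URL is sketched below. The helper name `domainArgs` is hypothetical, and the choice of `--domains` together with `--no-parent` is an assumption about which wget options are involved; both are real wget flags, but the source only says that built-in domain restriction flags are used.

```javascript
// Hypothetical helper: derives wget host-restriction flags from the start URL.
function domainArgs(startUrl) {
  const { protocol, hostname } = new URL(startUrl); // throws TypeError if invalid
  if (protocol !== "http:" && protocol !== "https:") {
    throw new Error(`unsupported protocol: ${protocol}`);
  }
  return [
    `--domains=${hostname}`, // limit followed links to this domain
    "--no-parent",           // never ascend above the starting directory
  ];
}

console.log(domainArgs("https://docs.example.com/guide/").join(" "));
// prints "--domains=docs.example.com --no-parent"
```

Parsing the URL first also rejects malformed input before any command is built.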

Technical Implementation

The website-downloader MCP server is implemented in JavaScript using Node.js. It leverages the wget command-line tool to perform the actual website downloading. The server exposes a download_website tool that accepts the URL of the website to download, an optional output path, and an optional depth parameter. The server then executes the wget command with the appropriate parameters, including flags to recursively download the website, convert links for local use, add appropriate file extensions, and restrict downloads to the same domain. The use of wget provides a robust and efficient solution for website downloading, while the JavaScript implementation allows for easy integration with other MCP components. The server is designed to be easily installed and configured, requiring only a few steps to build and add to the MCP settings.
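The flow described above (tool arguments in, wget command out) might look roughly like the following sketch. It is not the server's actual source: the argument field names `url`, `outputPath`, and `depth`, the function names, and the exact flag list are assumptions based on the description, and the MCP wiring that registers the download_website tool is omitted.

```javascript
const { spawn } = require("node:child_process");

// Assumed argument shape for the download_website tool:
// { url, outputPath?, depth? }. Field names are hypothetical.
function buildWgetArgs({ url, outputPath = process.cwd(), depth }) {
  const parsed = new URL(url); // rejects malformed URLs before wget runs
  return [
    "--recursive",                      // download linked pages
    `--level=${depth ?? "inf"}`,        // recursion depth, unlimited by default
    "--page-requisites",                // CSS, images, and other requisites
    "--convert-links",                  // rewrite links for local use
    "--adjust-extension",               // add appropriate file extensions
    `--domains=${parsed.hostname}`,     // stay on the starting domain
    "--no-parent",                      // do not ascend above the start path
    `--directory-prefix=${outputPath}`, // where the mirror is written
    parsed.href,
  ];
}

// Runs wget without a shell, so the URL is never parsed as shell syntax.
function downloadWebsite(toolArgs) {
  return new Promise((resolve, reject) => {
    const child = spawn("wget", buildWgetArgs(toolArgs), { stdio: "inherit" });
    child.on("error", reject); // for example, wget is not installed
    child.on("close", (code) =>
      code === 0 ? resolve() : reject(new Error(`wget exited with code ${code}`))
    );
  });
}
```

A call such as `downloadWebsite({ url: "https://example.com/", outputPath: "./mirror", depth: 2 })` would then start the download. Keeping argument construction in a separate pure function makes it possible to test the command line without touching the network.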