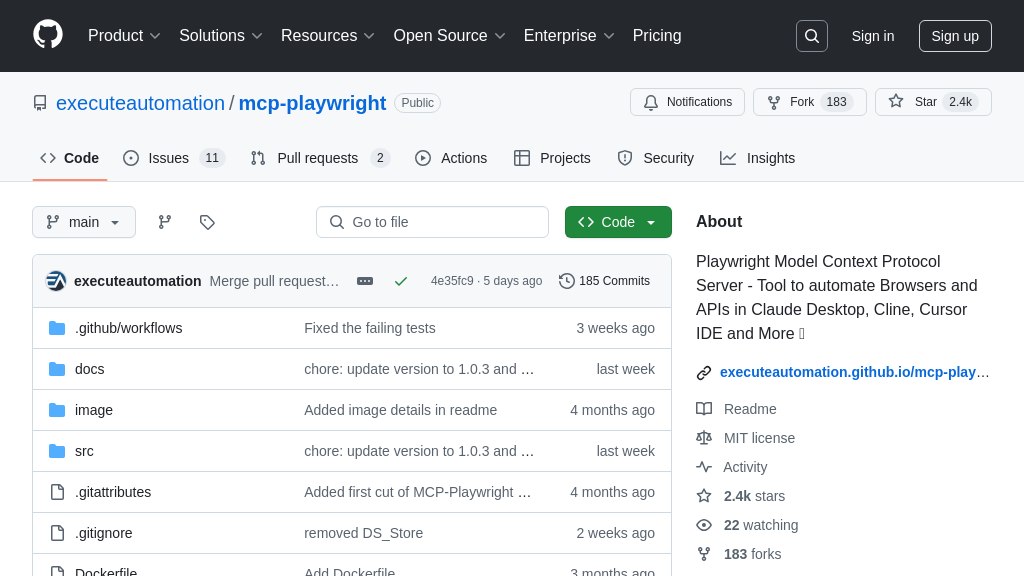

g-search-mcp

G-Search-MCP: An MCP server for parallel Google searches, delivering structured JSON results for AI integration.

g-search-mcp Solution Overview

G-Search MCP is an MCP (Model Context Protocol) server that gives AI models access to Google search results in a structured form. It accepts multiple keywords in one request and searches for them in parallel, which shortens the time needed to collect results. It addresses a common developer pain point: extracting information from Google Search and structuring it so that an AI model can consume it directly.

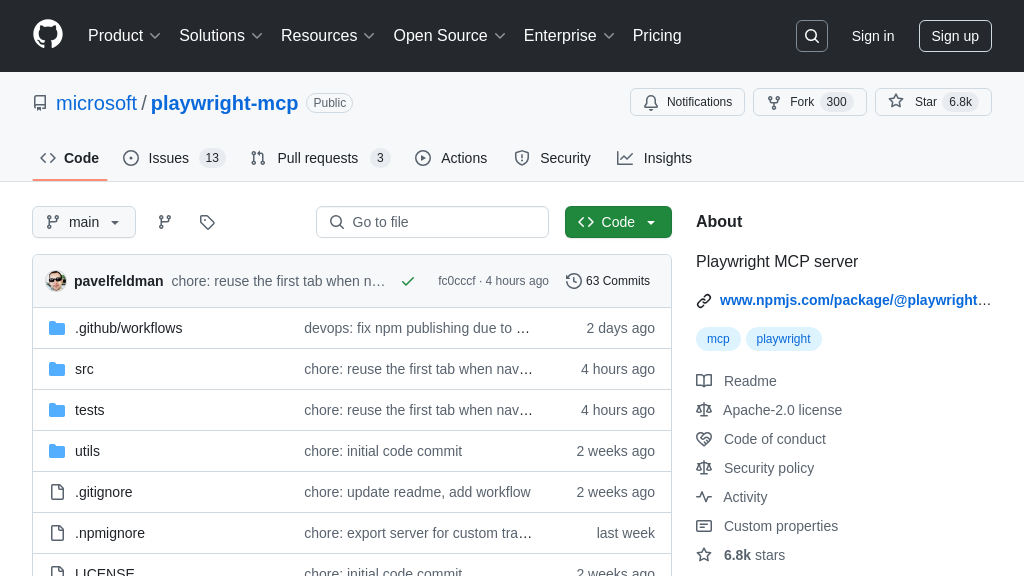

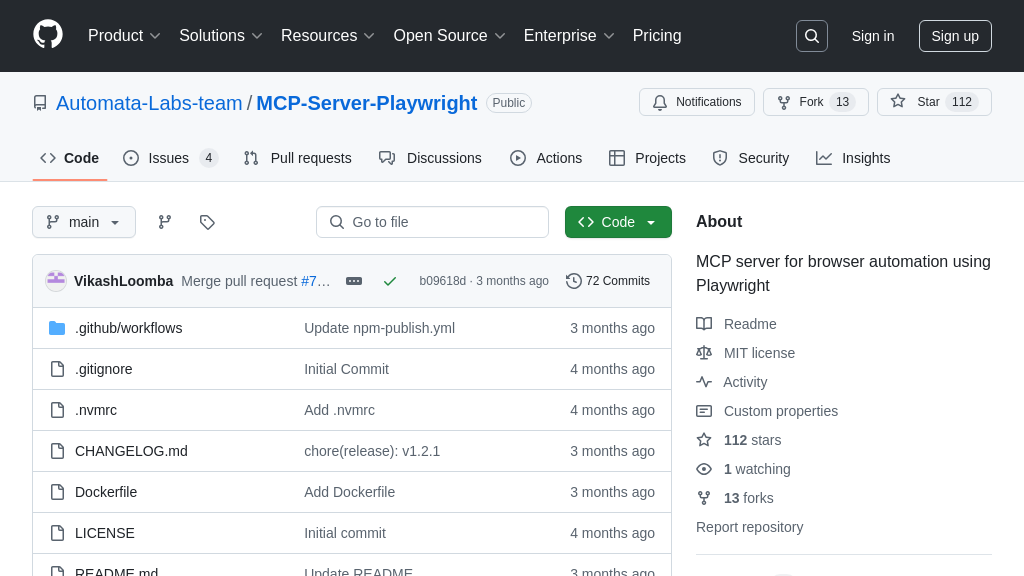

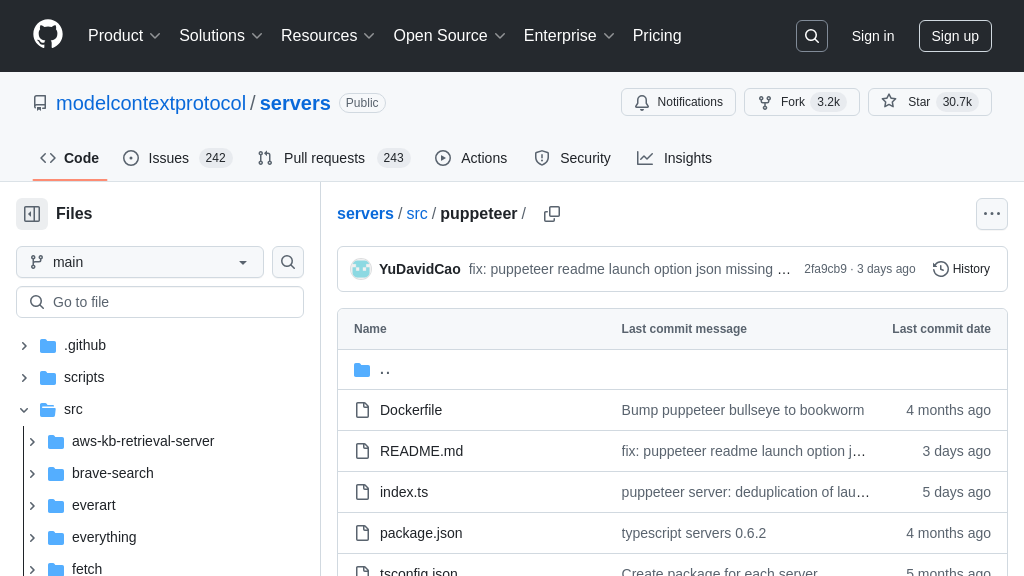

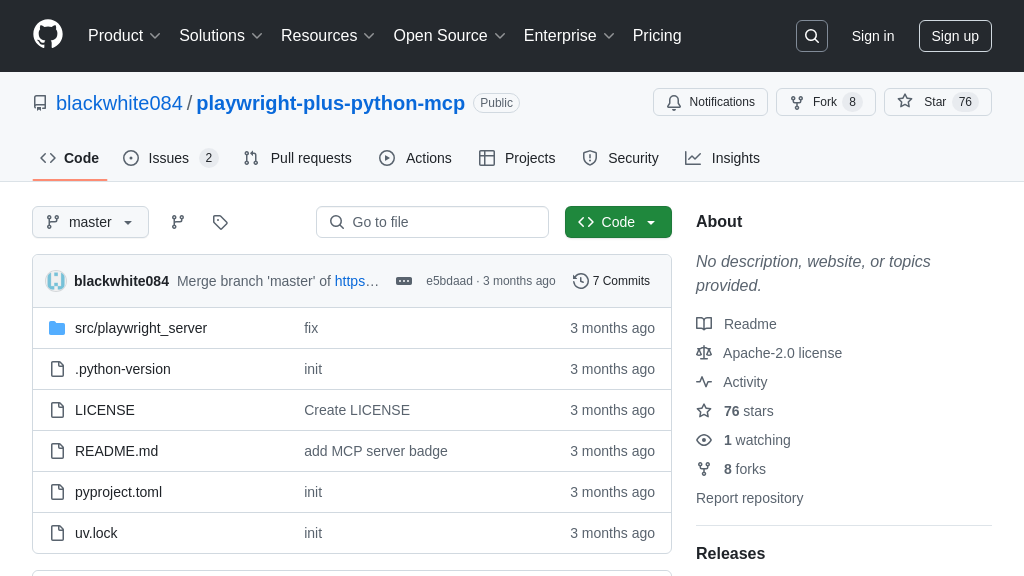

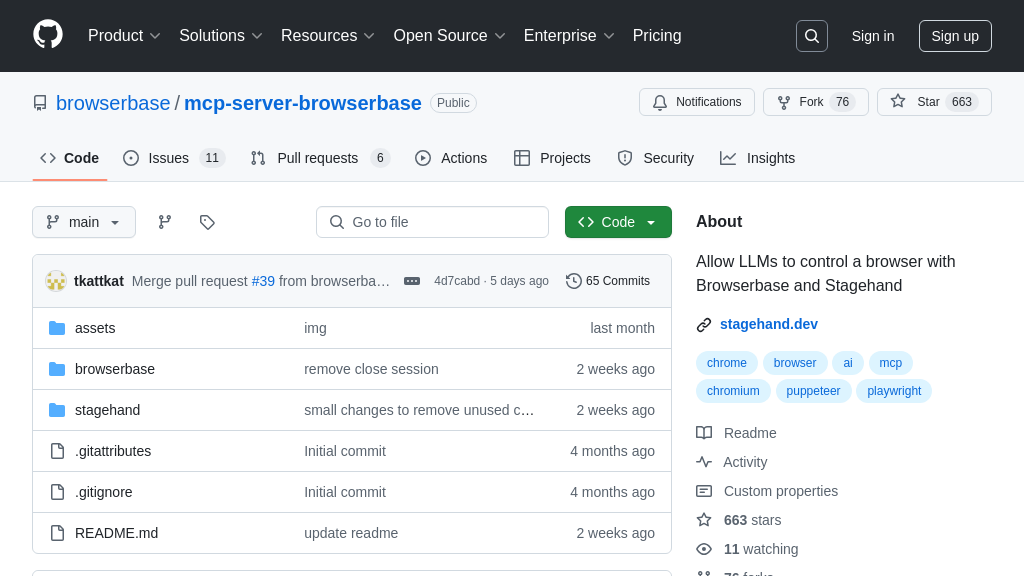

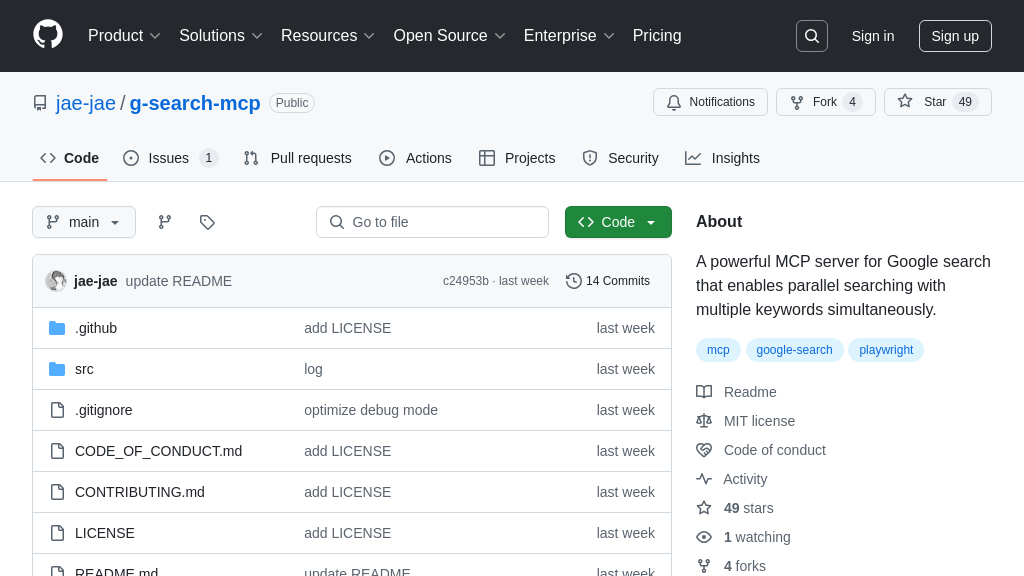

The server uses Playwright to drive a real browser and simulate real user browsing patterns, which reduces the risk of being flagged as automated traffic by the search engine. When a CAPTCHA does appear, the server detects it and switches to a visible browser window so the user can complete the verification. Results are returned as JSON, which makes them straightforward to pass to an AI model. Configurable parameters cover the number of results, the page-load timeout, and the locale. By providing structured access to Google Search, G-Search MCP lets AI models work with current information from the web when reasoning and making decisions. It integrates into the MCP ecosystem through standard command-line execution, which keeps deployment simple.
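MCP clients invoke a server's tools with a JSON-RPC 2.0 `tools/call` request. The sketch below builds such a request for a multi-keyword search, to show roughly what an AI client sends. The tool name `search` and the argument key `queries` are hypothetical placeholders, not names taken from g-search-mcp; `limit`, `timeout`, and `locale` mirror the parameters described above, and treating `timeout` as milliseconds is an assumption.

```python
import json


def build_tools_call(request_id: int, queries: list[str], *, limit: int,
                     timeout: int, locale: str) -> str:
    """Build a JSON-RPC 2.0 'tools/call' request as an MCP client might send it.

    The tool name "search" and the "queries" key are hypothetical; limit,
    timeout (assumed to be milliseconds) and locale mirror the documented options.
    """
    request = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {
            "name": "search",  # hypothetical tool name
            "arguments": {
                "queries": queries,  # hypothetical key for the keyword list
                "limit": limit,
                "timeout": timeout,
                "locale": locale,
            },
        },
    }
    return json.dumps(request)


if __name__ == "__main__":
    # Illustrative values only, not the server's defaults.
    print(build_tools_call(1, ["climate change causes", "climate change effects"],
                           limit=5, timeout=30000, locale="en-US"))
```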

g-search-mcp Key Capabilities

Parallel Keyword Searching

g-search-mcp lets an AI model run several Google searches at the same time instead of one after another. Rather than querying Google sequentially for each keyword, the server opens multiple tabs within a single browser instance and runs one search per tab. This is useful when a model needs broad coverage of a topic, because it can collect several perspectives and data points in one call. For example, a model researching climate change can search for "climate change causes," "climate change effects," and "climate change solutions" at once; the total wait is then governed roughly by the slowest of the three searches rather than by the sum of all three. The parallelism is implemented with Playwright, a Node.js library for automating Chromium, Firefox, and WebKit that provides robust browser control.
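The fan-out pattern can be sketched with `asyncio.gather`, where each coroutine stands in for one browser tab. This is a minimal illustration under stated assumptions, not g-search-mcp's code: `fake_tab_search` is a hypothetical stand-in that sleeps instead of driving a Playwright page, and the tracker only records how many "tabs" were active at once.

```python
import asyncio


async def fake_tab_search(query: str, delay: float, tracker: dict) -> dict:
    """Hypothetical stand-in for one browser tab; a real server would drive a page here."""
    tracker["active"] += 1
    tracker["peak"] = max(tracker["peak"], tracker["active"])
    try:
        await asyncio.sleep(delay)  # simulated page load and result extraction
        return {"query": query, "results": [f"result for {query}"]}
    finally:
        tracker["active"] -= 1


async def search_all(queries: list[str], delay: float = 0.05) -> tuple[list[dict], int]:
    """Run every query concurrently; return results in input order plus peak concurrency."""
    tracker = {"active": 0, "peak": 0}
    results = await asyncio.gather(*(fake_tab_search(q, delay, tracker) for q in queries))
    return list(results), tracker["peak"]


if __name__ == "__main__":
    queries = ["climate change causes", "climate change effects", "climate change solutions"]
    results, peak = asyncio.run(search_all(queries))
    for r in results:
        print(r["query"], "->", len(r["results"]), "result(s)")
    print("peak concurrent tabs:", peak)
```

`asyncio.gather` returns results in the order the queries were passed in, regardless of which tab finishes first, so the caller can match each result list to its keyword without extra bookkeeping.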

Structured JSON Output

The server returns search results as structured JSON, so an AI model can parse and use them directly. The model does not need to scrape pages or parse raw HTML itself, because the server handles the extraction. Each result includes the title, the URL, and a snippet of the page content. With this structure, a model can quickly pick out relevant results and feed them into its knowledge base or decision-making. For instance, a model that summarizes news coverage can take the titles and snippets of several articles about the same event from a single response and use them as the starting point for a comprehensive summary.
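A result shape along these lines can be sketched with dataclasses. The field names (`title`, `link`, `snippet`), the per-query grouping, and the top-level `searches` key are assumptions chosen for illustration; the server's actual JSON schema may differ. The second function shows the consumer side from the news-summary example: pulling titles and snippets out of one response.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class SearchResult:
    title: str
    link: str     # hypothetical field name for the result URL
    snippet: str


@dataclass
class QueryResults:
    query: str
    results: list[SearchResult]


def to_json(batch: list[QueryResults]) -> str:
    """Serialize results for all queries into one JSON document (hypothetical schema)."""
    return json.dumps({"searches": [asdict(q) for q in batch]}, ensure_ascii=False, indent=2)


def titles_and_snippets(payload: str) -> list[tuple[str, str]]:
    """Consumer side: collect (title, snippet) pairs across every query in a response."""
    data = json.loads(payload)
    return [(r["title"], r["snippet"]) for s in data["searches"] for r in s["results"]]


if __name__ == "__main__":
    # Placeholder data for illustration only.
    batch = [QueryResults("example query",
                          [SearchResult("Example title", "https://example.com/", "Example snippet")])]
    payload = to_json(batch)
    print(payload)
    print(titles_and_snippets(payload))
```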

Automatic CAPTCHA Handling

g-search-mcp detects CAPTCHA pages and, when one appears, switches to a visible browser window so that a user can complete the verification. CAPTCHAs are typically triggered when a search engine suspects automated traffic. The server reduces how often this happens by simulating real user behavior, and when it does happen, the visible-browser fallback lets the search continue once the user solves the challenge instead of failing outright. This step still requires a person: the server surfaces the CAPTCHA but does not solve it by itself. For AI models that depend on continuous access to search results, this means an occasional challenge pauses a search briefly rather than blocking it. In practice, the server watches the loaded page for CAPTCHA prompts and, when it finds one, changes the browser's visibility so the user can respond.
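The detect-then-fall-back flow can be sketched as a small control function. This is a hypothetical illustration, not g-search-mcp's detection logic: the markers (Google's `/sorry/` interstitial path and its "unusual traffic" message) are crude heuristics that can misfire, and `fetch` is an assumed callable that loads the page headless or visible and, in visible mode, waits until the user has finished verification.

```python
from typing import Callable

# Hypothetical heuristics; a real implementation would inspect the live page.
CAPTCHA_URL_MARKERS = ("/sorry/",)
CAPTCHA_TEXT_MARKERS = ("unusual traffic",)

# Assumed fetcher signature: takes headless: bool, returns (final_url, page_text).
Fetcher = Callable[[bool], tuple[str, str]]


def looks_like_captcha(url: str, page_text: str) -> bool:
    """Crude check for a CAPTCHA or 'unusual traffic' interstitial."""
    text = page_text.lower()
    return any(m in url for m in CAPTCHA_URL_MARKERS) or any(m in text for m in CAPTCHA_TEXT_MARKERS)


def fetch_with_fallback(fetch: Fetcher) -> tuple[str, str, bool]:
    """Try headless first; on a CAPTCHA, retry with a visible browser for manual solving.

    Returns (url, page_text, used_visible_browser).
    """
    url, text = fetch(True)  # headless attempt
    if not looks_like_captcha(url, text):
        return url, text, False
    # Visible attempt: the fetcher is expected to block until the user completes
    # the verification, then return the results page.
    url, text = fetch(False)
    if looks_like_captcha(url, text):
        raise RuntimeError("CAPTCHA still present after the manual verification attempt")
    return url, text, True
```

Keeping detection in a pure function separate from the browser code makes the heuristic easy to test and to swap out without touching the fallback flow.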

Configurable Search Parameters

The server exposes several parameters that let developers tune searches to the needs of their AI models: the number of search results to return per query (limit), the page-loading timeout (timeout), and the locale used for search results (locale). Adjusting these lets developers adapt the search to different use cases. For example, an AI model targeting a specific geographic region can set locale so that search results are tailored to that region. Similarly, raising timeout gives slow-loading pages more time before a search is abandoned, so slow connections do not cause searches to fail prematurely.
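One way to handle these options is to merge the caller's arguments over defaults and validate the result. The sketch below assumes the argument keys `limit`, `timeout`, and `locale` from the text above; the default values (10 results, 60 000 ms, `en-US`) and the millisecond unit are illustrative assumptions, not the server's documented defaults.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SearchOptions:
    """Per-request options; defaults are illustrative, not the server's documented values."""
    limit: int = 10           # results to return per query
    timeout_ms: int = 60_000  # page-load timeout (millisecond unit is an assumption)
    locale: str = "en-US"     # language/region tag for results

    def __post_init__(self) -> None:
        if self.limit < 1:
            raise ValueError("limit must be at least 1")
        if self.timeout_ms <= 0:
            raise ValueError("timeout_ms must be positive")
        if not self.locale:
            raise ValueError("locale must be a non-empty string")


def options_from_arguments(args: dict) -> SearchOptions:
    """Merge tool-call arguments over the defaults; missing keys keep their defaults."""
    base = SearchOptions()
    return SearchOptions(
        limit=int(args.get("limit", base.limit)),
        timeout_ms=int(args.get("timeout", base.timeout_ms)),
        locale=str(args.get("locale", base.locale)),
    )


if __name__ == "__main__":
    print(options_from_arguments({"limit": 5, "locale": "de-DE"}))
```

Validating in `__post_init__` means every construction path, including the merge helper, rejects out-of-range values before any browser work starts.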